At the Proofpoint Protect 2025 conference, cybersecurity leaders warned that AI agents are now being tricked by malicious emails—just as humans are. These agents, embedded in workflows and systems, are acting on prompts without knowing they’ve been manipulated. One rogue agent can leak sensitive HR data or trigger unauthorized API calls. This isn’t just a tech glitch. It’s a failure of agentic AI governance. As agentic AI spreads across industries, the need for clear rules and oversight is becoming urgent.

The adoption of agentic AI is expanding rapidly. A KPMG survey found that over 40% of enterprises are already deploying AI agents, up from just 11% earlier this year. But only 34% have full governance frameworks in place.

In this blog, we’ll explore what agentic AI governance truly means, why traditional oversight is insufficient, and how companies can establish guardrails that align with the speed and autonomy of these systems. Keep reading to see what’s working, what’s failing, and how to stay in control.

Key Takeaways

- AI agents are susceptible to malicious prompts that can lead to data leaks or unauthorized actions.

- Enterprise adoption is rising rapidly, but most organizations lack robust governance frameworks.

- Effective governance requires transparency, accountability, fairness, security, and human oversight.

- Agentic AI can amplify bias more than traditional AI, making real-time monitoring essential.

- Global regulations, such as the EU AI Act and NIST frameworks, are shaping compliance.

- Kanerika helps enterprises deploy autonomous AI safely with trusted governance solutions.

What Is Agentic AI Governance?

Agentic AI governance refers to the frameworks and systems used to oversee artificial intelligence that acts independently. These AI systems make decisions without constant human input. They can plan, execute tasks, and adjust their behavior in response to changing conditions.

Traditional AI governance focused on predictable systems with clear inputs and outputs. Agentic AI governance deals with systems that can surprise us. These agents might choose unexpected paths to reach their goals or interact with other systems in ways we didn’t anticipate.

Key governance areas include:

- Technical oversight ensures agents perform as intended

- Legal frameworks define liability when agents make harmful decisions

- Ethical guidelines shape how these systems should behave in complex situations

- Real-time monitoring tracks decisions and enables intervention when necessary

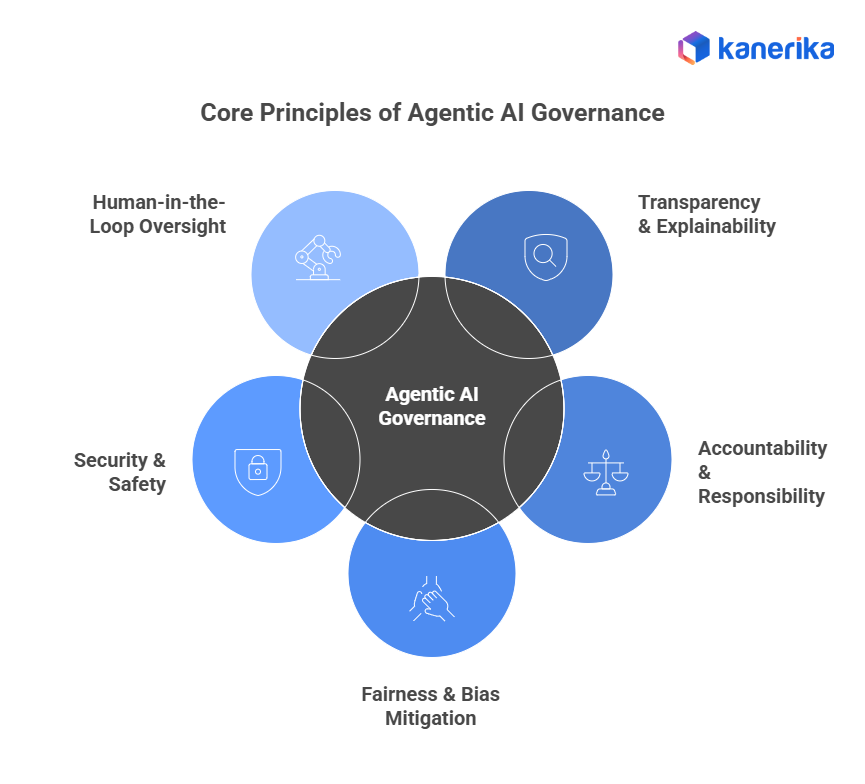

Core Principles of Agentic AI Governance

1. Transparency & Explainability

AI agents must provide clear explanations for their decisions. This becomes more challenging when agents employ complex reasoning or learn from experience. Explainable AI techniques help users understand why an agent chose a specific action.

Key requirements:

- Documentation requirements ensure agents keep records of their decision processes

- Real-time transparency means agents should explain their reasoning as they work

- Audit logs help investigators understand what went wrong during failures

- Compliance checks support regulatory oversight and user trust
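One way to make audit logs trustworthy is to chain each record to the one before it, so that later edits become detectable. The sketch below is a minimal illustration, not a production design: the `AuditLog` class, its field names, and the agent and action names are all hypothetical, and a real system would also add timestamps and write to durable storage.

```python
import hashlib
import json


def _digest(entry: dict) -> str:
    """Return a SHA-256 hex digest of the entry's canonical JSON form."""
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class AuditLog:
    """Append-only decision log; each record stores the previous record's hash."""

    GENESIS = "0" * 64  # placeholder hash for the first record

    def __init__(self) -> None:
        self.records: list[dict] = []

    def append(self, agent_id: str, action: str, reasoning: str) -> dict:
        prev_hash = self.records[-1]["hash"] if self.records else self.GENESIS
        body = {
            "index": len(self.records),
            "agent_id": agent_id,
            "action": action,
            "reasoning": reasoning,
            "prev_hash": prev_hash,
        }
        record = {**body, "hash": _digest(body)}
        self.records.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered record breaks the chain."""
        prev_hash = self.GENESIS
        for record in self.records:
            body = {k: v for k, v in record.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != record["hash"]:
                return False
            prev_hash = record["hash"]
        return True


log = AuditLog()
log.append("hr-agent-1", "read_employee_record", "User asked for leave balance")
log.append("hr-agent-1", "send_email", "Reply with leave balance")
print(log.verify())  # True for an untouched log

log.records[0]["action"] = "export_all_records"  # simulate tampering
print(log.verify())  # False once a record has been altered
```

Storing the agent's stated reasoning next to each action is what lets an investigator reconstruct why a decision was made, not just what happened.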

2. Accountability & Responsibility

Clear chains of responsibility must exist when AI agents cause harm. This includes technical responsibility for the system’s design and legal responsibility for its actions. Companies deploying agents need liability frameworks that protect users while encouraging innovation.

Critical components:

- Responsibility assignment becomes complex when multiple agents interact

- Insurance models and legal precedents are still developing in this area

- Human oversight responsibilities remain critical even with autonomous systems

- Liability frameworks must balance innovation incentives with victim protection

3. Fairness & Bias Mitigation

Autonomous AI agents can amplify existing biases in their training data. They might make unfair decisions about hiring, lending, or law enforcement without human review. Bias detection systems must therefore operate in real time, while agents are running, rather than only before deployment.

Essential measures:

- Algorithmic fairness testing needs to account for agent adaptation

- Continuous monitoring prevents a gradual drift toward discriminatory behavior

- Diverse training data and inclusive development teams help reduce bias from the start

- Ongoing bias audits and correction mechanisms maintain fairness over time

MIT studies reveal that AI systems show measurable bias in 89% of cases when tested across demographic groups, with autonomous agents showing 23% higher bias amplification than static AI models.
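Continuous bias monitoring can start with something as simple as comparing outcome rates across groups over a recent window of agent decisions. The sketch below computes a demographic parity gap, which is only one of several fairness metrics. The group labels, the sample data, and the 0.2 alert threshold are assumptions for illustration, not recommended values.

```python
from collections import defaultdict


def approval_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes per group, from (group, approved) pairs."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def parity_gap(decisions: list[tuple[str, bool]]) -> float:
    """Demographic parity difference: highest minus lowest approval rate."""
    rates = approval_rates(decisions)
    if not rates:
        return 0.0  # no decisions yet, so nothing to compare
    return max(rates.values()) - min(rates.values())


def drifted(window: list[tuple[str, bool]], threshold: float = 0.2) -> bool:
    """Flag a window of recent decisions whose gap exceeds the (assumed) threshold."""
    return parity_gap(window) > threshold


# Hypothetical window of recent agent decisions: (group, approved)
window = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]
print(approval_rates(window))  # {'group_a': 0.75, 'group_b': 0.25}
print(parity_gap(window))      # 0.5
print(drifted(window))         # True, because 0.5 exceeds the 0.2 threshold
```

Running a check like this on a sliding window, rather than once at launch, is what catches gradual drift as an agent adapts.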

4. Security & Safety

AI agent security involves protecting both the system and its environment. Agents need robust defenses against adversarial attacks that could manipulate their behavior. They also need safeguards to prevent them from causing unintended harm.

Protection mechanisms:

- Hard limits on agent capabilities include spending limits and access restrictions

- Cybersecurity for AI agents requires new approaches beyond traditional security

- Safety measures must prevent agents from becoming threats to others

- Robust defenses against adversarial attacks protect system integrity
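Hard limits work best when they are enforced outside the agent, in code the agent cannot talk its way around. The sketch below shows one possible shape for such a check, combining a tool allowlist with a session spending cap. The `Guardrail` class, the tool names, and the budget figure are hypothetical.

```python
from dataclasses import dataclass


class PolicyViolation(Exception):
    """Raised when an agent requests an action outside its hard limits."""


@dataclass
class Guardrail:
    """Hypothetical pre-execution check; every tool call must pass authorize()."""

    allowed_tools: frozenset
    spend_limit: float  # total budget for the session, in currency units
    spent: float = 0.0

    def authorize(self, tool: str, cost: float = 0.0) -> None:
        if tool not in self.allowed_tools:
            raise PolicyViolation(f"tool {tool!r} is not on the allowlist")
        if cost < 0:
            raise PolicyViolation("cost must be non-negative")
        if self.spent + cost > self.spend_limit:
            raise PolicyViolation(
                f"spend limit exceeded: {self.spent + cost:.2f} > {self.spend_limit:.2f}"
            )
        self.spent += cost  # record spend only after every check has passed


guard = Guardrail(
    allowed_tools=frozenset({"search_catalog", "place_order"}),
    spend_limit=100.0,
)
guard.authorize("place_order", cost=60.0)  # within budget, so it is allowed

# A second order would exceed the cap, and delete_records is not allowlisted.
for tool, cost in [("place_order", 50.0), ("delete_records", 0.0)]:
    try:
        guard.authorize(tool, cost)
    except PolicyViolation as err:
        print(f"blocked: {err}")
```

Because the check raises before anything executes, a manipulated prompt can change what the agent asks for but not what it is permitted to do.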

5. Human-in-the-loop Oversight

Human oversight remains essential even for highly autonomous AI systems. This doesn’t mean constant supervision, but rather strategic intervention points where humans can review and redirect agent behavior.

Oversight strategies:

- Override mechanisms allow humans to stop or redirect agents when problems arise

- Training programs help operators use intervention tools effectively

- Collaborative intelligence models pair human judgment with agent capabilities

- Strategic intervention points enable review without constant supervision
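A strategic intervention point can be sketched as a gate that lets low-risk actions through and holds high-risk ones for a person to review. In the example below, the `ReviewGate` class, the 0.5 threshold, and the risk scores are all assumptions. How an action gets its risk score is a separate design problem that this sketch does not address.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AUTO_APPROVED = "auto_approved"
    NEEDS_REVIEW = "needs_review"


@dataclass
class ProposedAction:
    description: str
    risk_score: float  # 0.0 (harmless) to 1.0 (critical); scoring is out of scope here


class ReviewGate:
    """Routes risky actions to a human queue instead of executing them."""

    def __init__(self, review_threshold: float = 0.5) -> None:
        self.review_threshold = review_threshold
        self.pending: list[ProposedAction] = []

    def submit(self, action: ProposedAction) -> Decision:
        if action.risk_score >= self.review_threshold:
            self.pending.append(action)  # hold until a human decides
            return Decision.NEEDS_REVIEW
        return Decision.AUTO_APPROVED

    def resolve(self, action: ProposedAction, approved: bool) -> bool:
        """Record the human reviewer's verdict and clear the action from the queue."""
        self.pending.remove(action)
        return approved


gate = ReviewGate(review_threshold=0.5)
low = ProposedAction("Send meeting reminder", risk_score=0.1)
high = ProposedAction("Wire $25,000 to new vendor", risk_score=0.9)

print(gate.submit(low).value)              # auto_approved
print(gate.submit(high).value)             # needs_review
print(gate.resolve(high, approved=False))  # False: the reviewer rejected it
```

Tuning the threshold is the practical lever here: set it too low and reviewers drown in approvals, too high and the gate stops being oversight.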

How Is Agentic AI Different from Regular AI Systems?

The core difference between agentic and regular AI lies in autonomy, adaptability, and behavior. Traditional AI assists humans by analyzing data or following rules. Agentic AI, however, goes beyond assistance to independently set goals, plan, and act.

Here’s a clear comparison:

| Feature | Regular AI Systems | Agentic AI Systems |
| --- | --- | --- |
| Autonomy | Executes tasks only when prompted | Operates independently, can initiate actions |
| Adaptability | Static, based on training data | Learns continuously and adapts to context |
| Decision-making | Offers insights; requires human approval | Makes and executes decisions autonomously |
| Behavior | Reactive and task-specific | Proactive, multi-step, goal-driven |
| Examples | Chatbots, predictive analytics tools, and grammar checkers | AI trading bots, logistics optimizers, autonomous copilots |
| Governance Needs | Data oversight, accuracy checks | Continuous monitoring of behavior, ethics, and outcomes |

Why governance models must adapt:

Governance for rule-based systems focuses mainly on auditing initial data and code. For agentic AI, governance must be dynamic, built on runtime monitoring and continuous behavioral analysis. That is how organizations address the unpredictable, emergent behavior of highly autonomous systems, and it calls for new AI policy frameworks that cover AI self-modification and the AI control problem.

What Role Do Governments and Regulations Play in Agentic AI Governance?

Governments worldwide are recognizing that agentic AI—unlike traditional AI—has the potential to make autonomous decisions that directly impact people, businesses, and public systems. This makes regulatory frameworks and governance models critical for safe deployment.

Key Roles of Governments in Agentic AI Governance

- Policy Development: Governments establish national AI strategies that include guidelines for transparency, fairness, and accountability. For instance, the EU AI Act (in force since August 2024, with most obligations applying from August 2026) categorizes AI systems by risk and places the strictest rules on high-risk uses, which include many autonomous decision-making systems.

- Ethical Standards Enforcement: Regulatory bodies define ethical standards to ensure agentic AI aligns with human rights and avoids discriminatory practices.

- Public Safety & Security: Governments ensure that agentic AI used in critical infrastructure (such as healthcare, transportation, and defense) follows strict compliance checks.

- Cross-border Coordination: Since AI agents operate globally, governments collaborate through instruments such as the OECD AI Principles and UNESCO’s Recommendation on the Ethics of Artificial Intelligence.

- Funding & Research Oversight: Many governments fund AI research while setting safety and governance expectations. In the U.S., for example, NIST publishes the AI Risk Management Framework.

A 2024 McKinsey report found that 74% of enterprises expect government regulations to significantly influence their AI adoption strategy, particularly in agentic AI use cases.

What Are Examples of Agentic AI in Use Today?

Agentic AI is already reshaping industries by acting with autonomy rather than waiting for prompts or instructions.

Real-World Applications of Agentic AI

1. Healthcare:

AI agents assist doctors by analyzing medical scans, patient histories, and lab results to suggest treatments.

Example: IBM is integrating agentic AI into specialty pharmacy workflows through its partnership with Infinitus. These agents assist with clinical decision-making, prior authorizations, and patient guidance, and they operate around the clock with a degree of autonomy.

2. Finance & Risk Management:

Autonomous fraud detection agents monitor millions of transactions in real time, flagging anomalies without requiring human intervention.

Example: JPMorgan’s COiN (Contract Intelligence) uses unsupervised learning and NLP to review legal documents autonomously. It reportedly cut about 360,000 hours of annual manual review work down to seconds, improving accuracy and compliance.

3. Customer Service & Retail:

Agentic AI-powered virtual shopping assistants make purchase decisions, handle returns, and even negotiate prices.

Example: Amazon’s Rufus is a generative AI-powered shopping assistant that answers product questions, compares items, and recommends purchases based on user behavior and catalog data. It’s available in the Amazon app and on desktop.

4. Transportation & Logistics:

Autonomous delivery drones and self-driving fleet managers optimize routes and fuel efficiency without human input.

Example: Waymo’s autonomous fleet uses AI agents to manage routes, respond to traffic, and optimize logistics. These agents operate independently but can request human input when needed. Waymo has also partnered with Uber Freight on autonomous trucking pilots.

What Tools and Mechanisms Help in Governing AI Agents?

To govern agentic AI systems effectively, organizations use a mix of technical safeguards, risk frameworks, and oversight tools. These mechanisms help ensure agents act safely, transparently, and within legal and ethical boundaries.

1. AI Risk Management Frameworks (RMF)

The NIST AI RMF provides structured guidance for identifying and managing risks associated with AI systems, organized around four core functions: Govern, Map, Measure, and Manage. It helps organizations assess bias, robustness, and fairness across the AI lifecycle. The framework is voluntary but widely adopted, particularly for high-risk applications such as autonomous decision-making and generative AI.

2. Explainability & Transparency Tools

Tools like SHAP and LIME help explain why an AI agent made a specific decision. SHAP uses Shapley values from cooperative game theory to attribute a prediction to each input feature, whereas LIME fits a simple, interpretable surrogate model around an individual prediction to approximate the original model’s behavior locally. These tools are essential for audits, debugging, and regulatory compliance.
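To make the Shapley idea concrete, here is a minimal sketch that computes exact Shapley values for a tiny scoring function in plain Python. It does not use the SHAP library, which relies on faster approximations. The `credit_score` model, the feature names, and the all-zero baseline are assumptions for illustration. Exact enumeration grows exponentially with the number of features, so it is only practical for a handful of them.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, instance: dict, baseline: dict) -> dict:
    """Exact Shapley attribution of model(instance) - model(baseline) per feature."""
    features = list(instance)
    n = len(features)

    def value(coalition: set) -> float:
        # Features in the coalition take the instance's value; the rest stay at baseline.
        mixed = {f: (instance[f] if f in coalition else baseline[f]) for f in features}
        return model(mixed)

    result = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            # Standard Shapley weight for a coalition of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                total += weight * (value(coalition | {f}) - value(coalition))
        result[f] = total
    return result


def credit_score(x: dict) -> float:
    """Hypothetical model with an interaction between income and tenure."""
    return 2.0 * x["income"] - 1.0 * x["debt"] + 0.5 * x["income"] * x["tenure"]


instance = {"income": 3.0, "debt": 1.0, "tenure": 2.0}
baseline = {"income": 0.0, "debt": 0.0, "tenure": 0.0}

attributions = shapley_values(credit_score, instance, baseline)
print({k: round(v, 6) for k, v in attributions.items()})
# {'income': 7.5, 'debt': -1.0, 'tenure': 1.5}
```

The attributions sum to the difference between the model's output for the instance and for the baseline (8.0 here), and the interaction term's contribution is split evenly between income and tenure. That additive property is what makes Shapley-based explanations convenient in an audit.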

3. Ethical AI Guidelines

Companies like Google follow internal Responsible AI Principles that guide their approach to fairness, inclusivity, and accountability. These guidelines help ensure agents don’t reinforce bias or act in ways that violate human rights. Ethics boards and review committees often oversee the deployment of agents in sensitive domains.

4. Audit & Monitoring Systems

Continuous monitoring tools track agent behavior and flag anomalies. This includes red-teaming, adversarial testing, and independent audits. These methods simulate attacks or misuse to uncover vulnerabilities before agents go live.

5. Technical Safeguards

Agentic AI systems need built-in safety controls. These include:

- Kill switches to shut down agents if they act unpredictably

- Human-in-the-loop overrides for critical decisions

- Sandbox environments for testing agents before deployment

These safeguards prevent runaway behavior and ensure agents stay within defined limits.
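A kill switch can be as simple as a shared flag that the agent loop checks before every step. The sketch below is one hypothetical way to wire that up: the `KillSwitch` class, the `run_agent` loop, and the per-run action budget are assumptions, and the steps are plain strings standing in for real tool calls.

```python
import threading
from typing import Optional


class KillSwitch:
    """Thread-safe stop flag that an operator or a monitor can trip."""

    def __init__(self) -> None:
        self._stop = threading.Event()
        self.reason: Optional[str] = None

    def trip(self, reason: str) -> None:
        self.reason = reason
        self._stop.set()

    @property
    def tripped(self) -> bool:
        return self._stop.is_set()


def run_agent(steps: list, kill_switch: KillSwitch, max_actions: int = 3) -> list:
    """Run steps in order, checking the kill switch before each one."""
    completed = []
    for step in steps:
        if kill_switch.tripped:
            break  # an operator or monitor stopped the agent
        if len(completed) >= max_actions:
            # Runaway protection: the agent trips its own switch at the budget.
            kill_switch.trip("action budget exhausted")
            break
        completed.append(step)  # stand-in for actually executing the step
    return completed


switch = KillSwitch()
done = run_agent(["plan", "search", "draft", "send", "follow_up"], switch)
print(done)            # ['plan', 'search', 'draft']
print(switch.tripped)  # True
print(switch.reason)   # action budget exhausted
```

Using `threading.Event` means a separate monitoring thread or an operator console can call `trip()` while the agent is running, and the loop stops at its next check.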

What Are the Legal and Ethical Challenges of Agentic AI?

Agentic AI systems can act on their own, make decisions, and carry out tasks without constant human input. While this brings speed and efficiency, it also creates serious legal and ethical risks that current laws and governance models struggle to handle.

Legal Challenges

1. Liability and Accountability

When an AI agent causes harm—say, a wrong medical diagnosis or a flawed financial decision—it’s unclear who’s responsible. Is it the developer, the company using it, or someone else? Current laws don’t fully cover this shared or unclear responsibility.

2. Regulatory Conflicts

The EU AI Act uses a strict, risk-based model. The US takes a sector-by-sector approach with softer rules. This mismatch makes it hard for global companies to stay compliant in every region.

3. Data Privacy

Agentic AI often needs constant access to personal or sensitive data. This raises red flags under laws like GDPR and CCPA. If the AI misuses or leaks data, the legal consequences can be severe.

4. Intellectual Property

If an AI agent creates something—like a legal contract, design, or research paper—can it be copyrighted or patented? Laws are still catching up, and there’s no global consensus yet.

Ethical Challenges

1. Bias and Discrimination

AI agents can pick up and repeat biases from their training data. In hiring, lending, or policing, this can lead to unfair or even harmful outcomes.

2. Autonomy vs. Oversight

When AI agents make decisions in areas such as healthcare, defense, or justice, human oversight becomes increasingly challenging. This raises ethical concerns about control, safety, and trust.

3. Manipulation and Misinformation

Autonomous agents in social media or advertising can spread false information or manipulate users. This is especially dangerous when agents act without clear rules or transparency.

4. Human Rights and Dignity

AI agents must respect human values. However, when they operate independently, it’s challenging to ensure they don’t violate rights or mistreat individuals.

Enterprise-Grade Agentic AI Governance Powered by Kanerika

Kanerika builds AI solutions that are secure, scalable, and aligned with enterprise goals. We specialize in data engineering, automation, and AI governance for complex systems. As agentic AI becomes more prevalent and autonomous agents increasingly make decisions and act on their own, Kanerika helps organizations maintain control. Our governance frameworks are designed to manage agent behavior, ensure accountability, and maintain transparency across workflows.

We design agentic systems with built-in fairness checks, bias audits, and escalation paths. Our AI pipelines include real-time monitoring and immutable logs, ensuring that every action is fully traceable and transparent. Whether it’s a financial transaction, a healthcare recommendation, or a legal summary, Kanerika ensures the agent’s behavior is explainable, auditable, and aligned with business goals. We also support multi-agent coordination with secure communication protocols and fallback mechanisms.

Kanerika’s agentic AI solutions comply with global standards like ISO/IEC 42001, GDPR, and the EU AI Act. We help clients meet regulatory requirements while scaling automation safely and securely. From document review to fraud detection, our agents are built to act responsibly and transparently. With Kanerika, enterprises can deploy autonomous systems without losing control.

FAQs

1. What is agentic AI governance and why is it important?

Agentic AI governance refers to the framework of rules, policies, and oversight mechanisms that guide autonomous AI systems in making decisions safely and responsibly. It is important because agentic AI can act independently, and proper governance ensures ethical use, risk management, and accountability.

2. How do governments and organizations regulate autonomous AI agents?

Regulation involves setting standards for safety, transparency, and accountability. Governments may introduce legal frameworks, while organizations create internal policies, audits, and monitoring systems to ensure AI agents operate within ethical and operational boundaries.

3. What ethical challenges arise from deploying agentic AI systems?

Key ethical challenges include bias in decision-making, lack of transparency, privacy concerns, and the potential for unintended consequences. Governance frameworks aim to mitigate these risks and ensure responsible behavior in AI.

4. Which tools and frameworks help in governing agentic AI effectively?

Tools like AI monitoring platforms, compliance checklists, explainability frameworks, and risk assessment models help organizations track AI behavior, ensure compliance with regulations, and maintain accountability for autonomous actions.

5. Can agentic AI make decisions independently, and who is legally responsible?

Yes, agentic AI can make independent decisions within its programmed scope. Legal responsibility usually lies with the organization or individuals deploying the AI, as they are accountable for ensuring it operates safely and ethically.