Consider a retail giant processing millions of daily transactions across physical stores, e-commerce platforms, and POS systems. Without a structured pipeline, this data stays isolated in separate systems, hiding key insights for inventory management and customer experience. This is where an efficient ETL (Extract, Transform, Load) framework plays a pivotal role: it integrates data from diverse sources, transforms it, and loads it into centralized systems for analysis.

The ETL tool market is expected to surpass $16 billion by 2025, underscoring the critical need for streamlined data integration to stay competitive. An ETL framework is essential for managing, cleaning, and processing data to deliver actionable insights and support smarter, data-driven decisions.

This blog will explore the core components of an ETL framework, its growing importance in modern data management, and best practices for implementation, helping businesses optimize data pipelines and drive digital transformation.

What Is an ETL Framework?

An ETL (Extract, Transform, Load) framework is a technology infrastructure that enables organizations to move data from multiple sources into a central repository through a defined process. It serves as the backbone for data integration, allowing businesses to convert raw, disparate data into standardized, analysis-ready information that supports decision-making.

Purpose and Value of an ETL Framework

ETL frameworks deliver significant organizational value by:

- Ensuring data quality through systematic validation and cleaning

- Streamlining integration of multiple data sources into a unified view

- Enabling historical analysis by maintaining consistent data over time

- Improving data accessibility for business users through standardized formats

- Enhancing operational efficiency by automating repetitive data processing tasks

- Supporting regulatory compliance through documented data lineage

As data volumes grow, robust ETL frameworks become increasingly essential for deriving meaningful insights from complex information ecosystems.

Why Do Businesses Need an ETL Framework?

In today’s data-driven environment, an Extract, Transform, Load (ETL) framework plays a vital role in enabling organizations to manage, consolidate, and analyze data efficiently. Below are key reasons why businesses rely on ETL frameworks:

1. Data Integration

- ETL frameworks enable seamless extraction of data from diverse systems such as legacy databases, cloud applications, CRMs, and IoT platforms. Moreover, they consolidate this data into a centralized repository, ensuring consistency across departments.

- By integrating data across marketing, sales, finance, and operations, organizations gain a holistic view of their business. This integration breaks down data silos and helps uncover insights that would otherwise be hidden in disconnected systems.

2. Data Quality

- Raw data often contains errors, duplicates, or inconsistencies that reduce its reliability. ETL frameworks apply cleansing and validation rules to correct inaccuracies and standardize formats.

- These processes enforce business logic and ensure that the data used for analysis is accurate and dependable. Consistent transformation rules across datasets promote a “single version of truth,” minimizing the risk of conflicting insights.

3. Scalability

- ETL frameworks are built to accommodate increasing data volumes and growing complexity as businesses scale. They offer modular and distributed processing capabilities that support expansion without requiring complete system overhauls.

- New data sources and processing logic can be added without disrupting existing pipelines. This scalability ensures long-term adaptability of the data infrastructure as business needs evolve.

4. Real-Time Data Processing

- Modern ETL frameworks support real-time or near real-time data integration in addition to traditional batch processing. This gives businesses up-to-date information for time-sensitive decisions, such as tracking transactions or monitoring user activity.

- Real-time processing delivers continuous visibility into key metrics, improving responsiveness and agility. In fast-moving industries, this capability provides a significant competitive edge by enabling quicker, data-driven actions.


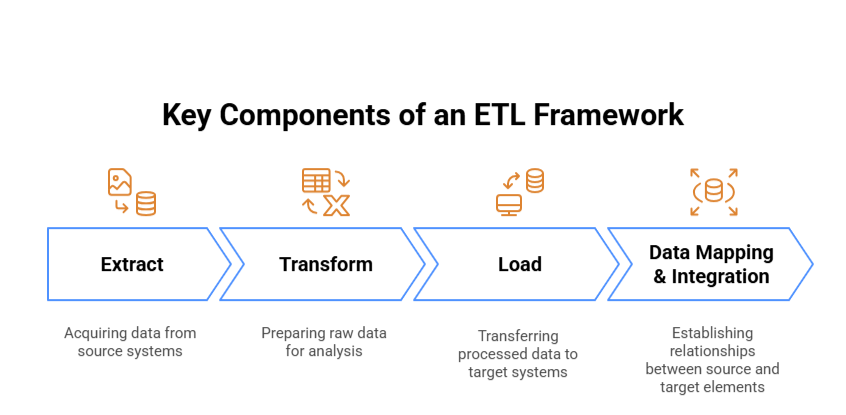

Key Components of an ETL Framework

Extract

The extraction phase acquires data from source systems like databases, APIs, and flat files. Organizations typically pull from multiple sources, using either full or incremental extraction. Common challenges include connecting to legacy systems, managing diverse data formats, and handling API rate limits. These can be addressed with extraction schedulers, custom connectors, and retry mechanisms.
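Incremental extraction usually relies on a "high-water mark": each run remembers the latest change timestamp it saw and asks the source only for rows changed after it. The sketch below is a minimal illustration, assuming a hypothetical `orders` table whose `updated_at` column (ISO 8601 text) is set on every insert and update; Python's built-in `sqlite3` stands in for the real source database.

```python
import sqlite3

def extract_incremental(conn, last_watermark):
    """Pull only rows changed after the previous run's high-water mark."""
    rows = conn.execute(
        "SELECT id, amount, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # New watermark = latest timestamp seen; keep the old one if nothing changed.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark

# Demo source table (hypothetical schema: orders(id, amount, updated_at)).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 19.99, "2024-01-01T10:00"), (2, 5.00, "2024-01-02T09:30"), (3, 42.50, "2024-01-03T14:15")],
)

rows, watermark = extract_incremental(conn, "2024-01-01T23:59")
print(rows)       # only orders 2 and 3
print(watermark)  # 2024-01-03T14:15
```

The strict `>` comparison is a simplification: a row committed later with the same timestamp as the stored watermark would be skipped, which is one reason production systems often prefer log-based change data capture.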

Transform

Transformation prepares raw data for analysis through cleansing, standardization, and enrichment. Key operations include:

- Removing duplicates and correcting inconsistencies

- Enriching data with additional information

- Standardizing formats across data points

Common tasks involve aggregating transactions, filtering irrelevant records, validating against business rules, and converting data types to match target requirements.
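The transformation steps listed above can be sketched in a few lines of plain Python. This is an illustrative example only: the field names, the `DD/MM/YYYY` source date format, and the "amount must be positive" business rule are all assumptions made for the demo.

```python
from datetime import datetime

def transform(records):
    """Dedupe, standardize, validate, and type-convert raw order records."""
    seen = set()
    clean, rejected = [], []
    for rec in records:
        order_id = rec["order_id"].strip()
        # Remove duplicates: keep the first occurrence of each order_id.
        if order_id in seen:
            continue
        seen.add(order_id)
        try:
            amount = float(rec["amount"])  # convert text to a numeric type
            # Standardize the assumed DD/MM/YYYY source format to ISO 8601.
            order_date = datetime.strptime(rec["date"], "%d/%m/%Y").date().isoformat()
        except (ValueError, KeyError):
            rejected.append(rec)  # unparseable or incomplete record
            continue
        # Hypothetical business rule: order amounts must be positive.
        if amount <= 0:
            rejected.append(rec)
            continue
        clean.append({
            "order_id": order_id,
            "amount": round(amount, 2),
            "order_date": order_date,
            "country": rec.get("country", "").strip().upper() or "UNKNOWN",
        })
    return clean, rejected

raw = [
    {"order_id": "A1", "amount": "19.990", "date": "03/01/2024", "country": " us "},
    {"order_id": "A1", "amount": "19.99", "date": "03/01/2024", "country": "US"},  # duplicate
    {"order_id": "A2", "amount": "-5", "date": "04/01/2024", "country": "de"},     # fails rule
    {"order_id": "A3", "amount": "abc", "date": "05/01/2024"},                     # bad type
]
clean, rejected = transform(raw)
print(clean)          # one clean record for A1
print(len(rejected))  # 2
```

Routing rejected rows to a separate list (or a quarantine table) rather than silently dropping them keeps the failures visible for later review.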

Load

The loading phase involves transferring the processed data into target systems for storage and analysis. Loading strategies vary based on business requirements and destination systems:

- Batch loading processes data in scheduled intervals, suitable for reporting that doesn’t require real-time updates

- Real-time loading continuously streams transformed data to destinations, essential for operational analytics

- Incremental loading focuses on adding only new or changed records to optimize system resources

The approach must consider factors like system constraints, data volumes, and business SLAs to determine appropriate loading frequency and methods.
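Incremental loading is commonly implemented as an "upsert": insert rows that are new and update rows whose key already exists. A minimal sketch using SQLite's `INSERT ... ON CONFLICT DO UPDATE` (available in SQLite 3.24 and later) follows; the `dim_customer` table is a hypothetical target.

```python
import sqlite3

def load_upsert(conn, rows):
    """Insert new rows and update existing ones, keyed on customer_id."""
    conn.executemany(
        """
        INSERT INTO dim_customer (customer_id, name, city)
        VALUES (:customer_id, :name, :city)
        ON CONFLICT(customer_id) DO UPDATE SET
            name = excluded.name,
            city = excluded.city
        """,
        rows,
    )
    conn.commit()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE dim_customer (customer_id INTEGER PRIMARY KEY, name TEXT, city TEXT)")
load_upsert(conn, [{"customer_id": 1, "name": "Ana", "city": "Lisbon"}])

# Second batch: customer 1 moved, customer 2 is new.
load_upsert(conn, [
    {"customer_id": 1, "name": "Ana", "city": "Porto"},
    {"customer_id": 2, "name": "Ben", "city": "Leeds"},
])
print(conn.execute("SELECT * FROM dim_customer ORDER BY customer_id").fetchall())
# [(1, 'Ana', 'Porto'), (2, 'Ben', 'Leeds')]
```

Because re-running the same batch produces the same end state, an upsert-based load is also safe to retry after a partial failure.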

Data Mapping & Integration

Data mapping establishes relationships between source and target elements, serving as the ETL blueprint. Proper mapping documentation includes field transformations, business rules, and data lineage. Integration mechanisms ensure referential integrity while supporting business processes, often through metadata repositories that track origins, transformations, and quality metrics.
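A mapping document can also be expressed directly as configuration that the pipeline executes, so the documentation and the behavior cannot drift apart. The sketch below assumes a hypothetical `FIELD_MAP` of target field to (source field, transformation) and records simple field-level lineage alongside each output row.

```python
# Hypothetical mapping spec: target field -> (source field, transformation).
FIELD_MAP = {
    "customer_id": ("cust_no", int),
    "full_name": ("name", str.strip),
    "email": ("email_addr", str.lower),
    "signup_date": ("created", lambda s: s[:10]),  # keep YYYY-MM-DD from an ISO timestamp
}

def apply_mapping(source_row, field_map=FIELD_MAP):
    """Build a target row and record which source field fed each target field."""
    target, lineage = {}, {}
    for target_field, (source_field, fn) in field_map.items():
        target[target_field] = fn(source_row[source_field])
        lineage[target_field] = source_field
    return target, lineage

row = {
    "cust_no": "0042",
    "name": "  Dana Lee ",
    "email_addr": "Dana@Example.COM",
    "created": "2024-02-11T08:00:00Z",
}
target, lineage = apply_mapping(row)
print(target)
# {'customer_id': 42, 'full_name': 'Dana Lee', 'email': 'dana@example.com', 'signup_date': '2024-02-11'}
```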

Effective ETL frameworks balance these components while considering scalability, performance, and maintenance requirements. Modern solutions increasingly incorporate automation and self-service capabilities to reduce technical overhead while maintaining data quality and governance standards.

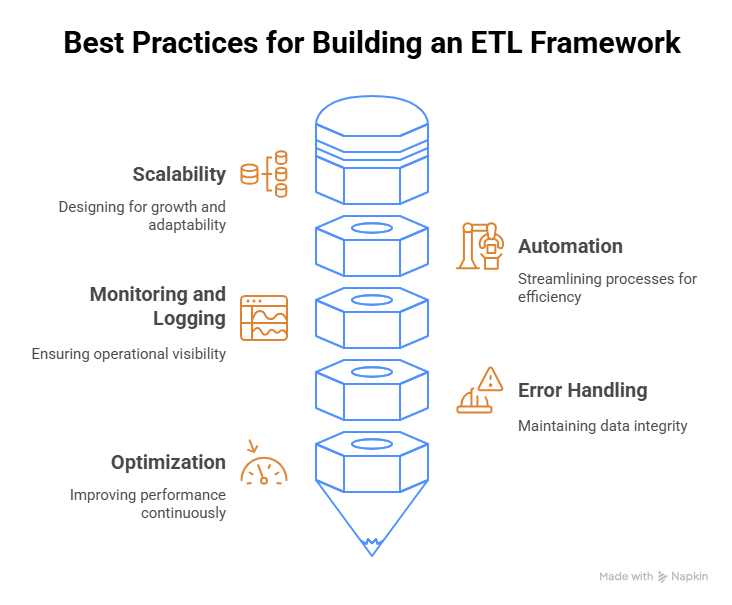

Best Practices for Building an ETL Framework

1. Scalability

Design your ETL framework with scalability as a foundational principle. Implement modular components that can be independently scaled based on workload demands. Use distributed processing technologies for large datasets and consider cloud-based solutions that offer elastic resources. Design data partitioning strategies to enable horizontal scaling as volumes grow.
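Hash partitioning is one common way to split work so that partitions can be processed independently and added horizontally. The sketch below is illustrative only: a `ThreadPoolExecutor` stands in for distributed workers (in practice partitions would go to separate processes or cluster nodes, for example in Spark), and the store/amount records are made up.

```python
import zlib
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

def partition_key(key: str, num_partitions: int) -> int:
    # crc32 is stable across processes, unlike Python's built-in hash() for str.
    return zlib.crc32(key.encode("utf-8")) % num_partitions

def partition(records, key_field, num_partitions):
    """Group records so that all rows with the same key land in one partition."""
    parts = defaultdict(list)
    for rec in records:
        parts[partition_key(rec[key_field], num_partitions)].append(rec)
    return parts

def process_partition(records):
    # Placeholder per-partition work: total sales per store.
    totals = defaultdict(float)
    for rec in records:
        totals[rec["store"]] += rec["amount"]
    return dict(totals)

records = [{"store": f"S{i % 5}", "amount": 10.0} for i in range(100)]
parts = partition(records, "store", num_partitions=4)
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(process_partition, parts.values()))

merged = {}
for r in results:
    merged.update(r)  # safe: each store lives in exactly one partition
print(sorted(merged.items()))
```

Partitioning on the aggregation key is what makes the final merge trivial: no store's totals are split across workers.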

2. Automation

Automate repetitive tasks throughout the ETL pipeline to enhance efficiency and reliability. Implement workflow orchestration tools to manage dependencies between tasks. Use configuration-driven approaches rather than hard-coding parameters. Automate validation checks at each stage to verify data quality without manual intervention.
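Orchestration tools such as Apache Airflow resolve task dependencies into an execution order. The sketch below shows the core idea with Python's standard `graphlib.TopologicalSorter` (Python 3.9+) and a configuration-driven task graph; it is not Airflow's API, and the task names in `PIPELINE_CONFIG` are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline config: task name -> upstream dependencies.
PIPELINE_CONFIG = {
    "extract_orders": [],
    "extract_customers": [],
    "transform_orders": ["extract_orders"],
    "join_customers": ["transform_orders", "extract_customers"],
    "load_warehouse": ["join_customers"],
    "validate_load": ["load_warehouse"],
}

def run_pipeline(config, tasks):
    """Run each task only after all of its dependencies; tasks maps name -> callable."""
    order = list(TopologicalSorter(config).static_order())  # raises CycleError on cycles
    for name in order:
        tasks[name]()
    return order

executed = []
tasks = {name: (lambda n=name: executed.append(n)) for name in PIPELINE_CONFIG}
order = run_pipeline(PIPELINE_CONFIG, tasks)
print(order)
```

Keeping the dependency graph in configuration means a new source or step is added by editing data, not the runner code.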

3. Monitoring and Logging

Establish comprehensive monitoring and logging systems to maintain operational visibility. Track key metrics including job duration, data volume processed, and error rates. Implement alerting mechanisms for performance anomalies and failed jobs. Create dashboards visualizing ETL performance trends to identify potential bottlenecks proactively.
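A lightweight way to capture job duration, row counts, and failures is to wrap each job in a decorator. This sketch uses only the standard `logging` and `time` modules; the in-memory `METRICS` list is a stand-in for a real metrics backend, and the `max_seconds` alert threshold is an assumed parameter.

```python
import functools
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("etl")

METRICS = []  # stand-in for a metrics backend (hypothetical)

def monitored(job_name, max_seconds=300.0):
    """Record duration, row count, and status for a job; warn on failure or slowness."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            status, rows = "success", 0
            try:
                rows = fn(*args, **kwargs)
                return rows
            except Exception:
                status = "failed"
                raise  # let the orchestrator see the failure
            finally:
                seconds = time.perf_counter() - start
                METRICS.append({"job": job_name, "status": status, "rows": rows, "seconds": seconds})
                log.info("job=%s status=%s rows=%s seconds=%.3f", job_name, status, rows, seconds)
                if status == "failed" or seconds > max_seconds:
                    log.warning("ALERT job=%s status=%s seconds=%.3f", job_name, status, seconds)
        return wrapper
    return decorator

@monitored("load_orders")
def load_orders(batch):
    # Convention assumed for this sketch: each job returns the number of rows it processed.
    return len(batch)

load_orders([{"id": 1}, {"id": 2}])
```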

4. Error Handling

Develop robust error handling strategies to maintain data integrity. Implement retry mechanisms with exponential backoff for transient failures. Design graceful degradation patterns to handle partial failures without stopping entire pipelines. Create clear error messages that facilitate quick diagnosis and implement circuit breakers to prevent cascading failures.
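Retry with exponential backoff can be sketched as below. It assumes transient failures are signaled by a dedicated exception type (`TransientError` is hypothetical) and uses "full jitter", a random wait between zero and the current backoff, so that many failing jobs do not retry in lockstep. The `sleep` parameter is injectable so the demo runs instantly.

```python
import random
import time

class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, throttling)."""

def retry_with_backoff(fn, max_attempts=5, base_delay=0.5, max_delay=30.0, sleep=time.sleep):
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts:
                raise  # give up and surface the error to the orchestrator
            # Backoff doubles per attempt (0.5s, 1s, 2s, ...) up to max_delay, with full jitter.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))

calls = {"n": 0}

def flaky_api_call():
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("rate limited")
    return {"status": "ok"}

result = retry_with_backoff(flaky_api_call, sleep=lambda s: None)  # skip real waits in the demo
print(result, calls["n"])  # {'status': 'ok'} 3
```

Only transient errors are retried here; validation errors or bad credentials should fail fast, since retrying them just delays the alert.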

5. Optimization

Continuously optimize your ETL framework for improved performance. Profile jobs regularly to identify bottlenecks and implement incremental processing where possible instead of full reloads. Consider pushdown optimization to perform filtering at source systems. Use appropriate data compression techniques and implement caching strategies for frequently accessed information.
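Pushdown optimization means asking the source to filter and aggregate instead of moving every row into the pipeline first. The comparison below uses an in-memory SQLite table as a stand-in source; the `sales` table and its data are made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [(i, "EU" if i % 4 == 0 else "US", float(i)) for i in range(1000)],
)

# Without pushdown: every row is transferred, then Python filters and aggregates.
all_rows = conn.execute("SELECT region, amount FROM sales").fetchall()
eu_total_local = sum(amount for region, amount in all_rows if region == "EU")

# With pushdown: the source filters and aggregates, returning a single row.
(eu_total_pushed,) = conn.execute(
    "SELECT SUM(amount) FROM sales WHERE region = ?", ("EU",)
).fetchone()

print(len(all_rows), eu_total_local == eu_total_pushed)  # 1000 True
```

Both approaches give the same answer; the difference is that the pushed-down query moves one row instead of a thousand, a gap that grows with table size and network distance.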

Tools and Technologies for ETL Frameworks

1. ETL Tools Overview

The ETL landscape offers diverse tools to match varying business needs:

- Enterprise Solutions: Informatica PowerCenter and IBM DataStage provide robust, mature platforms with comprehensive features for complex enterprise data integration needs, though with higher licensing costs.

- Open-Source Options: Apache NiFi offers visual dataflow management, while Apache Airflow excels at workflow orchestration with Python-based DAGs. These tools provide flexibility without licensing fees.

- Mid-Market Tools: Talend and Microsoft SSIS deliver user-friendly interfaces with strong connectivity options, balancing functionality and cost for medium-sized organizations.

2. Cloud-Based ETL Solutions

Cloud-native ETL services have evolved to meet modern data processing needs:

AWS offers Glue (serverless ETL) and Data Pipeline (orchestration tool), Azure provides Data Factory, and Google Cloud features Dataflow supporting both batch and streaming workloads.

These solutions typically feature consumption-based pricing, simplified infrastructure management, and native connectivity to cloud data services. Most provide visual interfaces with underlying code generation.

3. Custom ETL Frameworks

Organizations develop custom ETL solutions when:

- Existing tools lack specific functionality for unique business requirements

- Processing extremely specialized data formats requires custom parsers

- Performance optimization needs exceed off-the-shelf capabilities

- Integration with proprietary systems demands custom connectors

- Cost concerns make open-source foundations with tailored components more attractive

Custom frameworks typically leverage programming languages like Python, Java, or Scala, often built on distributed processing frameworks such as Apache Spark.

4. Integrating with Data Warehouses

Modern ETL tools provide seamless integration with destination systems:

Cloud data warehouses like Snowflake, Redshift, and BigQuery offer optimized connectors for efficient data loading. The ELT pattern has gained popularity for cloud implementations, leveraging the computing power of modern data warehouses to perform transformations after loading raw data.

ETL vs. ELT: Understanding the Difference

1. ETL vs. ELT

ETL (Extract, Transform, Load) processes data on a separate server before loading to the target system. ELT (Extract, Load, Transform) loads raw data directly into the target system where transformations occur afterward. ETL represents the traditional approach, while ELT emerged with cloud computing and big data technologies.
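The difference is where the transformation runs. In the sketch below, an in-memory SQLite database plays the role of the target system: the ETL path cleans rows in Python before loading, while the ELT path loads raw strings first and transforms them with SQL inside the target. The feed and table names are hypothetical, and the `GLOB` check is a deliberately crude numeric filter.

```python
import sqlite3

# Hypothetical raw feed: (country, amount) as untyped strings from a source system.
raw_feed = [("  us ", "19.5"), ("DE", "5"), ("us", "abc")]
conn = sqlite3.connect(":memory:")

# --- ETL: clean and type-convert in the pipeline, then load only finished rows ---
def to_float(text):
    try:
        return float(text)
    except ValueError:
        return None  # unparseable amounts are dropped before loading

etl_rows = [(c.strip().upper(), to_float(a)) for c, a in raw_feed]
conn.execute("CREATE TABLE etl_sales (country TEXT, amount REAL)")
conn.executemany("INSERT INTO etl_sales VALUES (?, ?)", [r for r in etl_rows if r[1] is not None])

# --- ELT: land the raw strings first, then transform inside the target with SQL ---
conn.execute("CREATE TABLE raw_sales (country TEXT, amount TEXT)")
conn.executemany("INSERT INTO raw_sales VALUES (?, ?)", raw_feed)
conn.execute(
    """
    CREATE TABLE elt_sales AS
    SELECT UPPER(TRIM(country)) AS country, CAST(amount AS REAL) AS amount
    FROM raw_sales
    WHERE amount GLOB '[0-9]*'
    """
)

etl_result = conn.execute("SELECT * FROM etl_sales ORDER BY country").fetchall()
elt_result = conn.execute("SELECT * FROM elt_sales ORDER BY country").fetchall()
print(etl_result == elt_result)  # True: same output, different place of transformation
```

Note that the ELT path keeps `raw_sales` in the target, so the transformation can be changed and re-run later without extracting from the source again.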

2. ETL for Structured Data

ETL excels when working with structured data that requires complex transformations before storage. It's particularly valuable when:

- Data quality issues must be addressed before loading

- Sensitive data requires masking or encryption during the transfer process

- Target systems have limited computing resources

- Integration involves legacy systems with specific data format requirements

- Strict data governance requires validation before entering the data warehouse
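Masking sensitive fields in the transform step, before anything reaches the warehouse, might look like the sketch below. It assumes keyed pseudonymization (HMAC-SHA256) for emails, so the same customer still maps to the same token for joins, and last-four masking for card numbers; `PSEUDONYM_KEY` is a placeholder that in practice would come from a secrets manager.

```python
import hashlib
import hmac

# Placeholder only: load the real key from a secrets manager, never from source code.
PSEUDONYM_KEY = b"replace-with-secret-from-vault"

def pseudonymize(value: str) -> str:
    """Deterministic keyed hash: same input gives the same token, so joins still work."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(PSEUDONYM_KEY, normalized, hashlib.sha256).hexdigest()

def mask_card(number: str) -> str:
    """Keep only the last four digits visible."""
    digits = "".join(ch for ch in number if ch.isdigit())
    return "*" * (len(digits) - 4) + digits[-4:]

def mask_record(rec):
    return {
        **rec,
        "email": pseudonymize(rec["email"]),
        "card_number": mask_card(rec["card_number"]),
    }

masked = mask_record({"order_id": 7, "email": "Jo@Example.com", "card_number": "4111 1111 1111 1234"})
print(masked["card_number"])  # ************1234
```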

3. ELT for Big Data

ELT has gained popularity in modern data architectures because it:

- Handles large volumes of raw, unstructured, or semi-structured data

- Utilizes the processing power of cloud data warehouses and data lakes

- Supports exploratory analysis on raw data

- Provides flexibility in transformation logic after data is centralized

- Enables faster initial data loading

ELT excels in cloud environments with cost-effective storage and scalable computing resources for transformations.


Challenges in Implementing an ETL Framework

1. Data Complexity

Implementing ETL solutions often involves integrating heterogeneous data sources with varying formats, schemas, and quality standards. Organizations struggle with reconciling inconsistent metadata across systems and handling evolving source structures.

Semantic differences between similar-looking data elements can lead to incorrect transformations if not properly mapped. Schema drift, where source systems change their structure without notice, requires extraction processes that detect and adapt to those changes.
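A first line of defense against schema drift is to compare each incoming record with an expected contract and flag missing fields, new fields, and type changes before transformation runs. The contract below (`EXPECTED_SCHEMA`) is hypothetical; what to do with drifted records (quarantine, alert, or auto-evolve the target) is a policy decision this sketch leaves open.

```python
# Hypothetical data contract for incoming order records.
EXPECTED_SCHEMA = {"order_id": str, "amount": float, "order_date": str}

def detect_drift(record, expected=EXPECTED_SCHEMA):
    """Return only the drift categories that actually occurred (empty dict = no drift)."""
    incoming = set(record)
    expected_fields = set(expected)
    drift = {
        "missing": sorted(expected_fields - incoming),
        "unexpected": sorted(incoming - expected_fields),
        "type_mismatch": sorted(
            f for f in expected_fields & incoming if not isinstance(record[f], expected[f])
        ),
    }
    return {k: v for k, v in drift.items() if v}

drift = detect_drift({"order_id": "A1", "amount": "19.99", "order_ts": "2024-01-03"})
print(drift)
# {'missing': ['order_date'], 'unexpected': ['order_ts'], 'type_mismatch': ['amount']}
```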

2. Real-Time Processing

Traditional batch-oriented ETL frameworks face challenges when adapting to real-time requirements. Stream processing demands different architectural approaches and much tighter latency budgets.

Technical difficulties include managing backpressure when downstream systems can’t process data quickly enough, handling out-of-order events, and ensuring exactly-once processing semantics.
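Exactly-once guarantees usually come from the streaming platform itself, but consumers commonly add their own protection against duplicates and reordering. The sketch below assumes each event carries a unique, gap-free integer `seq` (a hypothetical field); it buffers early arrivals in a min-heap, emits events strictly in order, and ignores redelivered events. Real streams more often use event-time watermarks, and in production this state would have to be persisted together with the output.

```python
import heapq

class OrderedDedupConsumer:
    """Emit events in sequence order and drop duplicate deliveries (single-process sketch)."""

    def __init__(self):
        self.next_seq = 1
        self.buffer = []      # min-heap of (seq, event) waiting for earlier events
        self.pending = set()  # seq numbers currently buffered

    def receive(self, event):
        seq = event["seq"]
        if seq < self.next_seq or seq in self.pending:
            return []  # duplicate: already emitted or already buffered
        heapq.heappush(self.buffer, (seq, event))
        self.pending.add(seq)
        emitted = []
        # Release every buffered event that is now contiguous with what was emitted.
        while self.buffer and self.buffer[0][0] == self.next_seq:
            _, ev = heapq.heappop(self.buffer)
            self.pending.discard(self.next_seq)
            emitted.append(ev)
            self.next_seq += 1
        return emitted

consumer = OrderedDedupConsumer()
arrivals = [{"seq": 2, "v": "b"}, {"seq": 1, "v": "a"}, {"seq": 1, "v": "a"}, {"seq": 3, "v": "c"}]
out = []
for e in arrivals:
    out.extend(consumer.receive(e))
print([e["v"] for e in out])  # ['a', 'b', 'c']
```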

3. Data Security

Security concerns permeate every aspect of the ETL process, particularly in regulated industries. Challenges include securely extracting data while respecting access controls, protecting sensitive information during transit, and implementing data masking during transformation. Compliance requirements may dictate specific data handling protocols that complicate ETL design.

4. Managing Large Data Volumes

Processing massive datasets strains computational resources and network bandwidth. ETL frameworks must implement efficient partitioning strategies to enable parallel processing while managing dependencies between related data elements. Organizations frequently underestimate infrastructure requirements, leading to performance bottlenecks that can cause processing windows to be missed.
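One basic technique for keeping memory bounded on large inputs is to stream rows and process them in fixed-size chunks. In this sketch, `row_source` is a hypothetical stand-in for a database cursor or file reader, and the local `batched` helper is written out for clarity (Python 3.12 also ships `itertools.batched`, which yields tuples).

```python
from itertools import islice

def batched(iterable, size):
    """Yield lists of up to `size` items without materializing the whole input."""
    it = iter(iterable)
    while chunk := list(islice(it, size)):
        yield chunk

def row_source(n):
    # Stand-in for a cursor or file reader that streams rows lazily.
    for i in range(n):
        yield {"id": i, "amount": i % 100}

total, batches = 0, 0
for chunk in batched(row_source(100_000), size=10_000):
    total += sum(r["amount"] for r in chunk)  # transform/load one bounded chunk at a time
    batches += 1
print(batches, total)  # 10 4950000
```

Chunk size is a tuning knob: larger chunks reduce per-batch overhead (for example, fewer load round trips), while smaller chunks cap memory use and shorten the work lost if a batch fails.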


Real World Implementation Examples of ETL Framework

1. Financial Services Industry

JPMorgan Chase implemented an enterprise ETL framework to consolidate customer data across their numerous legacy systems. Using Informatica PowerCenter, they created a customer 360 view by extracting data from mainframe systems, CRM databases, and transaction processing platforms.

Their framework included robust change data capture mechanisms that significantly reduced processing time through incremental updates. The implementation enforced strict data quality rules to maintain regulatory compliance while encryption protocols secured data throughout the pipeline.

2. E-commerce Platform

Amazon developed a sophisticated ETL framework using Apache Airflow to process their massive daily transaction volumes. Their implementation includes real-time inventory management through Kafka streams connected to warehouse systems across global fulfillment centers.

The framework features intelligent data partitioning that distributes processing across multiple AWS EMR clusters during peak shopping periods like Prime Day, automatically scaling based on load. Their specialized transformations support dynamic pricing algorithms by combining sales history, competitor data, and seasonal trends.

3. Healthcare Provider

Cleveland Clinic built an ETL solution with Microsoft SSIS and Azure Data Factory to integrate electronic health records, billing systems, and clinical research databases. Their implementation includes advanced data anonymization during the transformation phase to enable research while maintaining HIPAA compliance.

The framework processes nightly batch updates for analytical systems while supporting near-real-time data feeds for clinical dashboards. Custom validation rules ensure data quality for critical patient information.

4. Manufacturing Company

Siemens implemented Talend for IoT sensor data processing across their smart factory floors. Their ETL framework ingests time-series data from thousands of production line sensors, applies statistical quality control algorithms during transformation, and loads results into both operational systems and a Snowflake data warehouse.

The implementation features edge processing that filters anomalies before transmission to central systems, reducing bandwidth requirements significantly. Automated alerts trigger maintenance workflows when patterns indicate potential equipment failures.

The Future of ETL Frameworks

1. Cloud Adoption

ETL frameworks are rapidly migrating to cloud environments with organizations embracing platforms like AWS Glue, Azure Data Factory, and Google Cloud Dataflow. This shift eliminates infrastructure management burdens while enabling elastic scaling. Cloud-native ETL solutions offer consumption-based pricing models that optimize costs by aligning expenses with actual usage rather than peak capacity requirements.

2. Real-Time Data Processing

The demand for real-time insights is transforming ETL architectures from batch-oriented to stream-based processing. Stream processing technologies like Apache Kafka, Apache Flink, and Databricks Delta Live Tables are becoming core components of modern ETL pipelines, enabling businesses to reduce decision latency from days to seconds.

3. AI and Automation

Machine learning is revolutionizing ETL processes through automated data discovery, classification, and quality management. AI-powered tools can now suggest transformation logic based on historical patterns. Natural language interfaces make ETL accessible to business users without deep technical expertise.

4. Serverless ETL

Serverless architectures eliminate the need to provision and manage ETL infrastructure, automatically scaling resources in response to workload demands. Function-as-a-Service approaches enable granular cost control with per-execution pricing models. Event-driven triggers are replacing rigid scheduling, allowing ETL processes to respond immediately to new data.


Experience Next-Level Data Integration with Kanerika

Kanerika is a global consulting firm that specializes in providing innovative and effective data integration services. We offer expertise in data integration, analytics, and AI/ML, focusing on enhancing operational efficiency through cutting-edge technologies. Our services aim to empower businesses worldwide by driving growth, efficiency, and intelligent operations through hyper-automated processes and well-integrated systems.

Our flagship product, FLIP, an AI-powered data operations platform, revolutionizes data transformation with its flexible deployment options, pay-as-you-go pricing, and intuitive interface. With FLIP, businesses can streamline their data processes effortlessly, making data management a breeze.

Kanerika also offers exceptional AI/ML and RPA services, empowering businesses to outsmart competitors and propel them towards success. Experience the difference with Kanerika and unleash the true potential of your data. Let us be your partner in innovation and transformation, guiding you towards a future where data is not just information but a strategic asset driving your success.


Frequently Asked Questions

1. What is an ETL framework?

An ETL (Extract, Transform, Load) framework is a structured approach to data integration that helps extract data from multiple sources, transform it into a consistent format, and load it into a target system such as a data warehouse.

2. Why do businesses need an ETL framework?

Businesses generate data from various platforms and applications. An ETL framework integrates this data, cleans it, and prepares it for analysis. Without an ETL framework, data silos and inconsistencies can hinder decision-making and slow down operations.

3. What are the core components of an ETL process?

The core components include:

- Extract: Retrieving data from various sources.

- Transform: Cleaning, normalizing, and applying business rules.

- Load: Inserting transformed data into a data warehouse or destination system.

Each stage is essential for delivering clean, usable data.

4. How does an ETL framework improve data quality?

ETL frameworks apply validation checks, error handling, and cleansing routines to raw data. They correct inconsistencies, remove duplicates, and enforce business rules before loading data into the target system.

5. Can ETL frameworks handle real-time data?

Yes, modern ETL frameworks support real-time or near real-time processing through streaming architectures. This allows data to be processed and delivered continuously instead of in scheduled batches.

6. How does an ETL framework support scalability?

ETL frameworks are designed to scale horizontally or vertically to meet growing data demands. They support distributed processing, parallel execution, and modular components to handle increasing data volumes efficiently.

7. What are the benefits of using a custom ETL framework over off-the-shelf tools?

A custom ETL framework offers flexibility to address specific business logic and compliance requirements. It can be optimized for performance, integrated tightly with internal systems, and controlled end-to-end.