Do you know why 87% of AI projects still fail to reach production? The answer might surprise you: it’s not the algorithms or computing power that’s holding us back.

Your AI agent can write perfect code, analyze data patterns, and even draft that quarterly report. But ask it to pull your latest CRM data, connect with your team’s Slack history, or reference that critical document from last month’s board meeting? Suddenly, it’s working with one hand tied behind its back.

This disconnect between AI capability and real-world application has created what researchers call the “context gap”—where agents operate in isolation, cut off from the very information sources that could make them truly valuable. The result? Hallucinations, incomplete responses, and frustrated teams watching promising AI initiatives crumble.

This is where MCP (Model Context Protocol) starts to make a real difference. It’s not about making the AI smarter. It’s about giving it the right context, at the right time, from the right sources. In our latest webinar, Amit Kumar Jena sheds light on what MCP actually is and why it could be the missing link to making AI agents genuinely useful at work.

The Idea Behind Protocols Like MCP

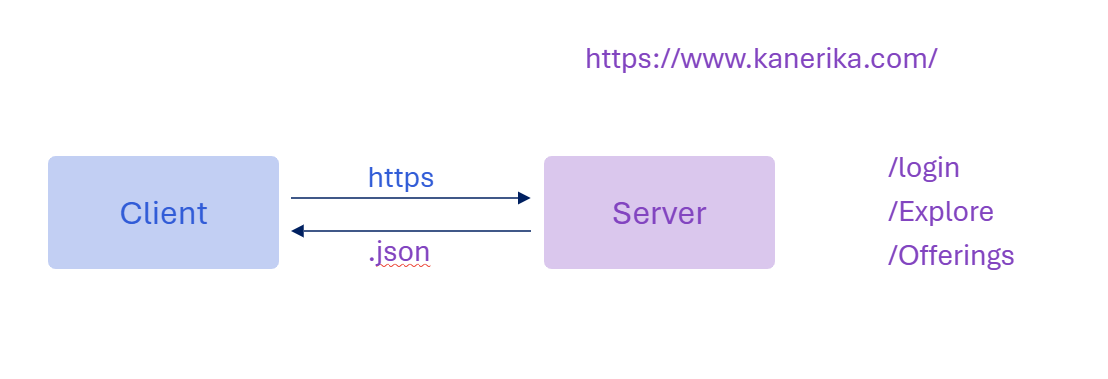

Let’s say you own a website—like Kanerika.com.

On one side, you’ve got a Client (basically, a user’s browser or app), and on the other side, there’s a Server (where your website’s data and logic live). Now, when someone wants to log in, explore, or check offerings on your site, the client and server need to talk to each other.

How do they do that?

They use a protocol—in this case, HTTPS with REST APIs. So, the client sends a request (often in JSON format) and the server replies with a response, also in JSON. This is a clear, agreed-upon way of sharing data, so both sides know what to expect.
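To make the idea of an agreed-upon format concrete, here is a minimal Python sketch that simulates the exchange in memory rather than over HTTPS. The endpoint paths (`/api/offerings`, `/api/search`) and field names are hypothetical; what matters is that both sides agree on the JSON shape, so each can parse what the other sends.

```python
import json

# Hypothetical site data; a real server would read this from a database.
OFFERINGS = [{"id": 1, "name": "Data Analytics"}, {"id": 2, "name": "AI Agents"}]


def server_handle(method: str, path: str, body: str) -> tuple:
    """Simulated REST endpoint: returns (HTTP status code, JSON response body)."""
    if method == "GET" and path == "/api/offerings":
        return 200, json.dumps({"offerings": OFFERINGS})
    if method == "POST" and path == "/api/search":
        query = json.loads(body)["query"].lower()  # parse the JSON request body
        hits = [o for o in OFFERINGS if query in o["name"].lower()]
        return 200, json.dumps({"results": hits})
    return 404, json.dumps({"error": "not found"})


def client_search(query: str) -> list:
    """Client side: serialize the request as JSON, then parse the JSON reply."""
    status, raw = server_handle("POST", "/api/search", json.dumps({"query": query}))
    if status != 200:
        raise RuntimeError(f"request failed with status {status}")
    return [item["name"] for item in json.loads(raw)["results"]]


print(client_search("ai"))  # ['AI Agents']
```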

Understanding How LLMs Worked (Before MCP)

Now let’s compare that to how Large Language Models (LLMs) used to work, before Model Context Protocol (MCP) came into the picture.

1. Basic GenAI Model

This is like giving a single brain (the LLM) a question and expecting a smart answer.

- You give it input (text prompt).

- It gives back output (a response).

- Models like GPT, LLaMA, Gemini fit here.

- The model tries to solve the task on its own—no tools, no extra help.

The problem? It can only respond based on what it already knows. So, if it doesn’t have recent or domain-specific info, it either gets stuck or starts guessing.

2. Multimodal Agents

Then came more advanced setups. These LLMs were paired with external tools:

- They could talk to databases (SQL),

- Look up Wikipedia (Wiki),

- Pull facts from a RAG database,

- Even run web searches (through a search-engine results API).

So, now the AI Assistant wasn’t just relying on memory—it could look stuff up. But even here, there was no clear protocol for how all these parts talked to each other. It was messy, slow, and hard to manage.
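The “no clear protocol” problem is easier to see in code. In this hypothetical sketch, each tool exposes a different calling convention, so the agent needs bespoke glue for every one. All tool names and signatures are made up for illustration.

```python
# Hypothetical tools, each with its own ad-hoc interface (no shared protocol).
def sql_tool(statement: str, params: tuple) -> list:
    return [("Q2", 1200)]  # stand-in for a real database cursor


class WikiClient:
    def lookup(self, title: str, lang: str = "en") -> dict:
        return {"title": title, "summary": f"Summary of {title}"}


def web_search(options: dict) -> str:
    return f"Top result for {options['q']}"


def agent_call(tool_name: str, user_text: str) -> str:
    """Bespoke glue: one branch per tool, each reshaping inputs and outputs its own way."""
    if tool_name == "sql":
        rows = sql_tool("SELECT quarter, revenue FROM sales WHERE quarter = ?", (user_text,))
        return ", ".join(f"{quarter}: {revenue}" for quarter, revenue in rows)
    if tool_name == "wiki":
        return WikiClient().lookup(user_text)["summary"]
    if tool_name == "search":
        return web_search({"q": user_text})
    # Every new tool needs another branch, and any signature change breaks this function.
    raise ValueError(f"no integration written for {tool_name!r}")


print(agent_call("sql", "Q2"))  # Q2: 1200
```

Adding a CRM or Slack connector here means writing yet another branch with its own argument and output format, which is exactly the maintenance burden a shared protocol is meant to remove.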

And that’s why MCP matters.

Just like APIs gave websites a standard way to communicate, MCP gives AI agents a cleaner, more reliable way to fetch context, ask for data, and respond better. But that part comes next.

What Are the Current Limitations of AI Agents without MCP?

Even though AI agents seem smart, they struggle with quite a few things under the hood:

1. Inconsistent Tool Usage

AI agents can connect to tools, but there’s no standard way to do it. It’s like plugging random chargers into a phone—sometimes it works, sometimes it fries the battery. This causes erratic behavior and unreliable results.

2. Limited Control

Once you hand something off to the AI, it’s hard to steer it or correct it mid-task. You often don’t know how it came to a decision or why it chose a certain source.

3. Lack of Composability

AI agents can’t easily mix and match data or tools. If you want to build more complex tasks using smaller parts, tough luck—it’s not modular.

4. Limited Context

They often miss out on real-time data, user history, or ongoing business context. So they end up giving half-right answers.

5. Brittle Integrations

If something changes—like a data source or API—it breaks the whole setup. These agents aren’t very adaptable.

6. Security Risks

Without standard checks, giving them access to sensitive systems can be risky. No clear guardrails means higher chances of leaks or misuse.

7. Poor Reusability

Every time you build something new, you start from scratch. There’s no clean way to reuse what worked before.

8. Static Knowledge

They rely mostly on what was trained into them. If your data or tools change, the agent doesn’t automatically keep up.

Understanding Model Context Protocol (MCP)

Model Context Protocol (MCP) is like a rulebook that helps AI agents stay informed, stay connected, and act more reliably.

Let’s break that down simply.

Right now, AI models—like ChatGPT, Gemini, or Claude—mostly work in isolation. They’re great at language but struggle to understand what’s actually going on in the environment where they’re being used. They might not know:

- What tools are available to them

- What task they’re solving

- What the user already knows

- Or even what happened 5 minutes ago

That’s where MCP steps in.

MCP gives a structured way to feed real-time context to AI models. It tells the model:

- What problem it’s solving

- What data or tools it can use

- Who it’s interacting with

- And what the current state of the system is

It works much like the way APIs let web applications talk to each other. MCP gives the AI a clean, reliable way to ask for what it needs, fetch information, and act on it, which reduces confusion and the guesswork that leads to hallucinations.
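Under the hood, MCP messages are JSON-RPC 2.0 objects. As a rough sketch of what “asking for what it needs” looks like on the wire, the snippet below builds a `tools/list` request (discover the available tools) and a `tools/call` request (invoke one), then reads a sample reply. The method names follow the MCP specification; the tool name `search_crm`, its arguments, and the reply text are hypothetical.

```python
import json
from itertools import count
from typing import Optional

_next_id = count(1)  # each request gets a unique id so replies can be matched to it


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object."""
    message = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        message["params"] = params
    return message


def tool_result_text(reply: dict) -> list:
    """Pull the text items out of a tools/call reply."""
    return [item["text"] for item in reply["result"]["content"] if item["type"] == "text"]


# 1. Ask the server which tools it offers.
list_tools = jsonrpc_request("tools/list")
# 2. Call one of them with arguments (tool name and arguments are hypothetical).
call_tool = jsonrpc_request(
    "tools/call", {"name": "search_crm", "arguments": {"account": "Acme", "limit": 5}}
)
print(json.dumps(list_tools))  # {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}

# A reply the server might send back for the tools/call request (illustrative data).
reply = {
    "jsonrpc": "2.0",
    "id": call_tool["id"],
    "result": {"content": [{"type": "text", "text": "Acme: 3 open deals"}], "isError": False},
}
print(tool_result_text(reply))  # ['Acme: 3 open deals']
```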

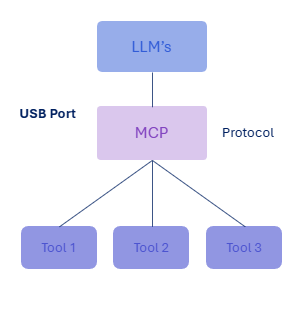

How LLMs Work with MCP

Think of MCP as the USB-C port between the AI model (LLM) and the tools it needs to use. It acts like a translator and connector all at once. On one side, you have the LLM (like GPT or Claude), and on the other side, you’ve got tools like code editors, databases, APIs, etc.

Without MCP, the AI would need a custom setup to connect with each tool—which is messy and time-consuming. MCP removes that mess.

MCP+LLM

- The LLM talks to MCP, not directly to every tool.

- MCP acts as a protocol or bridge.

- Any tool (Tool 1, 2, 3) can plug into MCP, as long as it follows the protocol.

- Developers don’t need to hardcode each tool’s integration anymore—they just follow the MCP structure.

So yes, MCP is like a universal port—you plug in what you need, and it just works.
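The “universal port” idea comes down to every tool describing itself the same way: a name, a description, and a JSON Schema for its inputs. Below is a minimal, dependency-free sketch of a server that registers tools and answers `tools/list` and `tools/call` requests. It mimics the shape of MCP messages but is not the official MCP SDK, and the `lookup_order` tool is hypothetical.

```python
import json
from typing import Any, Callable, Dict

TOOLS: Dict[str, Dict[str, Any]] = {}


def tool(name: str, description: str, input_schema: dict) -> Callable:
    """Decorator: register a function as a tool that describes itself in a uniform way."""
    def register(fn: Callable) -> Callable:
        TOOLS[name] = {"description": description, "inputSchema": input_schema, "fn": fn}
        return fn
    return register


@tool(
    "lookup_order",
    "Return the status of an order by its ID.",
    {"type": "object", "properties": {"order_id": {"type": "string"}}, "required": ["order_id"]},
)
def lookup_order(order_id: str) -> str:
    return f"Order {order_id}: shipped"  # stand-in for a real database query


def handle(message: dict) -> dict:
    """Dispatch one JSON-RPC request; the same code serves every registered tool."""
    method, msg_id = message.get("method"), message.get("id")
    if method == "tools/list":
        tools = [
            {"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
            for n, t in TOOLS.items()
        ]
        return {"jsonrpc": "2.0", "id": msg_id, "result": {"tools": tools}}
    if method == "tools/call":
        params = message.get("params", {})
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            return {"jsonrpc": "2.0", "id": msg_id,
                    "error": {"code": -32602, "message": "Unknown tool"}}
        text = entry["fn"](**params.get("arguments", {}))
        return {"jsonrpc": "2.0", "id": msg_id,
                "result": {"content": [{"type": "text", "text": text}], "isError": False}}
    return {"jsonrpc": "2.0", "id": msg_id, "error": {"code": -32601, "message": "Method not found"}}


request = {"jsonrpc": "2.0", "id": 7, "method": "tools/call",
           "params": {"name": "lookup_order", "arguments": {"order_id": "A42"}}}
print(json.dumps(handle(request)["result"]))
```

Plugging in a second tool means writing one more decorated function; `handle` and any client calling it stay unchanged. The codes -32601 and -32602 are the standard JSON-RPC error codes for an unknown method and invalid parameters.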

Key MCP Components

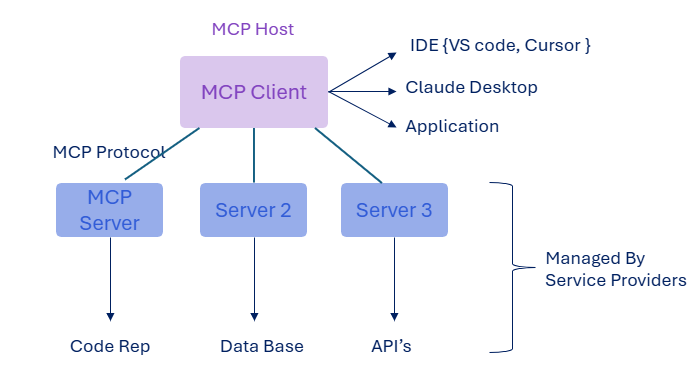

1. MCP Host

- This is where the setup lives. It could be your IDE (like VS Code), the Claude Desktop app, or any other platform running AI-powered features.

- The host runs one or more MCP Clients, which handle the actual communication with the rest of the system.

2. MCP Client

- Acts like a middleman.

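- Maintains a one-to-one connection with an MCP Server and exchanges messages with it using the MCP protocol. A host that needs several servers runs one client for each.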

3. MCP Servers

These servers are connected to different services:

- One might handle a code repository

- Another might handle a database

- Another might fetch data from external APIs

Each server may be built and maintained by a different provider, but thanks to MCP, the LLM doesn’t need to know how any of them work internally. It gets what it needs, when it needs it.
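To see how the host, clients, and servers fit together, here is an in-process sketch. Real MCP clients talk to servers over a transport such as stdio or HTTP; here each “server” is just a Python object, and the class names (`FakeServer`, `Client`, `Host`) and the repo/database tools are hypothetical. The host runs one client per server, merges their tool lists, and routes each call to whichever server owns the tool.

```python
from typing import Callable


class FakeServer:
    """Stand-in for an MCP server that wraps one service (repo, database, API)."""

    def __init__(self, name: str, tools: dict[str, Callable[..., str]]):
        self.name = name
        self.tools = tools

    def handle(self, method: str, params: dict) -> dict:
        if method == "tools/list":
            return {"tools": [{"name": n} for n in self.tools]}
        if method == "tools/call":
            text = self.tools[params["name"]](**params.get("arguments", {}))
            return {"content": [{"type": "text", "text": text}]}
        raise ValueError(f"unsupported method {method}")


class Client:
    """Holds a one-to-one connection to a single server."""

    def __init__(self, server: FakeServer):
        self.server = server

    def list_tools(self) -> list[str]:
        return [t["name"] for t in self.server.handle("tools/list", {})["tools"]]

    def call_tool(self, name: str, arguments: dict) -> str:
        result = self.server.handle("tools/call", {"name": name, "arguments": arguments})
        return result["content"][0]["text"]


class Host:
    """The application (IDE, desktop app) that runs one client per server."""

    def __init__(self, servers: list[FakeServer]):
        self.clients = [Client(s) for s in servers]
        # Map every tool name to the client whose server provides it.
        self.routes = {tool: c for c in self.clients for tool in c.list_tools()}

    def available_tools(self) -> list[str]:
        return sorted(self.routes)

    def call(self, tool: str, **arguments) -> str:
        return self.routes[tool].call_tool(tool, arguments)


host = Host([
    FakeServer("repo", {"read_file": lambda path: f"contents of {path}"}),
    FakeServer("db", {"run_query": lambda sql: f"3 rows for: {sql}"}),
])
print(host.available_tools())  # ['read_file', 'run_query']
print(host.call("read_file", path="README.md"))  # contents of README.md
```

In a real host, the LLM would pick the tool name from the merged list; this sketch also ignores name collisions between servers, which a production host would need to handle.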

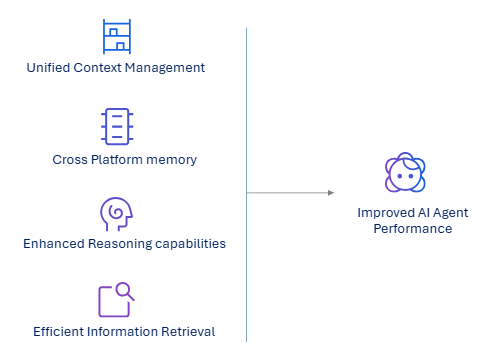

How Does Model Context Protocol Enhance AI Agents’ Performance

1. Unified Context Management

MCP brings all relevant data—like user history, app info, and tools—into one place. This helps AI agents avoid confusion and work more smoothly, since they’re not dealing with scattered or missing pieces of information.

2. Cross-platform Memory

When a shared memory store is connected through MCP, AI agents can recall what you did or said across different devices. So if you start a task on your phone and finish it on your laptop, the agent can pick up where you left off, with no repeated steps.
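MCP does not define a memory store of its own; one way to get this kind of continuity is to expose shared memory through an MCP server as a resource that any host (a phone app, a laptop IDE) can read. The in-process sketch below assumes a hypothetical `memory://users/<id>` URI scheme and a plain dict as storage, while the `resources/read` request with a `uri` parameter and a `contents` list in the result mirror the MCP resource shape.

```python
import json

# Hypothetical shared store; in practice this would be a database behind the server.
MEMORY: dict[str, list[str]] = {}


def remember(user_id: str, note: str) -> None:
    """Record a note for a user (in a real setup, via a tool call to the memory server)."""
    MEMORY.setdefault(user_id, []).append(note)


def handle_resources_read(params: dict) -> dict:
    """Answer a resources/read request for a URI like memory://users/<id>."""
    uri = params["uri"]
    prefix = "memory://users/"
    if not uri.startswith(prefix):
        raise ValueError(f"unknown resource {uri}")
    notes = MEMORY.get(uri[len(prefix):], [])
    return {"contents": [{"uri": uri, "mimeType": "application/json", "text": json.dumps(notes)}]}


# A phone session writes a note; later, a laptop session reads the same resource.
remember("u123", "Drafting Q3 sales summary; stopped at regional breakdown")
reply = handle_resources_read({"uri": "memory://users/u123"})
print(json.loads(reply["contents"][0]["text"]))
```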

3. Improved Reasoning Capabilities

Because MCP gives the AI access to complete and organized context, it connects the dots better. This leads to smarter decisions, fewer mistakes, and more relevant responses—even for tasks that need multiple steps or involve different tools.

4. Efficient Information Retrieval

MCP helps AI know exactly where to find the right data or tool. Instead of guessing or searching everything, it accesses what it needs directly, saving time and reducing errors.

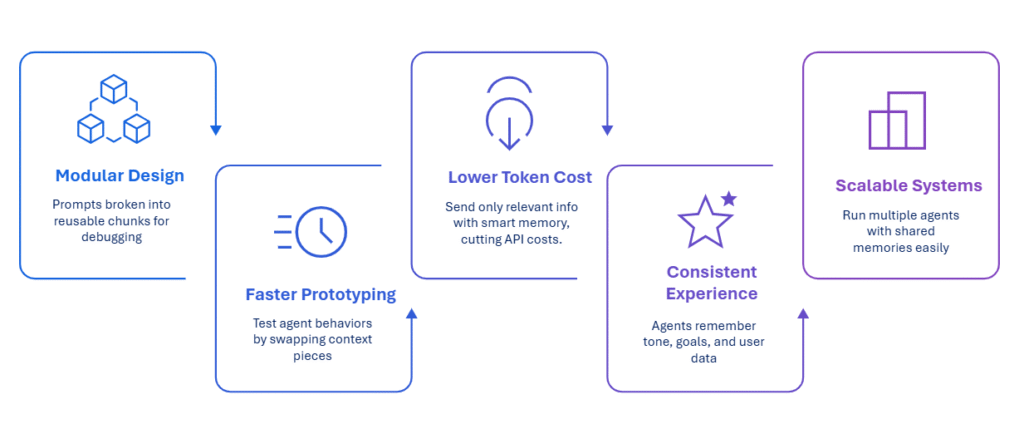

What Are the Various Benefits of MCP-Enabled AI Agents for Businesses?

1. Modular Design

Think of MCP like building with Lego blocks instead of custom-carved wood pieces. Developers can break complex AI systems into reusable, interchangeable components. Need to connect a new data source? Just snap in the right module rather than rebuilding everything from scratch.

2. Lower Token Cost

MCP acts like a smart librarian, sending only relevant information to your AI model instead of dumping entire databases into the prompt. This selective approach can substantially reduce the tokens consumed per query, cutting API costs while improving response speed and accuracy.
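Here is a back-of-the-envelope sketch of why selective context saves tokens: instead of pasting every document into the prompt, send only the snippets that overlap with the question. Word count stands in for tokens (real tokenizers count differently), and simple keyword overlap stands in for whatever relevance ranking a real MCP server might use; both are simplifying assumptions, and the documents are made up.

```python
import re


def words(text: str) -> set[str]:
    """Lowercased alphanumeric words, used as a crude relevance signal."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_context(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Keep the k documents sharing the most words with the question."""
    q = words(question)
    ranked = sorted(docs, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:k]


def approx_tokens(texts: list[str]) -> int:
    return sum(len(t.split()) for t in texts)  # crude: one word is roughly one token


docs = [
    "Q2 revenue for the EMEA region grew 12 percent over Q1",
    "The office cafeteria menu changes every Monday",
    "Holiday policy: employees receive 20 days of paid leave per year",
]
question = "How did EMEA revenue change in Q2?"
picked = select_context(question, docs)
print(picked)
print(approx_tokens(docs), "->", approx_tokens(picked))  # 29 -> 11
```

Even in this toy case, the prompt shrinks to roughly a third of its size; with real document collections the gap between “everything” and “what’s relevant” is usually far larger.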

3. Faster Prototyping

Instead of spending weeks building custom integrations, developers can quickly test new AI agent behaviors by swapping different context pieces. It’s like having a toolbox where every tool fits the same handle, so rapid experimentation becomes possible.

4. Consistent Experience

Your AI agents maintain the same behavior, tone, and capabilities across all your business systems. Whether they’re pulling data from Salesforce or Slack, users get reliable, predictable interactions instead of the inconsistent performance that plagues current implementations.

5. Scalable Systems

MCP enables shared context across multiple AI agents, creating a collaborative intelligence network. As your business grows, agents can work together seamlessly, sharing insights and maintaining consistency without the exponential complexity that typically comes with scaling AI systems.

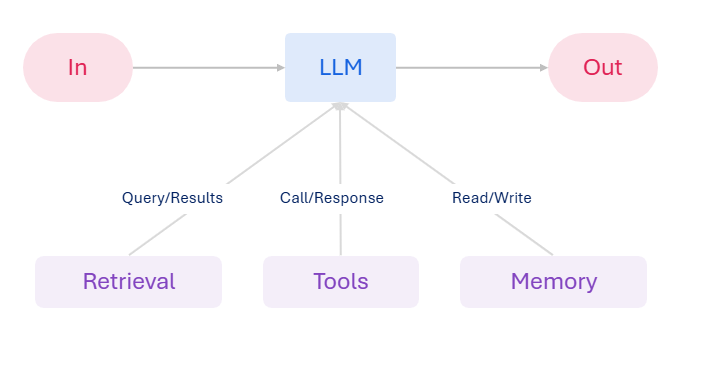

How to Build Effective AI Agents with MCP?

1. Input Data

First, the AI agent needs something to work with. This could be a question, a file, a task, or any kind of user input. Think of it as giving the AI the raw material it needs to do its job.

2. Process through LLM

Once the input is ready, it goes to the LLM (Large Language Model). This is the brain of the operation. It looks at the input and tries to understand what you’re asking or what needs to be done.

3. Query/Results Handling

Sometimes the LLM needs extra info—like data from a company database, a knowledge base, or the internet. So, it sends out a query through MCP and gets the results back. This step helps it answer with real, useful information.

4. Call/Response Integration

Here’s where the AI agent actually does something—it might call a tool, trigger an API, or take an action (like scheduling a meeting). The LLM knows what to do because it’s getting proper context and instructions via MCP.

5. Output with Memory

Finally, the agent gives you a result, but it also remembers what happened. With MCP connecting it to a memory store, it can keep that context so that next time it picks up where it left off or improves based on past interactions.
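The five steps above can be strung together into a small loop. In this sketch the “LLM” is a hard-coded stub that decides whether a tool is needed (a real model would make that choice from the tool descriptions it receives over MCP), `schedule_meeting` is a hypothetical tool, and memory is a plain list kept between turns.

```python
from dataclasses import dataclass, field
from typing import Callable


def schedule_meeting(day: str) -> str:
    return f"Meeting booked for {day}"  # hypothetical tool behind an MCP server


TOOLS: dict[str, Callable[..., str]] = {"schedule_meeting": schedule_meeting}


def fake_llm(user_input: str, memory: list[str]) -> dict:
    """Stub for step 2: returns either a tool request or a final answer."""
    if "meeting" in user_input.lower():
        return {"tool": "schedule_meeting", "arguments": {"day": "Friday"}}
    return {"answer": f"Noted. Earlier context: {memory[-1] if memory else 'none'}"}


@dataclass
class Agent:
    memory: list[str] = field(default_factory=list)

    def run(self, user_input: str) -> str:
        decision = fake_llm(user_input, self.memory)        # steps 1-2: input goes to the LLM
        if "tool" in decision:                              # steps 3-4: query and call a tool
            output = TOOLS[decision["tool"]](**decision["arguments"])
        else:
            output = decision["answer"]
        self.memory.append(f"{user_input} -> {output}")     # step 5: output with memory
        return output


agent = Agent()
print(agent.run("Please set up a meeting with the sales team"))  # Meeting booked for Friday
print(agent.run("Thanks!"))
```

The second call shows the memory at work: the stub’s answer quotes the previous turn instead of starting from nothing.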

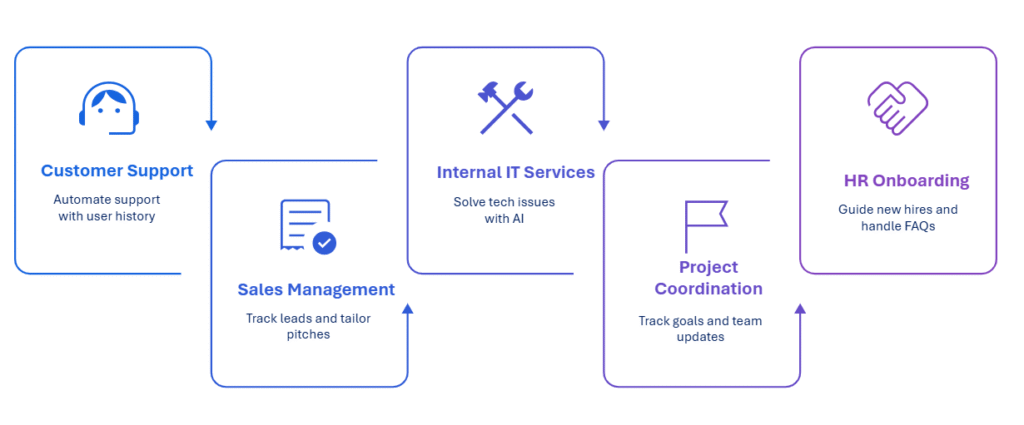

Applications of MCP-Enhanced AI Agents for Businesses

1. Customer Support

With MCP, AI agents can access past conversations, user profiles, and support history. This allows them to offer faster, more accurate help—without asking users to repeat themselves. It makes automated support feel more human and cuts down on resolution time.

2. Sales Management

AI agents can track interactions with leads, organize sales data, and even adjust pitches based on previous conversations. MCP ensures the AI has full context—like past emails or CRM info—so it can support sales teams with better timing and personalization.

3. Internal IT Services

AI agents can troubleshoot common tech issues like software bugs, login problems, or access requests. MCP gives them access to system logs, IT policies, and helpdesk tools—so the agent can act like a first-line tech assistant without wasting employee time.

4. Project Coordination

AI agents using MCP can follow project goals, track task progress, and share updates across teams. They can even remind teammates of deadlines or changes. With consistent access to tools like calendars and project boards, they help keep everyone aligned and informed.

5. HR Onboarding

New hires often have loads of questions. AI agents powered by MCP can guide them through policies, help them find documents, or answer FAQs. Since MCP connects the AI to relevant systems and HR tools, responses are accurate, timely, and consistent.

Kanerika’s Powerful AI Agents and Business Needs They Address

DokGPT

DokGPT is your AI-powered chat assistant that brings your entire business knowledge to tools like WhatsApp, Microsoft Teams, and more. It helps you get answers instantly—without digging through folders or waiting on emails.

No matter where you are, DokGPT gives you direct access to company data—whether it’s in a document, spreadsheet, video, or business system.

Here’s what makes it stand out:

- Instant Answers: Ask a question about any file, and get clear, accurate replies in seconds.

- Works with Any Format: Supports documents, videos, spreadsheets, and HR data.

- Smart Summaries: Get the key points from long reports or training videos—fast.

- Connects to Your Tools: Taps into platforms like Azure, Zoho, and others.

- Multilingual & Visual Support: Understand content in any language and get tables or charts in chat.

KarL AI

KarL AI is your go-to AI assistant for making sense of business data—no coding, no confusing dashboards. Just ask questions in plain English and get answers you can act on.

Whether you’re checking sales performance, spotting trends, or reviewing product metrics, KarL helps you get there faster.

Here’s what makes KarL stand out:

- Talk to Your Data: Ask questions like “How did sales perform last month?” and get clear answers, charts, and insights.

- Visual Insights: KarL builds charts automatically to help you see patterns instantly.

- Simple Stats: Understand trends, spikes, and gaps without needing a degree in analytics.

- Works with What You Have: Easily connects to your spreadsheets, databases, and files.

- In-depth Exploration: Ask follow-ups to dig deeper into any detail without starting over.

Elevate Your Enterprise Workflows with Kanerika’s Agentic AI Solutions

Kanerika brings deep expertise in AI/ML and purpose-built agentic AI to help businesses solve real challenges and drive measurable impact. From manufacturing to retail, finance to healthcare—we work across industries to boost productivity, cut costs, and unlock smarter ways to operate.

Our custom-built AI agents and GenAI models are designed to tackle specific business bottlenecks. Whether it’s streamlining inventory management, speeding up information access, or making sense of large video datasets—our solutions are built to fit your workflows.

Use cases include fast document retrieval, sales and financial forecasting, arithmetic data checks, vendor evaluation, and intelligent pricing strategies. We also enable smart video analysis and cross-platform data integration—so your teams spend less time hunting for answers and more time acting on them.

At Kanerika, we don’t just build AI. We help you use it meaningfully.

Partner with us to turn everyday tasks into intelligent outcomes.

Frequently Asked Questions

What is a Model Context Protocol?

Model Context Protocol (MCP) is a standard way for large language models (LLMs) to receive real-time context from external tools, systems, or environments. It helps AI agents stay updated, informed, and responsive by organizing and delivering relevant task, user, and tool-specific data.

How Does MCP Work?

MCP acts as a bridge between an AI model and external tools or services. It collects context—like task goals, user data, or system state—and sends it to the model. This structured exchange enables the model to respond more accurately and perform actions based on current information.

Is MCP like an API?

MCP and APIs serve similar goals—data exchange—but in different ways. APIs connect software systems, while MCP connects LLMs to tools through structured context. Think of MCP as an “API for AI agents” that enables smarter, more context-aware responses without hardcoding each integration.

Why do we use MCP?

MCP is used to improve the performance of AI agents by providing them with relevant, real-time context. It reduces errors, improves decision-making, supports tool usage, and makes AI more useful across complex workflows without needing custom integrations for every new system.

Is Model Context Protocol free?

The protocol itself is open for implementation, though using it may involve costs depending on the tools, platforms, or hosting environments involved. If a vendor builds and maintains MCP-based systems, those services may be priced accordingly, but the protocol is not inherently paid or proprietary.

Can ChatGPT use MCP?

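Support has been evolving. ChatGPT did not originally ship with built-in MCP support, but OpenAI has since added MCP support to its developer tools, such as the Agents SDK and the Responses API. Developers can also build external systems that feed ChatGPT structured context following MCP principles through API-based wrappers, making it behave like an MCP-aware agent. Check OpenAI’s current documentation for what is supported today.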

What is the advantage of MCP?

MCP enables AI agents to access up-to-date context from different sources, improving their accuracy, reliability, and usefulness. It simplifies tool integration, allows memory across sessions or platforms, and reduces the complexity of building scalable, intelligent assistants across enterprise systems.

Will MCP replace API?

No, MCP won’t replace APIs. Instead, it works alongside them. APIs are still essential for data exchange between systems. MCP helps models understand and use that data more intelligently by structuring context delivery. It’s a complement to APIs, not a replacement.