“If the data isn’t ‘big,’ the machine will function as a calculator, not an oracle.” This quote comes from an article by the insurance company Lemonade on AI and big data use cases in insurance.

Here’s an often overlooked truth: We turn to insurance companies only when we find ourselves in serious trouble.

Here’s another unfortunate truth: Traditionally, insurance companies have relied on outdated demographic data, some dating back 40 years, to create their products.

Why should this concern us? This archaic approach often leads to incorrectly priced policies and missed financial opportunities.

However, there is a light at the end of this tunnel!

The application of big data in insurance is proving to be a game-changer for the industry.

Here are some improvements brought about by the implementation of big data in the insurance industry: 30% better access to insurance services, 40-70% cost savings, and a whopping 60% higher fraud detection rates.

This blog aims to illuminate the important role of big data use cases in the insurance industry and the innovative ways in which insurance companies can leverage big data.

Big Data’s Impact On The Insurance Sector’s Growth

The insurance sector is now on the cusp of a revolution, thanks to big data in insurance. Anna Maria D’Hulster, Secretary-General of The Geneva Association, aptly stated, “Going forward, access to data, and the ability to derive new risk-related insights from it will be a key factor for competitiveness in the insurance industry.”

The challenge in the insurance sector has always been twofold.

On one hand, customers grapple with questions about the reliability, offers, and market reputation of insurance companies. On the other hand, insurers struggle to comprehend customer behavior, fraud, policy risks, and claim sureties.

These challenges, however, are being addressed head-on with the application of big data analytics in insurance.

Investment in big data in the insurance industry is escalating, with projections of up to $4.6 billion by 2023.

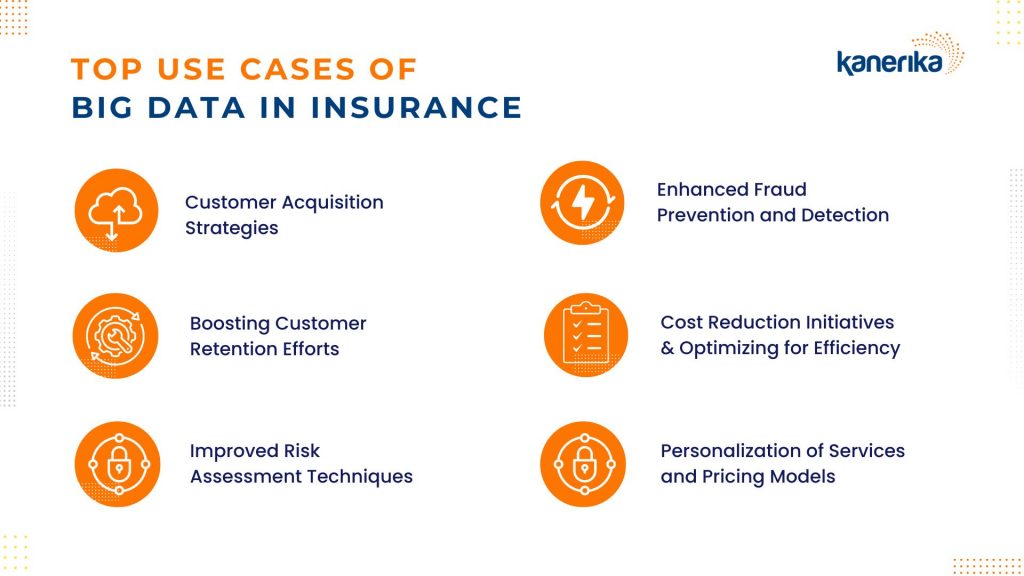

Let’s delve into 7 use cases where big data is reshaping the insurance sector for insurers and customers alike.

7 Key Big Data Use Cases in Insurance

Use Case 1: Customer Acquisition Strategies

Did you know that data-driven organizations are 23 times more likely to excel in customer acquisition? According to the McKinsey Global Institute, data-driven entities are six times as likely to retain customers and an impressive 19 times more likely to be profitable.

The adage ‘knowledge is power’ has never been more pertinent.

In today’s digital era, every online action generates a wealth of data. This data, emanating from social networks, emails, and customer feedback, forms a colossal pool of unstructured data, a vital component of big data. For the insurance sector, this is a goldmine.

Using big data analytics in insurance transforms this unstructured data into actionable insights. Imagine moving beyond traditional surveys and questionnaires, which frequently produce misleading insights.

Instead, insurance companies can now use big data insurance strategies to analyze online customer behaviors, uncovering patterns and preferences that were previously hidden.

The result? A more streamlined, effective, and customer-centric approach to acquisition.

Use Case 2: Boosting Customer Retention Efforts

Big data in insurance helps insurers understand their customers’ preferences better, which can lead to an increase in customer retention.

While acquiring new customers is important, there’s another step in the funnel that is even more pivotal: customer retention.

Here’s a cold fact: the probability of selling to an existing customer ranges between 60% and 70%, significantly higher than the 5% to 20% likelihood of selling to a new prospect.

In the context of the insurance industry, where the average customer retention rate hovers around 83%, the question arises: how can big data in insurance help catapult this figure into the coveted 90s range?

A high customer retention rate is a hallmark of success, and this is where big data analytics in insurance comes into play.

The application of big data in the insurance industry allows for the creation of sophisticated algorithms that can detect early signs of customer dissatisfaction.

These insights enable insurance companies to respond swiftly to customer queries while also improving their services in real time to resolve specific customer grievances.
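As a rough illustration of what detecting early signs of dissatisfaction could look like, the sketch below scores policyholders on a few hand-picked signals. The field names, weights, and threshold are hypothetical assumptions made for this example; a production system would more likely learn them from historical churn data.

```python
from dataclasses import dataclass


@dataclass
class CustomerActivity:
    """Hypothetical per-customer signals; names are illustrative only."""
    customer_id: str
    complaints_last_90d: int
    days_since_last_login: int
    open_claim_days: int  # age of the oldest unresolved claim, 0 if none


def churn_risk_score(a: CustomerActivity) -> float:
    """Return a 0..1 score; higher means more signs of dissatisfaction."""
    # Each signal is capped at 1.0, then combined with assumed weights.
    complaints = min(a.complaints_last_90d / 3, 1.0)
    inactivity = min(a.days_since_last_login / 180, 1.0)
    slow_claim = min(a.open_claim_days / 60, 1.0)
    return round(0.5 * complaints + 0.2 * inactivity + 0.3 * slow_claim, 3)


def flag_at_risk(customers, threshold=0.5):
    """Return IDs of customers whose score meets the assumed threshold."""
    return [c.customer_id for c in customers if churn_risk_score(c) >= threshold]


customers = [
    CustomerActivity("C-001", complaints_last_90d=0, days_since_last_login=10, open_claim_days=0),
    CustomerActivity("C-002", complaints_last_90d=3, days_since_last_login=90, open_claim_days=45),
]
at_risk = flag_at_risk(customers)
print(at_risk)
```

Customers on the flagged list could then be routed to a service team for proactive outreach before renewal.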

Use Case 3: Improved Risk Assessment Techniques

How does big data transform traditional risk assessment in the insurance sector? Two short answers: unparalleled precision and depth of analysis.

Big data in insurance enables companies to delve deeply into variables like demographics, claims history, and credit scores, revolutionizing how they predict and manage risks.

Utilizing machine learning algorithms and predictive modeling, insurers develop detailed risk profiles for policyholders, leading to more tailored coverage and premium rates.
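To make the link between a risk profile and a premium concrete, here is a minimal sketch. It assumes a logistic model with made-up coefficients (not fitted to any real data) and a hypothetical portfolio-average claim probability; real pricing models are trained on historical claims and are subject to regulatory constraints on which variables may be used.

```python
import math


def claim_probability(age: int, prior_claims: int, credit_score: int) -> float:
    """Logistic model with made-up coefficients (illustrative, not fitted)."""
    z = (
        -2.0
        + 0.6 * prior_claims
        - 0.01 * (age - 40)
        - 0.004 * (credit_score - 650)
    )
    return 1 / (1 + math.exp(-z))


def annual_premium(base_premium: float, probability: float,
                   portfolio_average: float = 0.12) -> float:
    """Scale the base premium by risk relative to an assumed portfolio average."""
    factor = probability / portfolio_average
    # Clamp so no policyholder pays less than half or more than triple the base.
    factor = max(0.5, min(factor, 3.0))
    return round(base_premium * factor, 2)


low_risk = claim_probability(age=45, prior_claims=0, credit_score=750)
high_risk = claim_probability(age=25, prior_claims=3, credit_score=580)
print(annual_premium(1000, low_risk), annual_premium(1000, high_risk))
```

The clamp is a design choice in this sketch: it keeps a single extreme prediction from producing an unreasonable price.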

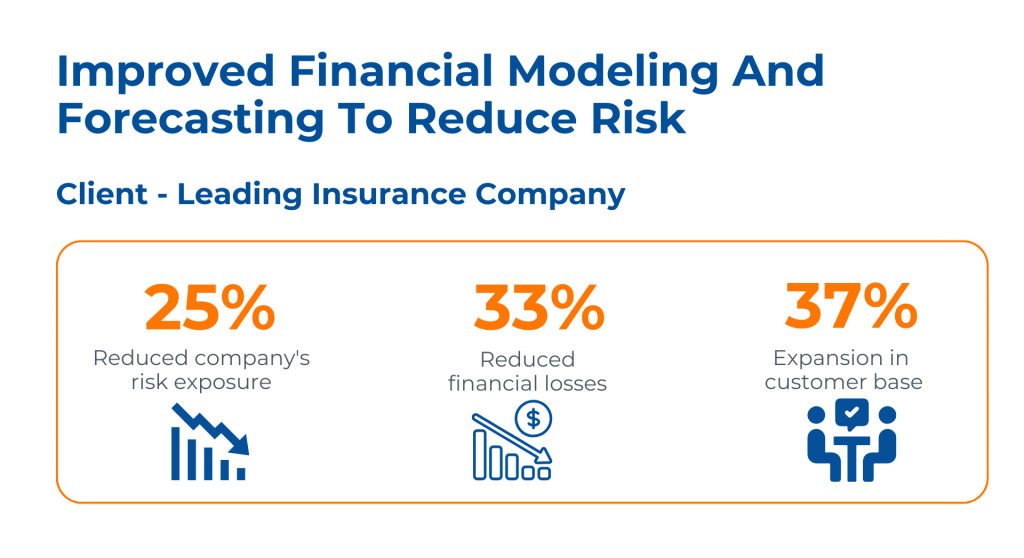

Here’s an example of how Kanerika’s team used financial modeling and forecasting to reduce risk.

When a leading insurance company faced challenges like limited financial health access and vulnerability to fraud, we implemented advanced AI data models and machine learning algorithms, including Isolation Forest and Autoencoder.

This strategic intervention not only reduced the company’s risk exposure by 25% and financial losses by 33% but also expanded its customer base by 37%. This case highlights the transformative impact of technology on optimizing insurance operations and enhancing customer trust.

Use Case 4: Enhanced Fraud Prevention and Detection

The Coalition Against Insurance Fraud reveals a staggering loss of over $80 billion annually by U.S. insurance companies due to fraudulent activities.

This affects everyone across the industry, from insurers to stakeholders. Fraud detection is therefore a top priority for the sector, and big data makes it more effective.

The solution to this pervasive problem? The strategic application of big data.

By analyzing data on policyholders, claims, and other pertinent factors, insurers can identify behavioral patterns indicative of fraud. For instance, big data enables the examination of claims data to spot inconsistencies or anomalies, such as unusually high or frequent claims.
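Spotting unusually high claims can be sketched with a simple robust statistic. The example below uses a modified z-score based on the median absolute deviation (MAD), a basic stand-in for the more sophisticated models insurers use in practice. The sample amounts are invented, and the 3.5 cutoff is a commonly used default rather than a tuned value. The same check could be applied to claim counts per policyholder to catch unusually frequent claims.

```python
from statistics import median


def flag_unusual_claims(amounts, threshold=3.5):
    """Return indices of claims whose modified z-score exceeds the threshold."""
    med = median(amounts)
    # Median absolute deviation: a spread estimate that outliers barely affect.
    mad = median(abs(x - med) for x in amounts)
    if mad == 0:
        return []  # at least half the values equal the median; score undefined
    flagged = []
    for i, x in enumerate(amounts):
        modified_z = 0.6745 * (x - med) / mad
        if abs(modified_z) > threshold:
            flagged.append(i)
    return flagged


# Invented claim amounts; one is far larger than the rest.
claim_amounts = [1200, 950, 1100, 1300, 1050, 980, 25000, 1150]
suspicious = flag_unusual_claims(claim_amounts)
print(suspicious)
```

A flagged claim is a candidate for manual review, not proof of fraud; legitimate large losses will also stand out.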

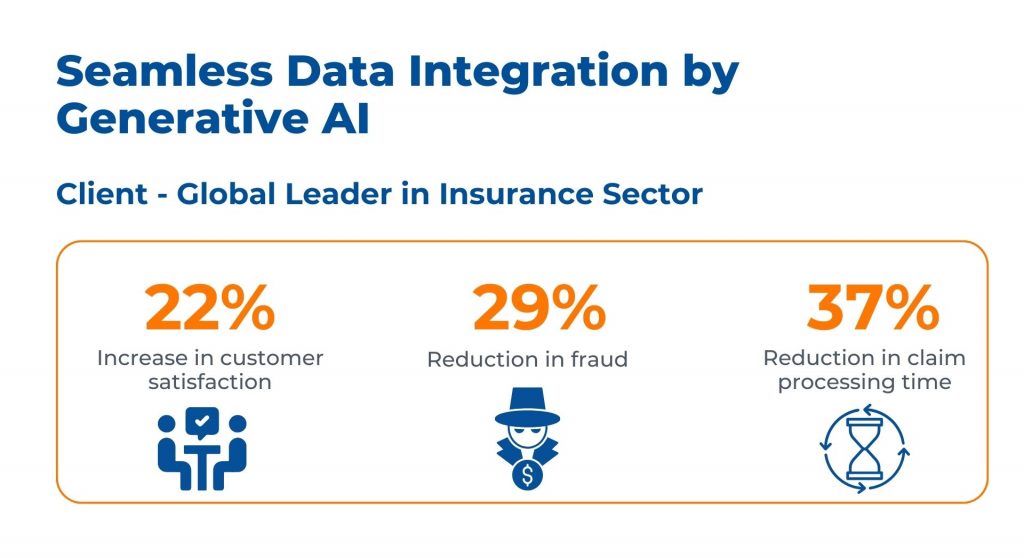

Kanerika’s work with a global leader in the insurance sector illustrates this impact vividly.

We tackled challenges like time-consuming manual data integration and the complexity added by emerging data sources like wearable devices and electronic health records.

The solution? An innovative approach utilizing Kafka for automated data extraction, Talend for data standardization, and Gen AI models built with TensorFlow and PyTorch for seamless data integration.

The outcomes were remarkable – a 22% increase in customer satisfaction, a 29% reduction in fraud, and a 37% decrease in claim processing time.

Use Case 5: Cost Reduction Initiatives

A primary business goal of any organization is to cut down its costs without compromising the quality of work.

The integration of big data technology in insurance is a powerful tool in this regard.

One of the primary areas where big data in insurance proves invaluable is in the handling of claims and administration. The traditional manual processes are not just time-consuming but also prone to errors, leading to financial losses and inefficiencies.

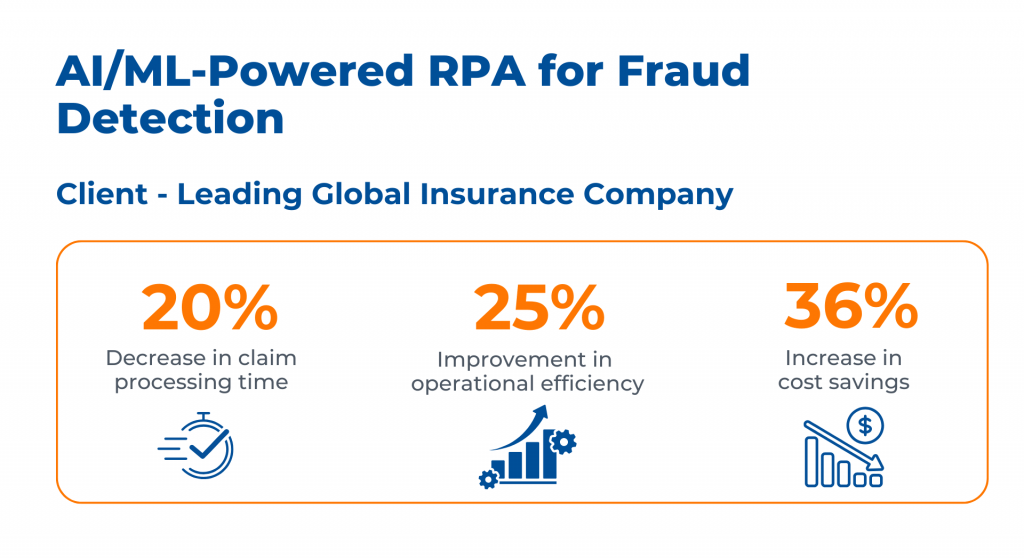

An example of this in action is our work at Kanerika with a leading global insurance company.

We faced challenges such as manual processes in insurance claims leading to inefficiencies and financial losses, a lack of robust fraud detection systems, and inflexible processes hindering effective data analysis.

Our solution was to implement AI/ML-driven Robotic Process Automation (RPA) for enhanced fraud detection in insurance claims, significantly reducing fraud-related financial losses.

The outcomes were significant: a 20% reduction in claim processing time, a 25% improvement in operational efficiency, and a 36% increase in cost savings.

Use Case 6: Personalization of Services and Pricing Models

The insurance industry is shifting its focus from offering generic services to tailoring experiences and products to the unique needs of each customer.

What’s the secret?

Analysis of unstructured data, which allows companies to craft services that resonate more closely with individual customer profiles.

Take, for instance, life insurance. By leveraging big data, these policies can now be customized by taking into account a customer’s medical history and lifestyle habits, tracked through activity monitors.

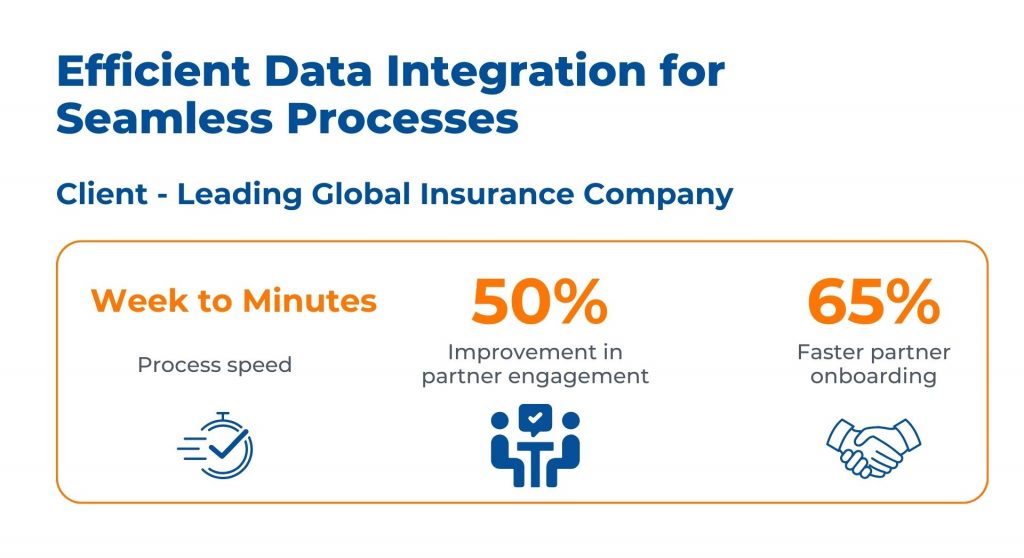

A practical example of this approach is seen in our work at Kanerika with a leading global insurance company.

We faced challenges such as manual processing of claim files leading to delays and errors, a lack of standardized data formats, and reactive processes causing bottlenecks.

Our solution involved automating bordereau processing, which included data transformations to enhance efficiency and claim accuracy.

The outcomes of these initiatives were transformative: the processing speed escalated from weeks to minutes, partner engagement improved by 50%, and partner onboarding became 65% faster.

Use Case 7: Optimizing Internal Processes for Efficiency

A study by McKinsey and Company highlighted that automation could save up to 43% of the time for insurance employees.

This is where the power of big data in insurance becomes evident. Big data technology in the insurance industry enables rapid processing of customer profiles. Insurers can swiftly access a customer’s history, determine the appropriate risk class, devise a suitable pricing model, automate claim processing, and thus, deliver superior services.

An example of such optimization is evident in our work at Kanerika with a leading insurance company. We faced challenges with diversely formatted data from over 280 Managing General Underwriters (MGUs), which included information on policies, premiums, claims, and other services.

Our solution was to implement an AI/ML algorithm for auto-detecting data mapping from various sources to insurance systems.

The outcomes were significant, with 94% accuracy in AI-based mapping and automation, a 30% reduction in new onboarding processes, and the ability to support 38% more business with fewer staff.
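The general idea of automatically mapping diversely formatted source columns onto a target schema can be sketched with plain string similarity. This is only an illustration using Python’s standard `difflib` module, not the AI/ML algorithm described above; the target field names and the similarity cutoff are hypothetical.

```python
from difflib import get_close_matches

# Hypothetical target schema of the insurer's internal system.
TARGET_FIELDS = ["policy_number", "premium_amount", "claim_amount", "effective_date"]


def normalize(name: str) -> str:
    """Lowercase and unify separators so 'Policy No.' resembles 'policy_no'."""
    cleaned = name.strip().lower().replace(".", "")
    return "_".join(cleaned.replace("-", " ").split())


def suggest_mapping(source_columns, cutoff=0.6):
    """Map each source column to the closest target field, or None if unsure."""
    mapping = {}
    for col in source_columns:
        matches = get_close_matches(normalize(col), TARGET_FIELDS, n=1, cutoff=cutoff)
        mapping[col] = matches[0] if matches else None
    return mapping


mapping = suggest_mapping(["Policy Number", "Premium Amt", "Claim-Amount", "Broker Notes"])
print(mapping)
```

Columns mapped to `None` would be left for a human to review, which keeps the automation from guessing when no target field is a close match.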

Common Pitfalls to Avoid While Using Big Data in Insurance

While big data holds immense potential for revolutionizing the insurance industry, it’s essential to navigate potential pitfalls to maximize its benefits. Here are some common pitfalls to avoid while using big data in insurance:

1. Overreliance on Data

One of the significant pitfalls of utilizing big data in insurance is relying solely on data without considering other factors.

While data-driven insights are invaluable, it’s crucial to analyze them along with human expertise and industry knowledge. Failing to do so may result in overlooking critical nuances and making flawed decisions.

2. Lack of Data Security Measures

Failing to implement robust security measures can expose insurers to significant risks, including data breaches and regulatory penalties.

Insurance companies must invest in encryption, access controls, and regular security audits to safeguard customer data and maintain trust.

Data security has become a priority for insurers due to the growing risk of security breaches.

3. Ignoring Data Quality Issues

The quality of the data used in big data analytics directly impacts the accuracy and reliability of insights.

Overlooking data quality issues, such as inaccuracies, incompleteness, or inconsistency, can lead to incorrect conclusions and flawed decision-making.

Regular data cleansing, validation, and maintenance processes are essential to ensuring data integrity and validity.
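A validation step of this kind can be sketched as a function that checks each record for completeness, accuracy, and consistency. The record layout and rules below are assumptions made for illustration; real pipelines would add many more checks and typically run them inside a dedicated data quality tool.

```python
from datetime import date


def validate_claim(record: dict) -> list:
    """Return a list of data quality problems found in one claim record."""
    problems = []
    # Completeness: required fields must be present and non-empty.
    for field in ("claim_id", "policy_id", "amount", "loss_date"):
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    # Accuracy: amounts must be positive numbers.
    amount = record.get("amount")
    if isinstance(amount, (int, float)) and amount <= 0:
        problems.append("non-positive amount")
    # Consistency: a loss cannot be dated in the future.
    loss_date = record.get("loss_date")
    if isinstance(loss_date, date) and loss_date > date.today():
        problems.append("loss_date in the future")
    return problems


# Hypothetical records: the second has an empty policy ID and a negative amount.
records = [
    {"claim_id": "CL-1", "policy_id": "P-9", "amount": 1800.0, "loss_date": date(2023, 5, 2)},
    {"claim_id": "CL-2", "policy_id": "", "amount": -50, "loss_date": date(2023, 6, 1)},
]
report = {r["claim_id"]: validate_claim(r) for r in records}
print(report)
```

Records with a non-empty problem list can be quarantined or sent back to the source before they reach any analytics model.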

4. Neglecting Ethical and Privacy Considerations

As insurers gather and analyze vast amounts of personal data, it’s important to follow ethical standards and respect customer privacy. Neglecting ethical and privacy considerations can damage reputation and trust, leading to regulatory scrutiny and legal repercussions.

Insurers must strictly follow data protection regulations, obtain consent for data collection, and transparently communicate their data usage policies to customers.

5. Failure to Adapt to Changing Regulatory Landscape

Failure to stay abreast of regulatory changes and adapt compliance practices accordingly can expose insurers to compliance risks and penalties.

It’s essential to establish stringent compliance frameworks, conduct regular audits, and engage with regulatory authorities to ensure adherence to evolving regulatory requirements.

Future Trends for Big Data in Insurance in 2024

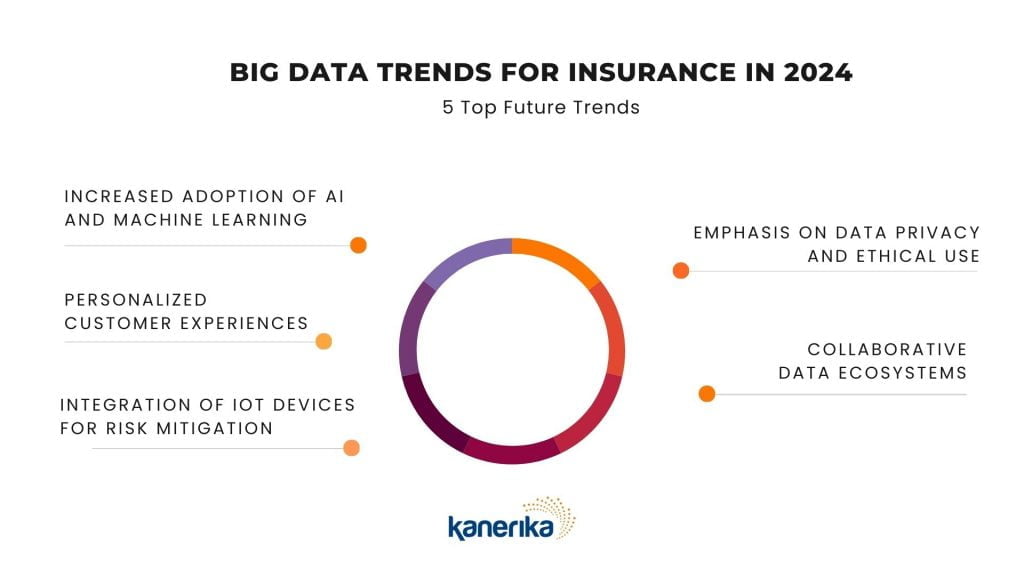

As we look ahead to 2024, several trends are poised to shape the future of big data in insurance:

Trend 1 – Increased Adoption of AI and Machine Learning

Artificial intelligence (AI) and machine learning (ML) technologies are expected to play a more prominent role in insurance operations.

Insurers will leverage AI and ML algorithms to analyze vast amounts of data quickly.

This will help them identify patterns and automate processes such as underwriting, claims processing, and risk assessment.

This increased adoption of AI and ML will enhance efficiency, accuracy, and decision-making capabilities across the insurance value chain.

Trend 2 – Personalized Customer Experiences

With the abundance of data available, insurance companies will increasingly focus on delivering personalized customer experiences.

By leveraging big data analytics, insurers can gain deeper insights into customer preferences, behaviors, and life events. From personalized pricing models to proactive risk management solutions, insurers will strive to enhance customer satisfaction and loyalty through personalized experiences.

With growing competition, personalized customer experiences help insurers stand out and attract new customers.

Trend 3 – Integration of IoT Devices for Risk Mitigation

The Internet of Things (IoT) presents vast opportunities for insurers to gather real-time data and mitigate risks effectively.

In 2024, we can expect to see a surge in the integration of IoT devices, such as telematics sensors, smart home devices, and wearable technologies, into insurance offerings.

Insurers will leverage data from IoT devices to assess risk more accurately, offer usage-based insurance policies, and provide proactive risk prevention services.
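A usage-based policy of this kind can be sketched as a base premium adjusted by telematics signals. The rating factors, caps, and field names below are illustrative assumptions, not an actuarial model or any insurer’s actual pricing.

```python
from dataclasses import dataclass


@dataclass
class MonthlyTelematics:
    """Hypothetical monthly summary from an in-car telematics sensor."""
    km_driven: float
    harsh_brake_events: int
    night_km: float  # distance driven during hours assumed to be higher risk


def usage_based_premium(base_monthly: float, t: MonthlyTelematics) -> float:
    """Adjust a base premium using assumed, illustrative rating factors."""
    # Mileage factor: 1.0 at an assumed 1,000 km/month, bounded to 0.7..1.5.
    mileage_factor = max(0.7, min(t.km_driven / 1000, 1.5))
    # Harsh braking per 100 km adds up to a 30% surcharge.
    brakes_per_100km = t.harsh_brake_events / max(t.km_driven, 1) * 100
    braking_factor = 1 + min(brakes_per_100km * 0.05, 0.30)
    # Share of night driving adds up to a 10% surcharge.
    night_share = t.night_km / max(t.km_driven, 1)
    night_factor = 1 + 0.10 * min(night_share, 1.0)
    return round(base_monthly * mileage_factor * braking_factor * night_factor, 2)


careful = MonthlyTelematics(km_driven=600, harsh_brake_events=1, night_km=20)
risky = MonthlyTelematics(km_driven=1800, harsh_brake_events=40, night_km=700)
print(usage_based_premium(80.0, careful), usage_based_premium(80.0, risky))
```

Bounding each factor keeps the price within a predictable range, which also makes the adjustment easier to explain to customers.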

Trend 4 – Emphasis on Data Privacy and Ethical Use

As concerns about data privacy and the ethical use of data continue to rise, insurance companies will place greater emphasis on ensuring compliance with regulatory requirements and ethical standards.

In 2024, insurers will invest in robust data privacy frameworks, transparent data governance practices, and ethical AI principles to safeguard customer data and maintain trust.

Trend 5 – Collaborative Data Ecosystems

Collaboration and data sharing will become more prevalent in the insurance industry as insurers recognize the value of utilizing external data sources and partnerships.

In 2024, we can expect to see the emergence of collaborative data ecosystems, where insurers, technology providers, and other stakeholders exchange data and insights to drive innovation and address industry challenges collaboratively.

Kanerika: Your Partner in Data Implementation

Big data in insurance benefits both customers and insurers.

If you are an insurance company trying to reduce fraud and costs to offer better services, Kanerika stands as your ideal partner.

Whether it’s understanding policy trends, attracting customers, building loyalty, or adapting to societal needs, Kanerika’s team employs the latest in AI/ML, big data processing, and cloud-based integration.

Let’s connect today for a free consultation!

FAQs

What are big data use cases?

Big data’s power lies in tackling massive datasets to reveal hidden patterns. Examples range from personalized recommendations (like Netflix suggesting shows) to predicting equipment failure (preventative maintenance in factories). Essentially, anywhere complex, large-scale data can be analyzed for actionable insights, you’ll find big data in action. This includes improving healthcare, optimizing supply chains, and even fighting fraud.

What are the five applications of big data?

Big data’s impact spans various sectors. First, it dramatically improves customer understanding through personalized experiences and targeted marketing. Second, it optimizes operations in areas like supply chain management. Third, it strengthens fraud detection by identifying crucial patterns. Fourth, it fuels scientific discovery by analyzing vast datasets impossible to process manually. Fifth, it enhances risk assessment and predictive modeling in finance and healthcare.

What is the current use of big data?

Big data’s current uses are incredibly diverse, fundamentally changing how we operate across sectors. It fuels everything from personalized recommendations you see online to sophisticated fraud detection systems in finance. Ultimately, it allows for more accurate predictions and better decision-making based on massive datasets previously impossible to analyze. This leads to more efficient processes and entirely new opportunities across many industries.

Is Netflix an example of big data?

Yes, Netflix is a prime example of a big data company. They collect massive amounts of viewing data – what you watch, when, where, and even how you interact with the interface – all analyzed to personalize recommendations and improve their service. This constant stream of varied data requires sophisticated tools and analytics to manage effectively. Essentially, their business model *is* big data.

Where is big data used?

Big data’s applications are incredibly broad. Essentially, anywhere massive datasets need analyzing for insights, it’s used. Think personalized recommendations on Netflix, fraud detection in finance, or optimizing traffic flow in smart cities. Its power lies in uncovering hidden patterns and predicting future trends.

What are data use cases?

Data use cases are specific examples of how you can apply data to solve problems or create value. They demonstrate the practical application of data analysis, showing how insights derived from data translate into tangible benefits. Think of them as real-world scenarios highlighting the power of data – for example, improving customer service with sentiment analysis or optimizing logistics with predictive modeling. They’re essentially proof points for the value of your data.

How does Amazon use big data?

Amazon leverages massive datasets to personalize your shopping experience, predicting what you might want next and tailoring recommendations. This data also fuels its logistics, optimizing delivery routes and warehouse efficiency for faster shipping. Crucially, it informs pricing strategies and new product development, driving competitiveness and innovation. Essentially, big data is the engine powering Amazon’s entire operation.

Who uses big data and why?

Big data is used by anyone who needs to analyze massive datasets for insights. Businesses leverage it for smarter marketing, improved operations, and better customer understanding. Researchers use it for scientific breakthroughs and societal analysis. Essentially, anyone seeking to extract actionable intelligence from vast amounts of information uses big data.

What are the 5 V's of big data?

Big data’s “5 Vs” describe its defining characteristics: Volume refers to the sheer amount of data; Velocity highlights its rapid creation and processing speed; Variety encompasses the diverse data formats (text, images, etc.); Veracity stresses the importance of data accuracy and reliability; and Value represents the ultimate goal – extracting meaningful insights for decision-making. Understanding these Vs is key to effectively managing and leveraging big data.

How does Starbucks use big data?

Starbucks leverages big data to personalize the customer experience, predicting preferences and tailoring offers through their mobile app. They analyze purchase history and location data to optimize store operations, staffing, and product placement. This allows them to understand customer behaviour on a massive scale, improving efficiency and boosting sales. Ultimately, it fuels a more targeted and rewarding relationship with each customer.

What are big data types?

Big data isn’t just *big* – it’s diverse. We categorize it by its structure: structured data fits neatly into rows and columns (like databases), semi-structured has some organization but lacks rigid format (like JSON), and unstructured is raw and unorganized (like text or images). Understanding these types guides how we process and analyze this massive information.

How does Spotify use big data?

Spotify leverages massive datasets to personalize your listening experience. This involves analyzing your listening habits, discovering trends across millions of users, and using this information to suggest new music and podcasts tailored just for you. It also helps them improve their algorithms, curate playlists, and even inform artist development strategies. Ultimately, big data fuels Spotify’s core functionality and competitive edge.

Is AWS big data?

No, AWS isn’t *itself* big data; it’s a platform *hosting* big data. Think of it as a vast, adaptable warehouse: you can store and process your own big data within it using various AWS services like EMR, Redshift, or S3. AWS provides the tools, but the data itself and its size determine whether it constitutes “big data.”

What are the 3 V's of big data?

Big data’s core characteristics are best understood through its three Vs: Volume refers to the sheer scale of data; Velocity highlights its rapid generation and processing speed; and Variety emphasizes the diverse formats – from structured databases to unstructured social media posts. These three interwoven aspects define the challenges and opportunities presented by big data.

How does Netflix use big data?

Netflix leverages massive datasets to personalize your viewing experience, predicting what shows you’ll like based on your watch history and preferences of similar users. This data also informs content creation, helping them decide what types of shows to produce and even which actors to cast. Essentially, big data allows them to optimize everything from recommendations to original programming. It’s all about understanding viewer behavior to maximize engagement and subscriber retention.