Parameter-efficient Fine-tuning (PEFT) is a family of techniques, most widely used in NLP, for adapting pre-trained models to specific tasks without updating all of the model’s parameters. This approach cuts resource usage while typically delivering accuracy close to that of full fine-tuning on the targeted applications.

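Fully fine-tuning an LLM with billions of parameters requires enough accelerator memory to hold the weights, gradients, and optimizer states for every parameter, plus a separate full-size copy of the model for each task. This makes it both costly and resource-intensive. Parameter-Efficient Fine-Tuning (PEFT) is a game-changing approach that reduces these demands while largely maintaining performance.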

Understanding Parameter-efficient Fine-tuning is essential for anyone looking to optimize their Gen AI strategy. PEFT approaches fine-tune only a small number of (often newly added) parameters while freezing most parameters of the pre-trained LLM. This greatly decreases computational and storage costs, enables fine-tuning with limited compute, and reduces the risk of catastrophic forgetting in large models.

What is Parameter-efficient Fine-tuning (PEFT)?

If you’re familiar with transfer learning, you know that it’s a powerful technique that allows you to leverage pre-trained models to solve a wide range of downstream tasks. However, fully fine-tuning these models can be computationally expensive, especially for large models such as BERT (110 to 340 million parameters) or GPT-3 (175 billion parameters).

This is where Parameter-efficient Fine-tuning (PEFT) comes in. PEFT is a set of techniques for fine-tuning large pre-trained models by training only a small subset of parameters, or a small number of newly added ones, while keeping most of the original pre-trained weights fixed. In many cases this achieves performance comparable to full fine-tuning while significantly reducing computational requirements.

PEFT approaches are beneficial when you have limited computational resources or when you need to fine-tune a model on a specific task quickly. With PEFT, you can fine-tune a model using only a fraction of the resources required for full fine-tuning, making it a cost-effective and efficient solution.

PEFT has become an increasingly popular technique in the field of Natural Language Processing (NLP), where large pre-trained models like GPT-3 and BERT are commonly used. With PEFT, researchers and practitioners can fine-tune these models on specific NLP tasks with far fewer computational resources than full fine-tuning requires.

Achieve 10x Business Growth with AI-driven Solutions

Partner with Kanerika for Expert AI implementation Services

What are the Differences Between Fine-tuning and PEFT?

| Criteria | Fine-Tuning | Parameter-Efficient Fine-Tuning (PEFT) |
|---|---|---|
| Parameter Updates | Updates most or all parameters of the model. | Updates a small subset of parameters or adds a few trainable layers. |
| Computational Cost | High; requires significant computational resources. | Lower; designed to minimize computational demands. |
| Storage Requirements | High; each fine-tuned model variant requires full storage of all parameters. | Reduced; each task stores only the small set of trained parameters alongside one shared base model. |
| Scalability | Less scalable due to high resource demands. | More scalable, especially on consumer-grade hardware. |
| Model Size | The fine-tuned model is the same size as the pre-trained model, and every task needs its own full-size copy. | The task-specific checkpoint holds only the trained parameters, often a few percent of the model or less, and is loaded on top of the shared base model. |
| Performance | Can achieve higher performance on the new task, especially with large datasets. | May achieve slightly lower performance than full fine-tuning, but is often comparable. |
| Flexibility | Less flexible; each model version is tailored to a specific task. | More flexible; allows a base model to adapt to multiple tasks with minimal changes. |
| Suitability | Ideal for tasks with large amounts of task-specific data. | Ideal for tasks with limited data or resource constraints (e.g., deployment on edge devices). |
| Data Requirements | Often requires substantial data for effective training. | Can be effective with less data, improving performance in low-data regimes. |
| Generalization | Risk of overfitting if not properly managed. | Often less prone to overfitting on small datasets because fewer parameters are tuned, though it can still overfit. |
| Deployment | Deploying multiple fine-tuned models is resource-intensive. | Easier deployment, as the base model can be augmented with small, task-specific adjustments. |

Large Language Models and PEFT

Large Language Models (LLMs) are models with hundreds of millions to hundreds of billions of parameters. They are pre-trained on vast amounts of text data and can be fine-tuned for specific NLP tasks with a relatively small amount of task-specific data. However, training and fine-tuning LLMs can be computationally expensive and require a significant amount of memory.

PEFT, or Parameter-efficient Fine-tuning, is designed to address these issues. Because it trains only a small number of extra parameters while keeping most of the pre-trained LLM frozen, it greatly decreases computational and storage costs. It also reduces the risk of catastrophic forgetting, a phenomenon where the model forgets previously learned information when fine-tuned on a new task.

Role of Pre-Trained Models

Pre-trained models play a crucial role in PEFT. They provide a starting point for fine-tuning and allow for efficient transfer learning. Pre-trained models are trained on large amounts of text data, and their parameters are optimized to capture general language patterns. This pretraining enables the model to perform well on a range of NLP tasks without the need for extensive task-specific training.

PEFT builds on this pretraining by fine-tuning the model on a specific task using a small amount of task-specific data. This fine-tuning process allows the model to learn task-specific information while retaining its general language understanding.

Parameters

PEFT’s parameter efficiency comes from freezing most of the pre-trained model’s parameters and fine-tuning only a small number of extra parameters. Because gradients and optimizer states are only needed for the trainable parameters, this greatly reduces the memory required for fine-tuning and makes it possible to fine-tune large models on smaller hardware.
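To see where the savings come from, here is a back-of-the-envelope estimate in plain Python. It is a rough sketch under loudly stated assumptions: every value is stored in fp32 (4 bytes), the optimizer is Adam (two extra values per trainable parameter), activations and framework overhead are ignored, and the 7-billion-parameter model with 0.1% trainable parameters is hypothetical.

```python
# Back-of-the-envelope training-memory estimate (illustrative only).
# Assumptions: fp32 values (4 bytes each), Adam optimizer (two moment
# estimates per trainable parameter); activations and overhead ignored.

BYTES_PER_VALUE = 4  # fp32


def training_memory_bytes(total_params: int, trainable_params: int) -> int:
    """Frozen and trainable weights must all be held in memory, but gradients
    and Adam moments are only needed for the trainable parameters."""
    weights = total_params * BYTES_PER_VALUE
    gradients = trainable_params * BYTES_PER_VALUE
    adam_moments = trainable_params * 2 * BYTES_PER_VALUE
    return weights + gradients + adam_moments


TOTAL = 7_000_000_000  # hypothetical 7B-parameter model

full_ft = training_memory_bytes(TOTAL, TOTAL)        # every weight trained
peft = training_memory_bytes(TOTAL, TOTAL // 1000)   # 0.1% trainable

print(f"Full fine-tuning:      {full_ft / 1e9:.1f} GB")  # 112.0 GB
print(f"PEFT (0.1% trainable): {peft / 1e9:.1f} GB")     # 28.1 GB
```

The frozen weights still have to fit in memory; under these assumptions the saving comes entirely from not storing gradients and optimizer states for them.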

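The right number of extra parameters depends on the task and on the amount of task-specific data available. As a rough guide, tasks that differ strongly from the pre-training data, or that come with plenty of training data, can benefit from a larger budget (for example, a higher LoRA rank or larger adapters), while small datasets are usually better served by a small budget that is less likely to overfit.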

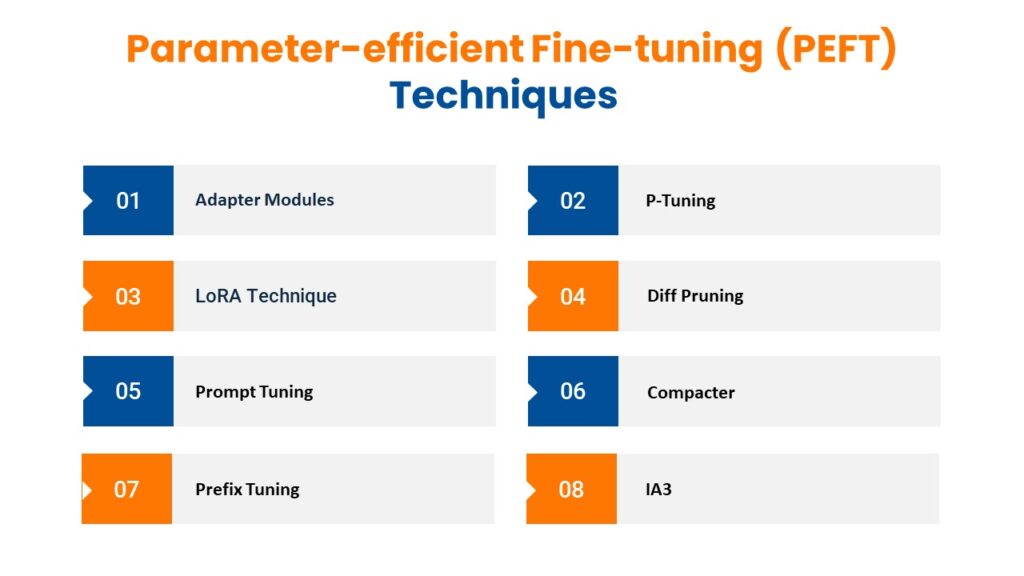

8 Key Parameter-efficient Fine-tuning Techniques

Fine-tuning pre-trained models allows them to excel at specific tasks. Here are some popular methods, along with their strengths:

1. Adapter Modules

Imagine attaching task-specific modules onto a pre-trained LLM. That’s the essence of adapter modules. These lightweight modules are trained on the new task data without modifying the core LLM parameters. This approach is particularly effective for tasks requiring moderate additional context, such as sentiment analysis or named entity recognition (NER). Popular examples include:

- Conditional Adapter Network (CAN): Introduces a single adapter module that adapts to different tasks based on a task-conditioning input.

- Adapter-Tuning: Employs multiple adapter modules placed strategically throughout the LLM architecture to capture task-specific information.
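A bottleneck adapter of the kind used in Adapter-Tuning can be sketched in a few lines of plain Python. This is an illustrative sketch, not a library implementation: the dimensions, the ReLU nonlinearity, the placeholder initial values, and the zero-initialized up-projection (one common choice that makes the adapter start out as an identity function) are all assumptions.

```python
def matvec(W, x):
    """Multiply a matrix W (list of rows) by a vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


class BottleneckAdapter:
    """Down-project d -> m, apply a nonlinearity, up-project m -> d,
    and add the result back to the input (residual connection).
    Only these weights are trained; the surrounding LLM layer stays frozen."""

    def __init__(self, d_model, bottleneck):
        # Small deterministic values stand in for a random initialization.
        self.W_down = [[0.01 * ((i + j) % 3 - 1) for j in range(d_model)]
                       for i in range(bottleneck)]
        self.b_down = [0.0] * bottleneck
        # Zero up-projection: adapter(h) == h before any training.
        self.W_up = [[0.0] * bottleneck for _ in range(d_model)]
        self.b_up = [0.0] * d_model

    def num_params(self):
        return (len(self.W_down) * len(self.W_down[0]) + len(self.b_down)
                + len(self.W_up) * len(self.W_up[0]) + len(self.b_up))

    def __call__(self, h):
        z = [max(0.0, v + b) for v, b in zip(matvec(self.W_down, h), self.b_down)]
        up = [v + b for v, b in zip(matvec(self.W_up, z), self.b_up)]
        return [hi + ui for hi, ui in zip(h, up)]


adapter = BottleneckAdapter(d_model=8, bottleneck=2)
hidden = [0.1 * k for k in range(8)]  # output of a frozen transformer sub-layer
print(adapter(hidden) == hidden)       # True: identity at initialization
print(adapter.num_params())            # 42 = 2*8*2 + 8 + 2
```

The residual connection plus the zero up-projection means inserting the adapter does not disturb the pre-trained model until training moves the adapter weights.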

2. LoRA Techniques

LoRA (Low-Rank Adaptation) modifies a pre-trained model by introducing low-rank matrices that approximate the updates to the model’s weights. During fine-tuning, these low-rank matrices are adjusted instead of the full weight matrices. This reduces the number of trainable parameters and can significantly cut down on memory usage and computational costs. Two ideas are central to this family:

- Low-Rank Update: Rather than decomposing the existing weight matrices, LoRA keeps each weight matrix W frozen and learns its update as the product of two small matrices, ΔW = BA, whose rank r is much smaller than the dimensions of W.

- Randomized Parameter Sharing: Variants such as VeRA share a single pair of frozen, randomly initialized low-rank matrices across all layers and train only small per-layer scaling vectors, further reducing the number of unique trainable parameters.
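The low-rank update can be written as h = W x + (α / r) · B A x, where W (d_out × d_in) is frozen, A (r × d_in) and B (d_out × r) are trained, and α is a scaling hyperparameter. The plain-Python sketch below follows that formulation as described in the original LoRA paper (in practice B starts at zero, so training begins from the pre-trained behavior); the tiny matrices and the 4096 × 4096, rank-8 comparison are made-up illustrations.

```python
def matvec(W, x):
    """Multiply a matrix W (list of rows) by a vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def matmul(P, Q):
    """Multiply matrices P (n x k) and Q (k x m)."""
    return [[sum(p * q for p, q in zip(row, col)) for col in zip(*Q)] for row in P]


def lora_forward(W, A, B, x, alpha, r):
    """h = W x + (alpha / r) * B (A x); W is frozen, only A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / r) * u for b, u in zip(base, update)]


def merge(W, A, B, alpha, r):
    """Fold the low-rank update into W for inference: W' = W + (alpha / r) * B A."""
    BA = matmul(B, A)
    return [[w + (alpha / r) * d for w, d in zip(rw, rd)] for rw, rd in zip(W, BA)]


def lora_param_count(d_out, d_in, r):
    """Trainable values in A (r x d_in) and B (d_out x r)."""
    return r * (d_in + d_out)


W = [[1.0, 2.0], [3.0, 4.0]]  # frozen pre-trained weight
A = [[1.0, 0.0]]              # r x d_in, with r = 1
B = [[0.5], [0.0]]            # d_out x r (values as if after some training)
x = [1.0, 1.0]

print(lora_forward(W, A, B, x, alpha=2, r=1))         # [4.0, 7.0]
print(matvec(merge(W, A, B, alpha=2, r=1), x))        # [4.0, 7.0]
print(4096 * 4096, lora_param_count(4096, 4096, 8))   # 16777216 65536
```

Because the update can be merged into W after training, a merged LoRA model runs without any extra inference latency; for a 4096 × 4096 matrix at rank 8, the trainable part is under 0.4% of the full matrix.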

3. Prompt Tuning

Prompt tuning prepends a short sequence of trainable embedding vectors (a “soft prompt”) to the embedded input of a model. The model then learns to perform a specific task by adjusting only these vectors while keeping the main model parameters unchanged. This technique leverages the fact that large language models are highly sensitive to their input, so a learned continuous prompt can steer the model’s behavior much as a carefully written text prompt would. It is highly efficient because it tunes only a tiny fraction of the total parameters.
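Mechanically, prompt tuning just prepends trainable vectors to the embedded input. A minimal plain-Python sketch, assuming a toy four-word vocabulary, 4-dimensional embeddings, and three virtual tokens (all hypothetical); a real implementation would also backpropagate the task loss into `soft_prompt` and nowhere else.

```python
import random

random.seed(0)
EMBED_DIM = 4
VOCAB = {"the": 0, "movie": 1, "was": 2, "great": 3}

# Frozen embedding table of the pre-trained model (random values for illustration).
embedding_table = [[random.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in VOCAB]

# Trainable soft prompt: the only parameters updated during prompt tuning.
NUM_VIRTUAL_TOKENS = 3
soft_prompt = [[random.uniform(-0.5, 0.5) for _ in range(EMBED_DIM)]
               for _ in range(NUM_VIRTUAL_TOKENS)]


def build_model_input(tokens):
    """Prepend the soft prompt to the (frozen) embeddings of the real tokens."""
    token_embeddings = [embedding_table[VOCAB[t]] for t in tokens]
    return soft_prompt + token_embeddings


inputs = build_model_input(["the", "movie", "was", "great"])
print(len(inputs))                     # 7 vectors: 3 virtual + 4 real tokens
print(NUM_VIRTUAL_TOKENS * EMBED_DIM)  # 12 trainable values in total
```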

4. Prefix Tuning

Prefix tuning also adds trainable vectors (prefixes) in front of the sequence, but in a different place. Prompt tuning adds its vectors only to the input embeddings, whereas prefix tuning prepends trainable key and value vectors to the attention computation in every transformer layer. This gives the learned prefix more influence over the model’s internal computations while the pre-trained weights remain unchanged.
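The difference is easiest to see inside a single attention layer: prefix tuning concatenates trainable key and value vectors in front of the keys and values computed from the real tokens, and it does so at every layer. The plain-Python sketch below shows one head of one layer; the vectors are hypothetical, and the reparameterization the original paper used to stabilize training is omitted.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in keys])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]


def attention_with_prefix(query, keys, values, prefix_keys, prefix_values):
    """Prefix tuning: trainable prefix keys/values are prepended at this layer,
    while keys/values of the real tokens come from the frozen projections."""
    return attention(query, prefix_keys + keys, prefix_values + values)


query = [1.0, 0.0]
keys, values = [[0.0, 0.0]], [[1.0, 1.0]]                # from the frozen model
prefix_keys, prefix_values = [[0.0, 0.0]], [[3.0, 3.0]]  # trainable

print(attention(query, keys, values))  # [1.0, 1.0]
print(attention_with_prefix(query, keys, values, prefix_keys, prefix_values))  # [2.0, 2.0]
```

The real tokens’ keys and values are untouched; the prefix changes the output only by competing for attention weight.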

5. P-Tuning

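P-tuning also learns continuous prompt embeddings, but instead of optimizing them directly it generates them with a small trainable prompt encoder (an LSTM followed by an MLP in the original work), and its virtual tokens can be placed among the input tokens rather than only at the start. P-tuning v2 extends the idea by adding trainable prompts at every layer, similar to prefix tuning. In its parameter-efficient form the pre-trained weights stay frozen; the method was originally evaluated on natural language understanding tasks such as knowledge probing and SuperGLUE.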
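The prompt-encoder idea can be sketched as follows. This is a simplified, hypothetical version in plain Python: it uses a two-layer MLP as the encoder (not the LSTM-plus-MLP of the original work), random initial values, and toy sizes. During training, the task loss would update `prompt_inputs`, `W1`, and `W2` while the LLM stays frozen.

```python
import random

random.seed(0)
EMBED_DIM, HIDDEN, NUM_VIRTUAL = 4, 8, 3


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def rand_matrix(rows, cols):
    return [[random.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


# Trainable parameters: one input vector per virtual token plus the encoder MLP.
prompt_inputs = rand_matrix(NUM_VIRTUAL, EMBED_DIM)
W1 = rand_matrix(HIDDEN, EMBED_DIM)
W2 = rand_matrix(EMBED_DIM, HIDDEN)


def encode_prompts():
    """Map each trainable input through the MLP to get a virtual-token embedding."""
    outputs = []
    for p in prompt_inputs:
        hidden = [max(0.0, dot(row, p)) for row in W1]   # ReLU layer
        outputs.append([dot(row, hidden) for row in W2])  # linear output layer
    return outputs


virtual_tokens = encode_prompts()  # placed among the frozen input embeddings
trainable = NUM_VIRTUAL * EMBED_DIM + HIDDEN * EMBED_DIM + EMBED_DIM * HIDDEN
print(len(virtual_tokens), len(virtual_tokens[0]), trainable)  # 3 4 76
```

Since the encoder’s outputs do not depend on the input text, they can be computed once after training and stored, so the encoder is not needed at inference time.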

6. Diff Pruning

Diff Pruning learns a task-specific “diff” vector δ that is added to the frozen pre-trained parameters, so the task model uses θ_task = θ_pretrained + δ. A sparsity penalty (a differentiable relaxation of the L0 norm) pushes most entries of δ to zero during training, so only a small fraction of parameters actually change and only those non-zero entries need to be stored per task.
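The storage side of diff pruning is simple: keep the pre-trained vector θ and store only the non-zero entries of the task diff δ. In the plain-Python sketch below, a magnitude-based top-k selection stands in for the learned sparsity (the actual method learns it during training with the relaxed L0 penalty), and all numbers are hypothetical.

```python
def sparsify(dense_diff, keep):
    """Keep the `keep` largest-magnitude entries of a dense diff as {index: value}.
    (A simple stand-in for the sparsity that diff pruning learns during training.)"""
    top = sorted(range(len(dense_diff)), key=lambda i: abs(dense_diff[i]), reverse=True)[:keep]
    return {i: dense_diff[i] for i in sorted(top)}


def apply_diff(pretrained, sparse_diff):
    """Task parameters = pre-trained parameters + sparse diff; the base is not modified."""
    task_params = list(pretrained)
    for i, delta in sparse_diff.items():
        task_params[i] += delta
    return task_params


theta = [1.0, 2.0, 3.0, 4.0]    # shared pre-trained parameters (frozen)
dense = [0.0, 0.5, -0.1, -2.0]  # hypothetical diff before sparsification
diff = sparsify(dense, keep=2)

print(diff)                     # {1: 0.5, 3: -2.0}
print(apply_diff(theta, diff))  # [1.0, 2.5, 3.0, 2.0]
print(theta)                    # [1.0, 2.0, 3.0, 4.0] (unchanged)
```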

7. Compacter

Compacter extends the adapter approach by building each adapter weight matrix as a sum of Kronecker products, a construction known as a parameterized hypercomplex multiplication (PHM) layer. The small matrices on one side of each product are shared across all adapter layers, and the matrices on the other side are low-rank, which reduces the adapter’s parameter count well below that of a standard adapter.
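The core construction can be sketched in plain Python: a PHM weight is W = Σᵢ Aᵢ ⊗ Bᵢ, and Compacter makes each Bᵢ a rank-one outer product sᵢtᵢᵀ. The tiny matrices below are hypothetical; the parameter count at the end assumes a 768-dimensional model, a bottleneck of 48, and n = 4, and ignores biases and the shared Aᵢ.

```python
def kron(A, B):
    """Kronecker product of matrices A and B (lists of rows)."""
    return [[a * b for a in row_a for b in row_b] for row_a in A for row_b in B]


def outer(s, t):
    return [[si * tj for tj in t] for si in s]


def add(M, N):
    return [[m + n for m, n in zip(rm, rn)] for rm, rn in zip(M, N)]


def phm_weight(A_list, B_list):
    """W = sum_i kron(A_i, B_i)."""
    W = kron(A_list[0], B_list[0])
    for A, B in zip(A_list[1:], B_list[1:]):
        W = add(W, kron(A, B))
    return W


# n = 2: the A_i are shared across adapter layers; each B_i = s_i t_i^T is rank one.
A_shared = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]]]
B_layer = [outer([1.0, 2.0], [1.0]), outer([3.0, 4.0], [1.0])]
print(phm_weight(A_shared, B_layer))  # [[1.0, 3.0], [2.0, 4.0], [3.0, 1.0], [4.0, 2.0]]


def compacter_layer_params(d, k, n):
    """Per-layer trainable values for a d x k weight: n rank-one factors of size d/n + k/n."""
    return n * (d // n + k // n)


print(768 * 48, compacter_layer_params(768, 48, 4))  # 36864 816
```

At these (assumed) sizes, a dense 768 × 48 adapter projection needs 36,864 weights, while the per-layer Compacter factors need 816, plus the small shared Aᵢ.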

8. IA3

IA3 (Infused Adapter by Inhibiting and Amplifying Inner Activations) is a particularly lightweight technique. Instead of adding new layers or low-rank matrices, it learns three vectors per transformer layer that rescale, element by element, the attention keys, the attention values, and the intermediate activations of the feed-forward network. Values below 1 inhibit a dimension and values above 1 amplify it; because the vectors are initialized to 1, training starts from the unchanged pre-trained model. The number of trainable parameters is even smaller than with LoRA.
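Because IA3 only rescales activations, its mechanics fit in a few lines. The sketch below is a hypothetical plain-Python illustration: the learned vectors start at 1, the “trained” values are made up, and the per-layer count assumes 768-dimensional keys and values and a 3,072-dimensional feed-forward layer, as in BERT-base-sized models.

```python
def rescale(activations, scale):
    """IA3: element-wise multiplication by a learned vector."""
    return [a * s for a, s in zip(activations, scale)]


D_KV, D_FF = 768, 3072

# Learned vectors, initialized to ones so the frozen model's behavior is unchanged.
l_k = [1.0] * D_KV   # rescales attention keys
l_v = [1.0] * D_KV   # rescales attention values
l_ff = [1.0] * D_FF  # rescales the feed-forward intermediate activations

keys = [0.5] * D_KV
print(rescale(keys, l_k) == keys)       # True: identity at initialization

# After training, entries can inhibit (< 1) or amplify (> 1) individual dimensions.
l_v[0], l_v[1] = 0.0, 2.0
values = rescale([1.0] * D_KV, l_v)
print(values[:3])                       # [0.0, 2.0, 1.0]
print(len(l_k) + len(l_v) + len(l_ff))  # 4608 trainable values per layer
```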

Benefits of Parameter-efficient Fine-tuning

In Natural Language Processing (NLP), large pre-trained language models (LLMs) have become critical, capable of tackling diverse tasks. However, fine-tuning these behemoths often comes at a hefty cost: immense computational resources and storage needs. This is where Parameter-efficient Fine-tuning (PEFT) steps in, offering a compelling solution. Below are the key benefits PEFT brings to the table:

1. Reduced Computational Cost and Faster Training

PEFT fine-tunes only a small subset of the pre-trained model’s parameters, focusing on aspects most relevant to the new task. This significantly reduces the computational power required for training, leading to faster training times. Imagine training a model on your local machine instead of needing a powerful server – that’s the kind of efficiency PEFT offers.

2. Lower Storage Requirements

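Large, fully fine-tuned models are cumbersome to store, because every task needs its own full copy. With PEFT, the task-specific checkpoint contains only the small set of trained parameters, and many tasks can share one copy of the base model. This considerably lowers storage needs when serving many tasks and makes shipping task updates to resource-constrained devices (e.g., mobile phones) more feasible, provided the base model itself fits there.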

3. Combating Catastrophic Forgetting

Full fine-tuning can sometimes lead to “catastrophic forgetting,” where the model loses its previously learned knowledge from pre-training. PEFT mitigates this risk by leaving most pre-trained parameters intact, ensuring the model retains its foundational knowledge while adapting to the new task.

4. Improved Performance in Data-Scarce Scenarios

Large datasets are often required for successful fine-tuning. PEFT shines in situations with limited data. By focusing on a smaller parameter set, PEFT can often achieve better performance on small datasets compared to full fine-tuning, which might struggle to learn effectively with limited data points.

5. Enhanced Portability and Deployment

PEFT checkpoints are typically small, which makes them easy to distribute and deploy across different platforms. You can support a new task or environment by training and shipping a new small set of parameters instead of retraining the entire model from scratch.

6. A More Sustainable Approach

The significant computational resources required for traditional fine-tuning can have a considerable environmental impact. PEFT’s reduced training time and lower resource consumption make it a more sustainable approach to NLP tasks.

Training Your Model with PEFT: A Step-by-Step Guide

The power of large pre-trained language models (LLMs) comes at a cost: the immense computational resources needed for full fine-tuning. Parameter-efficient fine-tuning (PEFT) offers a solution, allowing you to train task-specific models with significantly less computational power. Here’s a breakdown of the key steps involved in training your model using PEFT:

1. Preliminaries

Choose a PEFT Technique: Consider factors like task complexity, data availability, and your computational resources. Popular options include Adapter Modules, LoRA, Prefix Tuning, and Prompt Tuning.

Select a Pre-trained LLM: Choose a pre-trained model relevant to your task and domain. Popular options include BERT, RoBERTa, and XLNet. Ensure compatibility with your chosen PEFT technique.

Prepare Your Dataset: Gather and clean your task-specific data, ensuring it’s suitable for the chosen PEFT method. Consider data augmentation techniques to improve model performance if needed.

2. Setting Up the Training Environment

Deep Learning Framework: Choose a framework like PyTorch or TensorFlow that supports PEFT techniques. Libraries such as Hugging Face PEFT, which works with Hugging Face Transformers models, offer ready-made implementations of several PEFT methods.

3. The Training Process

Load the Pre-trained Model: Load the chosen pre-trained LLM using your deep learning framework and PEFT library.

Define the PEFT Configuration: Specify the settings of your chosen PEFT technique, such as the adapter bottleneck size, the LoRA rank and the layers it targets, the number of virtual prompt tokens, or the sparsity level for diff pruning.

Prepare the Training Loop: Set up the training loop that iterates through your data, feeding it to the PEFT model. Define the optimizer and loss function suitable for your task.

Training with PEFT: Train the model based on your chosen PEFT technique. Depending on the method, this means updating only the adapter modules, the low-rank matrices, the soft prompts, or a sparse subset of parameters, while the pre-trained weights stay frozen.

Monitor Training Progress: Track metrics like training loss, accuracy, and validation performance to assess model learning and prevent overfitting.
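The training steps above can be illustrated end to end with a deliberately tiny, hypothetical model: a frozen “pre-trained” weight plus a single trainable correction, fitted with plain gradient descent while training and validation loss are tracked. It sketches the loop structure only; in a real framework, autograd computes the gradients and the optimizer updates only the parameters marked as trainable.

```python
W_BASE = 2.0  # frozen "pre-trained" weight: never updated below


def predict(x, delta):
    """Toy model: frozen weight plus a trainable PEFT-style correction."""
    return (W_BASE + delta) * x


def mse(data, delta):
    return sum((predict(x, delta) - y) ** 2 for x, y in data) / len(data)


# Hypothetical task: the target mapping is y = 3x.
train_data = [(x, 3.0 * x) for x in (0.5, 1.0, 1.5, 2.0)]
val_data = [(x, 3.0 * x) for x in (0.75, 1.25)]

delta = 0.0  # the only trainable parameter
learning_rate = 0.1
history = []

for epoch in range(50):
    # Gradient of the training MSE with respect to delta (W_BASE gets no update).
    grad = sum(2 * (predict(x, delta) - y) * x for x, y in train_data) / len(train_data)
    delta -= learning_rate * grad
    history.append((epoch, mse(train_data, delta), mse(val_data, delta)))
    if epoch % 10 == 0:
        print(f"epoch {epoch:2d}  train {history[-1][1]:.6f}  val {history[-1][2]:.6f}")

print(f"learned delta = {delta:.4f}")  # learned delta = 1.0000
```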

4. Model Evaluation and Deployment

Evaluation: Evaluate the trained PEFT model on a held-out test dataset to assess its performance on unseen data. Compare its performance to other models or baselines.

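Deployment: Deploy the trained PEFT model for real-world use. Because the trained PEFT parameters are small, you can keep one copy of the base model and load or swap task-specific parameters as needed; LoRA weights can also be merged into the base weights so that inference adds no extra latency.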

Pitfalls to avoid in PEFT

When using Parameter-efficient Fine-tuning (PEFT) to fine-tune a pre-trained model, there are some pitfalls that you should be aware of to avoid suboptimal results. Here are some key things to keep in mind:

1. Overfitting

Although PEFT fine-tunes only a small number of parameters, the model can still overfit the training data, especially on small datasets. To avoid overfitting, use regularization techniques such as weight decay and dropout. You can also monitor the validation loss during training to detect overfitting and stop training early if necessary.
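Early stopping on validation loss takes only a few lines. A minimal, framework-agnostic sketch; the `EarlyStopping` class, the patience value, and the loss sequence are hypothetical, and in a real run you would also keep a copy of the best trainable weights.

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


val_losses = [1.0, 0.8, 0.7, 0.72, 0.75, 0.71, 0.9]  # hypothetical per-epoch values
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.step(loss):
        stopped_at = epoch
        break

print(f"stopped after epoch {stopped_at}, best val loss {stopper.best}")
# stopped after epoch 5, best val loss 0.7
```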

2. Choosing the Right Adapter Size

Adapter-based PEFT methods add new trainable modules to a pre-trained model, and choosing their size is crucial for good performance. If the adapter is too small, it may not be able to capture the necessary information; if it is too large, it may overfit and erode the efficiency gains. No fixed ratio works everywhere: adapters in the original adapter work added only a few percent of the base model’s parameters per task, so a reasonable approach is to start small and increase the size (or, for LoRA, the rank) only if validation performance calls for it.
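To see how the bottleneck size translates into a parameter budget, here is a rough calculator in plain Python. It assumes Houlsby-style adapters (two per transformer layer, each with a down-projection and an up-projection plus biases) on a BERT-base-like model (hidden size 768, 12 layers, about 110 million parameters) and ignores layer-norm parameters; all of these are assumptions for illustration.

```python
def adapter_params(d_model, bottleneck):
    """Down-projection (d*m weights + m biases) plus up-projection (m*d weights + d biases)."""
    return 2 * d_model * bottleneck + bottleneck + d_model


def total_adapter_params(d_model, bottleneck, num_layers, adapters_per_layer=2):
    return num_layers * adapters_per_layer * adapter_params(d_model, bottleneck)


BASE_PARAMS = 110_000_000  # approximate size of a BERT-base-like model

for m in (8, 16, 32, 64, 128):
    n = total_adapter_params(768, m, num_layers=12)
    print(f"bottleneck {m:>3}: {n:>9,} trainable params ({100 * n / BASE_PARAMS:.2f}% of base)")
```

At a bottleneck of 64 this comes to roughly 2% of the base model; sweeping a few sizes and comparing validation scores is usually more reliable than any fixed rule.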

3. Choosing the Right Learning Rate

Choosing the right learning rate is important for achieving good performance in PEFT. If the learning rate is too high, it may cause the model to diverge. If the learning rate is too low, it may cause the model to converge too slowly. A good approach is to use a learning rate schedule that gradually decreases the learning rate over time.
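One common schedule warms the learning rate up linearly and then decays it linearly to zero. A minimal sketch in plain Python; the function name and the settings (base learning rate 1e-3, 100 warm-up steps, 1,000 total steps) are hypothetical, and libraries such as Hugging Face Transformers ship a ready-made equivalent (`get_linear_schedule_with_warmup`).

```python
def linear_warmup_decay(step, base_lr, warmup_steps, total_steps):
    """Ramp the learning rate up linearly for `warmup_steps`, then decay it linearly to 0."""
    if step < warmup_steps:
        return base_lr * step / warmup_steps
    remaining = total_steps - step
    return base_lr * max(0.0, remaining / (total_steps - warmup_steps))


# Hypothetical settings: base learning rate 1e-3, 100 warm-up steps, 1,000 steps total.
for step in (0, 50, 100, 550, 1000):
    print(step, linear_warmup_decay(step, 1e-3, 100, 1000))
```

The warm-up avoids large, destabilizing updates while the freshly initialized PEFT parameters are still far from useful values, and the decay lets training settle at the end.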

4. Choosing the Right Pre-trained Model

Not all pre-trained models are created equal. Some models may be better suited for certain tasks than others. When choosing a pre-trained model for fine-tuning with PEFT, it is important to consider factors such as the size of the model, the quality of the pretraining data, and its reported performance on similar tasks.

Here’s a list of pre-trained models where Parameter-Efficient Fine-Tuning (PEFT) techniques have been applied:

- BERT (Bidirectional Encoder Representations from Transformers)

- GPT-3 (Generative Pre-trained Transformer 3)

- T5 (Text-to-Text Transfer Transformer)

- RoBERTa (A Robustly Optimized BERT Pretraining Approach)

- XLNet

- ELECTRA

- ALBERT (A Lite BERT)

Use Cases of Parameter-Efficient Fine-Tuning (PEFT)

1. Natural Language Processing (NLP)

Text Classification

Sentiment Analysis: PEFT can be used to fine-tune large language models for sentiment analysis tasks with minimal resource requirements. This is particularly useful for real-time analysis of social media posts, reviews, and customer feedback.

Named Entity Recognition (NER): Efficiently fine-tuning models for identifying entities in text, such as names, organizations, and locations, which is crucial for information extraction in various domains like healthcare and finance.

Machine Translation

Fine-tuning pre-trained models for specific language pairs or domains using PEFT allows for high-quality translations with reduced computational overhead, making it feasible to deploy these models in resource-constrained environments.

2. Conversational AI

Chatbots and Virtual Assistants

PEFT can be used to customize pre-trained conversational models for specific industries or companies, ensuring that the assistant can handle unique queries and contexts without extensive retraining of the entire model.

3. Computer Vision

Image Classification

Adapting pre-trained vision models to specific datasets with minimal updates to the parameters. This is useful in applications like medical imaging, where models need to be fine-tuned to recognize specific conditions without extensive computational resources.

Object Detection

Fine-tuning models to detect and classify objects in images and videos with less computational power. This is especially useful in surveillance, autonomous driving, and retail for inventory management.

4. Speech Recognition

PEFT can be employed to adapt large pre-trained speech recognition models to specific accents, dialects, or languages, improving accuracy and usability in diverse environments.

5. Recommendation Systems

Personalizing recommendation engines for specific user bases or content types. PEFT allows these systems to quickly adapt to new trends and preferences without requiring full model retraining, enhancing user experience and engagement.

6. Healthcare

Medical Diagnostics

Fine-tuning models on specific medical datasets to assist in diagnosing diseases from medical images or patient data with reduced computational requirements. This is particularly important in resource-limited settings.

Drug Discovery

Using PEFT to adapt pre-trained models to analyze large datasets of chemical compounds and predict their interactions, speeding up the drug discovery process while conserving computational resources.

7. Finance

Fraud Detection

Customizing models to detect fraudulent transactions or activities in financial data. PEFT allows these models to stay updated with the latest fraud patterns without extensive retraining, ensuring timely and accurate detection.

Algorithmic Trading

Fine-tuning models to specific market conditions and trading strategies, allowing for more efficient and effective algorithmic trading systems.

Kanerika’s AI Solutions: Revolutionizing Business Efficiency with Advanced LLMs

Kanerika’s AI solutions are revolutionizing business efficiency by leveraging advanced Large Language Models (LLMs). With deep expertise in AI, ML, and generative AI, we help businesses enhance operations through intelligent automation, data-driven insights, and predictive analytics. By integrating LLMs, our experts enable precise language understanding and processing, optimizing workflows and decision-making processes. This leads to significant improvements in operational efficiency and drives business growth.

Our solutions are tailored to address specific business challenges, ensuring scalable and adaptable AI implementations. Trust us to transform your business with cutting-edge AI technologies, enhancing productivity and fostering innovation.

Frequently Asked Questions

What is parameter efficient fine-tuning?

Parameter-efficient fine-tuning (PEFT) cleverly adapts pre-trained large language models (LLMs) to new tasks without changing most of the model’s weights. It focuses on updating only a small subset of parameters, significantly reducing computational cost and memory requirements. This makes it ideal for adapting LLMs to niche tasks or deploying them on resource-constrained devices. Think of it as a “light touch” upgrade instead of a complete rebuild.

What is the difference between fine-tuning and PEFT?

Full fine-tuning retrains all of the model’s parameters on a new dataset, which is resource-intensive. PEFT (Parameter-Efficient Fine-Tuning) methods, such as LoRA or adapters, train only a small subset of parameters (often newly added ones), making them much more efficient and requiring less compute power. Essentially, PEFT is a smarter, more economical way to adapt a large language model. Think of it as tweaking vs. rebuilding.

What are the benefits of PEFT?

PEFT, or Parameter-Efficient Fine-Tuning, offers significant advantages by making large language model adaptation more efficient. It drastically reduces the computational cost and memory needed for fine-tuning, allowing for quicker and cheaper deployment of specialized models. This also means less environmental impact compared with full fine-tuning. Ultimately, PEFT makes AI more accessible and sustainable.

What is parameter efficiency?

Parameter efficiency describes how well a machine learning model uses its parameters to learn. A highly parameter-efficient model achieves high accuracy with relatively few parameters, avoiding overfitting and resource waste. This is crucial for deploying models on devices with limited memory or processing power. Essentially, it’s about getting the most “bang for your parameter buck.”

What is the use of PEFT model?

PEFT (Parameter-Efficient Fine-Tuning) models drastically reduce the computational cost of adapting large language models. Instead of retraining the entire model, PEFT focuses on tweaking a small subset of parameters, making fine-tuning faster and more resource-efficient. This is crucial for deploying LLMs on devices with limited resources or for quickly adapting to specific tasks without massive retraining. Essentially, they let you get a lot of mileage out of a pre-trained model with minimal effort.

How does parameter tuning work?

Parameter tuning, more precisely hyperparameter tuning, optimizes a model’s performance by systematically adjusting the settings that control training, such as the learning rate or, for PEFT, the LoRA rank. It involves experimenting with different values to find the combination that yields the best results on a validation dataset. Think of it as fine-tuning the knobs and dials of a machine to get the best output. Ultimately, it’s a search for the sweet spot between model complexity and generalization ability.

What is the difference between RLHF and PEFT?

Reinforcement Learning from Human Feedback (RLHF) refines a model’s behavior using human preferences as a reward signal, essentially teaching it what’s “good” output. Parameter-Efficient Fine-Tuning (PEFT) focuses on adapting *existing* large language models efficiently, modifying only a small subset of parameters for a new task, conserving resources. In short, RLHF shapes behavior, PEFT adapts existing capabilities. They are distinct approaches, though sometimes used together.

What is the difference between IA3 and LoRA?

IA3 and LoRA are both techniques for efficiently fine-tuning large language models, but they differ in their approach. IA3 learns small vectors that rescale the attention keys, values, and feed-forward activations element by element, so it trains even fewer parameters than LoRA. LoRA learns pairs of low-rank matrices whose product is added to selected weight matrices, giving it more capacity at a somewhat higher, though still small, parameter count. The choice depends on your resource constraints and desired level of model adaptation.

What is a PEFT adapter?

PEFT adapters are lightweight, specialized neural network modules designed to quickly adapt large language models (LLMs) to new tasks. Instead of retraining the entire massive LLM, they insert small trainable layers inside the frozen model, allowing for efficient fine-tuning. This makes adapting LLMs for specific purposes much faster and cheaper, a key advantage in the field. Think of it as a customizable lens for your powerful, but general-purpose, LLM.

What is LoRA in LLMs?

LoRA (Low-Rank Adaptation) is a training technique for Large Language Models (LLMs) that dramatically reduces the memory and computational resources needed for fine-tuning. Instead of updating all the model’s weights, LoRA adds small, low-rank matrices to specific layers. This allows for efficient personalization or adaptation of the LLM to specific tasks or datasets, making it much more accessible. Essentially, it’s a clever shortcut for making LLMs smarter without breaking the bank.

What are the parameters of fine-tuning?

Fine-tuning adjusts a pre-trained model for a specific task. Key hyperparameters include the learning rate (how much the model updates itself at each step), the number of training epochs (passes through the data), and the batch size; the size of the training dataset also strongly affects the outcome. Essentially, you’re tweaking these to balance fitting the new task without losing the pre-trained knowledge.

Which is a distinguishing feature of PEFT?

Parameter-Efficient Fine-Tuning (PEFT) methods are distinguished by their resource efficiency. Unlike full fine-tuning, they adapt pre-trained models with minimal parameter changes, saving significant storage and computational power. This makes them ideal for deploying large language models on resource-constrained devices. Essentially, they achieve impressive performance improvements with a lighter footprint.

What is the difference between SFT and PEFT?

SFT (Supervised Fine-Tuning) continues training an already pre-trained language model on labeled examples, such as instruction and response pairs; it does not train from scratch. SFT describes what the model is trained on, while PEFT describes which parameters are updated. SFT done as full fine-tuning updates all of the model’s parameters, whereas PEFT updates only a small portion, making it much faster and less resource-intensive, and the two are often combined (for example, supervised fine-tuning with LoRA). Think of full fine-tuning as a full remodel and PEFT as a targeted renovation.