Ever wondered how a search engine pulls out exactly the right person, place, or company from a sea of words? Or how chatbots seem to understand which entities in your message are crucial? This is where Named Entity Recognition (NER) steps in: a key technology in Natural Language Processing (NLP) that enables machines to identify and categorize the essential pieces of text. From recognizing that “Apple” refers to the tech giant rather than a fruit to picking out critical terms in medical documents, NER helps machines make sense of text.

According to a recent report by MarketsandMarkets, the global NLP market size is expected to grow from $18.9 billion in 2023 to $68.1 billion by 2028, with NER playing a crucial role in this expansion. This remarkable growth underscores the increasing importance of Named Entity Recognition in unlocking the potential of unstructured data across various industries. NER transforms vast unstructured data into actionable insights. It’s estimated that unstructured data accounts for 80-90% of all data, making tools like NER indispensable for converting this information into meaningful patterns.

In this comprehensive guide, we’ll explore what makes NER so pivotal, how it works, and the technology behind its ability to detect entities in a fast-paced, data-driven world.

What is Named Entity Recognition?

Named Entity Recognition (NER) is a key technique in Natural Language Processing (NLP) that focuses on identifying and classifying specific entities from unstructured text. These entities can be names of people, organizations, locations, dates, and more. NER converts raw data into structured information, making it easier for machines to process and understand.

It operates by first detecting potential entities and then categorizing them into predefined groups. NER is widely used in applications such as search engines, chatbots, and information retrieval systems, helping to extract actionable insights from vast volumes of text.

Key Concepts of Named Entity Recognition (NER)

1. Tokenization

This is the first step in NER, where text is broken down into smaller, manageable units like words or phrases. These tokens serve as the foundation for recognizing entities within the text. For example, the sentence “Steve Jobs founded Apple” would be split into tokens such as “Steve,” “Jobs,” “founded,” and “Apple”.
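As a rough illustration of this step, here is a minimal sketch of a regex-based tokenizer. The pattern and the `tokenize` helper are assumptions made for this example, not the tokenizer any particular NER library uses; production tokenizers handle many more edge cases.

```python
import re

# Hypothetical minimal pattern: a word (optionally with internal hyphens or
# apostrophes), or a single punctuation character.
TOKEN_PATTERN = re.compile(r"\w+(?:[-'’]\w+)*|[^\w\s]")

def tokenize(text):
    """Return (token, start, end) triples so entities can be mapped back to the text."""
    return [(m.group(), m.start(), m.end()) for m in TOKEN_PATTERN.finditer(text)]

tokens = tokenize("Steve Jobs founded Apple.")
print([token for token, _, _ in tokens])
# ['Steve', 'Jobs', 'founded', 'Apple', '.']
```

Keeping the character offsets alongside each token makes it easier to point back to the exact span in the original text once entities have been found.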

2. Entity Identification

In this phase, the system scans the tokens to detect which parts of the text are potential entities. For instance, in “Steve Jobs founded Apple,” “Steve Jobs” would be identified as a person and “Apple” as an organization.

3. Entity Classification

After identifying entities, the system categorizes them into predefined classes like “Person,” “Organization,” “Location,” and others. In our example, “Steve Jobs” would be classified as a person, and “Apple” as an organization.

4. Contextual Analysis

Context plays a key role in improving entity recognition accuracy. Words can have multiple meanings (e.g., “Apple” as a fruit or a company), and contextual analysis helps the system decide which interpretation is correct based on the surrounding words.

5. Post-processing

This final step refines the results by resolving ambiguities, merging multi-word entities, and validating the detected entities with external knowledge sources or databases to ensure accuracy.

What Are the Popular Approaches to Named Entity Recognition?

Several approaches have been developed to implement Named Entity Recognition (NER) effectively. Here’s a detailed explanation of the most common methods:

1. Rule-Based Approaches

Rule-based NER relies on manually defined patterns and rules to identify and classify entities. These rules are often based on linguistic insights and predefined lists.

Regular Expressions: This involves pattern matching to detect entities based on specific structures, such as email formats or phone numbers. For example, a rule might detect names based on capital letters in the middle of sentences.

Dictionary or Lexicon Lookup: In this method, the system uses predefined dictionaries of names or terms. If the word matches a known entity (e.g., “George Orwell”), it is classified accordingly.

Pattern-Based Rules: Entities are recognized based on common language structures. For example, proper nouns that are capitalized in the middle of a sentence might indicate a named entity.

Advantages: Easy to implement and highly effective in specialized domains where entities follow consistent patterns.

Disadvantages: Lacks scalability and struggles with generalizing to new domains or data sets.
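To make the rule-based idea concrete, here is a small sketch that combines a regular expression with a dictionary lookup. The email pattern is deliberately simplified, and the lexicon and label names are toy assumptions for illustration only.

```python
import re

# Simplified email pattern (an assumption; real-world email matching is messier).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")

# Toy lexicon; a real system would load a much larger, curated list.
LEXICON = {
    "George Orwell": "PERSON",
    "Apple": "ORGANIZATION",
}

def rule_based_ner(text):
    """Return sorted (start, end, surface, label) tuples found by the rules."""
    found = []
    for m in EMAIL_RE.finditer(text):
        found.append((m.start(), m.end(), m.group(), "EMAIL"))
    for name, label in LEXICON.items():
        # \b keeps "Apple" from matching inside "Apples" or "AppleCare".
        for m in re.finditer(r"\b" + re.escape(name) + r"\b", text):
            found.append((m.start(), m.end(), m.group(), label))
    return sorted(found)

text = "George Orwell never emailed press@apple.example about Apple."
for start, end, surface, label in rule_based_ner(text):
    print(surface, "->", label)
```

Note that the lexicon tags every “Apple” as an organization regardless of context, which is exactly the kind of limitation listed under the disadvantages above.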

2. Machine Learning-Based Approaches

This approach involves training machine learning models on labeled data to identify and classify entities. It uses feature engineering to analyze aspects of the text.

Feature Engineering: Common features include word characteristics (capitalization, prefixes, suffixes), syntactic information (part-of-speech tags), and word patterns (e.g., formats like dates or quantities).

Algorithms: Machine learning methods use algorithms like Support Vector Machines (SVM), Decision Trees, and Conditional Random Fields (CRF) to classify entities.

Advantages: More scalable than rule-based methods and capable of handling more diverse data, particularly with well-annotated training sets.

Disadvantages: Requires substantial effort in feature engineering and model training and may not perform well without large amounts of labeled data.
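The feature engineering described above can be sketched as a function that turns each token into a dictionary of features, a format commonly fed to classifiers such as CRFs. The feature names here are assumptions chosen for illustration, and part-of-speech tags are left out because they would require a separate tagger.

```python
def word_shape(word):
    """Map characters to a coarse pattern, e.g. 'Apple' -> 'Xxxxx', '1976' -> 'dddd'."""
    shape = []
    for ch in word:
        if ch.isupper():
            shape.append("X")
        elif ch.islower():
            shape.append("x")
        elif ch.isdigit():
            shape.append("d")
        else:
            shape.append(ch)
    return "".join(shape)

def token_features(tokens, i):
    """Build a feature dict for tokens[i], including its immediate neighbors."""
    word = tokens[i]
    return {
        "lower": word.lower(),
        "is_title": word.istitle(),      # capitalization cue
        "is_upper": word.isupper(),
        "is_digit": word.isdigit(),
        "prefix3": word[:3],
        "suffix3": word[-3:],
        "shape": word_shape(word),       # word pattern, useful for dates and quantities
        "prev_lower": tokens[i - 1].lower() if i > 0 else "<BOS>",
        "next_lower": tokens[i + 1].lower() if i < len(tokens) - 1 else "<EOS>",
    }

tokens = ["Steve", "Jobs", "founded", "Apple", "in", "1976"]
print(token_features(tokens, 3))
```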

3. Deep Learning Approaches

Deep learning methods, particularly Recurrent Neural Networks (RNN) and Transformer models like BERT, have revolutionized NER. These models automatically learn features from vast datasets without the need for manual feature engineering.

Recurrent Neural Networks (RNN) and Long Short-Term Memory (LSTM): These models are ideal for sequential data like text, capturing dependencies between words. LSTMs are particularly useful for recognizing entities spread across multiple words (e.g., “New York City”).

Transformers (e.g., BERT): Transformer architectures like BERT are now widely used for NER. They capture contextual relationships by processing the entire text simultaneously, rather than word-by-word, making them highly effective for disambiguating entities (e.g., “Apple” as a company vs. the fruit).

Advantages: Deep learning models automatically learn important features, reducing the need for manual intervention. They excel in large-scale, complex tasks.

Disadvantages: Require vast amounts of training data and computational resources. They are also difficult to interpret compared to simpler machine learning models.

4. Hybrid Approaches

Hybrid approaches combine rule-based, machine learning, and deep learning techniques to leverage the strengths of each. For example, rule-based methods might be used to handle domain-specific entities, while machine learning or deep learning is used for more general-purpose entity recognition.

Advantages: Hybrid models can handle a wide variety of entities across different domains and contexts, offering flexibility and robustness.

Disadvantages: Complex to implement and maintain, as they require integrating multiple techniques effectively.

Named Entity Recognition Techniques

1. BIO Tagging

This technique labels tokens as Beginning (B), Inside (I), or Outside (O) of a named entity. It’s simple and widely used, allowing for the representation of entity boundaries and types. BIO tagging is particularly useful for handling multi-token entities.

- B: Marks the beginning of an entity

- I: Indicates a token inside an entity

- O: Represents tokens outside any entity
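To show how BIO tags represent multi-token entities, here is a minimal sketch that turns a tagged sentence back into entity spans. The `B-PER` / `I-PER` style of attaching a type to each tag is a common convention, and the `bio_to_spans` helper is a hypothetical name used only for this example.

```python
def bio_to_spans(tokens, tags):
    """Collect (entity_text, entity_type) pairs from parallel token and tag lists."""
    spans, current, current_type = [], [], None
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            if current:
                spans.append((" ".join(current), current_type))
            current, current_type = [token], tag[2:]
        elif tag.startswith("I-") and current and tag[2:] == current_type:
            current.append(token)
        else:
            # "O", or a stray I- tag with no matching B-: close any open entity.
            if current:
                spans.append((" ".join(current), current_type))
            current, current_type = [], None
    if current:
        spans.append((" ".join(current), current_type))
    return spans

tokens = ["Steve", "Jobs", "founded", "Apple", "in", "California"]
tags = ["B-PER", "I-PER", "O", "B-ORG", "O", "B-LOC"]
print(bio_to_spans(tokens, tags))
# [('Steve Jobs', 'PER'), ('Apple', 'ORG'), ('California', 'LOC')]
```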

2. BILOU Tagging

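An extension of BIO, BILOU adds more granularity by including Last (L) and Unit (U) labels. Because it marks entity boundaries more explicitly, this scheme can potentially improve accuracy in entity detection, especially for longer multi-token entities. Like BIO, it assigns one tag per token, so it does not by itself represent nested entities.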

- B: Beginning of a multi-token entity

- I: Inside of a multi-token entity

- L: Last token of a multi-token entity

- O: Outside any entity

- U: A single-token entity
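The relationship between the two schemes can be sketched as a conversion from BIO tags to BILOU tags. This assumes the input is well-formed BIO with typed tags such as `B-PER`; the `bio_to_bilou` helper is a hypothetical name for this example.

```python
def bio_to_bilou(tags):
    """Convert a well-formed BIO tag sequence to BILOU."""
    bilou = []
    for i, tag in enumerate(tags):
        if tag == "O":
            bilou.append(tag)
            continue
        prefix, etype = tag.split("-", 1)
        next_tag = tags[i + 1] if i + 1 < len(tags) else "O"
        continues = next_tag == f"I-{etype}"  # does the entity go on after this token?
        if prefix == "B":
            bilou.append(f"B-{etype}" if continues else f"U-{etype}")
        else:  # prefix == "I"
            bilou.append(f"I-{etype}" if continues else f"L-{etype}")
    return bilou

print(bio_to_bilou(["B-PER", "I-PER", "O", "B-ORG", "O", "B-LOC", "I-LOC", "I-LOC"]))
# ['B-PER', 'L-PER', 'O', 'U-ORG', 'O', 'B-LOC', 'I-LOC', 'L-LOC']
```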

3. Inside-outside-beginning (IOB) Tagging

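Similar to BIO, but with a difference in how the beginning of entities is marked. In this scheme (often called IOB1, with the BIO scheme above known as IOB2), the B tag is only used when an entity starts immediately after another entity of the same type; otherwise, the first token of an entity is tagged I.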

- I: Inside an entity

- O: Outside any entity

- B: Beginning of an entity (only used when necessary to disambiguate)
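The difference becomes clearer with a small conversion sketch from this scheme to the BIO scheme described earlier. It assumes typed tags such as `I-LOC`, and `iob1_to_bio` is a hypothetical helper name for this example.

```python
def iob1_to_bio(tags):
    """Rewrite IOB1 tags so that every entity starts with an explicit B- tag."""
    bio = []
    prev_type = None  # entity type of the previous token, or None after "O"
    for tag in tags:
        if tag == "O":
            bio.append(tag)
            prev_type = None
            continue
        prefix, etype = tag.split("-", 1)
        if prefix == "I" and prev_type != etype:
            # In IOB1 an entity may start with I-; BIO requires B- here.
            bio.append(f"B-{etype}")
        else:
            bio.append(tag)
        prev_type = etype
    return bio

# Two LOC entities in a row: IOB1 needs B- only for the second one.
print(iob1_to_bio(["I-PER", "I-PER", "O", "I-LOC", "B-LOC", "I-LOC"]))
# ['B-PER', 'I-PER', 'O', 'B-LOC', 'B-LOC', 'I-LOC']
```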

4. Conditional Random Fields (CRFs)

A statistical modeling method used for structured prediction. CRFs consider the context and relationships between adjacent tokens, making them effective for sequence labeling tasks like NER.

- Captures dependencies between labels

- Can incorporate various features (e.g., POS tags, capitalization)

- Often used in combination with other machine learning techniques
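For readers who want the underlying formula, the standard linear-chain CRF defines the probability of a tag sequence y given a token sequence x as shown below. The symbols are the usual textbook notation rather than anything specific to this article: the f_k are feature functions (for example, "the current token is capitalized and the tag is B-PER"), the λ_k are their learned weights, and Z(x) normalizes over all possible tag sequences.

```latex
p(y \mid x) = \frac{1}{Z(x)} \exp\left( \sum_{t=1}^{T} \sum_{k} \lambda_k \, f_k(y_{t-1}, y_t, x, t) \right),
\qquad
Z(x) = \sum_{y'} \exp\left( \sum_{t=1}^{T} \sum_{k} \lambda_k \, f_k(y'_{t-1}, y'_t, x, t) \right)
```

Because each feature function sees both the previous tag and the current tag, the model can learn, for instance, that an I tag rarely follows an O tag.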

5. Word Embeddings

Dense vector representations of words that capture semantic and syntactic information. They play a crucial role in modern NER systems by providing rich, contextual information about words and their relationships.

- Pre-trained embeddings (e.g., Word2Vec, GloVe) can be used

- Contextual embeddings (e.g., BERT, ELMo) provide dynamic representations

- Improves generalization and performance of NER models
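To give a feel for what these vectors capture, here is a toy sketch using cosine similarity. The three-dimensional vectors are made up for illustration; real pre-trained embeddings such as Word2Vec or GloVe are learned from data and typically have hundreds of dimensions.

```python
import math

# Made-up vectors for illustration only (not taken from any real embedding model).
EMBEDDINGS = {
    "paris":  [0.9, 0.1, 0.0],
    "london": [0.8, 0.2, 0.1],
    "banana": [0.0, 0.1, 0.9],
}

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: close to 1.0 means similar direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

city_vs_city = cosine_similarity(EMBEDDINGS["paris"], EMBEDDINGS["london"])
city_vs_fruit = cosine_similarity(EMBEDDINGS["paris"], EMBEDDINGS["banana"])
print(f"paris vs london: {city_vs_city:.2f}")
print(f"paris vs banana: {city_vs_fruit:.2f}")
```

In this toy setup the two city names score much closer to each other than to the fruit, which is the kind of signal an NER model can use to generalize from entities it has seen to ones it has not.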

Tools and Libraries for Named Entity Recognition

Popular Python Libraries for NER

spaCy: A fast and powerful library for NLP tasks, spaCy provides pre-trained NER models that can recognize entities like names, dates, and locations. It’s widely used for production-grade applications due to its speed and accuracy.

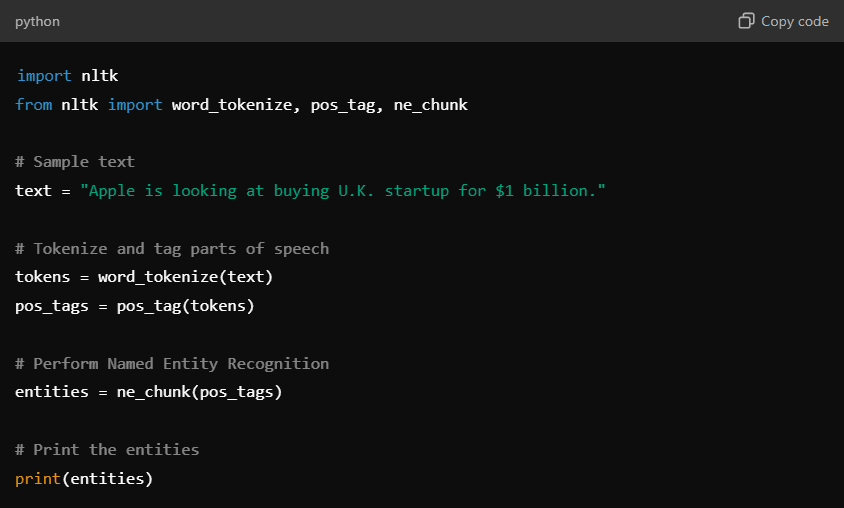

NLTK (Natural Language Toolkit): One of the oldest libraries for NLP in Python, NLTK offers tools for tagging, parsing, and recognizing named entities. While it’s comprehensive, it can be slower compared to more modern libraries like spaCy.

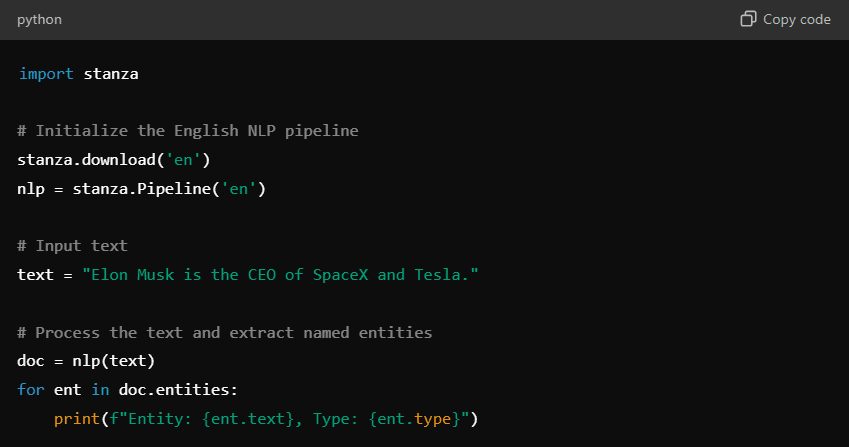

Stanford NER: Developed by the Stanford NLP Group, this is a Java-based library with Python wrappers. It is known for its accuracy and support for multiple languages.

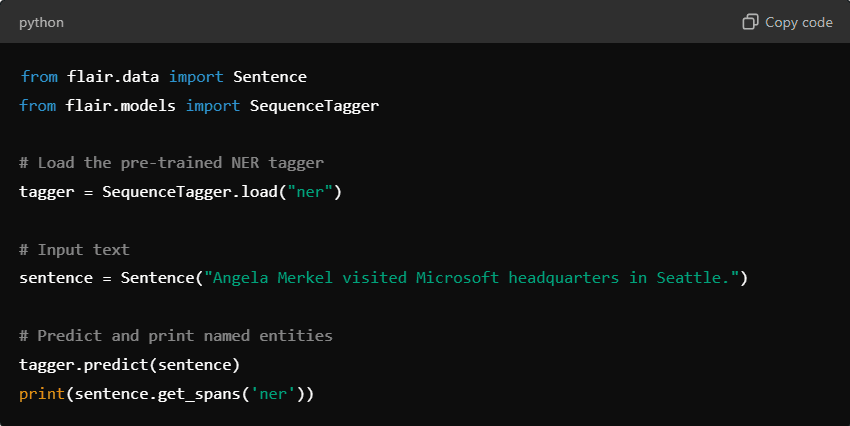

Flair: A simple and flexible NLP library from the Zalando Research group. It uses deep learning models to handle various tasks, including NER, and allows combining different pre-trained models for better results.

Cloud-based NER Services

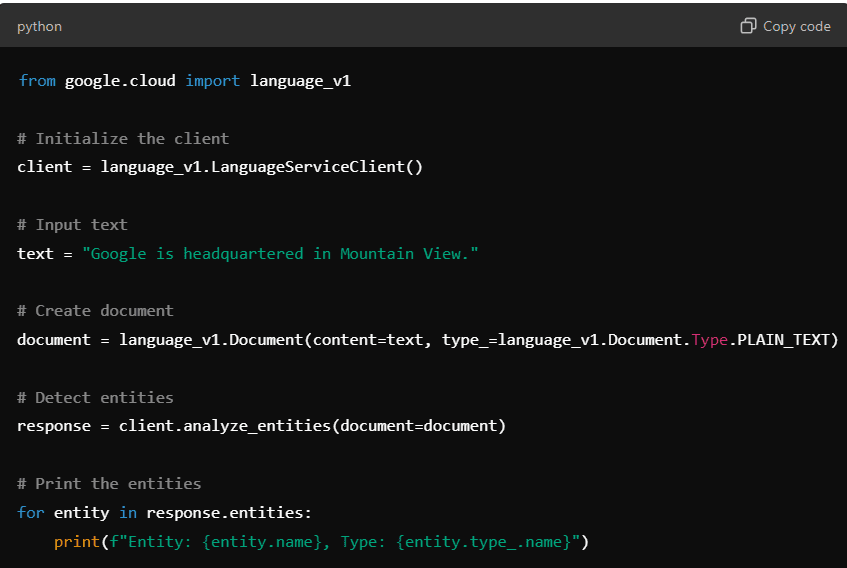

Google Cloud Natural Language API: Google’s NLP service provides entity analysis that identifies and categorizes entities from text data. It also offers sentiment analysis and syntax analysis.

Amazon Comprehend: AWS’s machine learning service that uses NER to extract entities like people, organizations, and dates from documents. It’s integrated into AWS’s ecosystem, making it easy to deploy in production environments.

IBM Watson Natural Language Understanding: Watson’s service can identify and classify named entities from unstructured text. It’s widely used in enterprises for extracting insights from large text datasets.

Applications of Named Entity Recognition (NER)

1. Information Retrieval and Search Engines

NER plays a key role in improving search engine accuracy by identifying and categorizing entities like names, places, or products. This enables more precise search results, helping users quickly locate relevant information from vast data sources.

2. Content Recommendation Systems

By identifying key entities in content (e.g., names of artists, locations, or topics), NER improves the relevance of recommendations on platforms like Netflix or Spotify. It tailors recommendations based on the entities extracted from user preferences.

3. Customer Service and Chatbots

NER enhances customer service by enabling chatbots to recognize and categorize user queries effectively. For instance, extracting product names, dates, or locations from a customer’s question allows chatbots to deliver more accurate and context-specific responses.

4. Social Media Monitoring and Sentiment Analysis

NER helps in extracting entities from social media posts, enabling businesses to monitor mentions of their brand, competitors, or products. Combined with sentiment analysis, it can gauge public opinion and identify key trends.

5. Healthcare and Biomedical Research

In the healthcare industry, NER is used to extract medical terms, drug names, diseases, and patient information from unstructured clinical notes and research papers. This speeds up research processes and improves patient data management.

6. Legal Document Analysis

NER assists in legal research by quickly identifying names of people, companies, dates, and locations in legal documents. It reduces the time required to sift through lengthy contracts or case files, making legal research more efficient.

7. Business Intelligence and Competitive Analysis

By extracting relevant information from reports, articles, and news, NER provides companies with valuable insights into competitors, market trends, and emerging opportunities. This enables better strategic decision-making.

Best Practices for Implementing NER

Implementing Named Entity Recognition effectively requires following best practices to ensure optimal performance and accuracy:

1. Data Preparation and Cleaning

Before applying NER, it’s crucial to prepare and clean the data for accuracy. Clean, well-structured data improves the performance of NER models by reducing noise and inconsistencies.

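- Remove irrelevant text, such as markup, boilerplate, and other non-entity-related content. Be careful with stopwords and special characters: modern NER models often rely on them as context.

- Normalize the data (e.g., consistent whitespace and character encoding) to ensure consistency. Avoid lowercasing or stripping punctuation by default, since capitalization and punctuation are strong cues for entities.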

- Annotate a representative dataset for training and evaluation, ensuring diverse entity types are included.
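A light-touch cleaning pass might look like the sketch below. The specific steps (stripping HTML tags, decoding HTML entities, Unicode normalization, collapsing whitespace) are assumptions about what counts as noise in a typical web-scraped dataset. Case is left untouched here because capitalization is one of the word characteristics NER models use as a feature.

```python
import html
import re
import unicodedata

TAG_RE = re.compile(r"<[^>]+>")
WHITESPACE_RE = re.compile(r"\s+")

def clean_text(raw):
    """Remove markup noise while keeping the words and their casing intact."""
    text = TAG_RE.sub(" ", raw)                 # drop HTML tags
    text = html.unescape(text)                  # "&amp;" becomes "&", "&nbsp;" becomes a non-breaking space
    text = unicodedata.normalize("NFKC", text)  # unify compatibility characters
    return WHITESPACE_RE.sub(" ", text).strip() # collapse runs of whitespace

print(clean_text("<p>Steve&nbsp;Jobs founded  <b>Apple</b> &amp; NeXT.</p>"))
# Steve Jobs founded Apple & NeXT.
```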

2. Choosing the Right Algorithm or Model

Selecting the appropriate NER model depends on the dataset size, complexity, and domain. The choice can range from rule-based systems to advanced deep learning models.

- For smaller, domain-specific tasks, rule-based or traditional machine learning models (e.g., CRF, SVM) might suffice.

- For large datasets or complex tasks, consider deep learning models like BERT or LSTM-based architectures, which offer higher accuracy by learning context automatically.

3. Fine-tuning and Transfer Learning

Fine-tuning pre-trained models and leveraging transfer learning can greatly improve NER accuracy, especially in domains with limited data.

- Start with a pre-trained model like BERT or Flair and fine-tune it on your specific dataset.

- Use transfer learning to apply knowledge from a general NER model to a domain-specific one, saving time on training from scratch.

4. Handling Domain-specific Entities

Different industries or domains often require recognizing unique entities not found in generic datasets. Customizing the model for these needs is critical.

- Create a domain-specific dictionary or lexicon to handle entities unique to the industry (e.g., medical terms in healthcare).

- Use hybrid approaches, combining rule-based methods with machine learning, to capture both general and domain-specific entities.
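One way to sketch the hybrid idea is to let a domain lexicon override the output of a general-purpose model wherever the two overlap. In the example below, `model_spans` is hard-coded stand-in data (including a deliberately wrong label) rather than the output of a real model, and the lexicon, labels, and helper names are all hypothetical.

```python
import re

# Toy domain lexicon (assumption): drug names a generic model may not know.
DOMAIN_LEXICON = {"metformin": "DRUG", "ibuprofen": "DRUG"}

def find_lexicon_spans(text, lexicon):
    """Return (start, end, label) for every whole-word, case-insensitive lexicon match."""
    spans = []
    for term, label in lexicon.items():
        pattern = r"\b" + re.escape(term) + r"\b"
        for m in re.finditer(pattern, text, flags=re.IGNORECASE):
            spans.append((m.start(), m.end(), label))
    return spans

def merge_spans(model_spans, lexicon_spans):
    """Keep all lexicon spans; keep model spans only where they do not overlap one."""
    merged = list(lexicon_spans)
    for start, end, label in model_spans:
        overlaps = any(start < l_end and l_start < end for l_start, l_end, _ in lexicon_spans)
        if not overlaps:
            merged.append((start, end, label))
    return sorted(merged)

text = "Dr. Chen prescribed metformin in Boston."
# Stand-in for a general model's predictions; the ORGANIZATION label is a made-up error.
model_spans = [(4, 8, "PERSON"), (20, 29, "ORGANIZATION"), (33, 39, "LOCATION")]

combined = merge_spans(model_spans, find_lexicon_spans(text, DOMAIN_LEXICON))
for start, end, label in combined:
    print(text[start:end], "->", label)
```

Giving the lexicon priority is a design choice that suits domains where the curated list is more trustworthy than the model; the opposite priority may make sense when the lexicon is noisy.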

5. Addressing Multilingual NER Challenges

Multilingual NER requires models to recognize entities across different languages, which can present challenges due to varying syntax and grammar.

- Use language-specific models or tools like spaCy’s multilingual pipelines to handle different languages.

- Incorporate transfer learning from high-resource languages (e.g., English) to low-resource languages to improve model performance in less-studied languages.

Tools and Libraries for Named Entity Recognition (NER)

1. Popular Python Libraries

spaCy

A modern and fast library widely used in production, spaCy offers pre-trained NER models capable of recognizing entities like people, organizations, and dates. It’s designed for industrial-strength NLP tasks and is known for its ease of use and efficiency. For example, in a sentence that mentions “Barack Obama” and “Hawaii”, spaCy can identify the first as a person and the second as a location.

NLTK (Natural Language Toolkit)

One of the oldest NLP libraries, NLTK offers tools for text processing and entity recognition. Though slower compared to newer libraries like spaCy, it is comprehensive and great for educational purposes or small-scale projects. For example, NLTK’s ne_chunk function can detect named entities such as “Apple” (an organization) and “U.K.” (a location).

Stanford NER

Developed by the Stanford NLP Group, this is a Java-based library with Python wrappers available. It’s highly accurate, supports multiple languages, and is widely used in research and academic settings. For example, accessed from Python through stanza, it can identify “Elon Musk” as a person and “SpaceX” and “Tesla” as organizations.

Flair

Flair is an NLP library developed by Zalando Research. It uses deep learning models for NER and provides state-of-the-art performance by combining word embeddings, making it particularly useful for complex and large datasets. Flair recognizes “Angela Merkel” as a person, “Microsoft” as an organization, and “Seattle” as a location.

2. Cloud-based NER Services

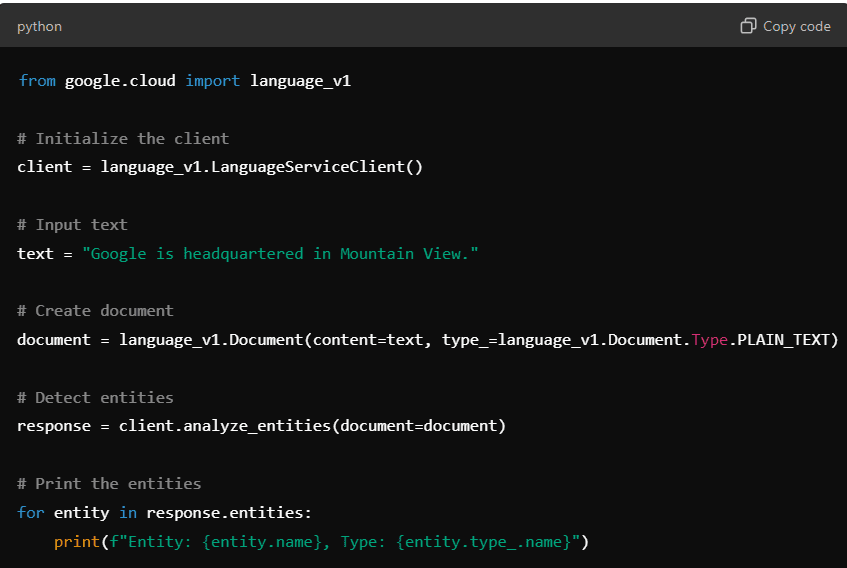

Google Cloud Natural Language API

Google’s NLP API provides pre-trained models for entity recognition that identify and classify entities like people, organizations, and locations in text. It integrates easily into Google’s cloud ecosystem, making it scalable and efficient for real-time applications. For example, when called from Python on text that mentions “Google” and “Mountain View”, the API identifies “Google” as an organization and “Mountain View” as a location.

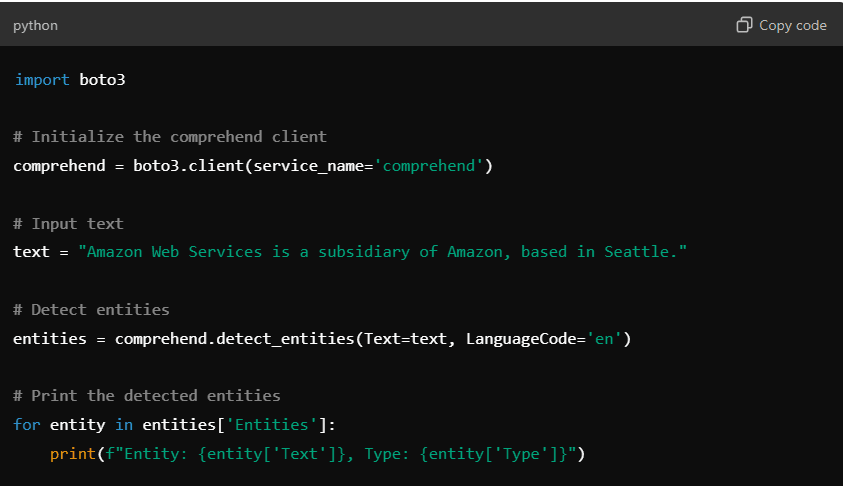

Amazon Comprehend

Amazon’s NER service, part of AWS, extracts entities such as people, places, dates, and products from unstructured text. It’s designed to work with large-scale data and integrates seamlessly with other AWS services. Amazon Comprehend recognizes “Amazon Web Services” as an organization and “Seattle” as a location.

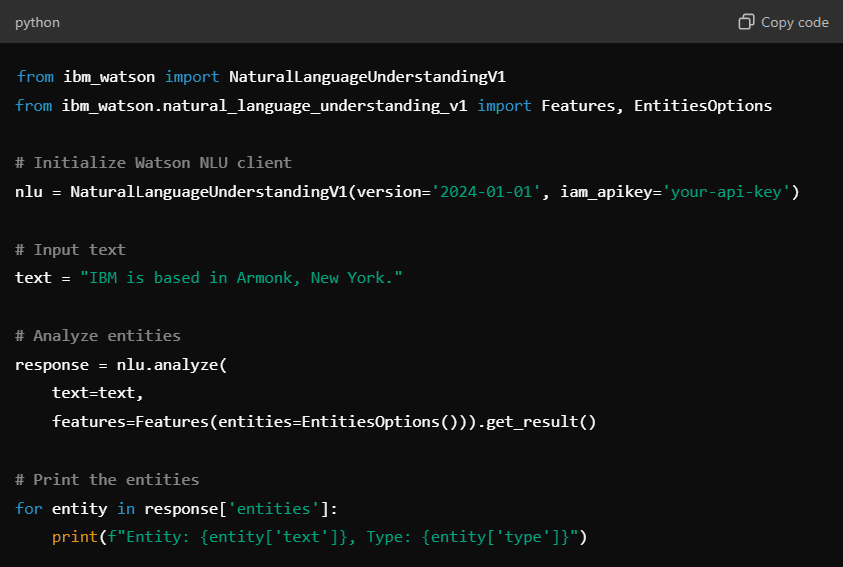

IBM Watson Natural Language Understanding

IBM’s Watson NLU service provides advanced NER capabilities, identifying a variety of entities from unstructured data. It is particularly popular in enterprise environments for business applications, including sentiment analysis and entity extraction. For example, Watson can recognize “IBM” as an organization and “Armonk, New York” as a location.

Challenges in Identifying Named Entities

1. Ambiguity

Ambiguity arises when the same word or phrase can refer to multiple different entities. For example, the word “Apple” could refer to the technology company or the fruit, depending on the context. Disambiguating such terms requires advanced techniques, as simple keyword matching can often lead to incorrect classifications. This challenge is particularly pronounced in industries like media or finance, where entities often have overlapping names.

2. Context Dependency

Entities derive meaning from the context in which they are used. For example, “Paris” could refer to the capital of France, or it could be a person’s name. The same word can mean different things depending on the sentence structure and surrounding words. Named Entity Recognition (NER) systems must account for these variations to accurately identify entities. This challenge highlights the importance of context-aware models like BERT, which consider the entire sentence structure.

3. Multilingual Considerations

Identifying named entities becomes even more complex when dealing with multilingual text. Different languages have unique syntactic rules, entity formats, and even cultural differences in naming conventions. Moreover, some languages lack standardized capitalization for proper nouns, making it harder to distinguish entities from regular words. Handling these variations requires NER systems trained in multiple languages or those capable of leveraging cross-lingual data.

Kanerika: Transforming Your Unstructured Data into Smart Business Decisions

Kanerika, a leading provider of data and AI solutions, specializes in transforming unstructured data into actionable insights using advanced techniques such as Named Entity Recognition (NER). By leveraging best-in-class tools like Microsoft Fabric and Power BI, we empower businesses to unlock the full potential of their data. Our tailored solutions are designed to address your unique business challenges, ensuring that you can derive valuable insights, improve decision-making, and drive growth.

Partnering with Kanerika offers businesses across industries—such as banking and finance, manufacturing, retail, and logistics—a strategic advantage in managing vast volumes of unstructured data. Whether you’re dealing with customer feedback, financial reports, or supply chain data, our expertise enables you to make informed, data-driven decisions. Kanerika’s advanced data processing solutions provide clarity and structure to complex datasets, helping businesses stay competitive and innovative in today’s fast-paced environment.

Let Kanerika help you turn data into your most valuable asset, providing insights that power growth and efficiency across every industry we serve.

Turn Unstructured Data into Actionable Insights with Named Entity Recognition

Partner with Kanerika Today.

Frequently Asked Questions

What is named entity recognition?

Named Entity Recognition (NER) is like teaching a computer to spot and identify important names in text. It’s all about finding things like people, places, organizations, and dates, and tagging them so the computer understands their meaning and context. This helps computers process and understand human language more effectively, going beyond just reading words to grasping the underlying concepts. Think of it as giving context to raw text data.

What is the purpose of NER?

Named Entity Recognition (NER) helps computers understand the meaning behind text by identifying and classifying key information like people, places, and organizations. It’s like giving context to words, transforming raw text into structured data. This structured data is crucial for various applications, allowing computers to better process and understand human language. Essentially, NER helps machines “read between the lines.”

What is the difference between NER and NLP?

NER (Named Entity Recognition) is a *specialized* NLP (Natural Language Processing) task. NLP is the broad field of making computers understand human language, while NER focuses specifically on identifying and classifying named entities like people, places, and organizations within text. Think of NER as a single tool within the larger NLP toolbox.

What is an example of a named entity?

A named entity is simply a real-world object that’s been given a specific name. Think of it like a label – “Barack Obama” is a named entity (person), “New York City” is another (location), and “Apple Inc.” (organization) is yet another example. Essentially, it’s any word or phrase referring to a specific individual, place, thing, or concept.

What is the use case of NER?

NER, or Named Entity Recognition, helps computers understand the context of text by identifying key pieces of information like people, places, organizations, and dates. This is crucial for applications needing to extract meaningful data from unstructured text, such as customer support chatbots understanding locations or news aggregators automatically categorizing articles. Essentially, it’s the first step towards making sense of human language for machines.

What is the difference between classification and named entity recognition?

Classification sorts data into predefined categories (e.g., spam/not spam). Named Entity Recognition (NER) is more specific; it pinpoints and classifies *entities* within text (e.g., identifying “Barack Obama” as a person). Essentially, NER is a specialized type of classification focusing on identifying meaningful pieces of information within unstructured text. Classification is broader and encompasses many other tasks.

What is the full form of NER?

NER stands for Named Entity Recognition. It’s a crucial technique in natural language processing (NLP) that identifies and classifies key information within text, like names of people, places, organizations, and dates. Think of it as teaching a computer to understand the context and meaning behind specific words and phrases. Essentially, NER helps computers “read between the lines” and extract meaningful facts.

What is named entity recognition using NLTK?

Named Entity Recognition (NER) with NLTK is like giving a computer super-powered reading comprehension. It automatically identifies and classifies key information in text, such as people, places, organizations, and dates. NLTK provides tools to build and use these “name-finding” systems, making it easier to extract structured data from unstructured text. This is crucial for tasks like information retrieval and knowledge graph creation.

What is tokenization named entity recognition?

Tokenization is the first step of a named entity recognition (NER) pipeline, which works in two stages. First, text is broken into individual words or “tokens.” Then, NER identifies and classifies key entities within those tokens (like people, places, organizations) based on context. It’s like labeling important pieces of information within a sentence to make the data easier to understand and use for applications like search engines or chatbots.

What is the difference between keyword extraction and named entity recognition?

Keyword extraction pulls out the most important words from a text, focusing on frequency and importance for general topic representation. Named Entity Recognition (NER), however, is more specific; it identifies and classifies *named* entities like people, places, and organizations, regardless of their overall text importance. Essentially, keyword extraction is broader, while NER is focused on precise entity identification. Unlike keyword extraction, NER also assigns each item it finds a type, such as person, place, or organization.

What is named entity recognition in banking?

Named Entity Recognition (NER) in banking is like giving your computer super-powered reading comprehension for financial documents. It automatically identifies and classifies key pieces of information – names, dates, account numbers, locations – extracting vital data from messy text. This speeds up processes like fraud detection, KYC/AML compliance, and customer service by automating information extraction. Essentially, it’s making sense of unstructured data to make banking smarter.

How does NER work?

Named Entity Recognition (NER) works by identifying and classifying named entities in text. It uses machine learning, often deep learning models, to pinpoint keywords and phrases representing people, places, organizations, etc. These models learn patterns in text data to recognize and categorize entities based on context and linguistic features. Essentially, it’s like a super-powered find-and-replace that understands meaning.

What is HMM for named entity recognition?

HMMs (Hidden Markov Models) in named entity recognition (NER) act like sophisticated guessers. They treat the entity tags (like person, place, or organization) as a hidden sequence of states and the words as the observations those states emit. By learning how likely each tag is to follow another and how likely each word is under each tag, an HMM can predict the most probable tag sequence for a sentence. This allows it to handle ambiguous cases more effectively than simple keyword matching.
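As a rough sketch of how this works in practice, the Viterbi algorithm is the standard way to find the most likely tag sequence under an HMM. All probabilities below are made up for illustration; a real HMM would estimate them from labeled data, and the two-tag setup is a simplification.

```python
import math

TAGS = ["O", "PER"]
# Made-up probabilities for illustration only.
START = {"O": 0.8, "PER": 0.2}                  # P(first tag)
TRANS = {"O": {"O": 0.8, "PER": 0.2},           # P(next tag | current tag)
         "PER": {"O": 0.5, "PER": 0.5}}
EMIT = {"O": {"steve": 0.01, "jobs": 0.10, "spoke": 0.30},   # P(word | tag)
        "PER": {"steve": 0.40, "jobs": 0.30, "spoke": 0.01}}
UNSEEN = 1e-6  # tiny probability for words missing from the tables

def viterbi(words):
    """Return the most probable tag sequence for a non-empty list of lowercase words."""
    def emit(tag, word):
        return math.log(EMIT[tag].get(word, UNSEEN))

    # Each column maps tag -> (best log-probability so far, previous tag on that path).
    best = [{tag: (math.log(START[tag]) + emit(tag, words[0]), None) for tag in TAGS}]
    for word in words[1:]:
        column = {}
        for tag in TAGS:
            score, prev = max((best[-1][p][0] + math.log(TRANS[p][tag]), p) for p in TAGS)
            column[tag] = (score + emit(tag, word), prev)
        best.append(column)

    # Backtrack from the best final tag.
    tag = max(TAGS, key=lambda t: best[-1][t][0])
    path = [tag]
    for column in reversed(best[1:]):
        tag = column[tag][1]
        path.append(tag)
    return path[::-1]

print(viterbi(["steve", "jobs", "spoke"]))
# ['PER', 'PER', 'O']
```

Even though “jobs” is a plausible ordinary word on its own, the transition probabilities make it more likely to continue a person name after “steve”, which is how the sequence model resolves the ambiguity.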

What are three NER approaches?

Three core Named Entity Recognition approaches are rule-based methods, machine learning models, and deep learning techniques such as recurrent neural networks and long short-term memory networks. Hybrid systems combine them. All aim to accurately identify and classify entities in unstructured data, enabling effective information extraction and text analysis for business applications like sentiment analysis and customer service automation.

What is a named entity?

A named entity refers to a specific object or concept in text, such as organizations, locations, or individuals, that can be identified and extracted using natural language processing techniques, enabling businesses to analyze and understand large volumes of unstructured data for improved decision making and information retrieval in applications like text analysis and sentiment analysis.

Which model is best for named entity recognition?

There is no single best model for named entity recognition; the right choice depends on your data, domain, and resources. Transformer-based models such as BERT, fine-tuned on labeled data, generally offer high accuracy on complex tasks, while spaCy’s pre-trained pipelines are a popular choice when speed and ease of deployment matter for identifying organizations, locations, and people in unstructured text.

Is ChatGPT an LLM or NLP?

ChatGPT is a large language model (LLM), and LLMs are themselves part of the broader field of natural language processing (NLP), so it is both. It is built on the transformer architecture, a type of neural network, to understand and respond to user input. It can perform tasks such as named entity recognition when prompted, making it a key application of NLP in language generation and text analysis for business intelligence and customer service automation.

What are the 4 types of ML?

Machine learning has four primary types: supervised, unsupervised, semi-supervised, and reinforcement learning. These categories are crucial in natural language processing techniques like named entity recognition, which helps businesses extract valuable insights from unstructured data, enabling informed decision-making and improved customer experiences through advanced text analysis and information retrieval.