Is your data keeping up with the speed and scale of your business? As businesses increasingly rely on data to make decisions, even a minor glitch in a pipeline can lead to significant problems. That’s why data observability tools are gaining serious traction. These platforms act like a health monitor for your data systems, helping teams spot issues early, trace them to the source, and keep everything running smoothly.

According to Gartner, poor data quality costs organizations an average of $12.9 million annually. These costs come from wasted resources, missed opportunities, and flawed decision-making, much of which can be avoided with better data observability.

Continue reading this blog to discover how data observability tools are transforming the way businesses manage, monitor, and trust their data.


What are Data Observability Tools?

Data observability tools are platforms that help teams monitor and understand the health of their data systems. They give a complete view of how data moves and changes—from the moment it enters your system to the point where it’s used. Unlike traditional monitoring, which checks for known issues, these tools are designed to catch unexpected problems, things you didn’t even know could go wrong. They continuously track metrics such as data freshness, volume, and structure, helping teams spot issues early and fix them quickly.

As data systems become more complex and spread across different platforms, these tools are becoming more critical. Gartner predicts that by 2026, half of enterprises with distributed data architectures will have adopted data observability tools. Why? Because they help prevent data downtime, improve trust in data, and make it easier to find and fix problems. With real-time alerts and deep insights, these tools are changing how businesses manage their data.


The Five Pillars of Data Observability

At their core, data observability tools monitor data across five key pillars:

- Freshness: How up-to-date and timely is your data? Are there unexpected delays or gaps in data arrival?

- Volume: Is the amount of data flowing through your pipelines within expected ranges? Are there sudden drops or spikes that could indicate missing or duplicate data?

- Schema: Has the structure of your data changed unexpectedly? Are there schema drifts that could break downstream applications or analyses?

- Distribution: Do the values within your data columns fall within expected statistical ranges? Are there anomalies or outliers that suggest data corruption or errors?

- Lineage: Where does your data come from, where does it go, and what transformations does it undergo along the way? Understanding data lineage is crucial for pinpointing the root cause of issues and assessing their impact.

By continuously monitoring these dimensions, data observability tools offer a comprehensive view of data health, allowing data teams to identify and address issues before they escalate and impact business outcomes.
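To make the five pillars concrete, here is a minimal Python sketch with one small check per pillar. It is an illustration only, not how any particular vendor implements monitoring. All function names, the 20% volume tolerance, and the sample table names in the usage lines are assumptions made for this example.

```python
from collections import deque
from datetime import datetime, timedelta, timezone


def is_fresh(last_loaded_at, now, max_age):
    """Freshness: the newest load must be no older than max_age."""
    return now - last_loaded_at <= max_age


def volume_in_range(row_count, expected, tolerance=0.2):
    """Volume: row count must stay within +/- tolerance of the expected count."""
    return abs(row_count - expected) <= tolerance * expected


def schema_drift(expected, actual):
    """Schema: report columns that were added, removed, or changed type.

    Both arguments map column name -> type name.
    """
    return {
        "added": sorted(set(actual) - set(expected)),
        "removed": sorted(set(expected) - set(actual)),
        "retyped": sorted(
            col for col in expected.keys() & actual.keys()
            if expected[col] != actual[col]
        ),
    }


def null_rate(values):
    """Distribution: share of missing values in a column (0.0 for an empty column)."""
    return sum(v is None for v in values) / len(values) if values else 0.0


def downstream(lineage, start):
    """Lineage: every asset reachable from `start`, found breadth-first.

    `lineage` maps an asset name -> list of assets that read from it.
    """
    seen, queue = set(), deque([start])
    while queue:
        for child in lineage.get(queue.popleft(), []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return sorted(seen)


# Hypothetical usage with made-up values.
now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
print(is_fresh(now - timedelta(hours=3), now, max_age=timedelta(hours=1)))
print(volume_in_range(row_count=700, expected=1000))
print(schema_drift({"id": "int", "price": "float"},
                   {"id": "int", "price": "str", "sku": "str"}))
print(null_rate([1, None, 3, None]))
print(downstream({"raw.orders": ["stg.orders"],
                  "stg.orders": ["mart.revenue", "mart.churn"]}, "raw.orders"))
```

Commercial tools typically learn the expected ranges from history rather than taking fixed thresholds, but the underlying questions are the same as in these checks.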

Benefits of Data Observability Tools

The adoption of data observability tools delivers a myriad of benefits that are critical for any data-intensive organization:

- Improved Data Quality and Trust: Data observability tools catch problems early, such as missing values or errors, so teams can resolve them before they impact reports or decisions. With clean and consistent data, people throughout the organization feel more comfortable using it.

- Faster Troubleshooting and Reduced Downtime: Data incidents can be costly, leading to misguided decisions, operational disruptions, and revenue loss. Observability tools enable swift identification of the root cause of data problems through automated anomaly detection, real-time alerts, and detailed lineage, significantly reducing Mean Time to Detection (MTTD) and Mean Time to Resolution (MTTR).

- Enhanced Collaboration: By providing a shared, transparent view of data health, data observability fosters better collaboration between data engineers, data analysts, data scientists, and business stakeholders. Everyone can quickly understand the status of critical datasets and work together to resolve issues.

- Increased Operational Efficiency: Automation of monitoring, alerting, and even aspects of root cause analysis reduces the manual effort required to maintain data health. This frees up valuable time for data teams to focus on higher-value activities like building new data products and deriving insights.

- Proactive Issue Prevention: Beyond reacting to problems, advanced data observability tools use machine learning to learn normal data patterns and predict potential issues before they fully materialize. This allows for proactive intervention, preventing costly data downtime.

- Better Decision-Making: With consistent access to accurate and timely data, organizations can make more informed, data-driven decisions that lead to improved business performance, customer satisfaction, and competitive advantage.

- Stronger Data Governance and Compliance: Data observability provides the visibility needed to enforce data governance policies and track data flows for compliance with regulations like GDPR or CCPA.


Top 10 Data Observability Tools in 2025

The data observability landscape is rapidly evolving, with a growing number of powerful tools catering to diverse organizational needs. Here are 10 leading data observability tools for 2025, along with their key features:

1. SYNQ

SYNQ is an AI-powered data observability platform. It offers a deep, customizable dbt and SQLMesh integration that eliminates duplicate alerts and links anomalies and test failures to the exact root cause. It provides a comprehensive 360-degree overview of your data’s health. At the center of SYNQ is Scout, an autonomous, always-on data quality AI agent. Scout proactively monitors data products, recommends what and where to test, performs root-cause analysis, and fixes issues. It connects lineage, issue history, and contextual data to help teams fix problems faster.

Key Features:

- Data product–centric observability (dashboards, metrics, ML outputs).

- AI agent (Scout) for automated test recommendations, anomaly detection, and guided resolution.

- Deep native integrations with dbt and SQLMesh.

- End-to-end lineage for root cause analysis.

- Built-in incident management workflows with clear ownership.

Ideal use case:

Ideal for analytics and data engineering teams building on dbt or SQLMesh who want observability natively integrated into their transformation workflows. The AI agent reduces the heavy lifting in testing, monitoring, and incident resolution, making data reliability easier and faster to achieve.

2. Monte Carlo

Monte Carlo is considered the gold standard in the data observability space. It introduced the concept of “data downtime” (periods when data is inaccurate or missing) and built an automated solution to prevent it. Its AI-powered approach continuously monitors data for freshness, volume anomalies, and schema changes.

Monte Carlo integrates with popular tools like Snowflake, BigQuery, dbt, Airflow, and Looker, offering a seamless plug-and-play experience. It’s ideal for data teams who need reliable monitoring without extensive setup or manual configurations.

Key Features:

- Automated anomaly detection across tables, dashboards, and pipelines.

- End-to-end lineage to trace root causes of issues.

- Freshness, volume, distribution, and schema monitoring.

- Real-time alerts through Slack, Teams, and email.

- Supports cloud data platforms (Snowflake, Redshift, Databricks, etc.).

Ideal use case:

Monitoring complex, enterprise-scale data pipelines that require automated anomaly detection, end-to-end lineage, and minimal manual setup.

3. Acceldata

Acceldata offers multidimensional data observability that covers a wide range of data sources and targets. It goes beyond monitoring data quality to provide operational and financial visibility into your data infrastructure. Built for large, complex systems, it is relied upon by enterprises running hybrid and multi-cloud operations. Acceldata is best known for its strengths in data performance, reliability, and spend optimization, serving both engineering and finance teams.

Key Features:

- End-to-end visibility into data pipelines, compute resources, and reliability metrics.

- Anomaly detection, drift monitoring, and schema validation.

- Cloud cost and performance insights.

- Supports Spark, Airflow, Hadoop, and major data warehouses.

Ideal use case:

Managing hybrid or multi-cloud data environments where observability needs to cover data quality, pipeline performance, and infrastructure cost optimization.

4. Databand (by IBM)

Databand, now a part of IBM, focuses on observability for data engineering workflows. It is highly compatible with modern orchestration and transformation tools like Apache Airflow, Spark, and dbt. Databand helps teams detect and resolve pipeline failures, bottlenecks, and delays before they impact analytics or production applications.

It’s best suited for organizations running complex pipelines that demand high availability and precision timing.

Key Features:

- Monitors job execution, latency, and volume discrepancies.

- Alerting for failed or delayed DAGs.

- Metadata integration for lineage and trend analysis.

- Supports on-prem, cloud-native, and hybrid environments.

Ideal use case:

Observing orchestration-heavy data workflows (e.g., Airflow or Spark) where early detection of job delays or failures can prevent downstream reporting or ML errors.

5. Metaplane

Metaplane markets itself as the “Datadog for your data stack.” It is a lightweight, developer-focused platform that surfaces anomalies across the layers of your data pipeline without overwhelming users with alerts. It is simple to install and configure, making it a good fit for small or mid-sized teams.

The platform uses historical trends to detect anomalies in data freshness, null rates, and distribution, which is particularly useful for teams that manage their data with tools like dbt, Snowflake, and Redshift.

Key Features:

- AI monitoring for tables, columns, and metrics.

- Pre-built integration with tools like dbt, Airflow, Looker, and others.

- Intuitive dashboards and root cause analysis.

- Simple setup via CLI and minimal config.

Ideal use case:

Lightweight, fast-to-deploy observability for modern analytics teams using tools like dbt, Snowflake, and Looker who need intelligent alerts without alert fatigue.

6. Bigeye

Bigeye helps data teams create and enforce SLAs on data quality. Built by former Uber data engineers, it empowers users to create monitors on data health metrics and receive alerts when something breaks. With a strong visual interface and powerful customization, Bigeye is an excellent choice for companies looking to scale quality across multiple teams.

Key Features:

- Custom data quality metrics and anomaly detection.

- Alerting thresholds and SLA enforcement.

- Dashboard with historical trends and performance benchmarks.

- Seamless integrations with major data warehouses and BI tools.

Ideal use case:

Organizations looking to enforce and monitor custom data SLAs at scale while providing teams with visual dashboards and flexible metric-based monitoring.

7. Datafold

Datafold is the go-to tool for data diffing, which allows teams to compare data across environments, such as staging vs production. This is especially valuable in modern analytics teams where frequent deployments of dbt models or transformation logic can introduce bugs.

Datafold ensures that code changes don’t unintentionally affect the data that flows into reports, dashboards, and ML models.

Key Features:

- Column-level data diffing between tables.

- Code impact analysis and visual lineage.

- Git-integrated deployment previews for dbt projects.

- Ideal for data QA and CI/CD practices.

Ideal use case:

Data engineering teams that frequently deploy dbt or SQL transformations and need data diffing and deployment previews to prevent production issues.
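The idea behind data diffing can be sketched in a few lines of Python. This is a simplified illustration of the concept, not Datafold's implementation or API. It assumes both tables fit in memory as lists of row dictionaries and share a primary key column; the function name `diff_tables` and the sample rows are made up for the example.

```python
def diff_tables(staging, production, key):
    """Compare two tables (lists of row dicts) by primary key, column by column."""
    stg = {row[key]: row for row in staging}
    prod = {row[key]: row for row in production}

    changed = {}
    for k in stg.keys() & prod.keys():
        # Columns whose values differ for a row present in both tables.
        cols = sorted(
            col for col in stg[k].keys() | prod[k].keys()
            if stg[k].get(col) != prod[k].get(col)
        )
        if cols:
            changed[k] = cols

    return {
        "only_in_staging": sorted(stg.keys() - prod.keys()),
        "only_in_production": sorted(prod.keys() - stg.keys()),
        "changed": changed,
    }


# Hypothetical usage: row 2 has a different price, rows 3 and 4 exist on one side only.
staging = [{"id": 1, "price": 10}, {"id": 2, "price": 20}, {"id": 3, "price": 30}]
production = [{"id": 1, "price": 10}, {"id": 2, "price": 25}, {"id": 4, "price": 40}]
print(diff_tables(staging, production, key="id"))
```

Running a comparison like this against staging before merging a change is what lets a team see which rows and columns a code change would alter in production.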

8. Dynatrace

Though traditionally known for application performance monitoring, Dynatrace has expanded into full-stack observability, including data pipelines. Its AI engine, Davis, helps detect anomalies across infrastructure, applications, and data flow, offering a single pane of glass for all observability needs.

Organizations already using Dynatrace for monitoring cloud-native applications will benefit from adding data observability without needing a separate tool.

Key Features:

- Full-stack AI-powered observability.

- Automatic dependency mapping and root cause detection.

- Monitoring for cloud-native, Kubernetes, and serverless environments.

- End-to-end traces from frontend to backend data systems.

Ideal use case:

Enterprises seeking unified observability across applications, infrastructure, and data layers—especially in cloud-native or Kubernetes environments.

9. Datadog

Datadog is a widely used observability platform that is now extending its monitoring to data pipelines and workflows. Its strength lies in its ecosystem, which integrates with hundreds of tools across cloud services, infrastructure, and applications. This makes it easy to correlate data issues with upstream system behavior.

For organizations that already use Datadog for infrastructure and APM, adding observability at the data layer is simple.

Key Features:

- Dashboards for data job runtimes, errors, and throughput.

- Real-time alerts and anomaly detection.

- Built-in integrations with cloud providers (such as AWS, GCP, and Azure).

- Unified view for logs, metrics, traces, and data workflows.

Ideal use case:

Teams that are already using Datadog for infrastructure or application monitoring and would like to gain better visibility into data workflows (and pipelines), all in the same platform.

10. Elastic Observability (ELK Stack)

Elastic Observability, built on the popular ELK Stack (Elasticsearch, Logstash, Kibana), provides real-time insights across your data pipelines. It collects logs, metrics, and traces, and helps visualize and alert on system behavior. Thanks to its flexibility and open-source nature, it is popular with DevOps, data engineering, and MLOps teams that need complete control over their observability configuration. It is well-suited for log-heavy systems, distributed pipelines, and custom activity monitors.

Key Features:

- Real-time ingestion and visualization of logs, metrics, and APM traces.

- Customizable dashboards in Kibana.

- Supports alerting and anomaly detection via Machine Learning.

- Open-source flexibility with powerful community and plugin support.

Ideal use case:

Engineering teams who want a highly customizable, open-source observability solution for ingesting, analyzing, and visualizing logs, metrics, and traces across distributed systems.

Data Observability Tools in 2025: Quick Comparison

| Tool | Key Features | Ideal For |
| --- | --- | --- |
| SYNQ | AI agent (Scout), native dbt and SQLMesh integrations, end-to-end lineage. | Analytics and data engineering teams building on dbt or SQLMesh. |
| Monte Carlo | Automated anomaly detection, end-to-end lineage, freshness and schema monitoring. | Enterprises with complex pipelines that want minimal manual setup. |
| Acceldata | Performance, reliability & cost insights for data pipelines. | Big data, hybrid cloud environments. |
| Databand (IBM) | Real-time alerts, cross-pipeline visibility, lineage tracking. | Teams running orchestration-heavy workflows (Airflow, Spark). |
| Metaplane | Schema alerts, dbt monitoring, CI/CD integration. | Fast-growing teams seeking an easy setup. |
| Bigeye | Custom data quality checks, SQL-based metrics. | Organizations enforcing data SLAs across teams. |
| Soda | Code-first testing, open-source (Soda SQL). | Dev teams that prefer a data-as-code approach. |
| Datafold | Column-level data diffing, code impact analysis, dbt deployment previews. | CI/CD-focused data engineering teams. |
| Dynatrace | AI-based full-stack anomaly detection. | Enterprises seeking automated insights. |
| Datadog | Pipeline + infrastructure monitoring in one platform. | Teams wanting unified observability. |
| Elastic (ELK) | Custom log & trace monitoring via Elasticsearch. | Teams with existing ELK stack use. |


Use Cases Across Industries

Financial Services

Challenges:

- Financial institutions operate in highly regulated environments that depend on the accuracy of data to model risks, detect fraud, or submit compliance reports.

- Data pipelines are frequently spread across various systems — trading platforms, customer databases, and regulatory feeds among them — meaning there is ample opportunity for latency, schema drift, and silent failures to occur.

- A single data issue can lead to incorrect credit scoring, missed fraud alerts, or non-compliance penalties.

Impact of Data Observability:

- Enables real-time monitoring of transactional and market data pipelines.

- Detects anomalies in data volume, schema changes, and freshness delays.

- Offers lineage tracking to help you pinpoint the source of problems and understand downstream implications.

Example:

J.P. Morgan uses Monte Carlo to monitor its credit risk and trading data pipelines. When a schema change occurred in a key data source feeding its risk models, Monte Carlo flagged the issue before it reached production dashboards. This proactive alerting helped the bank avoid a potential compliance breach and reduced incident resolution time by over 60%.

Healthcare

Challenges:

- Healthcare organizations manage sensitive patient data, clinical trial records, and IoT device outputs—all of which must be accurate, timely, and compliant with regulations like HIPAA.

- Data inconsistencies can compromise patient safety, delay drug development, and lead to legal liabilities.

- Integrating data from diverse sources (EHRs, lab systems, wearables) adds complexity and risk.

Impact of Data Observability:

- Monitors data pipelines for schema drift, missing values, and latency.

- Ensures completeness and accuracy of clinical and operational datasets.

- Supports compliance by maintaining audit-ready lineage and quality metrics.

Example:

Roche adopted Acceldata to manage data ingestion from clinical systems and IoT medical equipment. When Acceldata flagged a delay in patient vitals data from wearables, for instance, the alert enabled the data engineering team to remediate the issue before studies were affected. This observability setup improved data integrity and helped speed up drug development.

Retail & E-commerce

Challenges:

- Retail platforms depend on real-time data for inventory management, dynamic pricing, personalized marketing, and customer experience.

- Broken product feeds, stale pricing data, or inconsistent customer behavior metrics can lead to lost sales and poor user satisfaction.

- Data flows from suppliers, warehouses, and digital storefronts must be synchronized and monitored continuously.

Impact of Data Observability:

- Tracks the freshness and accuracy of product, pricing, and inventory data.

- Detects anomalies in customer behavior metrics and sales trends.

- Ensures consistency across multiple channels and systems.

Example:

Instacart uses Bigeye to monitor its product catalog and pricing pipelines. During a major promotional campaign, Bigeye detected a drop in data freshness for pricing updates from a key supplier. As a result, Instacart was able to take swift action and fix the issue before it impacted customers, safeguarding revenue and trust.

Manufacturing

Challenges:

- Modern manufacturing relies on sensor data from machines for predictive maintenance, quality control, and operational efficiency.

- Data latency, schema mismatches, or anomalies in sensor readings can lead to equipment failures, production delays, and compliance issues.

- Integrating data from thousands of IoT devices across facilities adds complexity.

Impact of Data Observability:

- Monitors real-time sensor data for anomalies and schema drift.

- Ensures timely and accurate data for predictive analytics and reporting.

- Supports compliance by maintaining traceable data lineage.

Example:

Bosch deployed Telmai across its smart factories to monitor sensor data from assembly lines. When a schema change occurred in temperature sensor readings, Telmai flagged the issue before it disrupted predictive maintenance models. This observability setup helped Bosch avoid a potential production halt and saved millions in downtime costs.

Media & Entertainment

Challenges:

- Streaming platforms rely heavily on real-time user behavior data to power recommendation engines, personalize content, and optimize ad targeting.

- Any delay, inconsistency, or anomaly in data pipelines can lead to irrelevant content suggestions, poor user experience, and lost advertising revenue.

- Keeping data fresh and accurate across an ever-expanding range of devices is an ongoing challenge, especially when millions of users are interacting with your service.

Impact of Data Observability:

- Enables continuous monitoring of user engagement metrics such as watch time, click-through rates, and content preferences.

- Detects anomalies in content consumption patterns, such as sudden drops in viewership or unexpected spikes in certain regions.

- Ensures data freshness so that personalization algorithms and ad engines operate on the most current data available.

Example:

Rakuten Viki uses Rakuten SixthSense to monitor data pipelines powering its recommendation engine. When a delay was detected in user interaction data, the platform triggered an alert, allowing engineers to fix the issue before it affected users. This improved recommendation accuracy by 35% and boosted engagement.

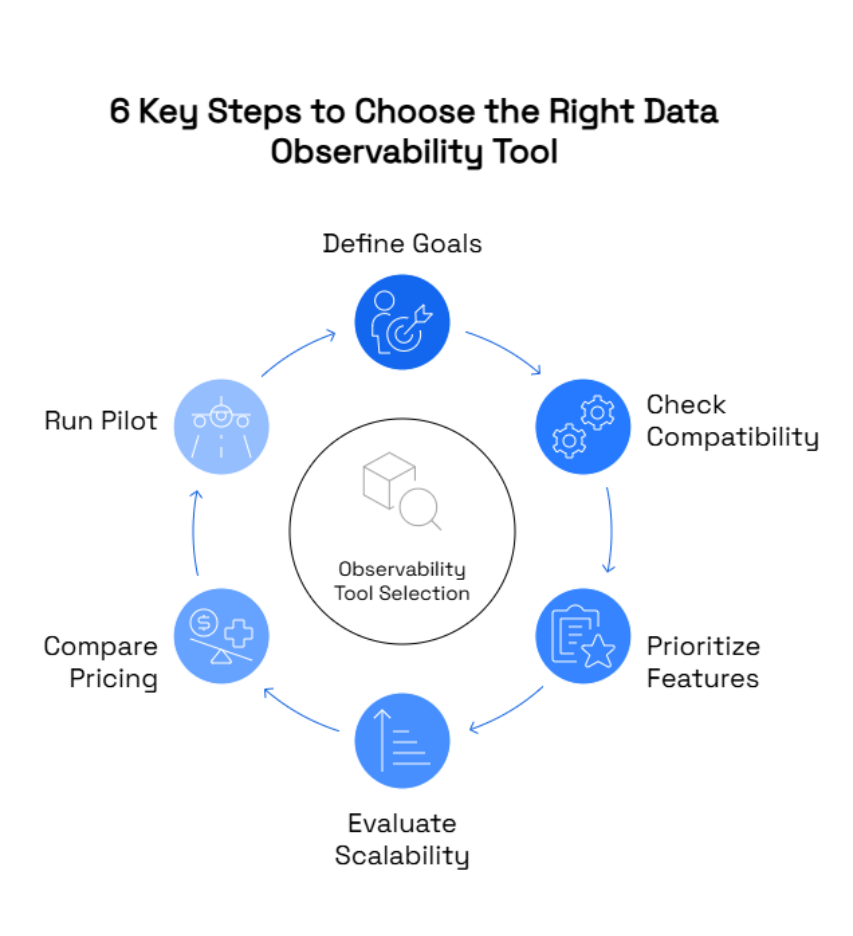

How to Choose the Right Data Observability Tool

Choosing the right data observability tool is not just a technical decision—it’s a strategic investment in your organization’s data reliability, operational efficiency, and business agility. Here are six essential considerations to guide your selection:

1. Define Your Objectives Clearly

Before evaluating tools, clarify what you want to achieve with data observability. Common objectives include:

- Reducing data downtime in dashboards and reports

- Improving data quality for analytics and AI models

- Ensuring compliance with regulations like GDPR, HIPAA, or SOX

- Accelerating root cause analysis for pipeline failures

- Enhancing collaboration between data engineering and business teams

For example, a retail company may prioritize freshness and accuracy of pricing data, while a healthcare provider may focus on schema consistency and lineage for compliance.

2. Evaluate Compatibility with Your Data Stack

A tool’s ability to integrate with your existing infrastructure is critical. Check for native support or easy API integration with:

- Data Warehouses: Snowflake, BigQuery, Redshift

- ETL/ELT Tools: dbt, Airflow, Fivetran, Informatica

- Data Lakes: Databricks, AWS S3, Azure Data Lake

- BI Platforms: Tableau, Power BI, Looker

- Orchestration and Processing Frameworks: Spark, Dagster, Prefect

For instance, if your team uses dbt for transformations and Snowflake for storage, tools like Soda or Monte Carlo offer seamless integration and faster setup.

3. Prioritize Essential Features

Not all observability tools offer the same depth. Focus on features that align with your use cases:

- Anomaly Detection: ML-powered or rule-based alerts for volume, schema, and freshness issues

- Data Lineage: Visual tracking of data flow across systems to trace root causes

- Custom Metrics & SLAs: Define thresholds for business-critical data assets

- Alerting & Incident Management: Real-time notifications via Slack, email, or PagerDuty

- Historical Trends & Reporting: Analyze long-term data health and performance

For example, Bigeye allows teams to define custom SLAs and monitor them with SQL-based metrics, making it a good fit for teams that want to express data quality checks in SQL.
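To show the difference between statistical and rule-based alerting, the following Python sketch pairs a simple z-score anomaly check with a fixed-threshold SLA check. It is a rough illustration, not the detection logic of any tool named here. The function names, the default threshold of 3 standard deviations, and the metric names are assumptions chosen for the example.

```python
from statistics import mean, stdev


def zscore_anomaly(history, latest, threshold=3.0):
    """Flag `latest` if it lies more than `threshold` standard deviations
    from the mean of the historical values."""
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu  # any change from a constant history is unusual
    return abs(latest - mu) / sigma > threshold


def sla_breaches(metrics, slas):
    """Rule-based check: return the names of metrics that exceed their SLA limit.

    Metrics missing from `metrics` are treated as 0, so they never count as a breach.
    """
    return sorted(
        name for name, limit in slas.items()
        if metrics.get(name, 0) > limit
    )


# Hypothetical usage: daily row counts, then today's quality metrics against SLA limits.
daily_rows = [1000, 1020, 980, 1010, 990]
print(zscore_anomaly(daily_rows, latest=400))
print(zscore_anomaly(daily_rows, latest=1005))
print(sla_breaches({"null_rate": 0.07, "freshness_minutes": 30},
                   {"null_rate": 0.05, "freshness_minutes": 60}))
```

The statistical check adapts to each dataset's own history, while the SLA check encodes an explicit business commitment. Many teams use both side by side.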

4. Assess Scalability and Performance

Your observability tool should grow with your data ecosystem. Consider:

- Volume Handling: Can it monitor millions of rows or hundreds of pipelines?

- Real-Time Monitoring: Does it support streaming data or only batch processing?

- Multi-Cloud Support: Is it compatible with AWS, Azure, GCP, or hybrid environments?

Enterprises like Roche and J.P. Morgan use tools like Acceldata and Monte Carlo because they scale across global operations and complex data architectures.

5. Consider Pricing and Vendor Support

Pricing models vary widely:

- Usage-Based: Pay per data volume or number of monitored assets

- Flat-Rate Licensing: Fixed cost for enterprise-wide deployment

- Open-Source Options: Free to use but may require internal support

Also, evaluate vendor support:

- Onboarding and training resources

- Dedicated customer success teams

- Active user communities and forums

- Transparent product roadmaps

For example, Metaplane is known for its fast setup and responsive support, making it ideal for startups and agile teams.

6. Run a Pilot and Align with Governance

Before full deployment, test the tool on a critical pipeline:

- Monitor a high-impact dashboard or data feed

- Set up alerts and SLAs

- Measure improvements in issue detection and resolution time

Also, ensure the tool supports governance needs:

- Audit Trails: For compliance and traceability

- Data Masking & Encryption: For sensitive data

- Policy Integration: With platforms like Collibra or Alation

Involving stakeholders such as data engineers, analysts, and compliance officers during the pilot ensures alignment across technical and business goals.
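One way to measure improvements in detection and resolution time during a pilot is to log each incident's timestamps and compute Mean Time to Detection (MTTD) and Mean Time to Resolution (MTTR). The Python sketch below assumes MTTD runs from when an issue occurred to when it was detected, and MTTR from detection to resolution. Definitions vary between teams, and the field names and sample incidents are hypothetical.

```python
from datetime import datetime, timedelta


def mean_delta(pairs):
    """Average gap between (start, end) timestamp pairs."""
    gaps = [end - start for start, end in pairs]
    if not gaps:
        raise ValueError("at least one incident is required")
    return sum(gaps, timedelta()) / len(gaps)


def mttd_mttr(incidents):
    """Return (MTTD, MTTR) for incidents with 'occurred', 'detected',
    and 'resolved' datetimes."""
    mttd = mean_delta([(i["occurred"], i["detected"]) for i in incidents])
    mttr = mean_delta([(i["detected"], i["resolved"]) for i in incidents])
    return mttd, mttr


# Hypothetical incident log from a pilot.
incidents = [
    {"occurred": datetime(2025, 3, 1, 9, 0),
     "detected": datetime(2025, 3, 1, 9, 30),
     "resolved": datetime(2025, 3, 1, 11, 30)},
    {"occurred": datetime(2025, 3, 2, 14, 0),
     "detected": datetime(2025, 3, 2, 14, 10),
     "resolved": datetime(2025, 3, 2, 14, 40)},
]
mttd, mttr = mttd_mttr(incidents)
print("MTTD:", mttd)
print("MTTR:", mttr)
```

Comparing these two numbers before and after the pilot gives stakeholders a simple, shared measure of whether the tool is paying off.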

Kanerika’s Approach to Data Observability

At Kanerika, we know that seamless and accurate data is the foundation of effective digital transformation. Our approach to data observability is grounded in a deep understanding of enterprise data ecosystems and a commitment to delivering real business value.

We believe data observability is not just about preventing failure, but about driving an organization to the full potential of its assets. We work with businesses to:

- Adopt a Holistic Approach: Employ the top-ranked data observability platforms and strategies to develop best-in-class monitoring, alerting, and lineage functionalities for your data pipelines.

- Foster a Culture of Trust: Work to cultivate an environment where data quality and trustworthiness are owned across the company, with every team confident in their source of truth.

- Drive Business Results: Turn technical findings from data observability into actions for cost savings, efficiency gains, faster decision making, and stronger compliance.

Our expertise in data, AI, and automation allows us to integrate data observability seamlessly into your broader data strategy, so that your data is a strategic asset rather than a liability.


FAQs

1. Which is the best observability tool?

The best data observability tool depends on your organization’s specific requirements and scale. Monte Carlo, Datadog, and Acceldata are top-rated for enterprises, while Metaplane and Bigeye are great for agile teams.

2. What is data observability?

Data observability is the ability to monitor, detect, and resolve data issues across pipelines using automated tools to ensure data reliability and trust.

3. What are the types of observability data?

The main types are:

1. Metrics – quantitative measurements

2. Logs – system-generated records

3. Traces – request flows across systems

4. Metadata – schema, lineage, and quality info

4. Which is better, Dynatrace or Datadog?

Dynatrace excels in AI-powered root cause analysis and full-stack monitoring. Datadog is more flexible and integrates well with diverse tools. The choice depends on your tech stack and use case.

5. How do you build data observability?

Start by setting clear goals, then implement monitoring tools, define SLAs, integrate with your data stack, and continuously track anomalies and lineage.