Virtual Large Language Models (vLLMs) are emerging as a critical solution amid the explosive growth of large language models—and the tightening need for scalable, cost-efficient inference. As of 2025, 67% of organizations worldwide have adopted generative AI relying on LLMs, reflecting how indispensable this technology has become. However, deploying traditional LLMs isn’t easy—they demand massive GPU memory, suffer latency issues with long prompts, and rack up high operational costs.

That’s where vLLMs step in, offering a smarter architecture to manage memory, serve multiple queries efficiently, and scale across clusters without inflating infrastructure costs. This blog provides a detailed guide on vLLMs—exploring their architecture, core benefits, real-world use cases, and the future of scalable LLM serving. Whether you’re a developer, technical lead, or product manager, this post will equip you to navigate the next frontier in AI deployment.

What is a Virtual Large Language Model?

A Virtual Large Language Model (Virtual LLM or vLLM) refers to an optimized way of serving and running large language models that emphasizes efficiency, scalability, and memory management. Unlike traditional LLM deployments, which can be resource-heavy and inefficient when handling long prompts or concurrent requests, a Virtual LLM uses specialized techniques to maximize hardware utilization while reducing latency.

The key difference lies in how Virtual LLMs manage memory and inference workloads. Standard LLM serving typically reserves one contiguous region of GPU memory for each request’s attention key/value (KV) cache, sized for the longest possible output, which quickly becomes a bottleneck for long-context queries or batch processing. Virtual LLMs address this with PagedAttention, a memory management scheme that treats the KV cache like operating-system virtual memory: the cache is split into small fixed-size pages that are allocated only as tokens are generated and need not sit next to each other. This lets the same GPU handle longer inputs and larger batch sizes without exhausting hardware resources.

In practice, Virtual LLMs make scaling AI inference more cost-effective and accessible, especially for production environments where thousands of requests need to be served simultaneously. They also integrate well with distributed systems, APIs, and existing ML frameworks, making them a natural fit for SaaS products and enterprise applications.

One of the most well-known examples is vLLM, an open-source library that implements PagedAttention to deliver high-throughput, low-latency inference for models like LLaMA, Mistral, and GPT-style architectures. By hiding the details of GPU memory management, it lets developers deploy large language models more effectively in real-world scenarios.

Why Traditional LLM Serving Faces Challenges

While large language models (LLMs) are powerful, serving them in production environments poses serious challenges for businesses and developers.

1. High GPU Memory Requirements

LLMs contain billions of parameters, which require massive GPU memory for inference. Even high-end hardware struggles to handle large models efficiently, especially when multiple requests come in simultaneously.
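To make the memory pressure concrete, here is a rough back-of-the-envelope sketch of how much GPU memory the attention key/value cache alone can take up during inference. The model dimensions, context length, and request count are illustrative assumptions (roughly a 7B-class decoder in 16-bit precision), not figures from this post.

```python
def kv_cache_bytes_per_token(num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """Bytes of key/value cache one token occupies across all layers."""
    # Factor 2: one key vector and one value vector per layer and KV head.
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value


# Illustrative (assumed) dimensions for a 7B-class decoder in fp16.
per_token = kv_cache_bytes_per_token(num_layers=32, num_kv_heads=32, head_dim=128)

context_len = 4096        # tokens held per request (assumed)
concurrent_requests = 16  # requests in flight at once (assumed)

per_request_gib = per_token * context_len / 2**30
total_gib = per_request_gib * concurrent_requests

print(f"{per_token / 2**20:.2f} MiB per token")
print(f"{per_request_gib:.1f} GiB per {context_len}-token request")
print(f"{total_gib:.1f} GiB for {concurrent_requests} concurrent requests")
```

Under these assumptions the cache for 16 full-length requests needs 32 GiB on top of the model weights, which is why cache management matters as much as model size.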

2. Latency in Long-Context Processing

When dealing with extended prompts or large context windows, traditional LLM serving engines often experience latency spikes. This delay makes it difficult to deliver real-time, conversational responses.

3. High Inference Costs in Production

Running LLMs continuously is expensive. Without optimization, GPU utilization is inefficient, leading to inflated cloud costs—especially for SaaS businesses serving thousands of users.

4. Difficulty in Scaling for Multi-Tenant Environments

In enterprise settings, one model often serves multiple teams or clients. Traditional LLM serving engines struggle with multi-tenancy, making it hard to balance workloads and ensure fair resource allocation.

5. Limited Efficiency with Large Batches

Batching queries is a common optimization strategy, but most traditional serving frameworks fail to manage large batches efficiently. This results in wasted compute cycles and inconsistent performance under heavy traffic.

Core Features of Virtual LLMs

Virtual Large Language Models (vLLMs) are designed to overcome the performance, scalability, and cost bottlenecks that plague traditional LLM serving frameworks. Below are the core features that make vLLMs stand out.

1. PagedAttention Mechanism: Efficient Memory Management

One of the biggest innovations in vLLMs is PagedAttention, which manages the GPU memory used for attention state much as an operating system manages virtual memory. Instead of reserving one large contiguous region per request, PagedAttention splits the attention key/value cache into fixed-size pages and allocates them on demand as tokens are generated. This cuts the memory lost to fragmentation and over-reservation, so the same GPU can hold longer contexts and more concurrent requests before running out of memory.
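A small simulation can show why paging helps. The sketch below compares a naive allocator that reserves the maximum sequence length for every request with a paged allocator that hands out fixed-size pages on demand. The page size, maximum length, and request lengths are made-up values chosen for illustration.

```python
import math

BLOCK_SIZE = 16      # tokens per page (assumed value for illustration)
MAX_SEQ_LEN = 2048   # slots a naive allocator reserves per request (assumed)


def contiguous_slots(actual_lengths):
    """Naive scheme: reserve the maximum length for every request up front."""
    return MAX_SEQ_LEN * len(actual_lengths)


def paged_slots(actual_lengths):
    """Paged scheme: allocate only as many fixed-size pages as each request uses."""
    return sum(math.ceil(n / BLOCK_SIZE) * BLOCK_SIZE for n in actual_lengths)


lengths = [37, 512, 120, 1800, 64]  # tokens actually used per request (made up)
used = sum(lengths)

for name, reserved in [("contiguous", contiguous_slots(lengths)),
                       ("paged", paged_slots(lengths))]:
    waste = 1 - used / reserved
    print(f"{name:>10}: reserved={reserved:5d} slots, wasted={waste:.1%}")
```

With paging, the only waste is the unfilled tail of each request's last page, so it is bounded by one page per request regardless of how long the request could have grown.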

2. Throughput Optimization: Serving Multiple Queries Simultaneously

vLLMs are optimized to serve multiple concurrent queries efficiently. Traditional serving frameworks often process requests sequentially, leading to idle GPU cycles. In contrast, vLLMs batch and schedule queries intelligently, maximizing GPU utilization and improving overall throughput.
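The difference between sequential serving and batched scheduling can be sketched with a toy step counter. This is a simplified model, assuming every decoding step costs the same no matter how many requests share it; the function names and output lengths are hypothetical.

```python
from collections import deque


def sequential_steps(output_lengths):
    """One request at a time: every generated token costs one model step."""
    return sum(output_lengths)


def continuous_batching_steps(output_lengths, max_batch):
    """Each step decodes one token for up to max_batch requests; a finished
    request frees its slot immediately for the next one in the queue."""
    waiting = deque(output_lengths)
    running = []  # tokens still to generate for each active request
    steps = 0
    while waiting or running:
        while waiting and len(running) < max_batch:
            running.append(waiting.popleft())
        # Decode one token per active request; drop requests that just finished.
        running = [left - 1 for left in running if left > 1]
        steps += 1
    return steps


lengths = [5, 3, 8, 2, 6, 4]  # tokens to generate per request (made up)
print("sequential:", sequential_steps(lengths))
print("continuous batching:", continuous_batching_steps(lengths, max_batch=3))
```

In this toy run the batched scheduler finishes in 11 steps instead of 28, because slots freed by short requests are refilled right away rather than sitting idle.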

3. Long-Context Handling: Better Support for Large Prompts

Modern LLM applications often require long context windows—for example, analyzing research papers or supporting multi-turn conversations. vLLMs are designed to manage these contexts smoothly, avoiding the latency and memory issues that traditional serving systems struggle with.

4. Distributed Inference: Scaling Across Clusters

To support enterprise-scale deployments, vLLMs enable distributed inference across multiple GPUs and nodes. This horizontal scaling ensures that large workloads can be spread across clusters, improving reliability and response time while keeping costs predictable.

5. Compatibility with Popular Ecosystems

Virtual LLMs are built to integrate easily with popular AI ecosystems. They support Hugging Face models, OpenAI-compatible APIs, Ray Serve, and other inference frameworks. This compatibility lowers the barrier to adoption and allows teams to plug vLLMs into existing AI pipelines without major rewrites.

6. Performance Improvements in Practice

In benchmark tests, vLLMs consistently outperform traditional serving engines. For example, a single GPU running vLLM can deliver 2–4x higher throughput while maintaining lower latency, even under long-context workloads. This means SaaS companies can serve more users at lower cost without compromising response speed.

How Virtual LLMs Work: Step-by-Step Process

- Request Ingest

A client sends a prompt via an OpenAI-compatible REST/gRPC API (with params like max_tokens, temperature, top_p).
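As a minimal sketch of what such a request can look like, the snippet below builds (without sending) a completion request using only the Python standard library. The server address and model name are placeholders assumed for illustration; the JSON fields follow the OpenAI completions format mentioned above.

```python
import json
import urllib.request

# Assumed values: a locally running OpenAI-compatible server and a placeholder model name.
BASE_URL = "http://localhost:8000/v1"
MODEL_NAME = "my-model"


def build_completion_request(prompt, max_tokens=128, temperature=0.7, top_p=0.9):
    """Build (but do not send) a POST request for the /completions endpoint."""
    payload = {
        "model": MODEL_NAME,
        "prompt": prompt,
        "max_tokens": max_tokens,
        "temperature": temperature,
        "top_p": top_p,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_completion_request("Explain PagedAttention in one sentence.")
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
# To actually send it (requires a running server):
#   with urllib.request.urlopen(request) as response:
#       print(json.load(response)["choices"][0]["text"])
```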

- Preprocessing & Tokenization

The server applies the prompt template (system/user/assistant roles) and tokenizes the text into IDs.

- Admission Control

Requests enter a priority queue. SLAs, max queue length, and model availability determine when each job is scheduled.
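One simple way to picture admission control is a bounded priority queue. The class below is a hypothetical sketch, not the scheduler of any particular serving engine: lower priority numbers are served first, ties go in arrival order, and new requests are rejected once the queue is full.

```python
import heapq
import itertools


class AdmissionQueue:
    """Bounded priority queue: lower priority number is served first,
    ties are served in arrival order, and overflow is rejected."""

    def __init__(self, max_queue_length):
        self.max_queue_length = max_queue_length
        self._heap = []
        self._arrival = itertools.count()

    def submit(self, request_id, priority):
        if len(self._heap) >= self.max_queue_length:
            return False  # shed load instead of letting latency grow unbounded
        heapq.heappush(self._heap, (priority, next(self._arrival), request_id))
        return True

    def next_request(self):
        if not self._heap:
            return None
        _, _, request_id = heapq.heappop(self._heap)
        return request_id


queue = AdmissionQueue(max_queue_length=3)
accepted = [queue.submit(rid, prio) for rid, prio in
            [("batch-job", 5), ("chat-1", 1), ("chat-2", 1), ("late", 0)]]
order = [queue.next_request() for _ in range(3)]
print(accepted)  # [True, True, True, False]
print(order)     # ['chat-1', 'chat-2', 'batch-job']
```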

- Dynamic Micro-Batching

Compatible requests (same model/precision, similar sequence lengths) are batched to fully utilize GPU cores while keeping latency low.
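Grouping requests of similar length can be sketched as a greedy packing problem. The function below is a simplified stand-in that assumes every prompt in a batch is padded to the longest one, so the batch cost is batch size times longest prompt; the budget and lengths are made up, and a real engine may schedule at the token level instead.

```python
def make_batches(prompt_lengths, max_padded_tokens):
    """Greedily pack length-sorted prompts so that batch_size * longest_prompt
    stays within max_padded_tokens."""
    batches, current = [], []
    for length in sorted(prompt_lengths):
        # Sorted ascending, so the newest prompt is always the longest in the batch.
        if current and (len(current) + 1) * length > max_padded_tokens:
            batches.append(current)
            current = []
        current.append(length)
    if current:
        batches.append(current)
    return batches


batches = make_batches([12, 500, 15, 480, 20, 1000], max_padded_tokens=1024)
print(batches)  # [[12, 15, 20], [480, 500], [1000]]
```

Sorting first keeps short prompts from being padded up to the length of a much longer neighbor, which is where most of the wasted compute in naive batching comes from.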

- KV Cache Paging (Setup)

vLLM allocates the attention key/value cache in fixed-size pages. Each sequence gets a page table mapping token positions to physical pages (like virtual memory).
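The page-table idea can be illustrated with a toy allocator. This is a sketch under simplifying assumptions (pages hold token slots rather than real tensors, and the class and method names are hypothetical); it also shows pages going back to the shared pool when a sequence finishes.

```python
class PagedKVCache:
    """Toy allocator: physical pages come from a shared free pool, and each
    sequence keeps a page table listing the pages it owns, in order."""

    def __init__(self, num_pages, page_size):
        self.page_size = page_size
        self.free_pages = list(range(num_pages))
        self.page_tables = {}   # sequence id -> list of physical page ids
        self.lengths = {}       # sequence id -> tokens stored so far

    def append_token(self, seq_id):
        table = self.page_tables.setdefault(seq_id, [])
        length = self.lengths.get(seq_id, 0)
        if length == len(table) * self.page_size:  # current pages are full
            if not self.free_pages:
                raise MemoryError("no free pages left")
            table.append(self.free_pages.pop())
        self.lengths[seq_id] = length + 1

    def locate(self, seq_id, token_pos):
        """Translate a logical token position into (physical page, offset)."""
        if not 0 <= token_pos < self.lengths[seq_id]:
            raise IndexError("token position out of range")
        page = self.page_tables[seq_id][token_pos // self.page_size]
        return page, token_pos % self.page_size

    def free(self, seq_id):
        """Return all pages of a finished sequence to the pool."""
        self.free_pages.extend(self.page_tables.pop(seq_id))
        del self.lengths[seq_id]


cache = PagedKVCache(num_pages=8, page_size=4)
for _ in range(6):              # two sequences grow in lockstep
    cache.append_token("a")
    cache.append_token("b")
print(cache.page_tables)        # pages of "a" and "b" are interleaved
print(cache.locate("a", 5))     # (physical page, offset) of a's sixth token
cache.free("a")
print(len(cache.free_pages))    # pages available again after "a" finishes
```

Because each sequence only needs its pages to be listed in order, not stored next to each other, two sequences can grow at the same time without either one reserving space it may never use.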

- PagedAttention in Action

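For each decoding step, the attention kernel follows each sequence’s page table to read its key/value pages wherever they sit in GPU HBM. If GPU memory runs short, the scheduler can preempt some sequences and either swap their pages out to CPU memory or recompute them later, rather than failing with an out-of-memory error on long contexts.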

- Fused Kernels & Decoding

GPU kernels compute attention over the hot pages, produce logits, and sample the next token (greedy/top-k/top-p). Streaming tokens can be sent back to the client as they’re generated.
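The sampling strategies named in this step can be written out in a few lines of plain Python. This is an illustrative CPU-side sketch with hypothetical function names; production engines run the equivalent logic on the GPU over the full vocabulary.

```python
import math
import random


def softmax(logits, temperature=1.0):
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(logits, temperature=1.0, top_k=0, top_p=1.0, rng=random):
    """Return a token id using greedy, top-k, and/or nucleus (top-p) sampling."""
    if temperature == 0:
        return max(range(len(logits)), key=logits.__getitem__)  # greedy
    probs = softmax(logits, temperature)
    ranked = sorted(range(len(probs)), key=probs.__getitem__, reverse=True)
    if top_k > 0:
        ranked = ranked[:top_k]
    # Nucleus: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for token_id in ranked:
        kept.append(token_id)
        cumulative += probs[token_id]
        if cumulative >= top_p:
            break
    weights = [probs[t] for t in kept]
    return rng.choices(kept, weights=weights, k=1)[0]


logits = [2.0, 1.0, 0.1, -1.0]  # made-up scores for a 4-token vocabulary
print(sample_next_token(logits, temperature=0))   # greedy pick
print(sample_next_token(logits, top_k=2))         # one of the two best tokens
print(sample_next_token(logits, top_p=0.5))       # nucleus of the top token only
```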

- Long-Context Optimizations

Techniques like prefix/prompt caching, sliding windows, and attention sinks reuse earlier computations—keeping latency stable even for big prompts.
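Prefix caching is the easiest of these to sketch. The toy cache below hashes each block of tokens together with everything before it, so a later prompt can skip recomputation for however many leading blocks it shares with an earlier one. The block size and class names are assumptions made for illustration.

```python
import hashlib

BLOCK_SIZE = 4  # tokens per cached block (assumed small for readability)


def block_hashes(token_ids):
    """Hash each full block together with everything before it, so two
    prompts share a hash only when their entire prefix matches."""
    hashes, running = [], hashlib.sha256()
    full = len(token_ids) - len(token_ids) % BLOCK_SIZE
    for start in range(0, full, BLOCK_SIZE):
        running.update(repr(token_ids[start:start + BLOCK_SIZE]).encode())
        hashes.append(running.copy().hexdigest())
    return hashes


class PrefixCache:
    def __init__(self):
        self.cached = set()

    def lookup_and_insert(self, token_ids):
        """Return how many leading tokens can skip recomputation."""
        hashes = block_hashes(token_ids)
        reused = 0
        for h in hashes:
            if h not in self.cached:
                break
            reused += 1
        self.cached.update(hashes)
        return reused * BLOCK_SIZE


system_prompt = list(range(8))  # stand-in token ids for a shared system prompt
cache = PrefixCache()
first = cache.lookup_and_insert(system_prompt + [100, 101, 102, 103])
second = cache.lookup_and_insert(system_prompt + [200, 201, 202, 203, 204])
print(first, second)  # 0 tokens reused, then 8 tokens reused
```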

- Distributed Inference (Scale-Out)

For large models or high traffic, vLLM shards across multiple GPUs/nodes (tensor/pipeline parallel). NCCL handles collectives; the scheduler coordinates paging and batches across shards.
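Tensor parallelism can be illustrated without any GPUs. In the sketch below, a weight matrix is split by columns across hypothetical shards, each shard computes its slice of the output, and concatenating the slices stands in for the collective operation a real deployment would run over NCCL.

```python
def matmul(x, w):
    """Multiply a row vector x (length n) by matrix w (n rows, m columns)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def split_columns(w, num_shards):
    """Give each shard a contiguous slice of the weight matrix's columns."""
    cols = len(w[0])
    assert cols % num_shards == 0, "columns must divide evenly across shards"
    width = cols // num_shards
    return [[row[s * width:(s + 1) * width] for row in w] for s in range(num_shards)]


def tensor_parallel_matmul(x, w, num_shards):
    """Each shard computes its slice of the output; concatenation stands in
    for the all-gather a real system would run between GPUs."""
    partials = [matmul(x, shard) for shard in split_columns(w, num_shards)]
    return [value for part in partials for value in part]


x = [1.0, 2.0, 3.0]
w = [[1.0, 0.0, 2.0, 1.0],
     [0.0, 1.0, 1.0, 3.0],
     [2.0, 1.0, 0.0, 1.0]]
result = tensor_parallel_matmul(x, w, num_shards=2)
print(result)
print(result == matmul(x, w))  # sharded and unsharded results agree
```

Each shard holds only its own columns of the weights, which is what lets a model too large for one GPU fit across several.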

- Memory Reuse & Cleanup

When a sequence finishes or is truncated, its pages are returned to the pool. Paging keeps fragmentation low and throughput high.

- Observability & Autoscaling

Metrics (latency p95, tokens/sec, GPU util, memory pressure) feed autoscalers to add/remove replicas and tune batch sizes dynamically.
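A proportional scaling rule is one common way to turn such metrics into a replica count. The sketch below is a hypothetical policy, with assumed targets and limits, that scales by whichever signal is furthest above its target.

```python
import math
from dataclasses import dataclass


@dataclass
class Metrics:
    p95_latency_ms: float
    gpu_utilization: float  # 0.0 to 1.0
    replicas: int


def desired_replicas(m, target_latency_ms=500.0, target_utilization=0.7,
                     min_replicas=1, max_replicas=8):
    """Scale proportionally to whichever signal is furthest above its target."""
    pressure = max(m.p95_latency_ms / target_latency_ms,
                   m.gpu_utilization / target_utilization)
    wanted = math.ceil(m.replicas * pressure)
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(Metrics(p95_latency_ms=1000, gpu_utilization=0.9, replicas=2)))  # scale out
print(desired_replicas(Metrics(p95_latency_ms=100, gpu_utilization=0.2, replicas=4)))   # scale in
```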

- Ecosystem Compatibility

Models load from Hugging Face; serving exposes OpenAI-compatible endpoints; integrations with Ray Serve/Kubernetes make deployment production-ready.

Virtual LLM vs Traditional LLMs

| Feature | Virtual LLM (vLLM) | Traditional LLMs |
| --- | --- | --- |
| Deployment Model | Dynamic resource allocation | Fixed resource allocation |
| Batch Processing | Efficient batching of requests | Sequential processing |
| Latency | Low latency under load | Increases with concurrent users |
| Memory Management | PagedAttention optimization | Standard memory allocation |
| Throughput | High concurrent request handling | Limited by static resources |
| Resource Utilization | Adaptive, efficient usage | Often underutilized or overloaded |
| Cost Efficiency | Lower infrastructure costs | Higher fixed costs |
| Scalability | Automatic scaling | Manual scaling required |
| Use Cases | Production, enterprise apps | Research, single-user scenarios |
| Setup Complexity | More complex initial setup | Simpler deployment |

1. Deployment Models Explained

Virtual LLMs use dynamic resource allocation that adjusts computing power based on actual demand. When more users need text generation, the system automatically allocates additional resources. During quiet periods, it scales down to save costs.

Traditional LLMs run on fixed infrastructure with predetermined resource allocation. Once deployed, they use the same amount of computing power regardless of how many users are actively making requests. This approach wastes resources during low usage and can still become overwhelmed during peak times.

2. Performance Differences

Virtual LLMs excel at handling multiple requests simultaneously through intelligent batching. They group similar requests together and process them efficiently, maintaining fast response times even when serving hundreds of users at once.

Traditional deployments process requests one after another or with limited parallelism. This works fine for single users or small teams but creates delays when traffic increases.

3. Memory Efficiency Advantages

Virtual LLMs use PagedAttention, a technique that reduces memory waste by only storing the data that’s actually being used for text generation. This optimization allows the same hardware to handle larger models or serve more users simultaneously.

Traditional approaches allocate fixed memory blocks that often contain unused space, leading to inefficient resource usage and higher costs.

4. Cost and Use Case Considerations

Virtual LLMs deliver significant cost savings for production applications where usage varies throughout the day. Companies pay for resources they actually use rather than maintaining expensive hardware that sits idle during quiet periods.

Traditional LLMs work better for research environments or consistent workloads where simplicity matters more than efficiency. They’re easier to set up and understand, making them suitable for experimentation and development work.

The choice depends on your specific needs: pick virtual LLMs for production applications with variable demand, and traditional deployments for research or consistent usage patterns.

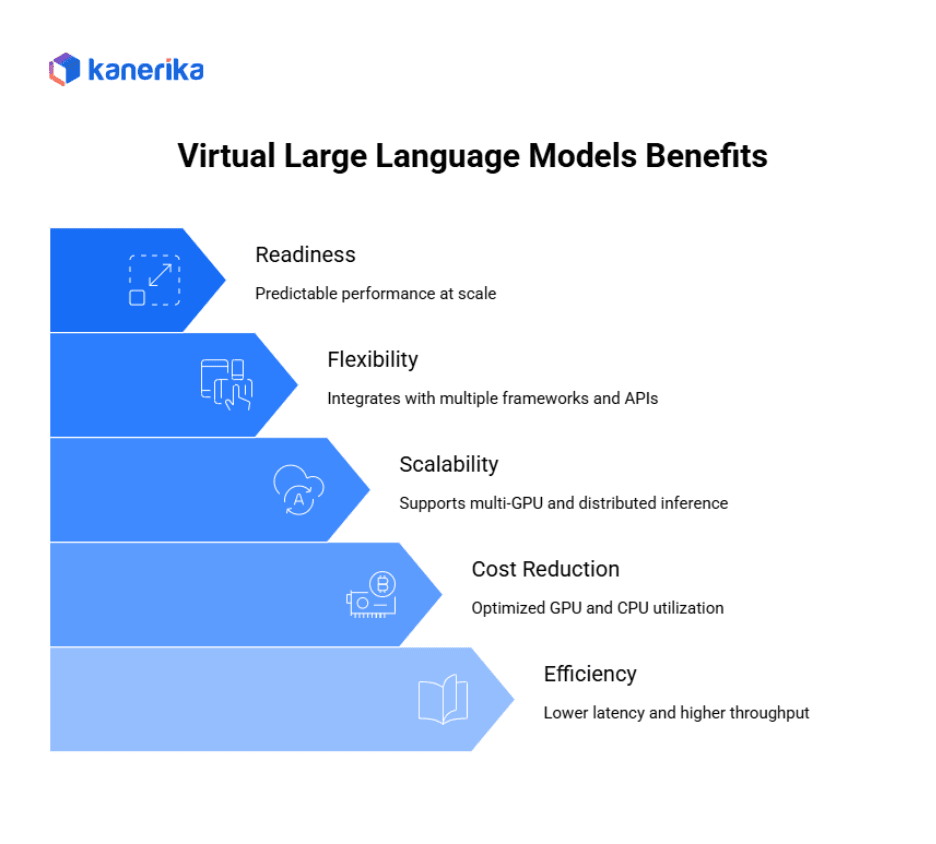

Benefits of Virtual Large Language Models

Virtual Large Language Models (vLLMs) are designed to make LLM inference faster, cheaper, and more scalable in production. They address the inefficiencies of traditional serving systems and unlock several business-ready advantages.

1. Efficiency Gains

With mechanisms like PagedAttention and intelligent batching, vLLMs achieve lower latency and higher throughput. This ensures users get faster responses, even with long-context inputs or heavy query traffic.

2. Cost Reduction

By optimizing GPU and CPU utilization, vLLMs reduce wasted compute cycles. Organizations save significantly on cloud GPU costs, making large-scale deployment economically viable.
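The link between throughput and cost is simple arithmetic. The snippet below works it through with an assumed GPU price and assumed throughput figures; none of the numbers are measurements.

```python
def cost_per_million_tokens(gpu_hourly_usd, tokens_per_second):
    """GPU cost to generate one million tokens at a sustained throughput."""
    tokens_per_hour = tokens_per_second * 3600
    return gpu_hourly_usd / tokens_per_hour * 1_000_000


# All figures below are assumed for illustration only.
baseline = cost_per_million_tokens(gpu_hourly_usd=2.0, tokens_per_second=500)
optimized = cost_per_million_tokens(gpu_hourly_usd=2.0, tokens_per_second=1000)

print(f"baseline:  ${baseline:.2f} per million tokens")
print(f"optimized: ${optimized:.2f} per million tokens")
```

At a fixed hourly price, doubling sustained throughput halves the cost per token, so throughput gains translate directly into savings only when the extra capacity is actually used.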

3. Scalability

vLLMs support multi-GPU and distributed inference, enabling them to handle enterprise-scale workloads. Whether serving thousands of concurrent requests or very large models, vLLMs scale horizontally with ease.

4. Flexibility

They integrate seamlessly with multiple frameworks and APIs, including Hugging Face, OpenAI-compatible APIs, and Ray Serve. This makes it easier for teams to adopt vLLMs without major workflow changes.

5. Enterprise Readiness

vLLMs provide predictable performance at scale, making them suitable for mission-critical SaaS and enterprise applications where uptime and consistency are essential.

Example in Action

A SaaS provider running AI-powered chatbots migrated from a traditional inference setup to vLLM.

The result: 2x higher throughput and 40% lower GPU costs, enabling them to serve more customers without expanding infrastructure.

Popular Virtual LLM Frameworks & Ecosystem

The rise of Virtual Large Language Models (vLLMs) has sparked an ecosystem of frameworks and serving engines designed to make inference faster and more scalable. Each option comes with unique strengths and trade-offs.

1. vLLM (Open-Source Project)

The most prominent example, vLLM, is an open-source library built around the PagedAttention mechanism. It’s optimized for high throughput, long-context handling, and memory efficiency. With Hugging Face integration and OpenAI-compatible APIs, it’s also developer-friendly and production-ready.

- Pros: Excellent memory management, strong community support, open-source flexibility.

- Cons: Requires GPUs for best performance, setup can be complex for small teams.

2. Ray Serve Integration

vLLM integrates with Ray Serve, enabling distributed inference across clusters. This combination allows enterprises to scale from single GPU deployments to large multi-node setups with minimal friction.

3. TGI (Text Generation Inference)

Developed by Hugging Face, TGI is another popular serving engine optimized for inference. It provides production-grade features like token streaming, quantization support, and integration with Hugging Face Hub.

- Pros: Stable, well-maintained, great for Hugging Face ecosystem users.

- Cons: Less optimized for long-context inference compared to vLLM.

4. FasterTransformer

NVIDIA’s FasterTransformer focuses on GPU kernel-level optimizations. It delivers extremely fast inference for supported models, especially when paired with NVIDIA hardware.

- Pros: Unmatched speed on NVIDIA GPUs, strong for enterprise-grade deployments.

- Cons: Tied to NVIDIA ecosystem, less flexibility with non-NVIDIA environments.

5. DeepSpeed-Inference

From Microsoft, DeepSpeed-Inference emphasizes model parallelism and inference efficiency for extremely large models. It’s often used in research and enterprise environments for multi-billion parameter models.

- Pros: Excellent for massive models, robust distributed features.

- Cons: Higher complexity, requires expertise to configure and tune.

Real-World Applications of Virtual LLMs

Virtual Large Language Models (vLLMs) are not just a technical optimization—they’re enabling real-world business applications by making LLMs faster, cheaper, and more scalable. From chatbots to enterprise systems, vLLMs power diverse use cases.

1. Conversational AI

Virtual LLMs are ideal for powering chatbots and virtual assistants at scale. With lower latency and higher throughput, customer queries can be handled instantly, even during traffic spikes. Additionally, SaaS providers delivering AI-powered support systems have reported 2–3x faster response times after migrating to vLLM.

2. Search & Retrieval-Augmented Generation (RAG)

RAG systems rely on injecting external documents into LLM prompts for context-aware answers. vLLMs enable long-context handling, ensuring large documents can be processed efficiently. Finance firms, for instance, use vLLM-backed RAG systems for regulatory document analysis, reducing research hours significantly.
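The prompt-assembly step of a RAG pipeline can be sketched as packing ranked documents into a token budget. The function below is a hypothetical helper, and its whitespace word count is only a crude stand-in for a real tokenizer.

```python
def pack_context(question, documents, max_prompt_tokens,
                 count_tokens=lambda text: len(text.split())):
    """Add retrieved documents in ranked order until the token budget is spent."""
    header = "Answer using only the context below.\n\n"
    footer = f"\n\nQuestion: {question}\nAnswer:"
    budget = max_prompt_tokens - count_tokens(header) - count_tokens(footer)
    chosen = []
    for doc in documents:
        cost = count_tokens(doc)
        if cost > budget:
            break  # keep ranking order: stop at the first document that does not fit
        chosen.append(doc)
        budget -= cost
    return header + "\n\n".join(chosen) + footer, len(chosen)


docs = ["a b c d", "e f g h", "i j k l"]  # stand-ins for retrieved passages
prompt, used_docs = pack_context("What changed?", docs, max_prompt_tokens=20)
print(used_docs)
print(prompt)
```

A serving engine with a larger usable context simply raises the budget, which lets more of the retrieved passages reach the model.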

3. Multi-Agent Systems

In multi-agent environments, multiple AI agents often run in parallel—one for reasoning, another for information retrieval, and another for task execution. vLLMs’ throughput optimization allows these agents to run efficiently without bottlenecks, making them popular in research labs and automation platforms.

4. Enterprise Workflows

Businesses use vLLMs for report generation, document summarization, and building internal knowledge bases. A healthcare provider, for example, adopted vLLM for summarizing patient records, cutting documentation time for doctors while maintaining compliance with strict data governance rules.

5. Cloud AI Platforms

Major cloud AI providers are beginning to integrate vLLM into their inference backends. By offering inference-as-a-service, these platforms let customers run models at scale with predictable costs and reduced GPU overhead. This makes advanced LLMs more accessible to smaller enterprises.

Case Studies

- SaaS Company: Migrated customer support chatbots to vLLM, reducing inference costs by 40% while doubling throughput.

- Financial Services Firm: Deployed vLLM in a RAG pipeline for compliance research, cutting manual review time by 60%.

- Healthcare Provider: Leveraged vLLM for medical summarization and decision support, enabling doctors to process complex patient data faster.

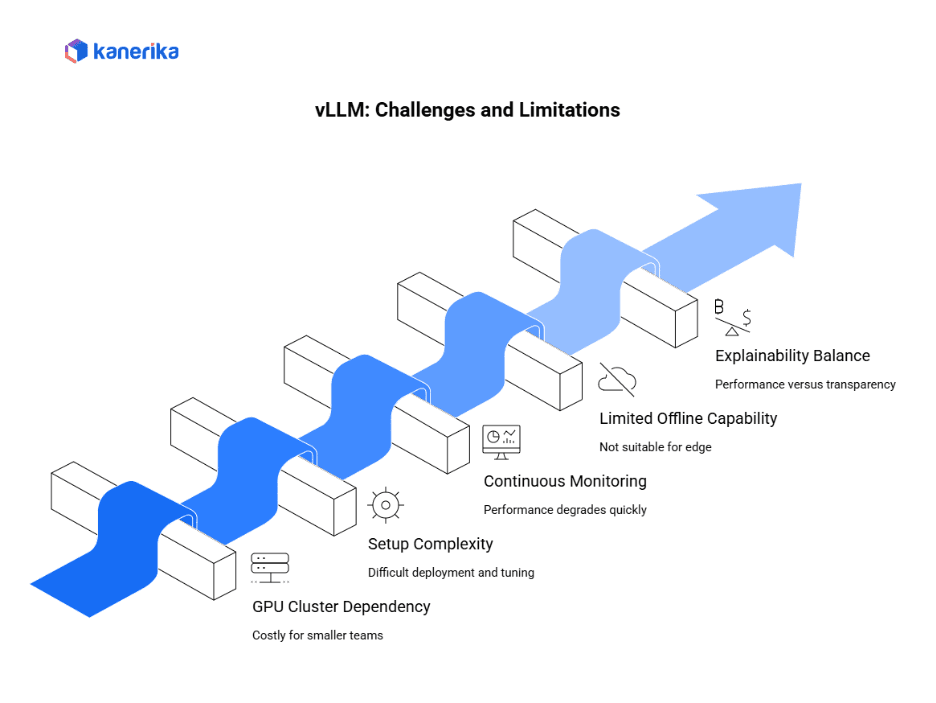

Challenges and Limitations

While Virtual Large Language Models (vLLMs) solve many bottlenecks of traditional LLM serving, they are not without challenges.

1. Requires GPU Clusters for Maximum Benefit

To unlock their full performance, vLLMs often require GPU clusters. This can make adoption costly for startups or smaller teams without access to high-end infrastructure.

2. Setup Complexity for Smaller Teams

Deploying and tuning vLLMs involves configuring batching, paging, and distributed inference. Smaller teams may find the setup and ongoing optimization more complex compared to simpler, out-of-the-box solutions.

3. Continuous Monitoring Needed for Stability

Because vLLMs operate in multi-tenant, high-throughput environments, continuous monitoring of GPU utilization, latency, and memory paging is critical. Without strong observability, performance can degrade quickly and go unnoticed.

4. Limited Offline Capability

Unlike lightweight frameworks such as Ollama, which run models locally on laptops, vLLMs are designed for server-first deployments. This makes them less suitable for offline or edge scenarios.

5. Balancing Speed with Explainability

While vLLMs optimize speed and efficiency, they do not inherently address the “black box” problem of LLMs. Enterprises must still balance performance with explainability, especially in regulated industries.

Future of Virtual LLMs

The future of Virtual Large Language Models (vLLMs) is poised to reshape how enterprises and developers deploy large-scale AI. Several emerging trends highlight where this technology is headed.

1. Hybrid Cloud + Edge Deployment

As businesses seek both scale and responsiveness, we’ll see more hybrid models, with vLLMs running in the cloud for large-scale workloads and lightweight agents deployed at the edge for low-latency interactions.

2. Integration with Serverless AI

vLLMs will increasingly integrate with serverless AI platforms, allowing developers to spin up inference workloads on demand. This will reduce idle GPU costs while ensuring scalability during usage spikes.

3. Advances in Long-Context Models

With research pushing context windows beyond 100k tokens, vLLMs will play a crucial role in making such large contexts feasible to serve. PagedAttention and memory paging will be critical to handling these extended contexts efficiently.

4. Enterprise Adoption for Mission-Critical Workloads

From financial compliance to healthcare decision support, enterprises will adopt vLLMs as the backbone of mission-critical applications, valuing their predictability, scalability, and efficiency.

5. Long-Term Vision

The ultimate trajectory is AI as a service powered by highly optimized vLLM backends. Just as cloud transformed IT infrastructure, vLLMs will underpin a new era of scalable, reliable, and cost-effective AI delivery, enabling businesses of all sizes to integrate advanced language capabilities seamlessly.

Trust Kanerika to Harness Virtual LLMs for Your AI/ML Solutions

The LLM landscape is vast and constantly evolving, making it challenging to identify and integrate the right model for your needs. Kanerika’s team of AI specialists excels in navigating this complex domain, ensuring your AI/ML solutions are built on cutting-edge technology. From selecting the ideal model to seamless integration with your existing infrastructure, we deliver robust and efficient AI solutions tailored to your goals.

Partnering with Kanerika gives you a competitive edge by leveraging the latest advancements in virtual LLMs while minimizing costs and time to market. Our commitment to ethical development practices ensures your AI solutions are not only powerful but also trustworthy and transparent. Contact Kanerika today to explore how we can help transform your business with open-source LLMs.

FAQs

1. What is a Virtual Large Language Model (vLLM)?

A vLLM is an optimized system for serving large language models efficiently, using innovations like PagedAttention for better memory and throughput.

2. How do vLLMs differ from traditional LLM serving?

Unlike traditional servers, vLLMs optimize GPU memory, reduce latency in long prompts, and allow multi-tenant, high-throughput deployments at scale.

3. What are the main benefits of vLLMs?

They offer efficiency gains, cost reduction, scalability, ecosystem flexibility, and predictable enterprise-grade performance.

4. Which frameworks support vLLMs?

vLLMs integrate with Hugging Face models, OpenAI-compatible APIs, and Ray Serve, making them easy to adopt into existing AI pipelines.

5. What are common use cases of vLLMs?

Applications include chatbots, RAG pipelines, enterprise workflows (summarization, knowledge bases), and cloud inference-as-a-service.

6. What challenges do vLLMs face?

They require GPU clusters for best results, have setup complexity, need monitoring for stability, and are less suited for offline use compared to lightweight tools like Ollama.

7. What is the future of vLLMs?

The future includes hybrid cloud + edge deployments, serverless AI integration, 100k+ token contexts, and AI-as-a-service powered by optimized vLLM backends.