Ever feel like your business is drowning in data but starving for insight? You’re not alone. In today’s hyper-connected world, organizations collect data from dozens of sources—CRMs, ERPs, marketing platforms, IoT devices, and more. But without a way to unify it all, even the most advanced analytics tools fall short.

That’s where data integration comes in.

According to a recent Gartner report, over 80% of data analytics projects fail due to poor data integration and quality issues. In a landscape where real-time decision-making is critical, siloed data isn’t just inefficient—it’s a liability.

Take Netflix, for example. The streaming giant integrates data from content licensing, user behavior, device logs, and regional trends to deliver hyper-personalized recommendations and make billion-dollar content decisions. Without seamless data integration, that kind of agility would be impossible.

So, what is data integration—and why does it matter more now than ever? In this blog, we’ll break down the concept, explore real-world benefits, share common challenges, and guide you through best practices for integrating data across systems in a scalable, future-proof way.

What is Data Integration?

Data integration is the process of combining data from multiple sources into a cohesive, unified view. The goal is a consistent and actionable data landscape for your organization. Because source data lives in different formats and locations, integration is a foundational step for informed decisions: whether you’re analyzing market trends or combining customer information from separate databases, it gives you access to consistent, high-quality data.

Understanding the types and techniques of data integration is essential for efficient implementation. You may encounter methodologies like Extract, Transform, Load (ETL) and Extract, Load, Transform (ELT), each with its advantages, depending on your needs for real-time processing and the scale of data operations. Moreover, with tools and services from technology providers like IBM and Google Cloud, you have the option to streamline this process through code-free solutions that can reduce complexity and increase agility. As you explore the world of data integration, consider the challenges, such as data quality and security, alongside the benefits like enhanced data analytics and better strategic decision-making.

Enhance Data Accuracy and Efficiency With Expert Integration Solutions!

Partner with Kanerika Today.

Data Integration Techniques

Effective data integration methods streamline your business processes by allowing more efficient access to and analysis of data from disparate sources.

1. ETL Process

Extract, Transform, Load (ETL) is a sequential process where you first extract data from homogeneous or heterogeneous sources. Next, in the transform stage, this data is cleansed, enriched, and converted into a format suitable for analysis. Finally, the processed data is loaded into the target system, such as a data warehouse. ETL is traditionally used in batch integration, where data movement occurs at scheduled intervals.

- Pros:

  - Ensures data quality through the transformation stage

  - Data is consolidated, which aids in performance and analysis

- Cons:

  - Can be time-consuming due to the batch processing model

  - Less responsive to real-time data changes
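To make the three ETL stages concrete, here is a minimal sketch in Python using only the standard library. The two CSV exports, the `customers` table, and the function names are hypothetical, chosen for illustration; a production pipeline would read from real systems and load into a real warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data: two CSV exports with inconsistent formatting.
CRM_CSV = "email,name\nAna@Example.com ,Ana\nbob@example.com,Bob\n"
SHOP_CSV = "email,name\nana@example.com,Ana\ncara@example.com,Cara\n"


def extract(*csv_texts):
    """Extract: read raw rows from each source."""
    for text in csv_texts:
        yield from csv.DictReader(io.StringIO(text))


def transform(rows):
    """Transform: normalize emails and drop duplicates before loading."""
    seen = set()
    for row in rows:
        email = row["email"].strip().lower()
        if email and email not in seen:
            seen.add(email)
            yield email, row["name"].strip()


def load(records, conn):
    """Load: write the cleaned records into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS customers (email TEXT PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", records)
    conn.commit()


def run_etl():
    """Run the three stages against an in-memory SQLite target."""
    conn = sqlite3.connect(":memory:")
    load(transform(extract(CRM_CSV, SHOP_CSV)), conn)
    return conn.execute("SELECT email, name FROM customers ORDER BY email").fetchall()
```

Note that the cleaning happens before anything reaches the target, which is why the target only ever sees deduplicated, normalized rows.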

2. ELT Process

Extract, Load, Transform (ELT), by contrast, reorders the traditional sequence. Here, you extract data and immediately load it into the target system. The transformation happens after loading, leveraging the processing power of modern data storage systems. ELT is particularly well-suited to handling large volumes of data and supporting real-time analytics applications.

- Pros:

  - Enables real-time data processing

  - More scalable as it handles vast data volumes efficiently

- Cons:

  - May have implications for data governance and quality control

  - Relies heavily on the capabilities of the target system for transformation
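The difference from ETL is easiest to see in code. In the sketch below, raw rows land in the target untouched and the transformation runs afterwards as SQL inside the target. SQLite stands in for a cloud warehouse here, and the `raw_orders` and `customer_totals` tables are assumptions made for the example.

```python
import sqlite3

# Hypothetical raw order events, loaded as-is (amounts are still strings).
RAW_ORDERS = [
    ("o1", "ana@example.com", "1999"),
    ("o2", "bob@example.com", "500"),
    ("o3", "ana@example.com", "1001"),
]


def run_elt():
    conn = sqlite3.connect(":memory:")
    # Extract + Load: land the raw data untouched in a staging table.
    conn.execute("CREATE TABLE raw_orders (order_id TEXT, email TEXT, amount_cents TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", RAW_ORDERS)
    # Transform: done afterwards, inside the target, using its SQL engine.
    conn.execute(
        """
        CREATE TABLE customer_totals AS
        SELECT email,
               COUNT(*) AS orders,
               SUM(CAST(amount_cents AS INTEGER)) AS total_cents
        FROM raw_orders
        GROUP BY email
        """
    )
    return conn.execute(
        "SELECT email, orders, total_cents FROM customer_totals ORDER BY email"
    ).fetchall()
```

Because the raw table is kept, you can rerun or change the transformation later without extracting the data again, which is one reason ELT depends so heavily on the target system.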

3. Data Virtualization

In Data Virtualization, you integrate data from different sources without physically moving or storing it in a single repository. Instead, it provides an abstracted, integrated view of the data which can be accessed on-demand. Additionally, this approach supports real-time data integration, often with less overhead compared to traditional methods.

- Pros:

  - No need for a physical data warehouse

  - Offers greater agility with real-time access to data

- Cons:

  - May face performance issues with complex queries

  - Requires a robust network and system infrastructure
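As a rough illustration of the idea, the sketch below defines a thin view object that stores no data of its own and reads each source only when asked. The `CustomerView` class, the CRM table, and the billing dictionary are all hypothetical stand-ins; commercial virtualization layers add query planning, caching, and security on top of this basic pattern.

```python
import sqlite3


def make_sources():
    """Build two independent, hypothetical source systems."""
    crm = sqlite3.connect(":memory:")
    crm.execute("CREATE TABLE contacts (email TEXT, name TEXT)")
    crm.execute("INSERT INTO contacts VALUES ('ana@example.com', 'Ana')")
    # Stand-in for a second system, e.g. a billing service.
    billing = {"ana@example.com": {"plan": "pro"}}
    return crm, billing


class CustomerView:
    """Virtual layer: holds no data, only knows how to reach each source."""

    def __init__(self, crm, billing):
        self._crm = crm
        self._billing = billing

    def get(self, email):
        # Each call reads the sources on demand; nothing is copied or stored.
        row = self._crm.execute(
            "SELECT name FROM contacts WHERE email = ?", (email,)
        ).fetchone()
        if row is None:
            return None
        return {"email": email, "name": row[0], **self._billing.get(email, {})}
```

A change in either source is visible on the very next call to `get`, which is the real-time property described above. The trade-off is that every query pays the cost of reaching the sources.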

4. Data Federation

Data Federation software creates a virtual database that provides an integrated view of data spread across multiple databases. It differs from data virtualization in that federation often targets more complex queries and transactional consistency. The sources keep their physical autonomy while being queried as one.

- Pros:

  - Centralized access to data without consolidating into a single physical location

  - Useful for complex data environments with diverse data stores

- Cons:

  - Can encounter performance bottlenecks

  - Data freshness might be a concern as it typically works better with batch processing rather than real-time
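A small-scale analogy for federation is SQLite’s `ATTACH DATABASE`, which lets one connection run a single SQL query across two physically separate databases. The sketch below uses it as a stand-in for dedicated federation software; the `hr` schema and both tables are made up for the example.

```python
import sqlite3


def federated_query():
    conn = sqlite3.connect(":memory:")                 # first database ("main")
    conn.execute("ATTACH DATABASE ':memory:' AS hr")   # second, separate database
    conn.execute("CREATE TABLE main.invoices (employee_id INTEGER, amount INTEGER)")
    conn.execute("CREATE TABLE hr.employees (id INTEGER, name TEXT)")
    conn.executemany("INSERT INTO main.invoices VALUES (?, ?)", [(1, 100), (1, 50), (2, 70)])
    conn.executemany("INSERT INTO hr.employees VALUES (?, ?)", [(1, "Ana"), (2, "Bob")])
    # One query joins tables that live in two different databases.
    return conn.execute(
        """
        SELECT e.name, SUM(i.amount)
        FROM main.invoices AS i
        JOIN hr.employees AS e ON e.id = i.employee_id
        GROUP BY e.name
        ORDER BY e.name
        """
    ).fetchall()
```

Each database keeps its own storage, yet the caller sees one schema namespace. Real federation engines do the same across different database products and across the network, which is where the performance bottlenecks listed above tend to appear.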

The Business Impact of Data Integration

Data integration leverages technology to streamline business processes and improve operational efficiency. Moreover, it is a catalyst in transforming raw data into valuable business insights, fostering collaboration, and driving smarter decision making.

1. Improving Decision Making

Your ability to make informed business decisions is significantly enhanced through data integration. By consolidating data from various sources, you gain a comprehensive view of your business landscape. This allows you to identify trends, assess performance metrics, and make decisions backed by data. Because integrated data is faster to access, you can also respond more swiftly to market changes and operational demands.

2. Enhancing Collaboration and Communication

Integrated data breaks down silos and encourages unified collaboration among different departments within your company. When everyone has access to the same information, inter-departmental communication is more efficient, reducing misunderstandings and errors. This unity can lead to improved business processes and operational workflow, which are essential for a well-functioning business environment.

3. Gaining Business Insights and Fostering Innovation

Data integration is a gateway to deeper business insights and a driver of innovation. By analyzing the integrated data, you can uncover patterns and opportunities that may not be visible in isolated datasets. This insight propels strategic initiatives and can lead to innovative solutions to business challenges, directly impacting your company’s growth and adaptability in a competitive market.

4. Calculating the Return on Investment (ROI)

Understanding the ROI of data integration is crucial to justify its implementation. You must consider both tangible benefits, like cost savings from improved operational efficiencies, and intangible benefits, such as enhanced data quality. Measuring ROI involves scrutinizing the costs of data integration against the financial gains—whether through direct revenue increases or indirect cost reductions—to ensure that your investment is yielding a positive return.
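The comparison of costs against gains reduces to a simple ratio: ROI = (total gains - total costs) / total costs. The sketch below applies it to hypothetical yearly figures; the categories and amounts are placeholders for illustration, not benchmarks.

```python
def integration_roi(gains, costs):
    """Return ROI as a fraction: (total gains - total costs) / total costs."""
    total_gain = sum(gains.values())
    total_cost = sum(costs.values())
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    return (total_gain - total_cost) / total_cost


# Hypothetical yearly figures, for illustration only.
costs = {"licenses": 40_000, "implementation": 50_000, "maintenance": 10_000}
gains = {"hours_saved": 90_000, "error_reduction": 35_000, "new_revenue": 25_000}

roi = integration_roi(gains, costs)
print(f"ROI: {roi:.0%}")
```

Intangible benefits such as better data quality only enter this calculation once you assign them a monetary estimate, so it is worth stating those assumptions explicitly next to the result.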

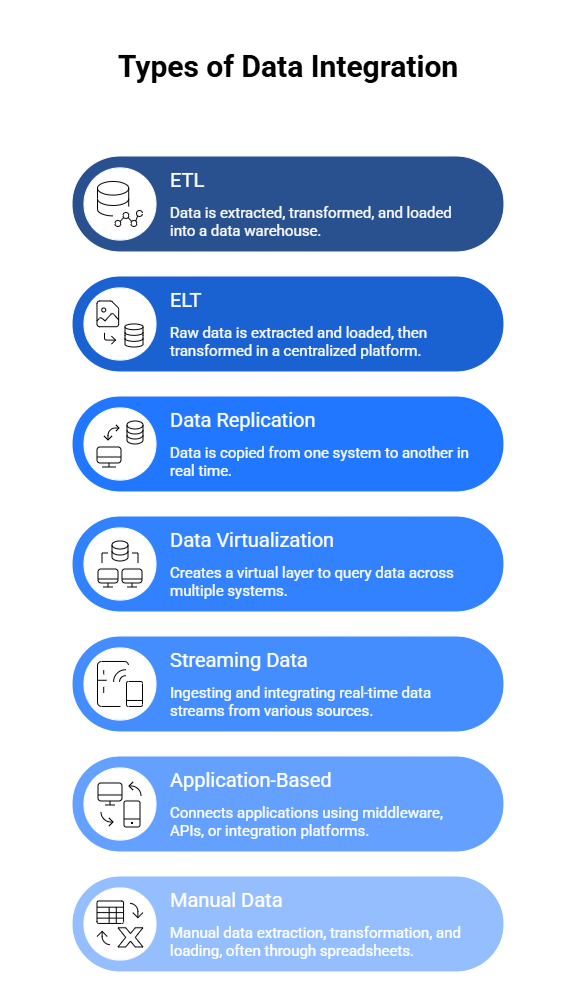

Types of Data Integration

There are several types of data integration that can be used to combine data from multiple sources.

1. ETL (Extract, Transform, Load)

ETL is the most traditional and widely used method. Data is first extracted from multiple sources (databases, CRMs, flat files), then transformed into a standardized format (cleaned, enriched, deduplicated), and finally loaded into a target data warehouse. It’s best suited for structured data and batch processing, such as overnight analytics jobs or monthly reporting.

2. ELT (Extract, Load, Transform)

In this approach, raw data is extracted and loaded directly into a centralized platform like a cloud data lake. The transformation happens after loading, leveraging the target system’s scalable compute. ELT is ideal for modern cloud-first architectures where real-time or near-real-time data is needed, and flexibility in transformation is a priority.

3. Data Replication

This method copies data from one system to another, either in real time or at scheduled intervals. It ensures systems remain in sync, and is commonly used for disaster recovery, failover, or mirroring operational data to analytics systems. It minimizes latency and allows continuous availability of critical data.
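One common way to replicate at scheduled intervals is to copy only rows changed since the last run, tracked with a watermark. The sketch below assumes each row carries an `updated_at` value; the `orders` table and function names are hypothetical, and SQLite stands in for both systems.

```python
import sqlite3


def make_db():
    """Create an empty database with the (hypothetical) orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, updated_at INTEGER)"
    )
    return conn


def replicate(source, replica, last_sync):
    """Copy rows changed since last_sync; return the new watermark."""
    changed = source.execute(
        "SELECT id, status, updated_at FROM orders WHERE updated_at > ?", (last_sync,)
    ).fetchall()
    # INSERT OR REPLACE makes the copy idempotent: reruns do not duplicate rows.
    replica.executemany(
        "INSERT OR REPLACE INTO orders (id, status, updated_at) VALUES (?, ?, ?)", changed
    )
    replica.commit()
    return max((row[2] for row in changed), default=last_sync)
```

This simple approach does not notice deleted rows. Real-time replication tools usually read the source database’s change log instead, which captures deletes as well.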

4. Data Virtualization

Unlike other methods, data virtualization does not physically move data. It creates a virtual layer that allows users to query data across multiple systems (databases, APIs, cloud storage) as if it were in one place. It reduces storage redundancy, accelerates access, and supports real-time decision-making.

5. Streaming Data Integration

This method focuses on ingesting and integrating real-time data streams from sources such as IoT devices, sensors, applications, or logs. It is essential for use cases that require low latency, such as fraud detection, dynamic pricing, or live dashboards.
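To show what processing events as they arrive can look like, here is a minimal sliding-window check of the kind a fraud-detection rule might use. The event shape, the 60-second window, and the threshold are assumptions made for the example; a production system would typically run this logic on a streaming platform rather than in a single Python process.

```python
from collections import defaultdict, deque


def flag_bursts(events, window_seconds=60, max_events=3):
    """Yield events that push a card over max_events within the window.

    events: iterable of (timestamp_seconds, card_id) in arrival order.
    """
    recent = defaultdict(deque)  # card_id -> timestamps still inside the window
    for ts, card in events:
        window = recent[card]
        window.append(ts)
        # Evict timestamps that have fallen out of the window.
        while window and window[0] <= ts - window_seconds:
            window.popleft()
        if len(window) > max_events:
            yield ts, card
```

Because `flag_bursts` is a generator, it handles one event at a time and keeps only the recent timestamps in memory, so it can consume an unbounded stream.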

6. Application-Based Integration

This uses middleware, APIs, or integration platforms (like MuleSoft or Zapier) to connect applications and ensure smooth data exchange. It’s ideal for synchronizing SaaS tools, automating workflows, or enabling real-time operational insights across systems.

7. Manual Data Integration

Still used in some smaller or legacy environments, this approach involves manual data extraction, transformation, and loading—often through spreadsheets or scripts. It’s labor-intensive, error-prone, and not scalable, but may serve in limited one-time migrations or non-critical processes.

Popular Tools and Platforms

Choosing the right data integration tool depends on your data architecture, scalability needs, and budget. Here are some widely used platforms:

1. Informatica

Informatica is a market leader in enterprise-grade data integration. It offers powerful ETL capabilities, cloud-native tools, and robust data governance features—ideal for large-scale, complex environments.

2. Talend

Talend, now part of Qlik, is a data integration platform with open-source roots, known for its flexibility and user-friendly interface. It supports both batch and real-time processing, with built-in tools for data quality, transformation, and pipeline orchestration.

3. Microsoft Azure Data Factory

Azure Data Factory is a cloud-based ETL and data orchestration service that integrates well with Microsoft’s ecosystem. It allows building scalable data pipelines with minimal code, making it suitable for hybrid and multi-cloud environments.

4. Apache NiFi

An open-source project from the Apache Software Foundation, NiFi offers a visual interface for designing data flows. It excels at streaming data integration and real-time data routing with fine-grained control.

5. Fivetran / Stitch

Both Fivetran and Stitch provide fully managed ELT solutions for quick, code-free integration. They’re ideal for startups and mid-sized businesses that want to move data from multiple sources into a warehouse like Snowflake or BigQuery with minimal setup.

Common Use Cases of Data Integration

Data integration plays a critical role across industries by breaking down silos and making data accessible, accurate, and actionable. Here are some of the most impactful use cases:

1. Customer 360 Views in Marketing and CRM

By integrating data from CRM systems, email platforms, social media, and customer support tools, businesses can create a comprehensive view of each customer. This unified perspective enables personalized marketing, better customer service, and improved retention strategies.
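A simplified version of building that unified view is to merge records from each system on a shared key. The sketch below assumes email is that key and that the three record lists come from a CRM, a support tool, and an email platform; all names and fields are hypothetical.

```python
from collections import defaultdict

# Hypothetical records from three systems, keyed by email.
crm = [{"email": "Ana@Example.com", "name": "Ana Ruiz", "segment": "enterprise"}]
support = [{"email": "ana@example.com", "open_tickets": 2}]
email_platform = [{"email": "ana@example.com ", "subscribed": True}]


def build_profiles(**sources):
    """Merge records from every source into one profile per normalized email."""
    profiles = defaultdict(dict)
    for source_name, records in sources.items():
        for record in records:
            key = record["email"].strip().lower()
            fields = {k: v for k, v in record.items() if k != "email"}
            profiles[key].update(fields)
            # Track lineage so you can tell where each profile came from.
            profiles[key].setdefault("sources", []).append(source_name)
    return dict(profiles)


profiles = build_profiles(crm=crm, support=support, email_platform=email_platform)
```

In this sketch, later sources overwrite earlier ones when field names collide. Real customer matching is usually harder, since the same person may appear under different emails or misspelled names.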

2. Real-Time Analytics in E-Commerce

E-commerce platforms use data integration to combine information from websites, inventory systems, payment gateways, and user behavior analytics tools. This enables real-time dashboards, dynamic pricing, fraud detection, and smarter product recommendations.

3. IoT and Sensor Data in Manufacturing

Manufacturers rely on data integration to collect and unify sensor data from machinery, production lines, and logistics systems. This allows for predictive maintenance, improved quality control, and increased operational efficiency.

4. Healthcare Data Interoperability

Hospitals and healthcare providers integrate data from EMRs, lab systems, and insurance platforms to enable a more complete patient record. This supports better diagnosis, treatment planning, and regulatory compliance.

5. Financial Reporting and Risk Compliance

Financial institutions consolidate data from transactional systems, risk management platforms, and external data sources to ensure accurate reporting, risk assessment, and adherence to compliance standards such as IFRS and GDPR.

Challenges and Best Practices in Data Integration

Data integration comes with challenges that can undermine the efficiency and effectiveness of your systems. Before looking at each one, it helps to pair it with the best practices that mitigate it and strengthen your integration processes.

1. Dealing with Data Silos

Data silos create barriers due to their isolated nature, making it tough to achieve a unified view. A primary challenge you’ll face is the fragmentation of data across multiple sources, which hinders your ability to extract meaningful insights. To counteract this:

- Identify and catalog your data sources

- Implement integration tools that support various data types and sources

- Foster a collaborative culture to reduce resistance to data sharing

2. Integration of Structured and Unstructured Data

You must tackle the complexity of merging structured and unstructured data, as they require different approaches for processing and analysis. The process includes:

- Using ETL (Extract, Transform, Load) practices for structured data

- Applying data mining and NLP (Natural Language Processing) for unstructured data

- Ensuring semantic integration for meaningful aggregation and analysis

3. Technical Challenges and Solutions

Technical challenges in data integration span interoperability, real-time data processing, and maintaining data quality. Your solutions should focus on:

- Adopting interoperable systems that ensure seamless data exchange

- Utilizing middleware or ESB (Enterprise Service Bus) for real-time data processing needs

- Instituting stringent data quality protocols to maintain the integrity of integration
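A data quality protocol can start as a set of explicit rules applied to every record before it enters the integrated store. The sketch below is one minimal way to do that; the fields, the allowed country codes, and the deliberately loose email pattern are assumptions for illustration.

```python
import re

# Deliberately simple pattern; real email validation is more involved.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

# Hypothetical rules: field name -> predicate that must hold.
RULES = {
    "email": lambda v: isinstance(v, str) and EMAIL_RE.match(v) is not None,
    "amount": lambda v: isinstance(v, (int, float)) and v >= 0,
    "country": lambda v: v in {"US", "DE", "IN"},
}


def validate(record):
    """Return the list of fields that are missing or fail their rule."""
    return [
        field
        for field, ok in RULES.items()
        if field not in record or not ok(record[field])
    ]


def split_batch(records):
    """Separate clean records from rejected ones (with the failing fields)."""
    clean, rejected = [], []
    for record in records:
        failures = validate(record)
        if failures:
            rejected.append((record, failures))
        else:
            clean.append(record)
    return clean, rejected
```

Keeping rejected records together with the reason they failed, rather than dropping them silently, makes it possible to fix problems at the source system.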

4. Adaptability and Future-Proofing Your Integration Strategy

The rapidly evolving data landscape requires you to be proactive and adaptable. Future-proof your strategy by:

- Choosing scalable integration platforms that can grow with your data needs

- Planning for emerging data formats and standards to avoid future compatibility issues

- Creating an agile integration framework that permits quick adaptation to new technologies and methodologies

Current Trends and Future Directions

The data integration landscape is rapidly evolving with significant advancements in technology influencing how you manage and leverage data for strategic advantages.

1. Machine Learning and AI

Machine learning (ML) and artificial intelligence (AI) are redefining data integration processes. Your systems are becoming more predictive and intelligent, enabling them to automate data management tasks and provide insights. This integration of ML and AI aids in data quality and governance, ensuring your data is accurate and usable.

- Predictive Analytics: By incorporating ML algorithms, you unlock the ability to forecast trends and behaviors

- Data Enhancement: AI empowers the enrichment of data, providing additional context and relevance

2. Data Integration and the API Economy

The proliferation of the API economy has made API data integration a key element in your digital strategy. APIs simplify the way distinct systems communicate, and they enable a more seamless flow of data across various platforms.

- Standardization: APIs lead to standardized methods of data exchange, enhancing compatibility between systems

- Real-time Access: Through APIs, you gain immediate access to data, thus supporting up-to-date decision-making

3. DevOps and DataOps Integration

Your operational efficiency improves with the integration of DevOps and DataOps. These methodologies focus on streamlining the entire data lifecycle, from creation to deployment and analysis, thus reducing the time-to-market for new features and improving the data quality.

- Continuous Deployment: DevOps principles facilitate rapid and reliable software delivery

- Improved Collaboration: DataOps fosters a culture of collaboration, aligning teams toward common data-related goals

4. The Evolution of Data Platforms

Data platforms are becoming more sophisticated, incorporating advanced analytics and machine learning capabilities. The evolution ensures that your data platforms are not just storage repositories but analytical powerhouses that drive decision-making.

- Scalability: Modern data platforms scale on-demand to manage varying data loads

- Integration Capabilities: These platforms now offer built-in support for integrating a wide array of data sources, including IoT devices and unstructured data

Data Integration Services in California

Explore Kanerika’s data integration services in California, designed to seamlessly connect diverse data sources, streamline workflows, and enhance data accessibility.

Case Studies: Kanerika’s Successful Data Integration Projects

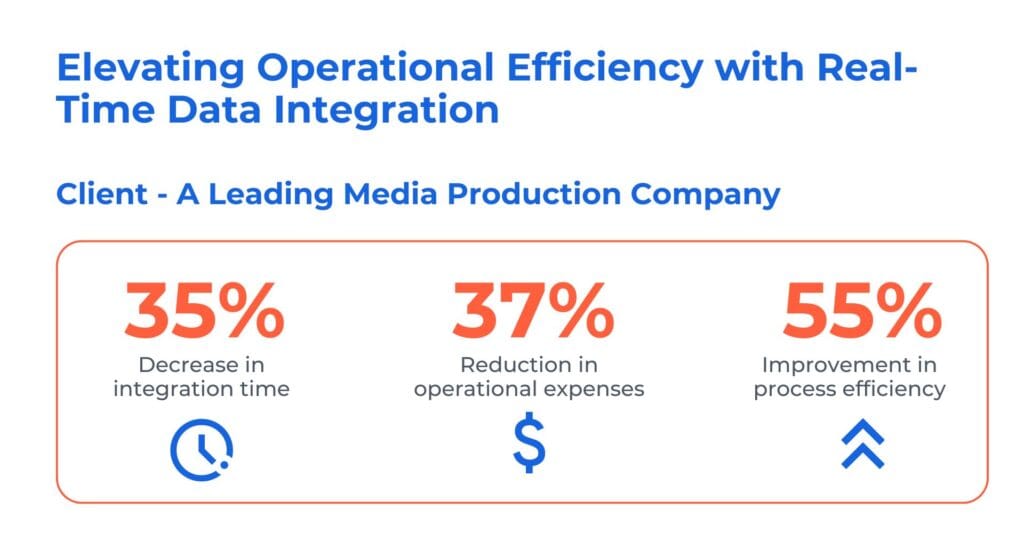

1. Unlocking Operational Efficiency with Real-Time Data Integration

The client is a prominent media production company operating in the global film, television, and streaming industry. It faced a significant challenge while upgrading its CRM to the new MS Dynamics CRM: the complexity of accessing multiple systems slowed down response times and posed security and efficiency concerns.

Kanerika resolved the problem by leveraging tools like Informatica and Dynamics 365. Here’s how we used our real-time data integration solution to streamline and expedite operations and reduce operating costs while maintaining data security:

- Implemented iPaaS integration with the Dynamics 365 connector, ensuring future-ready app integration and reducing pension processing time

- Enhanced Dynamics 365 with real-time data integration to paginated data, guaranteeing compliance with PHI and PCI

- Streamlined exception management, enabled proactive monitoring, and automated third-party integration, driving efficiency

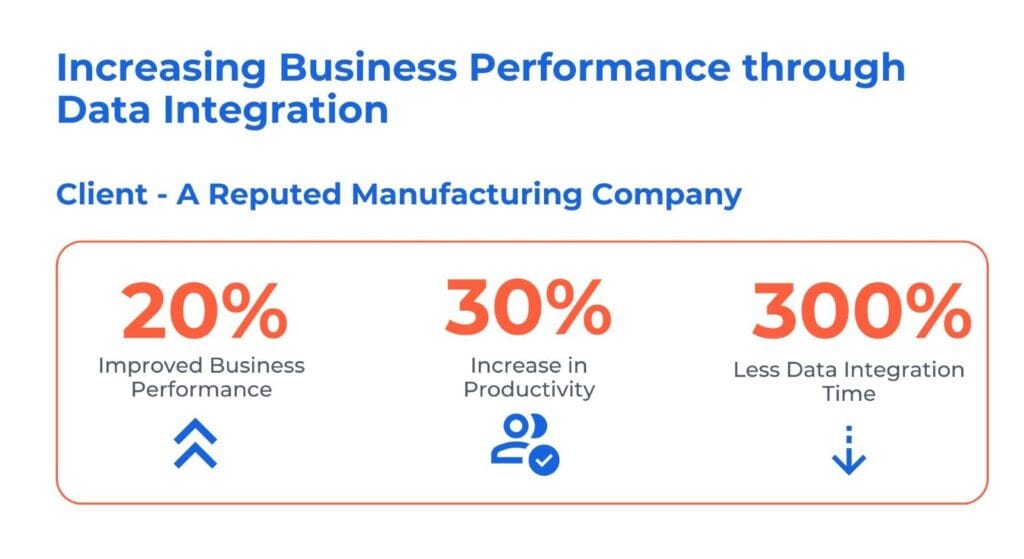

2. Enhancing Business Performance through Data Integration

The client is a prominent edible oil manufacturer and distributor, with a nationwide reach. The usage of both SAP and non-SAP systems led to inconsistent and delayed data insights, affecting precise decision-making. Furthermore, the manual synchronization of financial and HR data introduced both inefficiencies and inaccuracies.

Kanerika addressed the client’s challenges by delivering the following data integration solutions:

- Consolidated and centralized SAP and non-SAP data sources, providing insights for accurate decision-making

- Streamlined integration of financial and HR data, ensuring synchronization and enhancing overall business performance

- Automated integration processes to eliminate manual efforts and minimize error risks, saving cost and improving efficiency

Kanerika: The Trusted Choice for Streamlined and Secure Data Integration

At Kanerika, we excel in unifying your data landscapes, leveraging cutting-edge tools and techniques to create seamless, powerful data ecosystems. Our expertise spans the most advanced data integration platforms, ensuring your information flows efficiently and securely across your entire organization.

With a proven track record of success, we’ve tackled complex data integration challenges for diverse clients in banking, retail, logistics, healthcare, and manufacturing. Our tailored solutions address the unique needs of each industry, driving innovation and fueling growth.

We understand that well-managed data is the cornerstone of informed decision-making and operational excellence. That’s why we’re committed to building and maintaining robust data infrastructures that empower you to extract maximum value from your information assets.

Choose Kanerika for data integration that’s not just about connecting systems, but about unlocking your data’s full potential to propel your business forward.

FAQs

1. What is data integration?

Data integration is the process of combining data from multiple sources into a unified view. It enables businesses to access, analyze, and manage data efficiently across systems such as CRMs, ERPs, databases, and cloud applications.

2. Why is data integration important?

It helps eliminate data silos, ensures consistency, and improves decision-making. With integrated data, organizations can gain a complete view of operations, customers, and performance, leading to better outcomes.

3. What are the main types of data integration?

Common types include:

- ETL (Extract, Transform, Load)

- ELT (Extract, Load, Transform)

- Data replication

- Streaming integration

- Data virtualization

- Application-based integration

4. How does data integration improve business performance?

It enables real-time analytics, improves data quality, supports automation, and empowers teams to make data-driven decisions more quickly and accurately.

5. What are the challenges of data integration?

Key challenges include data inconsistency, integration complexity, security concerns, legacy systems, and the cost of implementation and maintenance.

6. What tools are commonly used for data integration?

Popular tools include Informatica, Talend, Azure Data Factory, Apache NiFi, Fivetran, and Stitch, each offering different capabilities for batch, real-time, or cloud integration.

7. Is data integration only for large enterprises?

No. Scalable and cloud-based integration tools make it accessible to small and mid-sized businesses as well, especially with no-code or low-code platforms.

What do you mean by data integration?

Data integration is combining information from different sources into a single, unified view. This allows you to access and analyze all your data together, regardless of where it came from. It’s crucial for getting a complete picture and making more informed decisions.

What is data quality and integration?

Data quality ensures your information is accurate, complete, and reliable. Data integration is about bringing all your data together from different sources into one unified view. Together, they ensure you have good, consistent data you can trust for better business decisions.

What best defines data integration?

Data integration is the process of collecting information from different sources and bringing it all together. The goal is to create a single, consistent view of your data. This makes it easier to access, analyze, and use for better decisions across your organization.

What are the 4 C's of data quality?

The 4 C’s of data quality are Completeness (having all necessary data), Consistency (data is uniform and doesn’t contradict), Accuracy (data is correct and reflects reality), and Currency (data is up-to-date and available when needed). They ensure your information is reliable for better decision-making.

Which tool is used for data integration?

Tools for data integration help you gather information from many different systems and combine it into one consistent place. These specialized tools often extract data, transform it to fit your needs, and then load it into a new destination. This process ensures your data is clean and ready for use.

What are the four types of integration?

The four main types of integration are: System Integration (connecting different software applications); Data Integration (combining information from various sources); Process Integration (automating and linking business steps); and User Interface Integration (unifying how users interact with multiple applications).

What are examples of data integration?

Data integration means bringing information from different places together into one unified view. Examples include combining customer details from your sales and support systems into one complete profile. Another is merging sales figures from your website, physical stores, and mobile app into a single report.

What are the 4 types of data in data science?

The four main types of data in data science are:

- Nominal: Categories with no specific order (e.g., colors, types of fruit).

- Ordinal: Categories with a meaningful order (e.g., shirt sizes like S, M, L; survey ratings like good, better, best).

- Discrete: Countable numbers, representing whole distinct items (e.g., number of children, cars).

- Continuous: Measurable numbers that can take any value within a range (e.g., height, temperature, time).

What are the top 5 data integration patterns?

The top 5 data integration patterns are: Batch Processing, Real-time Integration, Data Virtualization, Data Replication, and API Integration. These methods help connect diverse systems to share information effectively.

What is ETL in data integration?

ETL stands for Extract, Transform, Load. It’s a process to gather data from various sources. The data is Extracted, then Transformed (cleaned and organized) to fit specific needs. Finally, the prepared data is Loaded into a central system for reporting and analysis.

What is the main goal of data integration?

The main goal of data integration is to combine information from various different sources into one place. It creates a unified, consistent, and accurate view of all your data. This makes it easier to access, analyze, and use this reliable information for better decision-making.

Is data integration the same as ETL?

No, they are related but not the same. Data integration is the overall process of combining data from different sources into a unified view. ETL (Extract, Transform, Load) is a specific type of process or method used to achieve data integration, often for moving data into a new system like a data warehouse. So, ETL is one way to do data integration.

What are the steps of data integration?

Data integration involves first gathering data from different sources. Then, you clean and transform this data so it’s consistent and ready for use. Finally, it’s loaded into a central system where all information can be accessed and analyzed together.

What are the 4 steps of data processing?

The four key steps in data processing are:

1. Collection: Gathering all the raw information from various sources.

2. Preparation: Cleaning, organizing, and structuring that collected data.

3. Processing: Transforming and analyzing the data to extract insights.

4. Output: Presenting the results in a clear, useful format like reports or charts.

How does data integration improve data quality?

Data integration combines information from various sources into a unified view. This process helps identify and correct errors, remove duplicates, and ensure data is consistent across all systems. The result is more accurate, complete, and trustworthy data.