Data extraction helps businesses gather valuable information from numerous, often unstructured, sources such as websites, documents, and customer databases. Data extraction tools retrieve the data that matters efficiently, saving time and resources. The main benefits include better decision-making, increased revenue, and reduced costs, along with a better ability to meet high customer expectations.

Implementing data extraction services in your organization allows for better business decisions, enhanced productivity, and valuable industry insights. Data extraction is crucial when dealing with substantial volumes of diverse data stored in various locations. With this information, you can analyze customer behavior, develop buyer personas, and refine your products and customer service, ultimately promoting growth within your organization.

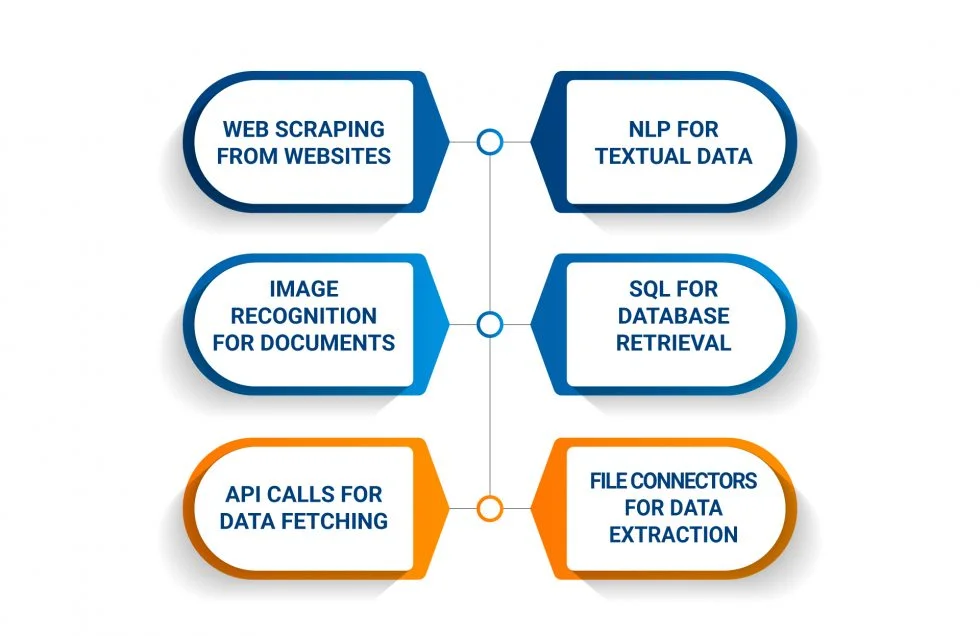

Types of Data Extraction

When it comes to data extraction, various techniques can be used depending on the type of data being extracted. Here are some of the most common types of data extraction:

- Web Scraping: This involves extracting data from websites by parsing the HTML of their pages. Web scraping can be done manually or with automated tools.

- Text Extraction: This involves extracting data from unstructured text sources such as emails, social media posts, and news articles. Text extraction can be done using natural language processing (NLP) techniques.

- Image Extraction: This involves extracting data from images using image recognition technology. Image extraction can pull data from scanned documents, receipts, and invoices; optical character recognition (OCR), for instance, converts images of text into machine-readable text.

- Database Extraction: This involves extracting data from SQL and NoSQL databases. Database extraction can be done with queries (SQL for relational databases, or the database’s own query interface for NoSQL stores) or with database connectors.

- API Extraction: This involves extracting data from application programming interfaces (APIs). API extraction can be done using API calls or with the help of API connectors.

- File Extraction: This involves extracting data from various files such as PDFs, Excel spreadsheets, and CSV files. File extraction can be done using specialized software or with the help of file connectors.

Each type of data extraction has its advantages and disadvantages. The choice of technique depends on the type of data being extracted and the project’s specific requirements.
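To make web scraping more concrete, here is a minimal sketch that uses only Python’s standard-library `html.parser`. It assumes, purely for illustration, that product names sit in elements carrying a hypothetical `product-name` class and contain plain text only; real pages use their own markup, and production scrapers usually rely on a dedicated parsing library.

```python
from html.parser import HTMLParser


class ProductNameParser(HTMLParser):
    """Collect the text of elements whose class list contains `target_class`.

    Assumes matching elements hold plain text (no nested tags), which keeps
    the state tracking to a single flag.
    """

    def __init__(self, target_class: str = "product-name") -> None:
        super().__init__()
        self.target_class = target_class
        self._inside_target = False
        self.names: list[str] = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None.
        classes = (dict(attrs).get("class") or "").split()
        if self.target_class in classes:
            self._inside_target = True
            self.names.append("")

    def handle_endtag(self, tag):
        self._inside_target = False

    def handle_data(self, data):
        if self._inside_target:
            self.names[-1] += data


def extract_product_names(html: str) -> list[str]:
    parser = ProductNameParser()
    parser.feed(html)
    parser.close()
    return [name.strip() for name in parser.names]


# Hypothetical snippet standing in for a downloaded page.
SAMPLE_HTML = """
<ul>
  <li class="product-name">Desk Lamp</li>
  <li class="product-name sale">Office Chair</li>
  <li>Free shipping on all orders</li>
</ul>
"""

print(extract_product_names(SAMPLE_HTML))  # ['Desk Lamp', 'Office Chair']
```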

The Data Extraction Process

Data extraction involves collecting or retrieving disparate types of data from various sources, many of which may be poorly organized or unstructured. This process enables the consolidation, processing, and refining of data for storage in a centralized location, preparing it for transformation.

The process of data extraction involves the following steps:

- Identifying the data sources: The first step in the data extraction process is to identify the data sources. These sources can be internal or external to the organization. Examples of internal sources include databases, spreadsheets, and files, while external sources may include social media platforms, websites, and online directories.

- Extracting the data: Once the sources are identified, use tools and techniques suited to the type and source of the data. Methods range from web scraping tools and APIs to specialized data extraction software.

- Cleaning the data: After extraction, remove duplicates, correct errors, and standardize the data so it is easier to analyze.

- Transforming the data: Convert the cleaned data into an easily analyzable format, such as a spreadsheet or database, in preparation for analysis.

- Loading the data: The final step in the data extraction process is to load the data into a centralized location, such as a data warehouse or a data lake. This makes it easy to access and analyze the data.
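The five steps above can be sketched as a tiny Python pipeline. Everything here is illustrative: the CSV export, its column names, and the DD/MM/YYYY date format are assumptions, and a plain list stands in for the data warehouse or data lake.

```python
import csv
import io
from datetime import datetime

# Step 1 (identify the source): a hypothetical CRM export. Column names
# and the DD/MM/YYYY date format are assumptions for this sketch.
RAW_CSV = """name,email,signup_date
 Ada Lovelace ,ADA@example.com,03/12/2023
Alan Turing,alan@example.com,15/01/2024
Ada Lovelace,ada@example.com ,03/12/2023
Grace Hopper,,20/02/2024
"""


def extract(raw: str) -> list[dict]:
    """Step 2: read every row from the source."""
    return list(csv.DictReader(io.StringIO(raw)))


def clean(rows: list[dict]) -> list[dict]:
    """Step 3: trim whitespace, lowercase emails, drop rows without an email and duplicates."""
    seen: set[str] = set()
    cleaned = []
    for row in rows:
        row = {key: (value or "").strip() for key, value in row.items()}
        email = row["email"].lower()
        if not email or email in seen:
            continue
        seen.add(email)
        cleaned.append({**row, "email": email})
    return cleaned


def transform(rows: list[dict]) -> list[dict]:
    """Step 4: standardize dates to ISO 8601 (YYYY-MM-DD)."""
    return [
        {**row, "signup_date": datetime.strptime(row["signup_date"], "%d/%m/%Y").date().isoformat()}
        for row in rows
    ]


def load(rows: list[dict], warehouse: list[dict]) -> int:
    """Step 5: append to the central store; a list stands in for a warehouse here."""
    warehouse.extend(rows)
    return len(rows)


warehouse: list[dict] = []
print(load(transform(clean(extract(RAW_CSV))), warehouse))  # 2
print(warehouse[0])
# {'name': 'Ada Lovelace', 'email': 'ada@example.com', 'signup_date': '2023-12-03'}
```

The duplicate Ada row and the Grace Hopper row without an email are dropped during cleaning, so only two records reach the store.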

Overall, the data extraction process is an essential part of any data-driven organization. By collecting and consolidating data from various sources, organizations can gain valuable insights, make informed decisions, and work more efficiently across all workflows.

Data Extraction Examples and Use Cases

Data extraction is a powerful tool that can help businesses gather valuable information from various sources. Here are some examples of how data extraction can be used:

- Lead Generation: Many businesses use data extraction to gather contact information from potential customers. For example, a real estate company might extract contact information from online property listings to create a database of potential clients. This can help them reach out to these potential clients with their services in the future.

- Price Monitoring: Data extraction can also be used for price monitoring. For example, an e-commerce website might use data extraction to monitor the prices of its competitors’ products. This can help them adjust their prices to remain competitive in the market.

- Social Media Analysis: Data extraction can be used to gather information from social media platforms. For example, a business might use data extraction to gather information about their brand’s mentions on Twitter. This can help them understand how their audience perceives their brand and adjust their marketing strategy accordingly.

- Financial Analysis: Data extraction can gather financial data from various sources. For example, a business might use data extraction to gather financial data from their competitors’ annual reports. This can help them understand their competitors’ financial performance and adjust their strategy accordingly.

- Product Research: Data extraction can also support product research. For example, a business might scrape product information from online marketplaces. This can help them gather information about their competitors’ products and adjust their product offerings accordingly.

Utilizing data extraction in these ways enables businesses to obtain valuable intelligence from numerous sources. This information can provide critical insights into the market, the competition, and the customer base, helping you make well-informed decisions and maintain an edge over your rivals.
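The price-monitoring use case reduces to a simple comparison once competitor prices have been extracted. A minimal sketch, assuming prices are already collected into dictionaries keyed by hypothetical SKUs and that a 5% undercut is the alert threshold (an arbitrary choice for illustration):

```python
def price_gaps(our_prices: dict[str, float], competitor_prices: dict[str, float],
               threshold: float = 0.05) -> dict[str, float]:
    """Return {sku: percent undercut} where a competitor is cheaper by more than `threshold`.

    SKUs with no competitor price are skipped.
    """
    flagged = {}
    for sku, ours in our_prices.items():
        theirs = competitor_prices.get(sku)
        if theirs is None:
            continue
        gap = (ours - theirs) / ours  # fraction by which the competitor undercuts us
        if gap > threshold:
            flagged[sku] = round(gap * 100, 1)
    return flagged


# Hypothetical prices; competitor figures would come from an extraction job.
ours = {"LAMP-01": 40.00, "CHAIR-02": 120.00, "DESK-03": 250.00}
theirs = {"LAMP-01": 36.00, "CHAIR-02": 118.00}
print(price_gaps(ours, theirs))  # {'LAMP-01': 10.0}
```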

How Data is Extracted: Structured & Unstructured Data

Data extraction is the process of retrieving data from various sources, which may hold structured or unstructured data. Structured data is organized and stored in a specific format, such as a database table or spreadsheet. Unstructured data, on the other hand, is not organized in a predefined manner and is found in sources such as PDFs, emails, and social media posts.

Structured Data Extraction

Extracting structured data is relatively straightforward because the data is stored in a known format. The process involves identifying the relevant data fields and retrieving their values. Common methods of extracting structured data include:

- Using SQL queries to retrieve data from databases

- Using APIs to extract data from web applications

- Using web scraping tools to extract data from websites

- Using ETL (Extract, Transform, Load) tools to extract data from various sources and transform it into a structured format
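Because structured sources already label their fields, extraction often comes down to selecting the right keys. The sketch below parses a hypothetical JSON response of the kind a web application’s API might return; no real API is called, and the field names are assumptions.

```python
import json

# Hypothetical API response body; the field names are assumptions.
RESPONSE_BODY = """
{
  "orders": [
    {"id": 101, "customer": {"name": "Ada"}, "total": 42.5, "status": "shipped"},
    {"id": 102, "customer": {"name": "Alan"}, "total": 17.0, "status": "pending"}
  ]
}
"""


def extract_orders(body: str) -> list[dict]:
    """Flatten each order into the fields we need, pulling the nested customer name up."""
    data = json.loads(body)
    return [
        {
            "order_id": order["id"],
            "customer": order["customer"]["name"],
            "total": order["total"],
            "status": order["status"],
        }
        for order in data["orders"]
    ]


for row in extract_orders(RESPONSE_BODY):
    print(row)
```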

Unstructured Data Extraction

Extracting data from unstructured sources often presents more challenges due to the data’s disorganized nature. This process involves converting the data into a structured format for analysis and business application.

Common methods of extracting unstructured data include:

- Using natural language processing (NLP) techniques to extract information from text-based sources such as emails, social media, and news articles

- Using optical character recognition (OCR) software to convert text in images and scanned documents into machine-readable text

- Using machine learning algorithms to identify patterns and extract information from unstructured data sources
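Before reaching for NLP or machine learning, many teams start with simple pattern rules. The sketch below uses Python’s `re` module as a deliberately basic, rule-based stand-in for those techniques: it pulls email addresses, ISO dates, and dollar amounts out of a made-up email body. The patterns are simplified assumptions and will miss many real-world formats.

```python
import re

# Deliberately simple patterns; real-world formats vary far more than this.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
ISO_DATE_RE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")
USD_AMOUNT_RE = re.compile(r"\$(\d+(?:,\d{3})*(?:\.\d{2})?)")


def extract_fields(text: str) -> dict[str, list]:
    """Turn free text into a small structured record."""
    return {
        "emails": EMAIL_RE.findall(text),
        "dates": ISO_DATE_RE.findall(text),
        "amounts": [float(a.replace(",", "")) for a in USD_AMOUNT_RE.findall(text)],
    }


# A made-up customer email standing in for an unstructured source.
message = (
    "Hi team, invoice 4471 for $1,250.00 is due on 2024-03-31. "
    "Questions go to billing@example.com or j.doe@example.co.uk."
)
print(extract_fields(message))
```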

In conclusion, data extraction is a critical process that involves retrieving data from various sources, including structured and unstructured data. The methods used for extracting data depend on the type of data source and the format of the data. Structured data extraction is relatively straightforward, while unstructured data extraction requires more advanced techniques such as NLP and machine learning.

Using Data Extraction on Qualitative Data

When conducting a systematic review, data extraction is crucial to identifying relevant information from included studies. Data extraction, while often linked to quantitative data, also plays an essential role in extracting data from qualitative studies.

To extract data from qualitative studies, you should follow these steps:

- Develop a data extraction table with relevant categories and subcategories based on the research questions and objectives.

- Review the included studies and identify relevant data to extract.

- Extract the data by summarizing key findings and themes, including direct quotes and supporting evidence.

- Ensure the accuracy of the extracted data by having two or more people extract data from each study and compare their results.

- Analyze the extracted data to identify patterns, themes, and relationships between the studies.
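The dual-extraction check in the steps above can be partly automated by comparing the two extraction tables cell by cell. A minimal sketch, assuming each reviewer’s table is a dictionary mapping a study ID to `{category: extracted value}`; the study IDs and categories shown are hypothetical:

```python
def find_disagreements(reviewer_a: dict, reviewer_b: dict) -> list[tuple]:
    """Return (study, category, value_a, value_b) for every cell where the reviewers differ.

    A cell one reviewer filled in and the other left out shows up with None.
    """
    disagreements = []
    for study in sorted(reviewer_a.keys() | reviewer_b.keys()):
        row_a = reviewer_a.get(study, {})
        row_b = reviewer_b.get(study, {})
        for category in sorted(row_a.keys() | row_b.keys()):
            value_a, value_b = row_a.get(category), row_b.get(category)
            if value_a != value_b:
                disagreements.append((study, category, value_a, value_b))
    return disagreements


# Hypothetical extraction tables from two independent reviewers.
reviewer_a = {
    "Study A (2020)": {"setting": "primary care", "key theme": "access barriers"},
    "Study B (2021)": {"setting": "hospital", "key theme": "staff burnout"},
}
reviewer_b = {
    "Study A (2020)": {"setting": "primary care", "key theme": "cost barriers"},
    "Study B (2021)": {"setting": "hospital", "key theme": "staff burnout"},
}

for row in find_disagreements(reviewer_a, reviewer_b):
    print(row)
```

Flagged cells are then discussed and resolved by the reviewers. Exact string comparison is a simplification, since two reviewers may phrase the same theme differently.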

Overall, data extraction for qualitative studies requires careful attention to the research questions and objectives, as well as to detail and accuracy throughout the extraction process. Following these steps helps ensure the extracted data is relevant, reliable, and useful for your systematic review.

Data Extraction and ETL

Looking at the ETL process might help put the importance of data extraction into perspective. To sum it up, ETL enables businesses and organizations to centralize data from various sources and assimilate various data types into a standard format.

The ETL process consists of three stages: Extraction, Transformation, and Loading.

- Extraction: It is the process of obtaining information from multiple sources or systems. To extract data, one must first identify and discover important information. Extraction enables the combining of various types of data and the subsequent mining of business intelligence.

- Transformation: Next, the extracted data is refined. During transformation, data is organized, cleaned, and categorized. Audits and the removal of redundant entries are among the measures that keep the data reliable, consistent, and usable.

- Loading: The transformed, high-quality data is loaded into a single target system, such as a data warehouse, where it is stored and analyzed.
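To show how ETL assimilates different data types into a standard format, here is a minimal sketch that extracts from two hypothetical sources (a CSV export and a JSON feed with different field names), transforms both into one `(sku, price_cents)` shape, and loads the result into an in-memory SQLite table standing in for the central store.

```python
import csv
import io
import json
import sqlite3

# Two hypothetical sources describing products in different shapes.
CSV_SOURCE = "sku,price_usd\nLAMP-01,40.00\nCHAIR-02,120.00\n"
JSON_SOURCE = '[{"productCode": "DESK-03", "price": {"amount": 250, "currency": "USD"}}]'


def extract() -> tuple[list[dict], list[dict]]:
    """Extraction: read each source in its native format."""
    return list(csv.DictReader(io.StringIO(CSV_SOURCE))), json.loads(JSON_SOURCE)


def transform(csv_rows: list[dict], json_rows: list[dict]) -> list[tuple[str, int]]:
    """Transformation: map both shapes onto one standard (sku, price_cents) record.

    Assumes every price is already in US dollars; no currency conversion is done.
    """
    records = [(row["sku"].strip(), round(float(row["price_usd"]) * 100)) for row in csv_rows]
    records += [(item["productCode"].strip(), round(item["price"]["amount"] * 100)) for item in json_rows]
    return records


def load(records: list[tuple[str, int]], conn: sqlite3.Connection) -> None:
    """Loading: write the standardized records into a single table."""
    conn.execute("CREATE TABLE IF NOT EXISTS products (sku TEXT PRIMARY KEY, price_cents INTEGER)")
    conn.executemany("INSERT OR REPLACE INTO products VALUES (?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(*extract()), conn)
print(conn.execute("SELECT sku, price_cents FROM products ORDER BY sku").fetchall())
# [('CHAIR-02', 12000), ('DESK-03', 25000), ('LAMP-01', 4000)]
```

Storing prices as integer cents avoids floating-point rounding in the target table, and `INSERT OR REPLACE` lets the same load be rerun without creating duplicate rows.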

Benefits of Data Extraction

Data extraction tools offer many benefits to organizations. Here are some of the benefits:

- Eliminates Manual Data Entry: Your employees no longer need to spend time and energy on manual data extraction, which is tedious and error-prone when the volume of data is large. Data extraction tools automate the collection and organization of data.

- Choose Your Perfect Tool: Data extraction tools are built to automate extraction procedures, and many can handle complex projects involving web data, PDFs, images, and other documents.

- Diverse Data: Data extraction tools can handle many data types, including databases, web data, PDFs, documents, and images, so you are not restricted in the sources you can extract from. Most service providers also offer a range of output formats to suit your preferences and business requirements.

- Accurate and Specified Data: Automated extraction reduces manual errors, so the information you receive is more accurate and reliable for your enterprise requirements. Even detailed specifications can be taken into account during extraction, which improves data quality and makes data administration easier.

Data Extraction Challenges for Businesses

When it comes to data extraction, businesses face several challenges that can make the process complex and time-consuming.

Here are some of the common challenges businesses face when it comes to data extraction:

1. Data Quality

One of the biggest challenges businesses face with data extraction is ensuring data quality. Poor data quality can lead to inaccurate insights, negatively impacting business decisions. To ensure data quality, businesses must clearly understand the data they are extracting, including its source, format, and structure.
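One practical way to act on that understanding is to validate each extracted record against explicit rules before it is loaded. A minimal sketch; the field names and rules are hypothetical examples rather than a standard:

```python
import re

# Hypothetical rules for an extracted order record.
REQUIRED_FIELDS = ("customer_id", "email", "order_total")
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate(record: dict) -> list[str]:
    """Return the quality problems found in one record (an empty list means it passed)."""
    problems = [f"missing {field}" for field in REQUIRED_FIELDS if record.get(field) in (None, "")]
    email = record.get("email")
    if email and not EMAIL_PATTERN.match(email):
        problems.append("malformed email")
    total = record.get("order_total")
    if isinstance(total, (int, float)) and total < 0:
        problems.append("negative order_total")
    return problems


print(validate({"customer_id": "C-1", "email": "ada@example.com", "order_total": 42.0}))  # []
print(validate({"customer_id": "", "email": "not-an-email", "order_total": -5}))
# ['missing customer_id', 'malformed email', 'negative order_total']
```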

2. Data Volume

Another challenge businesses face with data extraction is dealing with large volumes of data. Extracting data from multiple sources often leads to the accumulation of a massive amount of data, which requires thorough processing and analysis. To overcome this challenge, businesses need the right tools and resources to handle large volumes of data.
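One common way to keep large volumes manageable is to process data in fixed-size batches instead of loading everything into memory at once. A minimal sketch using Python’s `csv` module and `itertools.islice`; the in-memory sample file and the batch size of 1,000 rows are assumptions for illustration:

```python
import csv
import io
from itertools import islice
from typing import Iterator, TextIO


def read_in_batches(file_obj: TextIO, batch_size: int = 1000) -> Iterator[list[dict]]:
    """Yield lists of at most `batch_size` rows, so only one batch is held in memory."""
    reader = csv.DictReader(file_obj)
    while True:
        batch = list(islice(reader, batch_size))
        if not batch:
            return
        yield batch


# Stand-in for a large file; in practice you would pass open(path, newline="").
source = io.StringIO("id,amount\n" + "".join(f"{i},{i % 10}\n" for i in range(2500)))

batch_sizes = []
running_total = 0
for batch in read_in_batches(source, batch_size=1000):
    batch_sizes.append(len(batch))
    running_total += sum(int(row["amount"]) for row in batch)

print(batch_sizes, running_total)  # [1000, 1000, 500] 11250
```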

3. Data Integration

Data extraction is just one part of the data management process. Once the data is extracted, it must be integrated with other data sources to provide a complete picture of the business. This process can be challenging, as different data sources may have different formats and structures.
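A typical integration problem is that two systems identify the same entity differently. The sketch below assumes, hypothetically, that a CRM stores customer IDs as strings like `"CUST-0042"` while a billing system uses the integer `42`; it normalizes the keys, joins the records, and reports billing entries that match no customer rather than silently dropping them.

```python
def integrate(crm_customers: list[dict], billing_invoices: list[dict]) -> tuple[list[dict], list[int]]:
    """Join CRM customers to billing totals despite different ID formats.

    Assumed (hypothetical) formats: CRM IDs look like "CUST-0042"; billing uses 42.
    Returns the joined rows plus billing customer IDs that matched no CRM record.
    """

    def crm_key(customer_id: str) -> int:
        return int(customer_id.removeprefix("CUST-"))  # "CUST-0042" -> 42

    totals: dict[int, float] = {}
    for invoice in billing_invoices:
        totals[invoice["customer"]] = totals.get(invoice["customer"], 0) + invoice["amount"]

    rows = [{"name": c["name"], "invoiced": totals.get(crm_key(c["id"]), 0)} for c in crm_customers]
    known_ids = {crm_key(c["id"]) for c in crm_customers}
    unmatched = sorted(set(totals) - known_ids)
    return rows, unmatched


crm = [{"id": "CUST-0042", "name": "Ada"}, {"id": "CUST-0007", "name": "Alan"}]
billing = [
    {"customer": 42, "amount": 100},
    {"customer": 42, "amount": 50},
    {"customer": 99, "amount": 10},
]
print(integrate(crm, billing))
# ([{'name': 'Ada', 'invoiced': 150}, {'name': 'Alan', 'invoiced': 0}], [99])
```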

4. Data Security

Data security is a critical concern for businesses regarding data extraction. Extracting data from multiple sources can increase the risk of data breaches and cyber-attacks. To ensure data security, businesses need robust security measures like encryption, firewalls, and access controls.

5. Data Privacy

Data privacy is another important concern for businesses regarding data extraction. Extracting data from multiple sources can result in collecting sensitive data, such as personal information. To ensure data privacy, businesses must comply with data protection regulations like GDPR, CCPA, and HIPAA.
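One technique often used alongside these regulations is pseudonymization: replacing direct identifiers with keyed hashes so records can still be linked without exposing the raw values. A minimal sketch using Python’s `hmac` and `hashlib`; the key and the list of sensitive fields are placeholders. Pseudonymized data can still count as personal data under regulations such as GDPR, so this is one safeguard rather than compliance on its own.

```python
import hashlib
import hmac

# Placeholder only: a real key would come from a secrets manager, never source code.
PSEUDONYM_KEY = b"replace-with-a-securely-stored-key"
PII_FIELDS = {"email", "phone"}  # hypothetical list of sensitive fields


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Keyed SHA-256 hash of a normalized identifier.

    The same input always maps to the same output (so joins still work),
    but the output cannot be recomputed from the raw value without the key.
    """
    normalized = value.strip().lower()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_record(record: dict) -> dict:
    """Replace non-empty PII fields with pseudonyms; leave other fields unchanged."""
    return {k: pseudonymize(v) if k in PII_FIELDS and v else v for k, v in record.items()}


masked = mask_record({"email": "Ada@Example.com ", "country": "UK"})
print(masked["country"], len(masked["email"]))  # UK 64
```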

Kanerika: Your Trusted Data Strategy Partner

When it comes to data extraction, having a trusted partner to guide you through the process can make all the difference. That’s where Kanerika comes in.

Kanerika, a global consulting firm with expertise in data management and analytics, excels in assisting businesses in aligning their data strategy with their overall business strategy. This approach integrates complex data management techniques with a deep understanding of business objectives.

Our no-code DataOps platform, FLIP, can transform your business.

Here are just a few reasons why Kanerika is your go-to partner for data extraction:

- Expertise in Data Management: Kanerika’s unique data-to-value model enables businesses to get self-service insights across their heterogeneous data sources. This means you can consolidate your data into a single repository, prepare it for analysis, share it with external partners, and store it for archival purposes.

- Enable Self-Service Business Intelligence: Kanerika can help you enable self-service business intelligence, so your team can access the data they need to make informed decisions. With Kanerika’s help, you can modernize and scale your data analytics, drive productivity, and reduce costs.

- Microsoft Gold Partner: Kanerika is a Microsoft Gold Partner, which means they have expertise in Microsoft Power BI and Azure Solutions for implementing BI solutions for their customers. This partnership ensures you have access to the latest technology and solutions for your data extraction needs.

Kanerika is your trusted partner for all your data extraction needs. With their expertise in data management, self-service business intelligence, and Microsoft partnership, you can be confident that you’re getting the best solutions for your business.

FAQs

What is meant by data extraction?

Data extraction is like carefully picking out specific information from a larger dataset. It’s a process of separating the wheat from the chaff – focusing on the data points you need, whether from a spreadsheet, database, or even a webpage. The goal is to transform raw, unstructured data into something usable and readily analyzable. Think of it as intelligent data harvesting.

What are the three data extraction methods?

Data extraction boils down to three core approaches: First, you can directly access structured data using APIs or database queries. Second, you can scrape semi-structured or unstructured data from websites and documents using web scraping tools. Finally, human-in-the-loop methods involve manual review and extraction, often for complex or nuanced data. These methods often work in combination for optimal results.

What is the purpose of extracting data?

Data extraction’s core purpose is to unlock hidden value within raw information. It transforms messy, disparate data into a usable format, allowing for analysis and informed decision-making. Essentially, it’s about turning raw facts into actionable intelligence. This fuels everything from business strategy to scientific discovery.

What is SQL data extraction?

SQL data extraction is the process of pulling specific information from a database using SQL queries. Think of it as precisely targeting and retrieving the data you need, like fishing for specific types of fish from a vast ocean. It’s crucial for analysis, reporting, and transferring data to other systems. Its efficiency depends largely on how well your SQL query is written and on how the database is indexed.

What is a data processing method?

A data processing method is simply a systematic way to transform raw data into something useful. It involves steps like cleaning, organizing, and analyzing the data to extract meaningful information or insights. This could range from simple calculations to complex algorithms depending on the goal. Ultimately, it’s about making raw data understandable and actionable.

What is data extraction and ETL?

Data extraction is the process of pulling specific information from various sources; think of it as carefully harvesting data from different fields. ETL (Extract, Transform, Load) builds on this by taking that extracted data, cleaning and reshaping it (transforming), and then loading it into a target system such as a data warehouse (loading) for analysis or other purposes. Essentially, ETL turns raw data into insightful information.

What is the meaning of data pull?

A data pull is like actively requesting information from a source. Instead of data being pushed to you automatically, you initiate the process to retrieve specific data you need. Think of it as going to the library and selecting only the books you require, rather than receiving all books delivered to your home. This gives you control over what data is collected and when.

What is ETL in programming?

ETL stands for Extract, Transform, Load – a crucial process in data warehousing. It’s like a data pipeline, pulling raw data from various sources (extract), cleaning and shaping it to fit a specific format (transform), and finally loading it into a target database (load). Essentially, ETL makes messy, disparate data usable for analysis and reporting.

What is the data mining process?

Data mining isn’t a single step, but a cyclical process. It begins with defining a clear goal (what insights are you seeking?), then involves cleaning and preparing messy real-world data. Next, various algorithms are applied to uncover patterns and relationships, and finally, these findings are interpreted and communicated to drive decisions. It’s an iterative process, often refining steps based on initial results.

What is meant by data analysis?

Data analysis is the process of inspecting, cleaning, transforming, and modeling data with the goal of discovering useful information, informing conclusions, and supporting decision-making. It’s essentially detective work for numbers, using various techniques to uncover patterns, trends, and anomalies hidden within raw data. The aim is to translate raw facts into actionable insights. Ultimately, it’s about making sense of information to solve problems or answer questions.

How to extract data from SQL?

Extracting data from SQL involves using a SELECT statement, specifying the columns you need and optionally adding filtering (WHERE clause) to get only relevant rows. You can then output this data to your application, a spreadsheet, or another database. Essentially, you’re querying the database to retrieve specific information. The complexity depends on the intricacy of your data retrieval needs.
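As a sketch of that workflow, the example below runs a parameterized `SELECT ... WHERE` against an in-memory SQLite database and writes the result to CSV text that a spreadsheet could open. The `orders` table and its columns are hypothetical.

```python
import csv
import io
import sqlite3


def export_query_to_csv(conn: sqlite3.Connection, min_total: float) -> str:
    """Run a parameterized SELECT ... WHERE and return the rows as CSV text with a header."""
    cursor = conn.execute(
        "SELECT id, customer, total FROM orders WHERE total >= ? ORDER BY id",
        (min_total,),  # a placeholder instead of string formatting guards against SQL injection
    )
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow([column[0] for column in cursor.description])  # column names
    writer.writerows(cursor)
    return out.getvalue()


# Hypothetical table, created in memory for the example.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "Ada", 42.5), (2, "Alan", 17.0), (3, "Grace", 99.9)],
)
print(export_query_to_csv(conn, min_total=20))
```

Only the rows whose `total` passes the `WHERE` filter (orders 1 and 3 here) are exported.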