Artificial intelligence is advancing rapidly, propelled by powerful language models and innovative techniques like Retrieval-Augmented Generation (RAG). Large Language Models (LLMs) are sophisticated AI systems trained on extensive text datasets to understand and generate human-like language. RAG enhances these models by integrating external knowledge sources, enabling real-time retrieval of relevant information to improve the accuracy and depth of their responses.

According to recent studies, the global AI market is expected to exceed $190 billion by 2025, with demand for specialized approaches such as RAG growing alongside LLM adoption. “The future of AI isn’t about choosing between technologies, but understanding how they complement each other,” says Dr. Elena Rodriguez, AI Research Director at TechInnovate. This blog explores the key differences between RAG and standalone LLMs, helping businesses make an informed decision about which approach best fits their needs.

Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) is an AI architecture that enhances large language models (LLMs) by combining their generative capabilities with information retrieval systems. Unlike standard LLMs that rely solely on their pre-trained knowledge, RAG systems dynamically access and incorporate external knowledge sources during the generation process.

How it Works

RAG operates through a two-stage process. First, a retrieval component searches through a knowledge base (which can include documents, databases, or other structured information) to find content relevant to the user’s query. Then, a generation component (typically an LLM) uses both the query and the retrieved information to produce a comprehensive response. This approach grounds the model’s output in specific, relevant information rather than relying exclusively on its parametric knowledge.
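The two stages can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions: the knowledge base, the word-overlap scoring, and the `generate` placeholder are all hypothetical stand-ins, and a production system would use embeddings for retrieval and a real LLM call for generation.

```python
from collections import Counter

# Hypothetical in-memory knowledge base; a real system would index far more text.
KNOWLEDGE_BASE = [
    "The refund window is 30 days from the delivery date.",
    "Support is available on weekdays from 9am to 5pm.",
    "Premium plans include priority email support.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    cleaned = "".join(ch.lower() if ch.isalnum() else " " for ch in text)
    return cleaned.split()


def retrieve(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Stage 1: rank documents by how many query words they share."""
    query_words = Counter(tokenize(query))

    def overlap(doc: str) -> int:
        return sum((Counter(tokenize(doc)) & query_words).values())

    return sorted(documents, key=overlap, reverse=True)[:top_k]


def generate(query: str, context: list[str]) -> str:
    """Stage 2: placeholder for an LLM call; it only echoes the grounded context."""
    return f"Q: {query}\nGrounded in: {' '.join(context)}"


def answer(query: str) -> str:
    """Run retrieval first, then pass the query and retrieved text to generation."""
    return generate(query, retrieve(query, KNOWLEDGE_BASE))


print(answer("How long is the refund window?"))
```

The point of the sketch is the shape of the pipeline: the generator never sees the whole knowledge base, only the query plus whatever the retriever selected.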

Key Benefits and Uses

RAG offers several significant advantages over standalone LLMs. It reduces hallucinations by anchoring responses to retrieved source material, making AI systems more reliable for critical applications. It also lets models draw on up-to-date information beyond their training cutoff, which mitigates the problem of knowledge obsolescence.

RAG also improves accuracy on domain-specific tasks by incorporating specialized knowledge bases. Additionally, it enhances transparency, as organizations can trace responses back to source documents, providing greater auditability and trust in AI-generated content.

Retrieval-Augmented Generation (RAG) System Components

1. Document Ingestion Layer

The document ingestion process prepares source documents for analysis by collecting materials from various formats. It involves parsing different file types, extracting meaningful content, cleaning text, and breaking down large documents into manageable chunks that can be effectively analyzed and retrieved.
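As a rough sketch of the cleaning and chunking steps, the snippet below splits text into overlapping word windows. The function names and the default `chunk_size` and `overlap` values are illustrative assumptions; real pipelines often chunk by tokens, sentences, or document structure instead.

```python
def clean_text(raw: str) -> str:
    """Collapse runs of whitespace left over from parsing."""
    return " ".join(raw.split())


def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of chunk_size words that share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = clean_text(text).split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(12))
for chunk in chunk_words(sample, chunk_size=5, overlap=2):
    print(chunk)
```

The overlap is a design choice: it keeps a sentence that straddles a chunk boundary retrievable from at least one chunk, at the cost of storing some text twice.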

2. Embedding Model

Embedding models transform textual information into dense numerical vector representations that capture semantic meaning. These models convert text chunks into high-dimensional vectors, enabling precise similarity comparisons and preserving the underlying contextual relationships.
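To make the idea of "text in, fixed-length vector out" concrete, here is a deliberately simple stand-in. It is an assumption-laden toy: it hashes words into buckets, so it captures word overlap only, whereas a learned embedding model places texts with similar meaning close together even when they share no words.

```python
import hashlib
import math


def embed(text: str, dim: int = 64) -> list[float]:
    """Toy embedding: hash each word into one of `dim` buckets, then L2-normalize.

    This is NOT a semantic model; it only illustrates the input/output shape.
    """
    vector = [0.0] * dim
    for word in text.lower().split():
        digest = hashlib.md5(word.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:4], "big") % dim
        vector[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vector))
    # Leave an all-zero vector untouched to avoid dividing by zero.
    return [v / norm for v in vector] if norm else vector


vec = embed("retrieval augmented generation")
print(len(vec))
```

Normalizing to unit length is a common convention because it makes dot products between vectors directly comparable as cosine similarities.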

3. Vector Database

Vector databases are specialized storage systems designed to handle vector embeddings efficiently. They index and store vector representations, allowing rapid semantic search and nearest neighbor comparisons across large document collections.
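The core operation of a vector database, storing vectors and returning the nearest ones to a query, can be sketched with a brute-force scan. `InMemoryVectorStore` is a hypothetical class written for this illustration, not the API of any real product; actual vector databases use approximate nearest neighbor indexes to avoid comparing against every stored vector.

```python
import math


class InMemoryVectorStore:
    """Brute-force stand-in for a vector database (hypothetical, for illustration)."""

    def __init__(self) -> None:
        self._items: list[tuple[str, list[float]]] = []

    def add(self, doc_id: str, vector: list[float]) -> None:
        """Store a vector under a document identifier."""
        self._items.append((doc_id, vector))

    def search(self, query: list[float], top_k: int = 3) -> list[tuple[str, float]]:
        """Return the top_k (doc_id, distance) pairs with the smallest Euclidean distance."""
        scored = [(doc_id, math.dist(query, vec)) for doc_id, vec in self._items]
        return sorted(scored, key=lambda pair: pair[1])[:top_k]


store = InMemoryVectorStore()
store.add("a", [0.0, 0.0])
store.add("b", [1.0, 0.0])
store.add("c", [5.0, 5.0])
print([doc_id for doc_id, _ in store.search([0.9, 0.0], top_k=2)])
```

A linear scan like this is fine for a few thousand vectors; the specialized indexing the section describes is what keeps search fast at millions of vectors.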

4. Retrieval Mechanism

The retrieval mechanism uses a similarity measure such as cosine similarity to find the most relevant document chunks. It compares the query’s vector representation with the stored document vectors, then ranks the chunks and selects the most contextually appropriate ones.
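Cosine similarity is the dot product of two vectors divided by the product of their lengths, so it measures direction rather than magnitude. The sketch below ranks chunks by that score; the function names, chunk identifiers, and two-dimensional vectors are made up for illustration.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return dot(a, b) / (|a| * |b|), or 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_chunks(
    query_vec: list[float], chunk_vecs: dict[str, list[float]], top_k: int = 2
) -> list[tuple[str, float]]:
    """Score every chunk against the query and keep the top_k highest scores."""
    scored = [(cid, cosine_similarity(query_vec, vec)) for cid, vec in chunk_vecs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]


chunks = {"a": [2.0, 0.0], "b": [0.0, 3.0], "c": [1.0, 1.0]}
for chunk_id, score in rank_chunks([1.0, 0.2], chunks):
    print(chunk_id, round(score, 3))
```

Note that chunk "a" ranks first even though its vector is twice as long as the query: only the angle between the vectors matters.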

5. Prompt Engineering Module

Prompt engineering bridges retrieved information with language model response generation. This module constructs comprehensive prompts by integrating the original query, retrieved documents, and necessary metadata.
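One plausible way to assemble such a prompt is shown below. The instruction wording, the `build_prompt` name, and the `source` and `text` keys are assumptions made for this sketch; the right template depends on the model and the application.

```python
def build_prompt(query: str, chunks: list[dict]) -> str:
    """Assemble a grounded prompt from the query and retrieved chunks.

    Each chunk is a dict with the hypothetical keys "source" and "text".
    """
    context_lines = [
        f"[{i}] ({chunk['source']}) {chunk['text']}"
        for i, chunk in enumerate(chunks, start=1)
    ]
    return (
        "Answer the question using only the numbered context below. "
        "Cite sources by their number, and say so if the context is insufficient.\n\n"
        "Context:\n" + "\n".join(context_lines) + "\n\n"
        f"Question: {query}\nAnswer:"
    )


retrieved = [{"source": "policy.md", "text": "Refunds are accepted within 30 days."}]
print(build_prompt("What is the refund window?", retrieved))
```

Numbering the chunks and carrying the source name into the prompt is what makes it possible to trace a generated answer back to a document.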

Transform Your Business with AI-Powered Solutions!

Partner with Kanerika for Expert AI implementation Services

What is an LLM?

LLMs, or large language models, are a kind of artificial intelligence that processes and generates human language. They are typically very deep neural networks trained on large amounts of text; GPT-3 and GPT-4 are well-known examples. LLMs can read and write language in a way that resembles human language comprehension, which supports a broad range of applications.

How It Works

LLMs are trained on large datasets containing billions of words. During training, these models learn to recognize patterns in language, such as syntax, context, and meaning. They are built with deep learning methods: a long series of learned computations lets the model predict the next token in a sequence, which in turn allows it to produce coherent sentences or respond to requests in a relevant way.

Because they are trained on such large datasets, LLMs can generate sophisticated, human-like text conditioned on the text that came before.
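Next-word prediction can be illustrated with a model that is vastly simpler than an LLM. The sketch below is a bigram counter, an assumption chosen purely for illustration: it looks only at the single preceding word, whereas an LLM uses a neural network conditioned on a long context. The training objective, predicting what comes next, is the shared idea.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigram(corpus: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the corpus."""
    words = corpus.lower().split()
    following: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    return followers.most_common(1)[0][0] if followers else None


model = train_bigram("the cat sat on the mat the cat slept")
print(predict_next(model, "the"))  # "cat" follows "the" twice, "mat" only once
```

Generating text amounts to repeating this prediction step, feeding each predicted word back in as the new context.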

Key Benefits and Uses

LLMs excel in tasks involving natural language understanding and generation. They are commonly used in chatbots, content creation, and summarization. They can generate high-quality text, simulate conversations, and provide personalized recommendations. LLMs are also utilized in customer support, creative writing, coding assistance, and many other domains where human-like text generation is valuable. Their ability to process and predict language has made them one of the most powerful tools in AI development.

Top 5 LLMs Making Impact Across Industries

1. OpenAI’s GPT-4o

An advanced language model with improved context handling and reasoning capabilities. Offers more precise instruction-following and expanded knowledge base. Supports larger context windows and demonstrates enhanced performance across various applications.

2. Anthropic’s Claude 3.7 Sonnet

A recent Claude model, featuring strong analytical skills and nuanced understanding. Provides advanced multimodal capabilities with improved reasoning and efficiency. Represents a significant leap in conversational AI and complex task resolution.

3. Meta’s Llama 3.3

An open-weight language model with enhanced multilingual support and improved reasoning abilities. Offers robust performance across research and practical domains. Provides increased safety features and more flexible implementation options.

4. Google’s Gemini

Google’s cutting-edge multimodal language model with advanced reasoning capabilities. Demonstrates strong performance in scientific reasoning, cross-linguistic understanding, and complex problem-solving. Represents a significant advancement in AI technology.

5. Mistral’s Mixtral 8x7B

A powerful open-weight mixture-of-experts model known for its efficiency and competitive performance. Mixtral 8x7B dynamically activates only a subset of its expert models during inference, enabling high-quality results with reduced computational cost. It’s gaining traction for its balance of performance, transparency, and open-access deployment across enterprise and research settings.
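The idea of activating only a subset of experts can be sketched with top-k routing. This is a scalar toy, not Mixtral's implementation: the experts here are simple functions rather than feed-forward networks, the gate scores are hard-coded rather than learned, and the choice of eight experts with `k=2` is an assumption based on how the model is commonly described.

```python
import math
from typing import Callable


def top_k_route(gate_logits: list[float], k: int = 2) -> dict[int, float]:
    """Pick the k highest-scoring experts and softmax their logits into mixing weights."""
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    peak = max(gate_logits[i] for i in top)  # subtract the max for numerical stability
    exps = {i: math.exp(gate_logits[i] - peak) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def moe_output(
    x: float, experts: list[Callable[[float], float]], gate_logits: list[float], k: int = 2
) -> float:
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    weights = top_k_route(gate_logits, k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Eight hypothetical experts; expert i simply multiplies its input by i.
experts = [lambda x, scale=i: scale * x for i in range(8)]
logits = [0.1, 2.0, -1.0, 2.0, 0.5, 0.0, -0.3, 1.0]
print(top_k_route(logits))
print(moe_output(2.0, experts, logits))
```

The compute saving comes from the last function: six of the eight experts are skipped entirely for this input, even though all eight contribute to the model's total parameter count.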

RAG vs LLM: Key Differences

| Aspect | RAG (Retrieval-Augmented Generation) | LLM (Large Language Models) |
| --- | --- | --- |
| Definition | Combines generative models with external data retrieval to enhance response quality. | Trained on massive datasets to understand and generate human-like text. |
| Primary Function | Integrates real-time data retrieval into the generative process to provide specific and accurate answers. | Generates text based on patterns learned from data without external information retrieval. |
| Data Usage | Uses external databases, knowledge sources, or APIs to improve response accuracy. | Uses pre-existing data learned during training to generate responses. |
| Flexibility in Responses | Can respond based on up-to-date or specialized information retrieved during the query. | Responses are based on pre-trained data, without real-time information. |
| Accuracy | More accurate in niche or domain-specific queries as it retrieves information from external sources. | Accurate in general language tasks but may struggle with domain-specific information. |
| Performance with Long Contexts | Performs well in tasks requiring specific or detailed context due to the retrieval mechanism. | Can generate text fluently but may lose accuracy or context in complex, long conversations. |
| Task Specialization | Excels in tasks requiring knowledge outside of pre-trained models, such as detailed question answering. | Suitable for tasks like writing, summarizing, and general conversation but not as specific as RAG. |
| External Dependency | Dependent on access to external data sources for improved output. | Operates independently of external data sources after training. |
| Use Case | Best for applications like customer support, legal research, and medical queries, where accuracy is crucial. | Ideal for general NLP tasks, creative writing, and content generation. |
| Response Generation | Generates responses based on both pre-trained data and real-time data retrieval. | Generates responses only from pre-trained data, lacking real-time awareness. |

RAG vs. LLM: A Comprehensive Breakdown of Key Differences

1. Primary Function

- RAG:

Its core function is to improve the relevance and accuracy of generated responses by augmenting the generation process with retrieved content. This is especially valuable when the query pertains to recent events, specialized knowledge, or uncommon topics not covered in the model’s training data.

- LLM:

Primarily focused on generating human-like text based on what it has learned during training. It excels at general understanding and language tasks but cannot reference new or unseen data unless retrained or fine-tuned.

2. Data Usage

- RAG:

Actively uses external sources of information, such as search indexes, APIs, or document repositories. This allows it to deliver factually accurate and updated information in real-time or on-demand.

- LLM:

Relies entirely on the static data it was trained on. If the training data doesn’t include certain information, the model won’t be able to produce accurate responses about it — particularly for recent events or niche domains.

3. Flexibility in Responses

- RAG:

Offers dynamic response generation because it retrieves relevant content at the time of the query. This enables it to adapt to changes in information or user needs, offering more flexibility in domains like news, finance, healthcare, etc.

- LLM:

Has limited flexibility, as it can only generate responses based on what it already knows. While it’s impressive in constructing fluent and logical text, it can’t incorporate new knowledge unless retrained.

4. Accuracy

- RAG:

Generally more accurate on domain-specific or factual queries. Because it pulls data from its connected sources at query time, it can base the answer on actual references, which reduces hallucinations and incorrect facts.

- LLM:

Performs well in general use cases but may hallucinate or provide outdated/incorrect information in areas where it lacks data coverage or contextual depth.

5. Performance with Long Contexts

- RAG:

Handles long and detailed queries better because it can retrieve context-relevant snippets to base its answers on. This is beneficial in tasks like legal document analysis or research support.

- LLM:

While it can generate long-form responses, maintaining accuracy, coherence, and relevance over long spans of text or conversations can be a challenge, especially without retrieval support.

6. Task Specialization

- RAG:

Ideal for tasks requiring up-to-date or specific information, such as answering questions about newly published research, legal documents, or medical guidelines. Its ability to tap into live data gives it an edge in these areas.

- LLM:

Best suited for general-purpose NLP tasks, such as summarization, paraphrasing, translation, story writing, or chat-based assistance, where real-time data is less critical.

7. External Dependency

- RAG:

Heavily reliant on access to external data sources, such as search engines, databases, or custom knowledge bases. Without access, its performance drops closer to that of a standalone LLM.

- LLM:

Self-contained after training. It doesn’t require any external data connection and can function independently, which is useful in privacy-sensitive or offline environments.

8. Use Case

RAG:

Suited for high-accuracy, domain-specific applications like:

- Customer support with tailored or technical knowledge

- Legal research where citation and detail matter

- Medical applications where up-to-date and reliable data is crucial

LLM:

Great for creative and general tasks like:

- Content creation (blogs, scripts, stories)

- Conversational AI

- General summarization or classification

9. Response Generation

- RAG:

Responses are generated using a fusion of retrieved content and generative modeling, making them more grounded in real-world data. It essentially expands the knowledge horizon of the base LLM.

- LLM:

Generates responses solely based on internalized training data, which can lead to creative but sometimes less factual outputs.

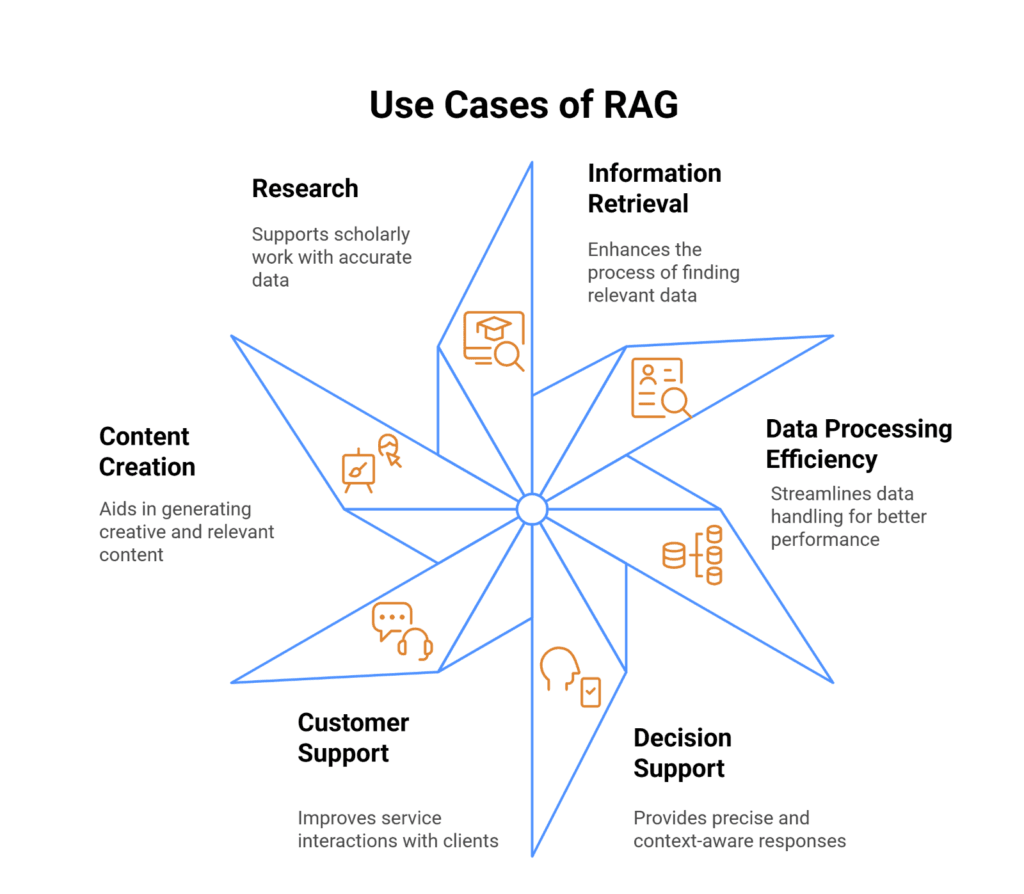

Use Cases for RAG

Retrieval-Augmented Generation (RAG) excels in scenarios demanding precise, context-specific information across various domains.

1. Enterprise Knowledge Management

Enables organizations to create intelligent knowledge bases that provide accurate, contextual responses using internal documentation. Unlike standalone LLMs, RAG systems can reference the latest company-specific documents, ensuring responses align with current organizational policies.

2. Customer Support

Benefits from RAG by retrieving specific product documentation, troubleshooting guides, and previous support interactions. This approach reduces resolution times while maintaining high accuracy across complex product ecosystems.

3. Legal and Compliance

Legal and compliance teams use RAG to navigate regulatory frameworks. By connecting generative models to databases of laws and case precedents, professionals receive nuanced guidance with proper citations and references.

4. Healthcare Applications

Utilizes RAG to maintain medical accuracy. Clinical decision support systems can retrieve information from medical literature and guidelines, assisting healthcare providers with diagnostic recommendations while ensuring traceability to authoritative sources.

5. Research and Development

R&D teams use RAG to stay current with scientific literature, enabling researchers to query the latest findings with direct citations to relevant papers.

6. Educational Systems

Use RAG to create adaptive learning experiences, drawing from textbooks and supplementary materials to provide students with accurate, tailored information.

Partner with Kanerika to Modernize Your Enterprise Operations with High-Impact Data & AI Solutions

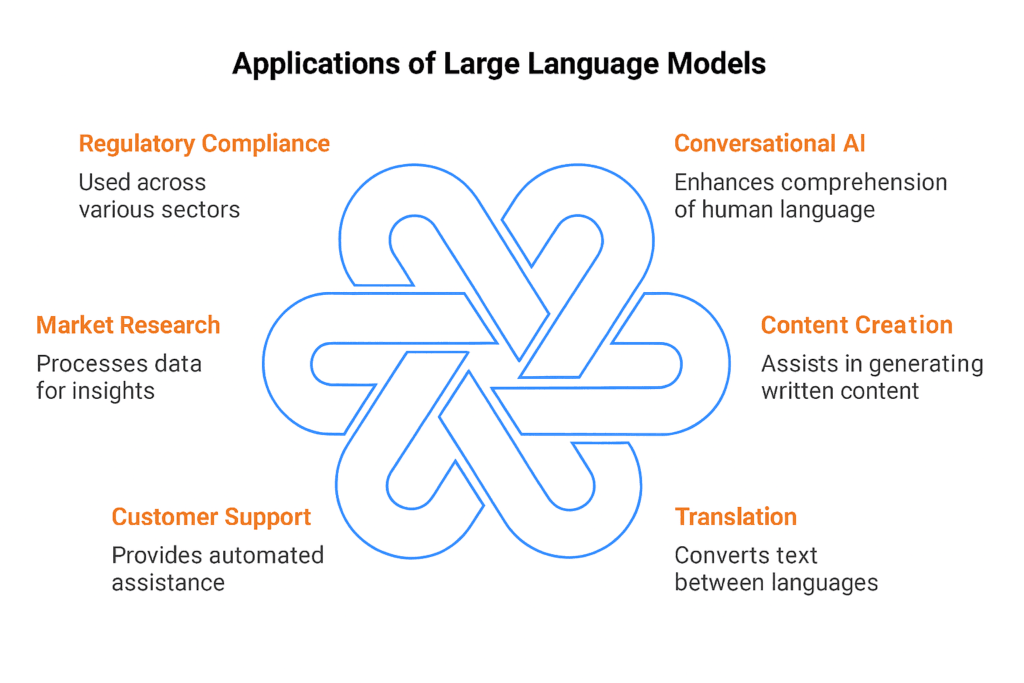

Use Cases for Large Language Models (LLM)

Large Language Models (LLMs) transfer well across domains, changing how organizations handle communication and analysis tasks.

1. Content Creation

Allows marketing teams to generate blog posts, social media content, product descriptions, and creative writing. LLMs quickly produce diverse content styles, scaling production while maintaining contextual relevance.

2. Code Generation

Changes how software is built and written. LLMs provide predictive line and block completions, generate boilerplate code, explain code across programming languages, draft documentation, and help fix bugs. Tools such as GitHub Copilot highlight the promise of LLMs in improving the developer experience.

3. Customer Interaction

AI-powered chatbots and virtual assistants are changing customer interaction. By taking context into account, LLMs support more conversational exchanges, handle customer queries, and offer relevant suggestions.

4. Language Translation

LLMs can also aid in language translation, enabling more nuanced, context-sensitive translations that consider cultural and linguistic subtleties for many language pairs.

5. Educational Support

LLMs can also generate personalized learning materials, explain difficult concepts, offer interactive tutoring, and develop adaptive learning experiences.

6. Data Analysis

Uses LLMs to convert complex data into informative narrative reports, extracting valuable information and translating technical information into language that various audiences can understand.

7. Creative Ideation

Enables professionals to leverage LLMs as brainstorming partners, generating original ideas across design, marketing, product development, and more.

RAG vs LLM: Choosing the Right Approach for Your Business

Selecting between Retrieval-Augmented Generation (RAG) and Large Language Models (LLM) requires a strategic assessment of your organization’s specific needs, technological infrastructure, and business objectives.

When to Choose RAG?

Retrieval-Augmented Generation becomes the preferred choice when your business prioritizes:

1. Accuracy and Credibility

RAG systems excel in environments where factual precision is critical. By retrieving information from specific, curated databases, RAG grounds responses in verified sources. This makes it well suited to industries like legal, healthcare, and financial services, where misinformation can have serious consequences.

2. Domain-Specific Knowledge

Organizations with extensive internal documentation or specialized knowledge bases benefit immensely from RAG. The system can draw directly on your organization’s own information, providing context-aware responses that reflect your specific operational nuances.

3. Compliance and Traceability

Regulated industries require not just accurate information, but also the ability to trace the origin of that information. RAG’s capability to cite sources makes it invaluable for compliance-driven environments where every recommendation must be substantiated.

4. Cost-Effective Customization

Instead of retraining large language models, RAG allows organizations to leverage existing knowledge repositories, making it a more economical approach to creating intelligent information systems.

When to Choose LLM?

Large Language Models become the go-to solution when your business needs:

1. Creative Content Generation

LLMs shine in scenarios requiring original, creative content. Marketing teams, content creators, and design professionals can leverage these models to generate diverse writing styles, brainstorm ideas, and produce engaging narratives quickly.

2. Broad Language Tasks

When you need versatile language processing across multiple domains without deep specialization, LLMs provide remarkable flexibility. They can handle translation, summarization, and communication tasks with impressive breadth.

3. Rapid Prototyping

Startups and innovation-driven organizations can use LLMs to quickly prototype conversational interfaces, generate initial product descriptions, or explore conceptual ideas without significant upfront investment.

4. General-Purpose Communication

Customer service chatbots, interactive assistants, and general communication tools benefit from LLMs’ ability to understand and generate human-like text across various contexts.

Hybrid Approach: Bridging the Gap

Many forward-thinking organizations are exploring hybrid solutions that combine the precision of retrieval with the generative capabilities of LLMs. This approach allows businesses to:

- Maintain high accuracy through retrieval

- Leverage the creative potential of generative models

- Create more intelligent, context-aware systems

Decision Framework

Your choice should depend on:

- Specific use case requirements

- Accuracy needs

- Available data infrastructure

- Budget constraints

- Complexity of domain knowledge

The most successful implementation will align technological capabilities with your unique business strategy, operational needs, and long-term objectives.

RAG vs LLM: Implementation Considerations

1. Technical Infrastructure Requirements

RAG systems require more complex infrastructure compared to traditional LLMs. They need specialized vector databases, powerful embedding models, and robust retrieval mechanisms. Organizations must invest in high-performance computing resources capable of semantic search and efficient information retrieval.

2. Data Preparation and Management

RAG implementation involves extensive data preprocessing, including document chunking, cleaning, and embedding generation. Each document chunk must be transformed into a semantically meaningful vector representation. LLMs used on their own typically rely on pre-trained models with less intensive ongoing data management.

3. Integration with Existing Systems

RAG introduces more complex integration challenges, requiring seamless connections between document repositories, embedding services, vector databases, and language models. Organizations need robust API frameworks and architectural design to ensure smooth data flow and minimal latency.

4. Evaluation Metrics and Performance Monitoring

RAG performance evaluation is more nuanced, measuring retrieval accuracy, chunk relevance, and response coherence. Metrics must capture vector similarity, retrieval precision, and generated response quality. LLM evaluation focuses more on general language understanding and task completion.
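Retrieval precision and recall are usually computed against a hand-labeled set of relevant documents. The sketch below shows precision@k and recall@k, two standard retrieval metrics; the document identifiers and relevance labels are invented for illustration, and response-quality metrics would need a separate evaluation.

```python
def precision_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the top-k retrieved documents that are actually relevant."""
    top = retrieved[:k]
    if not top:
        return 0.0
    return sum(1 for doc_id in top if doc_id in relevant) / len(top)


def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of all relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    return sum(1 for doc_id in retrieved[:k] if doc_id in relevant) / len(relevant)


# Hypothetical ranking returned by a retriever, and the labeled relevant set.
retrieved_ids = ["d3", "d1", "d7", "d2"]
relevant_ids = {"d1", "d2", "d9"}

print(precision_at_k(retrieved_ids, relevant_ids, k=2))  # 1 of the top 2 is relevant
print(recall_at_k(retrieved_ids, relevant_ids, k=4))     # 2 of 3 relevant docs found
```

Tracking both matters: a retriever can score high precision by returning very few chunks while still missing most of the material the generator needs.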

Key Comparative Insights

- RAG provides more contextually grounded responses

- LLMs offer broader generative capabilities

- RAG requires more complex infrastructure

- Both approaches need continuous refinement

Future Trends in RAG and LLM Technologies

The future of artificial intelligence is converging on more integrated systems in which Retrieval-Augmented Generation (RAG) and Large Language Models (LLMs) complement each other dynamically. Emerging advances are driving sophisticated hybrid models capable of more accurate and contextually intelligent information processing.

Key Emerging Trends

1. Enhanced Contextual Intelligence

Future developments will focus on improving contextual reasoning capabilities. RAG systems will evolve to provide more nuanced, real-time information retrieval, while LLMs will develop advanced reasoning mechanisms to understand complex, multi-dimensional contexts more effectively.

2. Multimodal Capabilities

Researchers are expanding these technologies beyond text-based interactions, developing RAG and LLM systems that can integrate text, image, audio, and potentially video data. This multimodal approach will enable more comprehensive and intuitive AI interactions across diverse domains.

3. Ethical and Transparent AI

Significant research is directed towards developing more transparent, accountable AI systems. Both RAG and LLM technologies will incorporate robust mechanisms for explaining reasoning, reducing bias, and ensuring more reliable AI-generated outputs.

4. Computational Efficiency

Future trends emphasize developing energy-efficient, computationally lightweight models through neural compression, optimized embedding methods, and advanced retrieval algorithms that maintain high-performance capabilities.

Transforming Businesses with Kanerika’s Data-Driven LLM Solutions

Kanerika leverages cutting-edge Large Language Models (LLMs) to tackle complex business challenges with remarkable accuracy. Our AI solutions revolutionize key areas such as demand forecasting, vendor evaluation, and cost optimization by delivering actionable insights and managing context-rich, intricate tasks. Designed to enhance operational efficiency, these models automate repetitive processes and empower businesses with intelligent, data-driven decision-making.

Built with scalability and reliability in mind, our LLM-powered solutions seamlessly adapt to evolving business needs. Whether it’s reducing costs, optimizing supply chains, or improving strategic decisions, Kanerika’s AI models provide impactful outcomes tailored to unique challenges. By enabling businesses to achieve sustainable growth while maintaining cost-effectiveness, we help unlock unparalleled levels of performance and efficiency.

Frequently Asked Questions

What is the primary difference between RAG and LLM?

RAG combines the strengths of generative models and information retrieval systems. It enhances the output of generative models by integrating real-time data retrieval to improve accuracy and relevance. LLMs, like GPT-4, are pre-trained models that generate text based on vast datasets without integrating real-time data retrieval, making them more general-purpose in language tasks.

When should I use RAG instead of LLM?

RAG is ideal for situations that require highly accurate and context-sensitive answers based on specific or real-time information, such as search engines or customer support systems. LLMs are more suited for generating creative, human-like text across a variety of tasks like content creation or summarization.

Which model is better for customer service applications?

RAG is generally more effective for customer service, as it allows AI agents to pull in the most relevant information from a variety of data sources in real time, offering precise, fact-based responses. LLMs, though good for general conversational AI, may struggle without real-time data access or external context.

Can LLMs integrate external knowledge like RAG?

While LLMs are incredibly capable of understanding and generating human-like text, they don’t typically integrate real-time external knowledge. RAG models excel in this aspect by retrieving up-to-date information from external sources, allowing for more specific and context-driven outputs.

Are RAG models more computationally expensive than LLMs?

Generally, RAG models can be more resource-intensive than LLMs because they involve both retrieving data and generating text. This dual process requires extra computing power, especially if the system needs to query large databases or search engines in real-time.

What industries can benefit the most from RAG and LLM?

RAG is well-suited for industries that require accurate, up-to-date information, such as finance, healthcare, and legal services. LLMs are widely used across industries for tasks like content creation, summarization, translation, and general conversational AI in sectors like marketing, media, and education.

Can I use RAG and LLM together?

Yes, many modern applications combine both RAG and LLM capabilities. RAG can be used to retrieve the necessary data, and the LLM can then generate human-like responses based on that data, enabling highly accurate and context-rich communication. This combination is increasingly being adopted in enterprise AI applications.