I asked an AI tool for side effects of a new medication, and it listed symptoms and studies that were completely made up. This is known as AI hallucination—when a model invents facts that sound accurate but have no real basis in data or evidence. This is exactly what Retrieval-Augmented Generation (RAG) is built to solve.

RAG enhances language models by connecting them to external knowledge sources in real time. Instead of relying solely on what a model was trained on, RAG tools retrieve relevant, up-to-date information and then generate responses—resulting in answers that are far more accurate, contextual, and reliable.

By some industry estimates, over 60% of enterprise AI deployments in 2025 are expected to incorporate RAG or similar grounding techniques, a clear sign of the shift toward trusted, retrieval-based AI outputs.

As businesses demand more precise and explainable AI, RAG isn’t just a trend; it’s becoming a foundational layer for enterprise-grade applications. Whether for customer service, research, or internal tools, RAG helps AI get it right and show where its answers come from.

What is RAG?

Retrieval-Augmented Generation (RAG) is an AI framework designed to make large language models (LLMs) more accurate, reliable, and context-aware. Instead of relying solely on pre-trained knowledge, RAG enables LLMs to “look up” relevant, real-time information from external sources—such as documents, databases, or knowledge bases—before generating a response.

To make this possible, RAG tools come into play. These are specialized frameworks and platforms that implement the RAG architecture, connecting language models to retrieval systems and knowledge stores. They manage the entire pipeline—retrieval, augmentation, and generation—allowing organizations to build AI systems that deliver fact-based, contextual, and verifiable answers.

RAG Architecture: How It Works

RAG systems are made up of three key components:

1. Retriever

This part searches across predefined data sources (e.g., PDFs, wikis, internal databases) to identify the most relevant content based on the user’s query. It converts queries and documents into vector embeddings for semantic search.

2. Augmentation

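Combines the retrieved content with the user’s query, usually inside a prompt template that tells the model how to use it. Behind the retriever, a knowledge store (typically a vector database) keeps both the original content and its vectorized form, so the system can search efficiently by meaning and still pass the exact source text to the model.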

3. Generator

A large language model receives the retrieved context and uses it to generate a coherent, factually grounded response—combining learned patterns with real data.

These components work together in a pipeline:

Query → Retrieval → Augmentation → Generation → Response
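
To make the flow concrete, here is a minimal sketch in plain Python. It is not the API of any RAG framework: the word-overlap `retrieve` function is a deliberately simple stand-in for vector search, and `fake_llm` is a hypothetical placeholder where a real system would call a language model.

```python
from typing import Callable, List

def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Retriever: rank documents by how many words they share with the query.
    (A toy stand-in for semantic vector search.)"""
    query_words = set(query.lower().split())
    ranked = sorted(docs, key=lambda d: len(query_words & set(d.lower().split())), reverse=True)
    return ranked[:k]

def augment(query: str, passages: List[str]) -> str:
    """Augmentation: combine the retrieved passages and the query into a single prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

def rag_answer(query: str, docs: List[str], generate: Callable[[str], str]) -> str:
    """Query -> Retrieval -> Augmentation -> Generation -> Response."""
    passages = retrieve(query, docs)
    prompt = augment(query, passages)
    return generate(prompt)

def fake_llm(prompt: str) -> str:
    """Hypothetical placeholder for a real LLM call; it just echoes the prompt."""
    return prompt

docs = [
    "The refund window is 30 days from delivery.",
    "Support is available Monday to Friday.",
    "Approved refund requests are paid to the original payment method.",
]
print(rag_answer("how long is the refund window", docs, fake_llm))
```

Swapping the toy retriever for a vector database and `fake_llm` for a real model call leaves the three-stage structure unchanged.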

How RAG Differs from Standard LLMs

Traditional LLMs generate answers based on patterns from their training data, which can lead to outdated or incorrect outputs. In contrast, RAG tools produce responses grounded in actual, retrievable data. This difference substantially reduces fabricated or “hallucinated” information and makes it possible to cite sources, which is why RAG tools are especially valuable for applications requiring factual accuracy and verifiability. RAG tools help by:

- Reducing hallucinations (made-up facts)

- Allowing for source citation

- Making AI outputs more trustworthy in high-stakes domains (e.g., legal, healthcare, finance)

How Do RAG Tools Work?

Retrieval-Augmented Generation (RAG) systems enhance AI responses by incorporating relevant information from external knowledge sources. Here’s how they work:

- Question Processing: When a user asks a question, the system converts it into a mathematical representation (embedding) that captures its meaning.

- Knowledge Retrieval: The system searches a vector database (like FAISS, Pinecone, or Chroma) for content chunks with similar meaning. These databases store document fragments as embeddings, allowing for semantic matching rather than just keyword matching.

- Context Selection: The most relevant chunks are selected and combined into a block of context that gives the AI background information; together with the question, this context must fit within the model’s context window.

- Response Generation: The AI model receives both the original question and the retrieved context, using this information to generate an informed response.
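
The retrieval step can be sketched with a tiny in-memory vector store. This is a toy built on assumptions, not how FAISS, Pinecone, or Chroma work internally: the hashed bag-of-words `embed` function only matches shared words (so it will not recognize synonyms the way a trained embedding model does), and the search compares the query against every stored vector instead of using an approximate index.

```python
import hashlib
import math
from typing import List, Tuple

DIM = 64  # toy size; real embedding models produce hundreds or thousands of dimensions

def embed(text: str) -> List[float]:
    """Toy embedding: hash each word into one of DIM buckets, then L2-normalize.
    A real system would call an embedding model here instead."""
    vec = [0.0] * DIM
    for word in text.lower().split():
        bucket = int(hashlib.sha256(word.encode("utf-8")).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

class InMemoryVectorStore:
    """Keeps (chunk, embedding) pairs and returns the chunks closest to a query."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, List[float]]] = []

    def add(self, chunk: str) -> None:
        self.items.append((chunk, embed(chunk)))

    def search(self, query: str, k: int = 3) -> List[Tuple[float, str]]:
        q = embed(query)
        # Vectors have unit length, so the dot product equals cosine similarity.
        scored = [(sum(a * b for a, b in zip(q, v)), chunk) for chunk, v in self.items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]

store = InMemoryVectorStore()
for chunk in [
    "Refunds are processed within 30 days.",
    "Our office is closed on public holidays.",
    "Refund requests need an order number.",
]:
    store.add(chunk)
print(store.search("how are refunds processed", k=2))
```

Because every vector is normalized to unit length, ranking by dot product gives the same order as ranking by cosine similarity, which is why normalizing embeddings before indexing is a common practice.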

RAG systems are particularly valuable because of token limits in AI models. Since models can only process a finite amount of text at once, RAG allows them to focus on relevant information rather than trying to memorize entire knowledge bases. The chunking process – breaking documents into smaller, digestible pieces – is crucial for efficient storage and retrieval.
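
A simple chunker can be sketched as a sliding window over words. Counting words rather than model tokens, and the default sizes of 200 words with a 40-word overlap, are illustrative assumptions rather than recommended values; the overlap keeps some surrounding context when a passage falls across a chunk boundary.

```python
from typing import List

def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into chunks of at most `chunk_size` words.
    Consecutive chunks share `overlap` words, so text near a boundary
    keeps some of its surrounding context."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks

text = " ".join(f"w{i}" for i in range(10))
print(chunk_words(text, chunk_size=4, overlap=1))
# → ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```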

Top 7 Popular RAG Tools in 2026

1. LangChain

LangChain provides a comprehensive framework for building LLM applications with a focus on composability. It offers pre-built components for document loading, text splitting, embeddings, vector stores, and prompt management. Its “chains” architecture allows developers to connect multiple LLM operations in sequence for complex workflows.
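
The idea behind chains, where each step’s output becomes the next step’s input, can be illustrated without LangChain itself. The `Step` class below is a hypothetical pure-Python sketch; LangChain’s own expression language also composes components with the `|` operator, but this toy is not LangChain’s API and leaves out features such as streaming and batching.

```python
from typing import Any, Callable

class Step:
    """Wraps a function so steps can be chained with `|`: the output of one feeds the next."""

    def __init__(self, fn: Callable[[Any], Any]) -> None:
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        return Step(lambda x: other.fn(self.fn(x)))

    def invoke(self, x: Any) -> Any:
        return self.fn(x)

# Hypothetical steps; a real chain would use a retriever, a prompt template and an LLM call.
retrieve_step = Step(lambda q: {"question": q, "context": "Paris is the capital of France."})
prompt_step = Step(lambda d: f"Context: {d['context']}\nQuestion: {d['question']}")
llm_step = Step(lambda prompt: f"[model output for a {len(prompt)}-character prompt]")

chain = retrieve_step | prompt_step | llm_step
print(chain.invoke("What is the capital of France?"))
```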

Pros:

- Offers a comprehensive ecosystem for building RAG pipelines with a wide range of pre-built components.

- Ideal for chaining multiple LLM operations together efficiently.

- Strong integration with various vector databases, enabling flexible and scalable retrieval.

Cons:

- Can be complex and overwhelming for beginners due to its modular design.

- Sometimes criticized for abstraction overhead, which may impact performance or transparency.

- Rapid development pace can result in occasional gaps or inconsistencies in documentation.

2. LlamaIndex

LlamaIndex specializes in data ingestion and retrieval, providing tools to connect LLMs with personal or enterprise data. It offers data connectors for various formats (PDFs, APIs, databases) and query interfaces that abstract away the complexity of RAG implementation details.

Pros:

- Purpose-built for data ingestion and connectivity

- Easier learning curve compared to LangChain

- Excels at handling local files and structured data

- Offers a robust ecosystem of data connectors

Cons:

- Limited feature set for building complex workflows or chains

- Smaller community and ecosystem compared to LangChain

- Fewer integrations with advanced or specialized AI tools

3. Haystack

Haystack is an end-to-end framework for building search systems with deep learning models. It emphasizes flexible pipelines where document stores, retrievers, and readers/generators can be mixed and matched for different use cases, with a particular focus on search quality.

Pros:

- Emphasizes a search-first approach with a strong focus on retrieval

- Modular pipeline architecture enables scalable and maintainable workflows

- Well-suited for document-heavy applications such as RAG and QA systems

- Offers robust evaluation tools for performance and quality monitoring

Cons:

- Less flexible when it comes to general LLM orchestration

- Steeper learning curve for beginners or teams without prior ML/IR experience

- Smaller ecosystem and community support compared to more established frameworks

4. Ragas

Ragas is a framework specifically designed to evaluate RAG systems across multiple dimensions. It provides metrics for context relevance, answer faithfulness to retrieved context, and overall answer quality, helping developers identify specific weaknesses in their RAG implementations.
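
To show the shape of a faithfulness metric, here is a crude sketch. Roughly speaking, Ragas’s faithfulness score is the share of claims in an answer that the retrieved context supports, with an LLM extracting and checking those claims. The hypothetical `naive_faithfulness` function below swaps that LLM judgment for simple word overlap per sentence, so it only illustrates the idea and is not Ragas’s implementation.

```python
import re
from typing import List, Set

def _words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def naive_faithfulness(answer: str, contexts: List[str], threshold: float = 0.6) -> float:
    """Share of answer sentences whose words mostly appear in the retrieved context.
    Word overlap is a crude stand-in for the LLM-based claim checking Ragas performs."""
    context_words = set().union(*(_words(c) for c in contexts))
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    if not sentences:
        return 0.0
    supported = 0
    for sentence in sentences:
        words = _words(sentence)
        if words and len(words & context_words) / len(words) >= threshold:
            supported += 1
    return supported / len(sentences)

context = ["The drug was approved in 2021 and is taken once daily."]
answer = "The drug is taken once daily. It causes hair loss in most patients."
print(naive_faithfulness(answer, context))  # → 0.5
```

The second sentence is counted as unsupported because nothing in the context backs it up, which is the kind of invented claim a faithfulness metric is meant to catch.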

Pros:

- Specifically designed for evaluating the quality of RAG systems.

- Offers standardized metrics for assessing retrieval accuracy, answer relevance, and faithfulness.

- Integrates easily with existing machine learning or NLP pipelines.

Cons:

- Limited to evaluation; does not support the development or deployment of RAG systems.

- Requires customization to effectively evaluate domain-specific applications.

- The evaluation framework and metrics are still evolving and may lack maturity for some use cases.

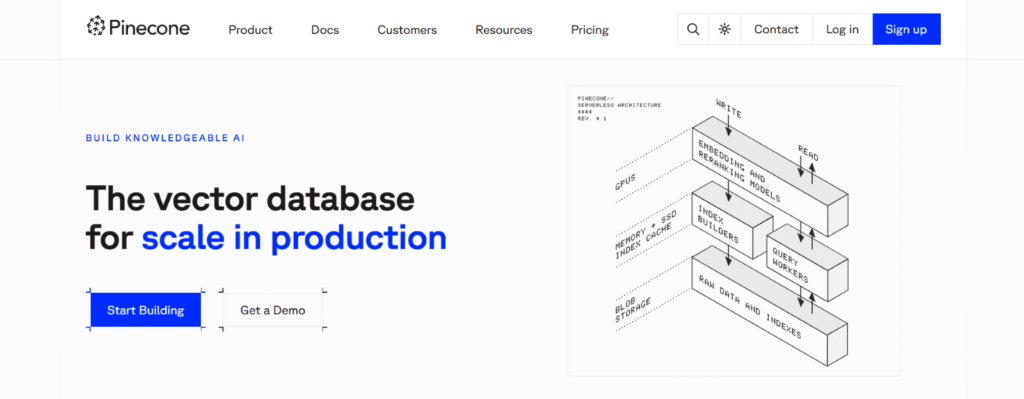

5. Pinecone

Pinecone is a fully managed vector database optimized for machine learning applications. It handles vector storage, indexing, and similarity search with minimal operational overhead, allowing developers to focus on application logic rather than infrastructure.

Pros:

- Fully managed solution with excellent scalability

- Easy-to-use API integration

- Strong performance, even at enterprise scale

- Robust hybrid search capabilities (combining dense and sparse retrieval)

Cons:

- Higher operational costs at scale compared to self-hosted alternatives

- Limited flexibility for customizing or deploying specialized retrieval models
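
Hybrid search needs some way to merge dense (semantic) and sparse (keyword) results. One widely used, rank-based method is reciprocal rank fusion (RRF), sketched below in plain Python. Managed services such as Pinecone combine the two signals for you and may use a different weighting scheme, so treat this as an illustration of the concept; the document IDs are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List

def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge several ranked lists of document IDs (e.g. one from dense vector search,
    one from sparse keyword search). Each document earns 1 / (k + rank) from every
    list it appears in; k=60 is the constant commonly used with RRF."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)

dense_hits = ["doc3", "doc1", "doc7"]   # hypothetical semantic-search results
sparse_hits = ["doc1", "doc9", "doc3"]  # hypothetical keyword-search results
print(reciprocal_rank_fusion([dense_hits, sparse_hits]))  # → ['doc1', 'doc3', 'doc9', 'doc7']
```

Documents that rank well in both lists (here `doc1` and `doc3`) rise to the top, without having to put dense and sparse scores on the same scale.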

6. Weaviate

Weaviate is a vector search engine with object storage capabilities. It offers GraphQL for querying, automatic vectorization of data objects, and modules for specialized tasks like image search, making it highly versatile for multimodal applications.

Pros:

- Open-source with managed deployment options

- Supports GraphQL-based queries for flexible data access

- Offers multimodal capabilities (text, image, etc.)

- Includes modular architecture for specialized retrieval tasks

Cons:

- More complex to set up compared to tools like Pinecone

- Steeper learning curve for new users

- Performance can vary depending on configuration and optimization

7. Qdrant

Qdrant is a vector similarity search engine focused on high-performance filtering and exact matching combined with vector search. It offers extensive filtering capabilities, making it ideal for applications requiring precise control over search results.
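
Filtered vector search can be sketched as: keep only the points whose metadata matches the filter, then rank the survivors by similarity. This plain-Python version is hypothetical and is not Qdrant’s client API; the `must` parameter loosely echoes the name of one of Qdrant’s filter clauses, and Qdrant evaluates filters during its indexed search rather than scanning every point as this toy does.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[str, List[float], Dict[str, str]]  # (id, vector, metadata payload)

def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na, nb = math.sqrt(sum(x * x for x in a)), math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def filtered_search(points: List[Point], query: List[float], must: Dict[str, str], k: int = 2) -> List[str]:
    """Keep only points whose payload matches every key/value in `must`, then rank
    them by cosine similarity, so non-matching points can never appear in results."""
    candidates = [p for p in points if all(p[2].get(key) == val for key, val in must.items())]
    candidates.sort(key=lambda p: cosine(p[1], query), reverse=True)
    return [p[0] for p in candidates[:k]]

points: List[Point] = [
    ("policy-eu", [0.9, 0.1], {"region": "EU", "year": "2024"}),
    ("policy-us", [0.95, 0.05], {"region": "US", "year": "2024"}),
    ("memo-eu", [0.2, 0.8], {"region": "EU", "year": "2023"}),
]
print(filtered_search(points, query=[1.0, 0.0], must={"region": "EU"}))  # → ['policy-eu', 'memo-eu']
```

With no filter, `policy-us` would rank first; restricting to `region: EU` guarantees that only EU documents come back.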

Pros:

- Offers excellent filtering capabilities that allow for precise and efficient data retrieval.

- Backed by a strong and active community that provides ongoing support and shared best practices.

- Delivers a good balance of performance and cost, making it a practical choice for many use cases.

- Supports a wide range of similarity search methods, ideal for advanced search and recommendation systems.

Cons:

- It has a smaller ecosystem compared to more established competitors, limiting third-party integrations.

- It provides fewer enterprise-grade tools and features, which may be a drawback for large-scale deployments.

- Requires more hands-on setup and management, demanding technical expertise for optimization and maintenance.

Each tool addresses different RAG aspects, from orchestration to storage to evaluation, with the best implementations often combining several specialized components.

Top Use Cases for RAG Tools

1. Internal Company Knowledge Management

RAG systems excel at transforming static company documentation into interactive resources. These tools can index content across Slack, Notion, Confluence, SharePoint, and internal wikis, enabling employees to query years of institutional knowledge through natural language.

Unlike traditional search, RAG-powered tools understand context and relationships between documents, helping teams quickly find specific information without sifting through multiple resources.

Use Case: Glean AI enables enterprises like Grammarly and Okta to unify internal knowledge and deliver contextual search experiences across apps and content repositories.

2. Domain-Specific Expert Assistants

In specialized fields like medicine, law, and finance, RAG tools create assistants that combine LLM capabilities with authoritative domain knowledge. Medical RAG systems can draw from clinical guidelines and research papers to assist healthcare providers with treatment recommendations.

Legal assistants can reference case law and statutes to provide guidance on complex legal questions. These systems ensure responses align with accepted domain expertise rather than generic LLM knowledge.

Use Case: Syntegra uses RAG in healthcare to generate HIPAA-compliant, synthetic patient data grounded in real medical records and guidelines.

3. Dynamic Research Tools

RAG-powered research assistants connect to live data sources like academic databases, web search APIs, and real-time information streams. These tools help researchers track developments across multiple sources, synthesize findings from diverse documents, and identify patterns or connections that might otherwise be missed when working with large volumes of information.

Use Case: Consensus is a RAG-based search engine that helps researchers extract answers and citations from scientific literature in real time.

4. Personalized Learning Platforms

E-learning platforms use RAG to create adaptive educational experiences by connecting LLMs to course materials, textbooks, and lecture content. Students can ask questions about specific lessons, receive explanations tailored to course terminology, and explore concepts through a dialogue format that references assigned materials.

Use Case: Ivy.ai integrates RAG with course materials to power AI chatbots for universities, offering 24/7 student support and contextual learning help.

5. Regulatory and Compliance Support

Organizations use RAG systems to help employees navigate complex regulatory environments by connecting LLMs to internal policies, regulatory documents, and compliance guidelines. These tools help interpret requirements, suggest compliant approaches to business challenges, and reduce the risk of unintentional violations by making complicated regulatory frameworks more accessible.

Use Case: Thomson Reuters uses RAG to power CoCounsel, an AI assistant that helps legal teams quickly retrieve relevant compliance and regulatory data from massive legal databases.

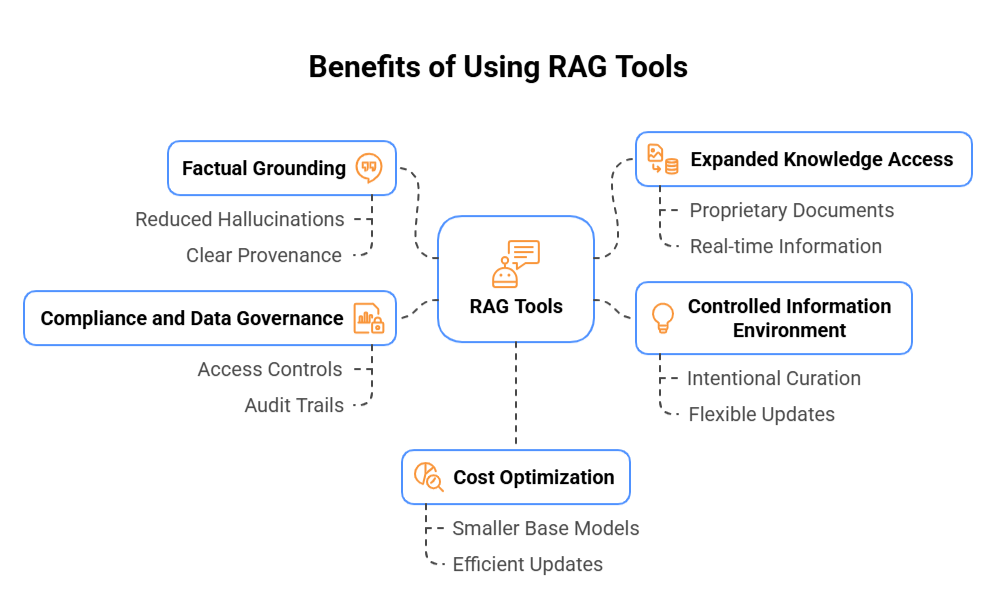

Benefits of Using RAG Tools

1. Factual Grounding

RAG systems substantially reduce hallucinations by anchoring AI responses to specific retrieved content. Rather than generating answers purely from statistical patterns learned during training, these systems first retrieve relevant documents and then generate responses based on that evidence. The result is more factually accurate output with clear provenance, since each response can be traced back to the documents retrieved for it.

2. Expanded Knowledge Access

RAG enables AI systems to access information beyond their training data, including proprietary documents, recent developments, and specialized knowledge. Organizations can incorporate internal documents, databases, and real-time information sources that weren’t part of the LLM’s original training. This capability keeps responses current and relevant without requiring frequent model retraining.

3. Controlled Information Environment

With RAG tools, organizations maintain precise control over the knowledge available to their AI systems. This allows for intentional curation of information sources, ensuring AI responses align with organizational standards and expertise. Teams can update knowledge bases independently from the underlying AI model, providing flexibility as information evolves.

4. Compliance and Data Governance

RAG architectures help organizations maintain regulatory compliance by separating sensitive data from the AI model itself. Rather than fine-tuning models on confidential information, RAG systems keep this data in separate, secured knowledge bases that can implement appropriate access controls, audit trails, and data handling policies while still utilizing this information for responses.

5. Cost Optimization

RAG approaches can significantly reduce operational costs compared to continuous model fine-tuning. By externalizing knowledge, organizations can deploy smaller, more efficient base models while still providing domain-specific expertise. This architecture limits the computational resources needed for both deployment and updates, as only the knowledge base requires modification when information changes rather than retraining large models.

Challenges and Things to Watch Out For

1. Document Quality Issues

The fundamental principle of “garbage in, garbage out” strongly applies to RAG systems. Low-quality documents, outdated information, or incorrect content will lead to flawed responses. Inconsistent formatting, duplicate content, and poorly structured documents can significantly degrade retrieval performance. Implementing quality control processes for knowledge bases is essential before scaling your RAG implementation.

2. Chunking Strategy Complexity

Finding the optimal chunking approach presents a delicate balance. Chunks that are too large burden the context window and dilute relevance signals, causing the LLM to miss critical information. Conversely, overly small chunks lose contextual relationships and coherence. Different document types often require customized chunking strategies rather than one-size-fits-all approaches.
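
For prose such as policies or articles, one customized strategy is to pack whole paragraphs into chunks instead of cutting at a fixed size. The sketch below is a hypothetical illustration: paragraphs are assumed to be separated by blank lines, sizes are measured in characters, and the 1,000-character default is arbitrary. Tables, code, or transcripts would need their own rules.

```python
from typing import List

def chunk_by_paragraph(text: str, max_chars: int = 1000) -> List[str]:
    """Pack whole paragraphs (separated by blank lines) into chunks of up to max_chars.
    Paragraphs are never split, so one longer than max_chars becomes its own
    oversized chunk; a fixed-size splitter could be applied to it as a fallback."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: List[str] = []
    current = ""
    for para in paragraphs:
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars or not current:
            current = candidate
        else:
            chunks.append(current)
            current = para
    if current:
        chunks.append(current)
    return chunks

print(chunk_by_paragraph("aaa\n\nbbb\n\nccc", max_chars=8))  # → ['aaa\n\nbbb', 'ccc']
```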

3. Extensive Tuning Requirements

RAG systems demand substantial tuning across multiple components. Embedding models must be chosen to suit your specific domain; retrievers require parameter optimization to balance recall and precision; prompt templates need refinement so the model properly uses the retrieved context. This multi-faceted optimization process can be time-consuming and requires systematic testing.

4. Performance Bottlenecks

Without proper caching and optimization, RAG systems can introduce significant latency compared to standalone LLMs. Vector searches, document processing, and managing large context windows all add computational overhead. Response times can become frustrating for users if infrastructure isn’t properly designed for performance.
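
Caching is one of the simplest optimizations. The sketch below memoizes a hypothetical `embed_query` function with Python’s built-in `functools.lru_cache`, so a repeated question skips the (simulated) embedding call. It only helps with exact repeats inside a single process; reusing results for paraphrased questions, or sharing a cache across servers, needs a different design.

```python
from functools import lru_cache
from typing import Tuple

CALLS = {"embed": 0}  # counts how often the (hypothetical) expensive embedding step runs

@lru_cache(maxsize=10_000)
def embed_query(query: str) -> Tuple[float, ...]:
    """Hypothetical embedding call; lru_cache returns the stored result for repeated
    queries, so identical questions skip the expensive model round trip."""
    CALLS["embed"] += 1
    # Placeholder vector; a real implementation would call an embedding model here.
    return tuple(float(ord(c)) for c in query[:8])

embed_query("What is our refund policy?")
embed_query("What is our refund policy?")  # served from the cache
print(CALLS["embed"], embed_query.cache_info().hits)  # → 1 1
```

Returning a tuple rather than a list keeps the cached value immutable, so one caller cannot accidentally modify the vector another caller receives.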

5. Prompt Engineering Dependencies

Even with excellent retrieval, RAG systems still depend on effective prompt engineering. The LLM must be properly instructed on how to use retrieved information, when to acknowledge knowledge gaps, and how to synthesize multiple (sometimes contradictory) sources. Poor prompting can lead to ignored context or inappropriate synthesis of retrieved information.
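
A prompt template that addresses these points might look like the sketch below. The wording is an illustrative assumption, not a tested or recommended prompt: numbering the passages lets the model cite them, and the explicit instructions cover knowledge gaps and conflicting sources.

```python
from typing import List

def build_grounded_prompt(question: str, passages: List[str]) -> str:
    """Number each retrieved passage so the model can cite it, and spell out how to
    handle missing or conflicting information."""
    sources = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the numbered sources below.\n"
        "Cite the sources you use in square brackets, e.g. [1].\n"
        "If the sources do not contain the answer, say that you don't know.\n"
        "If sources disagree, say so and cite each of them.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\nAnswer:"
    )

print(build_grounded_prompt(
    "When was the drug approved?",
    ["The drug was approved in 2021.", "A 2023 label update added a new dosage."],
))
```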

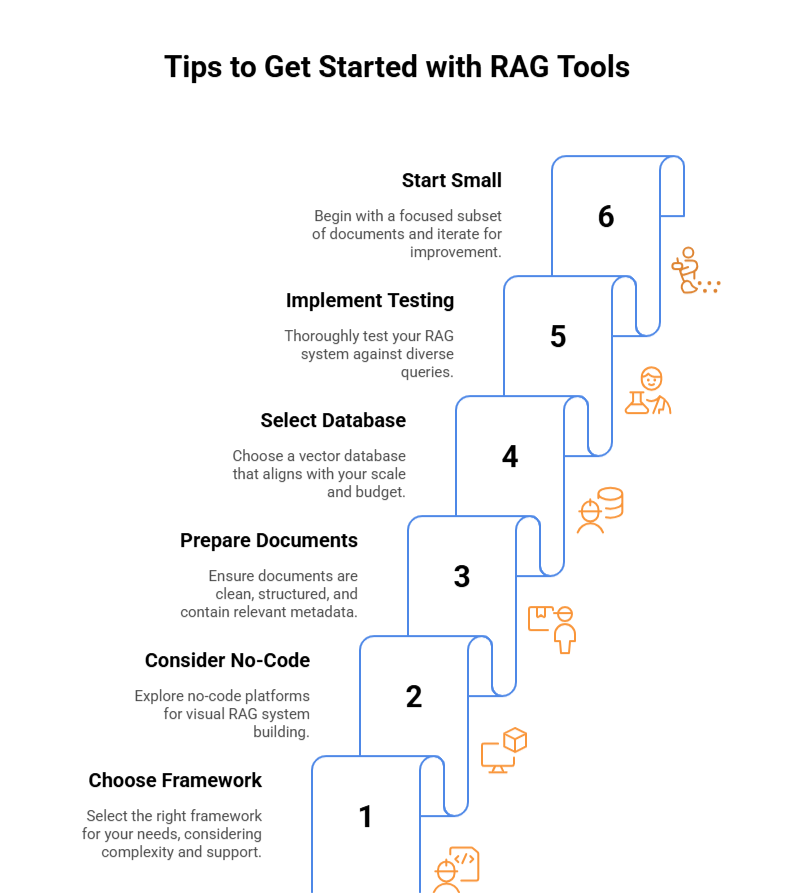

Tips to Get Started with RAG Tools

1. Choose the Right Framework

For developers, LangChain and LlamaIndex provide excellent entry points into RAG implementation. LangChain offers a comprehensive ecosystem with extensive documentation and community support, making it ideal for complex use cases. LlamaIndex excels at data integration with simpler abstractions, making it more approachable for beginners or those with straightforward document retrieval needs.

2. Consider No-Code Options

If coding isn’t your strength, platforms like Chatbase, Flowise, or Langflow offer visual interfaces for building RAG systems. These tools provide drag-and-drop components for creating document processing pipelines and connecting to vector databases, allowing non-developers to experiment with RAG capabilities before committing to custom development.

3. Prepare Your Documents

Document quality significantly impacts RAG performance. Focus on clean, well-structured content with consistent formatting. Remove duplicates, fix formatting issues, and ensure documents contain relevant metadata. Consider preprocessing steps like summarization for lengthy documents to improve retrieval precision.
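
Basic cleanup can be automated before indexing. This hypothetical `prepare_documents` function assumes each raw document is a dict with `text` and `source` keys; it collapses whitespace, skips empty documents, drops exact duplicates, and keeps the source as metadata so answers can cite it. Near-duplicates (for example, two versions of the same policy) need fuzzier techniques such as MinHash.

```python
import hashlib
import re
from typing import Dict, List

def prepare_documents(raw_docs: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Normalize whitespace, drop empty and duplicate documents, and keep metadata.
    Each input dict is assumed to have 'text' and 'source' keys (hypothetical schema)."""
    seen = set()
    cleaned = []
    for doc in raw_docs:
        text = re.sub(r"\s+", " ", doc["text"]).strip()
        if not text:
            continue  # skip empty or whitespace-only documents
        fingerprint = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if fingerprint in seen:
            continue  # exact duplicate, ignoring case and whitespace
        seen.add(fingerprint)
        cleaned.append({"text": text, "source": doc["source"], "id": fingerprint[:12]})
    return cleaned

cleaned_docs = prepare_documents([
    {"text": "Refund  policy:\n30 days.", "source": "policy.pdf"},
    {"text": "refund policy: 30 days.", "source": "faq.html"},
    {"text": "   ", "source": "blank.txt"},
])
print(len(cleaned_docs), cleaned_docs[0]["text"])  # → 1 Refund policy: 30 days.
```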

4. Select an Appropriate Vector Database

Your choice of vector database should align with your scale and budget. For smaller projects or testing, Chroma or FAISS work well locally. As you scale, consider Pinecone for simplicity, Weaviate for multimodal data, or Qdrant for complex filtering needs. Evaluate pricing models carefully as data volumes grow.

5. Implement Rigorous Testing

Before deployment, thoroughly test your RAG system against diverse queries. Create evaluation sets that include expected answers and compare system outputs for relevance and accuracy. Tools like Ragas can help quantify retrieval quality and answer faithfulness. Always include edge cases in your testing to identify potential failure modes.
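
Alongside answer-level tools like Ragas, it helps to measure retrieval on its own. The sketch below computes two standard retrieval metrics, hit rate at k and mean reciprocal rank (MRR), over a small labeled evaluation set; the canned `fake_retrieve` function and the chunk IDs are hypothetical stand-ins for your own retriever and data.

```python
from typing import Callable, Dict, List

def evaluate_retrieval(
    eval_set: List[Dict[str, str]],
    retrieve: Callable[[str, int], List[str]],
    k: int = 3,
) -> Dict[str, float]:
    """Each eval item pairs a question with the ID of the chunk that should answer it.
    hit_rate@k: share of questions whose expected chunk appears in the top k results.
    mrr: mean of 1/rank of the expected chunk (counted as 0 when it is not retrieved)."""
    if not eval_set:
        raise ValueError("eval_set must not be empty")
    hits, reciprocal_rank_sum = 0, 0.0
    for item in eval_set:
        results = retrieve(item["question"], k)[:k]
        if item["expected_id"] in results:
            hits += 1
            reciprocal_rank_sum += 1.0 / (results.index(item["expected_id"]) + 1)
    n = len(eval_set)
    return {"hit_rate@k": hits / n, "mrr": reciprocal_rank_sum / n}

# Hypothetical canned results standing in for a real retriever.
CANNED = {
    "How long is the refund window?": ["refunds-1", "shipping-2", "faq-9"],
    "Which regions do we ship to?": ["faq-9", "shipping-2", "refunds-1"],
}

def fake_retrieve(question: str, k: int) -> List[str]:
    return CANNED[question][:k]

eval_set = [
    {"question": "How long is the refund window?", "expected_id": "refunds-1"},
    {"question": "Which regions do we ship to?", "expected_id": "shipping-2"},
]
print(evaluate_retrieval(eval_set, fake_retrieve, k=3))  # → {'hit_rate@k': 1.0, 'mrr': 0.75}
```

Running the same evaluation set after every change to chunking, embeddings, or `k` makes it easy to see whether an iteration actually helped.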

6. Start Small and Iterate

Begin with a focused subset of high-quality documents rather than indexing everything available. This approach allows you to refine your chunking strategy, embedding models, and retrieval parameters with manageable data volumes before scaling up. Each iteration should improve response quality and system performance.

Transforming Businesses with Kanerika’s Data-Driven LLM Solutions

Kanerika leverages cutting-edge Large Language Models (LLMs) to tackle complex business challenges with remarkable accuracy. Our AI solutions revolutionize key areas such as demand forecasting, vendor evaluation, and cost optimization by delivering actionable insights and managing context-rich, intricate tasks. Designed to enhance operational efficiency, these models automate repetitive processes and empower businesses with intelligent, data-driven decision-making.

Built with scalability and reliability in mind, our LLM-powered solutions seamlessly adapt to evolving business needs. Whether it’s reducing costs, optimizing supply chains, or improving strategic decisions, Kanerika’s AI models provide impactful outcomes tailored to unique challenges. By enabling businesses to achieve sustainable growth while maintaining cost-effectiveness, we help unlock unparalleled levels of performance and efficiency.

Frequently Asked Questions

1. Are RAG tools scalable for enterprise use?

Many RAG frameworks are built to scale across departments and use cases, integrating with APIs and cloud infrastructure for high performance and large data volumes.

2. What are some popular RAG tools or frameworks?

Some popular options include LangChain, LlamaIndex, Haystack, and tools integrated into enterprise platforms like Azure OpenAI with custom connectors.