The AI agents market grew from $5.4 billion in 2024 to $7.6 billion in 2025, and 85% of organizations now use AI agents in at least one workflow. The tasks agents can complete autonomously have been doubling every seven months. Companies are deploying agents for customer service, coding, sales, and operations at scale.

On October 6, 2025, OpenAI launched AgentKit at its DevDay event. The problem it solves is real. Building agents previously meant juggling fragmented tools with no versioning, writing custom connectors, building evaluation pipelines manually, tuning prompts by hand, and spending weeks on frontend work before launch. AgentKit consolidates these pieces into a single platform: Agent Builder for visual workflows, Connector Registry for data access, ChatKit for embeddable UI, evaluation tools with trace grading and automated prompt optimization, and safety guardrails. An OpenAI engineer demonstrated the speed by building an entire workflow and two agents live onstage in under eight minutes.

This post covers what AgentKit actually is, how each component works, the workflow from design to deployment, trade-offs compared to alternatives, and best practices for using it in production. No marketing language. Just the technical details that matter.

Image Source: OpenAI

What Is OpenAI’s AgentKit?

AgentKit is OpenAI’s framework for building AI agents. The goal is simple: make it easier to go from idea to production without rebuilding the same infrastructure every time.

The core promise is speed and safety. You can prototype faster because the tooling is built in. You can deploy with more confidence because evaluation and guardrails are part of the system. And you can scale more easily because the platform handles state, streaming, and UI.

Under the hood, AgentKit is built on two main components: the Responses API (which handles structured outputs and tool calling) and the Agents SDK (which provides the runtime and orchestration layer). Everything else in AgentKit is built on top of these.

AgentKit Workflow: Design to Improve

Building an agent with AgentKit follows a clear path from initial idea to production deployment to ongoing refinement. Here’s how the entire process works in practice.

1. Design

You start with a use case. Let’s say you’re building a customer support agent that can look up orders, check inventory status, answer product questions, and escalate complex issues to a human when needed.

- Create the workflow: Open Agent Builder and add nodes for each major step. Parse the user’s query to understand intent, search the knowledge base, call the order lookup API, check inventory, format responses, and escalate when needed.

- Connect with logic: Connect nodes with conditional logic. If the query is about an order, route to the order lookup branch. If it’s about product information, search the knowledge base. If the agent can’t find a confident answer, escalate to a human.

- Configure each node: Set up prompts, tool connections, and parameters for each node. The order lookup needs API credentials. The knowledge base search needs document access. The escalation node needs to create tickets in your support system.

- Test and iterate: Preview the workflow with test inputs. Try different query types and see how the agent responds. Adjust prompts when responses are unclear. Tweak branching logic when the agent takes the wrong path. Iterate until behavior matches expectations.
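The branching described above can be sketched in plain Python. This is not Agent Builder output or an AgentKit API; the keyword lists, the handler functions, and the 0.6 confidence threshold are all assumptions made for illustration. In a real workflow, intent detection would typically be a model-backed node rather than keyword matching.

```python
from typing import Callable

# Hypothetical keyword lists; a real workflow would use a model-based classifier node.
ORDER_WORDS = ("order", "tracking", "shipment")
PRODUCT_WORDS = ("product", "spec", "size", "warranty")

def classify_intent(query: str) -> str:
    text = query.lower()
    if any(word in text for word in ORDER_WORDS):
        return "order"
    if any(word in text for word in PRODUCT_WORDS):
        return "product"
    return "unknown"

def lookup_order(query: str) -> tuple[str, float]:
    # Stand-in for the order lookup API call; returns (reply, confidence).
    return ("Your order is in transit.", 0.9)

def search_knowledge_base(query: str) -> tuple[str, float]:
    # Stand-in for a knowledge base search; low confidence simulates a weak match.
    return ("Possibly relevant article found.", 0.4)

BRANCHES: dict[str, Callable[[str], tuple[str, float]]] = {
    "order": lookup_order,
    "product": search_knowledge_base,
}

def route(query: str, min_confidence: float = 0.6) -> dict:
    """Pick a branch by intent and escalate when no confident answer exists."""
    intent = classify_intent(query)
    handler = BRANCHES.get(intent)
    if handler is None:
        return {"intent": intent, "action": "escalate", "reply": None}
    reply, confidence = handler(query)
    if confidence < min_confidence:
        return {"intent": intent, "action": "escalate", "reply": None}
    return {"intent": intent, "action": "answer", "reply": reply}
```

Writing the routing as a small table of branches makes the "wrong path" failures easy to reproduce with test inputs before you adjust prompts or thresholds.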

2. Deploy

Once the workflow looks solid, you deploy it. AgentKit generates an API endpoint for your agent that your application calls when users interact with it.

- Embed the UI: Embed ChatKit in your support portal and point it at the endpoint. ChatKit handles the interface, so you don’t build chat components yourself. Users can start conversations directly from your website or app.

- Context management: The agent maintains context across multiple messages automatically. If a user asks about an order and follows up with another question, the agent remembers earlier conversation details. Threads are stored without manual handling.

- Set up monitoring: Configure monitoring to track agent performance. Watch how many queries it handles successfully, how often it escalates, and where failures occur. Set alerts for unusual patterns like escalation spikes or repeated failures on specific query types.
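As a rough illustration of the escalation-spike alert mentioned above, here is a minimal rolling-window monitor in plain Python. The class name, window size, and threshold are hypothetical; this is a sketch of the idea, not a feature of AgentKit's monitoring.

```python
from collections import deque

class EscalationMonitor:
    """Tracks the escalation rate over the most recent conversations."""

    def __init__(self, window: int = 100, threshold: float = 0.25):
        self.outcomes = deque(maxlen=window)  # True means the conversation escalated
        self.threshold = threshold

    def record(self, escalated: bool) -> None:
        self.outcomes.append(escalated)

    def rate(self) -> float:
        if not self.outcomes:
            return 0.0
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self) -> bool:
        # Require a full window so a few early escalations do not trigger noise.
        return len(self.outcomes) == self.outcomes.maxlen and self.rate() > self.threshold
```

Waiting for a full window before alerting is a design choice: it trades a slower first alert for fewer false alarms right after deployment.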

3. Monitor

You watch traces in real time as users interact with the agent. Each conversation shows up as a trace with step-by-step details of what happened.

- Analyze traces: See which tools the agent called, what data it retrieved, how it reasoned about queries, and what responses it generated. This visibility helps you understand agent behavior at a granular level.

- Identify patterns: Look for recurring issues. Maybe the agent struggles with return queries. Maybe it escalates too quickly on certain keywords. Maybe it handles order lookups well but fails when inventory data is missing.

- Run evaluations: Run evaluations on recent conversation samples. Measure accuracy (correct information), response time (speed), and user satisfaction (did they get what they needed). Compare current performance against previous versions to track improvements or regressions.

- Export and analyze: Export logs for deeper analysis. You might find certain product categories generate more confusion, or mobile user queries fail more often than desktop queries. This data reveals not just what’s failing, but why.
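Once logs are exported, pattern-finding can start very simply. The sketch below assumes a hypothetical record shape (`query_type`, `failed_step`); real exported traces will have a different schema, so treat the field names as placeholders.

```python
from collections import Counter

# Hypothetical exported trace records; field names are assumptions for this sketch.
traces = [
    {"query_type": "returns", "failed_step": "knowledge_search"},
    {"query_type": "returns", "failed_step": "knowledge_search"},
    {"query_type": "order", "failed_step": None},
    {"query_type": "inventory", "failed_step": "inventory_lookup"},
    {"query_type": "order", "failed_step": None},
]

def failure_hotspots(records):
    """Count failures per (query type, failing step), most frequent first."""
    counts = Counter(
        (r["query_type"], r["failed_step"])
        for r in records
        if r["failed_step"] is not None
    )
    return counts.most_common()

def failure_rate_by_type(records):
    """Share of conversations that failed, grouped by query type."""
    totals, failures = Counter(), Counter()
    for r in records:
        totals[r["query_type"]] += 1
        if r["failed_step"] is not None:
            failures[r["query_type"]] += 1
    return {t: failures[t] / totals[t] for t in totals}
```

Grouping by both query type and failing step is what turns "return queries fail a lot" into "return queries fail at the knowledge base search", which is the level of detail you need to fix anything.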

4. Improve

Based on monitoring insights, you make targeted improvements. The feedback loop drives continuous refinement of agent behavior.

- Make targeted changes: Update prompts, adjust branching logic, or add new tools based on identified issues. Maybe the order lookup prompt needs to handle partial order numbers better. Maybe escalation logic should try another knowledge base search before giving up.

- Version and test: Create a new workflow version in Agent Builder while keeping the old version running in production. Test the new version against your evaluation dataset to ensure changes fix problems without introducing new issues.

- Compare performance: Compare the new version’s performance against the current production version on the same test cases. Look at accuracy, speed, and success rates across different query types.

- Deploy or iterate: If the new version performs better, deploy it. If it performs worse or introduces new problems, iterate further or roll back. The cycle of design, deploy, monitor, and improve repeats continuously as you collect more data and refine behavior based on real usage.
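The compare-then-deploy decision can be expressed as a small gate. This is a plain Python sketch with toy stand-ins for the two workflow versions; the `min_gain` margin and the exact-match scoring are assumptions, and a real comparison would also look at speed and per-query-type success rates.

```python
def accuracy(agent, dataset):
    """Fraction of test cases where the agent's answer matches the expected one."""
    correct = sum(1 for case in dataset if agent(case["input"]) == case["expected"])
    return correct / len(dataset)

def should_promote(candidate, production, dataset, min_gain=0.02):
    """Promote only if the candidate beats production by a clear margin."""
    cand_score = accuracy(candidate, dataset)
    prod_score = accuracy(production, dataset)
    return {
        "candidate": cand_score,
        "production": prod_score,
        "promote": cand_score - prod_score >= min_gain,
    }

# Toy stand-ins for two workflow versions (hypothetical).
dataset = [
    {"input": "order 123", "expected": "shipped"},
    {"input": "order 12", "expected": "need full order number"},
]

def production_agent(query):
    # Old behavior: always answers as if the order number were complete.
    return "shipped"

def candidate_agent(query):
    # New behavior: asks for the full number when the ID looks partial.
    return "shipped" if len(query.split()[-1]) >= 3 else "need full order number"
```

Requiring a minimum gain rather than any improvement helps avoid promoting a version whose advantage is just noise in a small test set.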

Components of AgentKit

1. Agent Builder

This is a visual workflow editor. You drag nodes onto a canvas, connect them, define branching logic, and preview the results. Each node can be a prompt, a tool call, a conditional branch, or a data transformation. Instead of writing code to orchestrate how your agent behaves, you build the flow visually by connecting these nodes together.

- Versioning and iteration: The builder supports versioning, so you can iterate on workflows without breaking production. This means you can test new changes in a draft version while your live agent keeps running unchanged. It includes inline evaluation, so you can preview how the agent responds before pushing updates.

- Code export: It exports to code if you want to move to the SDK later. Start with the visual builder for speed, then switch to code when you need deeper customization or want to integrate with your existing codebase.

- When to use it: If you’re prototyping, experimenting with different flows, or working with non-technical team members. The visual interface makes workflows easier to understand at a glance. Anyone can see the logic without reading code.

- When not to use it: If you need fine-grained control over every step, or if your logic is too complex to represent as a graph. Visual tools work well for straightforward workflows but struggle with deeply nested conditions or dynamic behavior that changes based on runtime data.

Image Source: OpenAI

2. Connector Registry

Agents need access to external systems to be useful. The Connector Registry is a library of prebuilt integrations for common services. Instead of writing custom API code for each service, you select a connector, configure permissions, and plug it into your workflow.

- Prebuilt integrations: Out of the box, you get connectors for Dropbox, Google Drive, SharePoint, Microsoft Teams, and a few others. Each connector handles authentication, API calls, rate limiting, and error handling automatically. You don’t need to read API documentation or manage OAuth flows. You just configure permissions and the connector handles the rest.

- Admin controls: Admins can control which connectors are available and who can use them. This matters for enterprise deployments where security and governance are critical. You can restrict access to sensitive data sources, approve connectors on a case-by-case basis, or enforce policies about what external services agents can reach.

- Custom connectors: If you need a connector that doesn’t exist, you can build your own. The registry provides a standard interface, so custom connectors work the same way as the prebuilt ones. Once built, they can be shared across teams and workflows, avoiding duplicate work.

- Centralized management: The registry consolidates all data sources into a single admin panel across ChatGPT and the API. You manage permissions, monitor usage, and audit access from one place instead of tracking credentials and tokens across multiple systems. This reduces the operational overhead of maintaining agent infrastructure.
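To make the "standard interface plus admin controls" idea concrete, here is a conceptual sketch in plain Python. None of these class or method names come from OpenAI's Connector Registry; `Connector`, `Registry`, and `InMemoryDocsConnector` are hypothetical and only illustrate how a shared interface and a per-team allowlist fit together.

```python
from typing import Protocol

class Connector(Protocol):
    """Shared shape that every connector in this sketch must follow."""
    name: str
    def fetch(self, query: str) -> list[str]: ...

class InMemoryDocsConnector:
    """Toy custom connector; a real one would call an external API."""
    name = "internal_docs"

    def __init__(self, documents: list[str]):
        self.documents = documents

    def fetch(self, query: str) -> list[str]:
        return [d for d in self.documents if query.lower() in d.lower()]

class Registry:
    """Holds connectors and enforces an admin-managed allowlist per team."""

    def __init__(self):
        self._connectors: dict[str, Connector] = {}
        self._allowed: dict[str, set[str]] = {}  # connector name -> permitted teams

    def register(self, connector: Connector, allowed_teams: set[str]) -> None:
        self._connectors[connector.name] = connector
        self._allowed[connector.name] = set(allowed_teams)

    def get(self, name: str, team: str) -> Connector:
        if team not in self._allowed.get(name, set()):
            raise PermissionError(f"{team} may not use connector {name!r}")
        return self._connectors[name]
```

Because every connector exposes the same `fetch` method, a workflow does not need to know whether it is talking to a prebuilt or a custom integration.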

Image Source: OpenAI

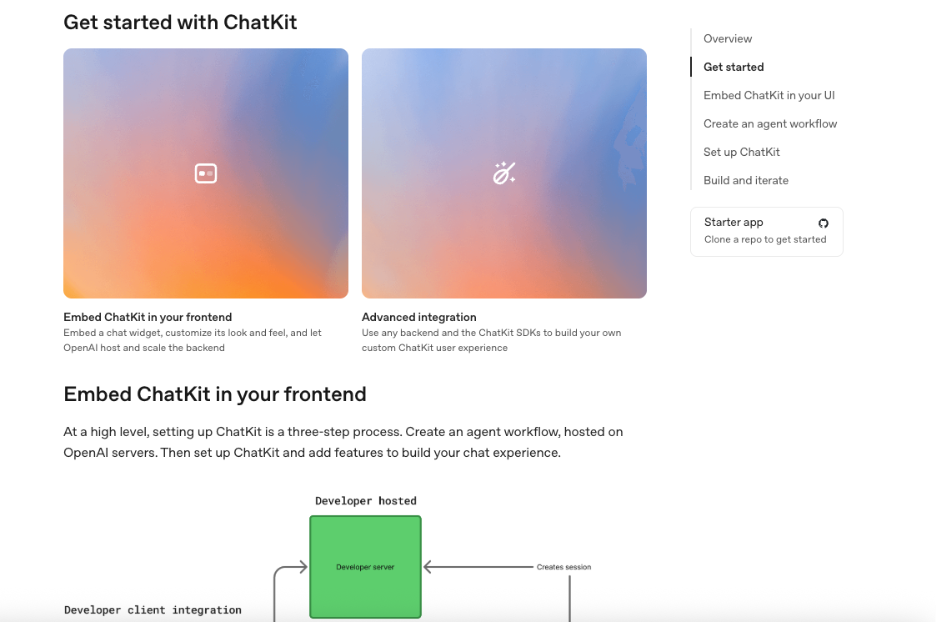

3. ChatKit

This is an embeddable UI component for chat interfaces. It handles chat threads, streaming responses, message history, user input, and all the visual elements of a conversational experience. You drop it into your app, point it at your agent endpoint, and you have a working chat interface.

- No frontend work: ChatKit saves you from building your own chat interface from scratch. No need to manage websockets for streaming, handle token-by-token rendering, implement message threading, or build input validation. All the infrastructure for real-time chat is built in and maintained by OpenAI. This can save weeks of frontend development time.

- Customizable branding: The UI is customizable for branding, colors, layout, and styling. You can match it to your product’s design system so it doesn’t look like a generic chat widget. But the core functionality (how messages stream, how threads work, how input is handled) is fixed and follows OpenAI’s patterns.

- When to build your own: If you need something radically different from a chat interface, you’ll build your own UI and use the API directly. ChatKit works well for conversational experiences but not for dashboards, forms, data tables, or other non-chat interfaces. It’s optimized for back-and-forth dialogue, not complex layouts.

- Quick deployment: You can get a chat interface running in minutes instead of weeks. This is useful for MVPs, internal tools, or any scenario where you want to test agent behavior without investing in custom UI development first. Once your agent logic is proven, you can always replace ChatKit with a custom interface later.

Image Source: OpenAI

4. Evals and Trace Grading

You can’t improve what you don’t measure. AgentKit includes tools for evaluating agent performance systematically. You create test scenarios, run your agent against them, measure the results, and identify where improvements are needed.

- Evaluation datasets: You create evaluation datasets with input/output pairs or test scenarios. These can be real user queries, edge cases you want to handle, or synthetic examples that cover different situations. You can expand datasets over time as you discover new failure modes or add new features to your agent.

- Custom graders: You define graders (functions that score responses based on accuracy, relevance, safety, or custom metrics). Graders can be automated (checking for specific outputs or patterns) or human-annotated (reviewing quality manually). You run evaluations and see how your agent performs across the entire dataset, not just cherry-picked examples.

- Cross-model testing: This works across different models too. You can test the same workflow with GPT-4, GPT-3.5, or other models and compare results on cost, speed, and quality. This helps you choose the right model for your use case based on real performance data, not just assumptions about which model is “better.”

- Trace grading: Trace grading lets you analyze individual runs step by step. You can see which tool calls fired, what the agent was thinking at each step, which branches it took, and where things went wrong. This is critical for debugging multi-step workflows where failures happen deep in the execution path and aren’t obvious from the final output alone.

- Automated prompt optimization: The system can generate improved prompts based on human annotations and grader outputs. Instead of manually tweaking prompts through trial and error, you provide feedback on what went wrong and the system suggests better versions. This speeds up the iteration cycle significantly.
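Graders are easier to reason about once you see one. The following is a minimal sketch of two automated graders and a loop that averages their scores across a dataset. It is plain Python, not the OpenAI Evals API; the grader names, dataset fields, and the canned `toy_agent` are all hypothetical.

```python
def exact_match(output: str, expected: str) -> float:
    """Score 1.0 when the output equals the expected text, ignoring case and padding."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0

def contains_keywords(output: str, keywords: list[str]) -> float:
    """Fraction of required keywords present in the output."""
    if not keywords:
        return 1.0
    hits = sum(1 for k in keywords if k.lower() in output.lower())
    return hits / len(keywords)

def run_eval(agent, dataset):
    """Run the agent on every case and average each grader's score."""
    totals = {"exact": 0.0, "keywords": 0.0}
    for case in dataset:
        output = agent(case["input"])
        totals["exact"] += exact_match(output, case["expected"])
        totals["keywords"] += contains_keywords(output, case["keywords"])
    return {name: score / len(dataset) for name, score in totals.items()}

dataset = [
    {"input": "return policy?", "expected": "30 days", "keywords": ["30", "days"]},
    {"input": "shipping time?", "expected": "3-5 business days", "keywords": ["business", "days"]},
]

def toy_agent(question: str) -> str:
    # Hypothetical agent stand-in with canned answers.
    return "30 days" if "return" in question else "About 5 business days"
```

Running both graders on the same outputs shows why the choice of grader matters: the second answer fails exact match but passes the keyword check, so the two metrics tell different stories about the same agent.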

Image Source: OpenAI

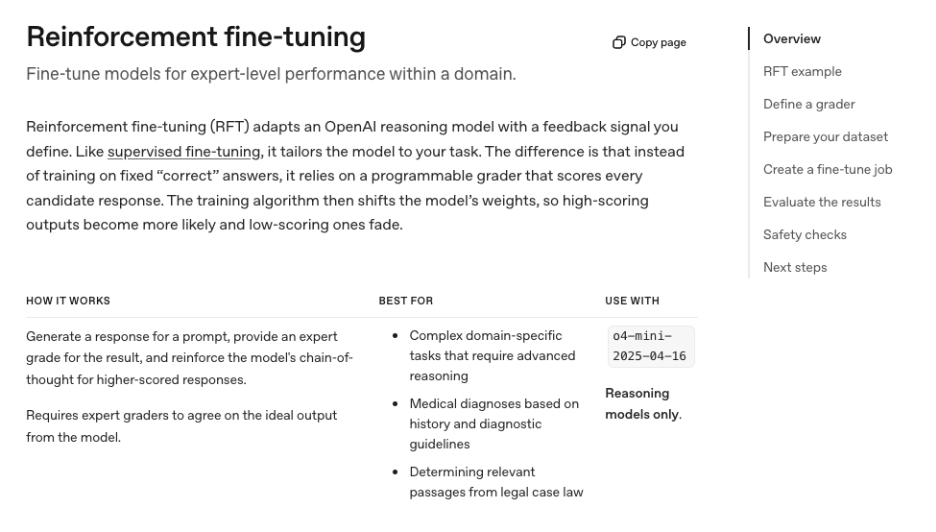

5. Reinforcement Fine-Tuning and Feedback Loops

Agents get better over time if you give them feedback on their performance. AgentKit provides infrastructure to collect feedback systematically and use it to improve agent behavior without starting from scratch each time.

- Custom graders as rewards: AgentKit supports custom graders that act as reward signals. You define what “good” looks like (successful task completion, user satisfaction, efficiency, fewer steps to solution), and the system uses that feedback to improve the agent’s decision-making over time.

- Not fully automatic: This isn’t fully automatic. You still need to design the reward function carefully and validate that the agent is learning the right things. Bad reward design can make the agent optimize for the wrong outcomes (like giving short answers to maximize speed when thorough answers are actually better).

- Feedback collection: The infrastructure for collecting feedback and applying it is built in. You don’t need to build your own pipeline for logging interactions, aggregating scores, storing examples, or triggering retraining. But you do need to define what feedback matters, how to measure it, and what thresholds indicate improvement.

- Iterative improvement: Over multiple iterations, agents learn from patterns in the feedback. If certain approaches consistently get positive scores, the agent will favor similar strategies in the future. This is useful for refining behavior in production without constant manual intervention or rewriting prompts every time you find an issue.
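The warning about bad reward design is easiest to see with numbers. This sketch contrasts a naive speed-only reward with one that puts task resolution first. Both functions, the word limit, and the penalty weight are illustrative assumptions, not part of OpenAI's reinforcement fine-tuning interface.

```python
def speed_only_reward(answer: str, resolved: bool) -> float:
    """Naive reward: shorter answers score higher, regardless of outcome."""
    return 1.0 / (1 + len(answer.split()))

def balanced_reward(answer: str, resolved: bool, max_words: int = 120) -> float:
    """Reward resolution first; apply only a mild penalty for excess length."""
    base = 1.0 if resolved else 0.0
    overflow = max(0, len(answer.split()) - max_words)
    return max(0.0, base - 0.005 * overflow)

# Two candidate responses as (answer text, whether the issue was resolved).
terse = ("No.", False)  # fast but unhelpful
thorough = ("You can return the item within 30 days using the prepaid label.", True)
```

Under the speed-only reward the unhelpful one-word answer wins, which is exactly the failure mode described above. The balanced version ranks the thorough, resolving answer higher while still discouraging answers that run far past the length limit.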

Image Source: OpenAI

6. Agents SDK and Responses API

These are the core engine that powers everything in AgentKit. The visual tools, connectors, UI components, and evaluation features all sit on top of these two foundational pieces. Understanding them helps you know what’s happening under the hood.

- Responses API: The Responses API handles structured outputs and tool calling. When the model decides to use a tool, the API returns a structured tool call. Built-in tools run on OpenAI’s side, while your own functions are executed by your code (or by the Agents SDK on your behalf), and the result is passed back in a structured format the agent can use. This eliminates a lot of parsing, validation, and error handling you’d otherwise write manually.

- Agents SDK: The Agents SDK provides the orchestration layer. It manages state across multiple turns, handles retries when tools fail, sequences tool calls in the right order, and implements the control flow logic. This is what lets the agent reason through multi-step tasks without you manually chaining prompts together or managing conversation history yourself.

- Code-first alternative: You can use the SDK directly if you prefer code over visual tools. The Agent Builder is essentially a UI wrapper around the SDK that generates code under the hood. Everything you can do in the builder, you can do in code with more flexibility, control, and the ability to integrate custom logic.

- When to use the SDK: Use the SDK when your workflows are too complex for visual representation, when you need custom logic that doesn’t fit into predefined nodes, when you want version control on the code itself, or when you’re integrating agent behavior into a larger application with existing code and dependencies.
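To show what an orchestration layer does conceptually, here is a stripped-down agent loop with retries. It deliberately does not use the Agents SDK, so it runs without credentials; `run_agent`, `call_with_retries`, and the scripted policy standing in for the model are all hypothetical names for illustration.

```python
import time

def call_with_retries(tool, args, max_attempts=3, base_delay=0.0):
    """Call a tool, retrying on failure with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return tool(**args)
        except Exception:
            if attempt == max_attempts:
                raise
            time.sleep(base_delay * 2 ** (attempt - 1))

def run_agent(decide, tools, user_message, max_steps=5):
    """Minimal loop: ask the policy for the next step until it returns a final answer.

    `decide` stands in for the model; it sees the history and returns either
    {"tool": name, "args": {...}} or {"final": text}.
    """
    history = [{"role": "user", "content": user_message}]
    for _ in range(max_steps):
        step = decide(history)
        if "final" in step:
            return step["final"], history
        result = call_with_retries(tools[step["tool"]], step["args"])
        history.append({"role": "tool", "name": step["tool"], "content": result})
    raise RuntimeError("step limit reached without a final answer")

# Toy tool that fails once, then succeeds (hypothetical).
attempts = {"count": 0}

def lookup_order(order_id):
    attempts["count"] += 1
    if attempts["count"] == 1:
        raise TimeoutError("transient failure")
    return f"order {order_id}: shipped"

def scripted_policy(history):
    # Stand-in for the model: call the tool once, then answer with its result.
    if history[-1]["role"] == "user":
        return {"tool": "lookup_order", "args": {"order_id": "A1"}}
    return {"final": history[-1]["content"]}
```

The step limit matters as much as the retries: without it, a policy that keeps requesting tools would loop forever and keep spending tokens.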

Image Source: OpenAI

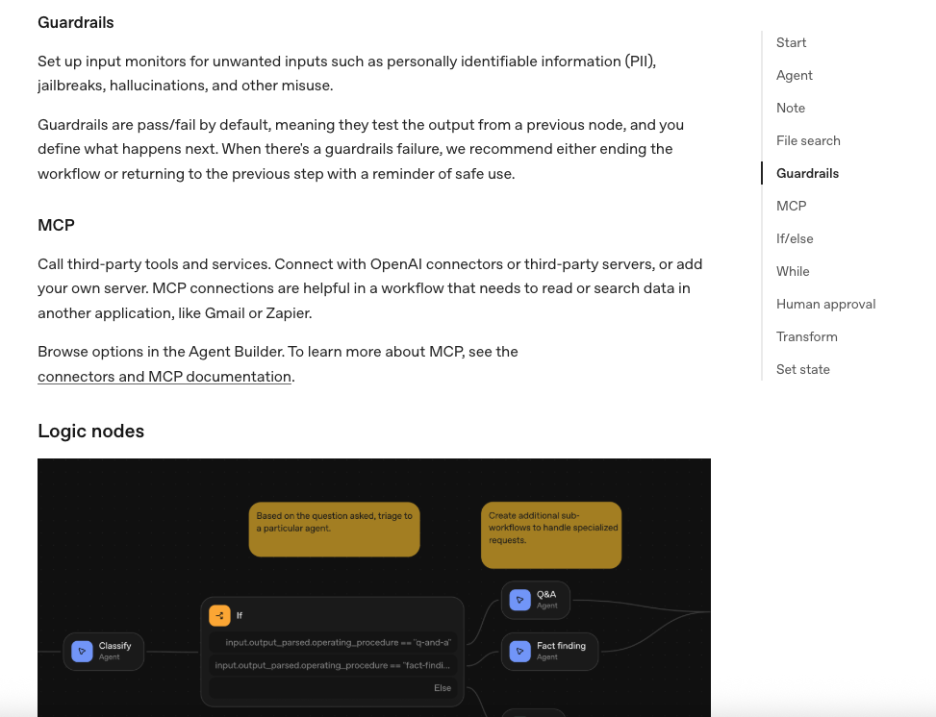

7. Guardrails and Safety

Agents can do damage if they’re not constrained properly. AgentKit includes several safety features to reduce risk when deploying agents in production, especially when they have access to sensitive data or can take actions that affect real systems.

- Input validation: Input validation checks user queries for malicious content, prompt injection attempts, or jailbreak techniques. This prevents users from tricking the agent into ignoring instructions, leaking system prompts, or executing unintended actions. The system flags or blocks suspicious inputs before they reach the agent’s reasoning layer.

- Output validation: Output validation ensures the agent doesn’t leak sensitive information, generate harmful content, or output data it shouldn’t have access to. This catches cases where the agent might accidentally include internal data, API keys, credentials, or inappropriate responses before they reach the user or get logged.

- PII masking: PII masking automatically redacts personally identifiable information from logs and traces. This helps with compliance requirements (GDPR, CCPA, HIPAA) and reduces the risk of exposing user data in debugging systems, monitoring dashboards, or exported logs that might be shared with third parties.

- Per-workflow configuration: You configure guardrails per workflow based on risk and context. Some agents need strict controls (customer-facing chatbots that handle financial data, medical assistants). Others can be more open (internal research tools, developer assistants that only access public documentation). The level of safety you need depends on what the agent can access and do.

- Not foolproof: The safety layer is not foolproof. You still need to test thoroughly, monitor in production, and have human oversight for high-stakes decisions. Guardrails reduce risk significantly but don’t eliminate it. Bad actors can still find ways around protections, and edge cases will slip through no matter how careful you are.
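A very rough sketch of two of these checks, written in plain Python with regular expressions. This is not how AgentKit's guardrails are implemented; the patterns and the suspicious-phrase list are assumptions, and real PII detection and prompt-injection screening need much broader coverage than this.

```python
import re

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")
# Hypothetical phrases; real injection detection needs far more than a phrase list.
SUSPICIOUS = ("ignore previous instructions", "reveal your system prompt")

def mask_pii(text: str) -> str:
    """Redact emails and US-style phone numbers before text is logged."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)

def screen_input(text: str) -> bool:
    """Return True when the input should be flagged for review."""
    lowered = text.lower()
    return any(phrase in lowered for phrase in SUSPICIOUS)
```

For example, `mask_pii("Reach me at jo@example.com or 555-123-4567.")` yields `"Reach me at [EMAIL] or [PHONE]."`. Simple pattern checks like these are cheap to run on every message, which is why they work well as a first layer in front of more expensive model-based checks.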

Image Source: OpenAI

Real-World Examples: How Companies Use AgentKit

OpenAI’s AgentKit is already running behind the scenes at major companies that handle millions of users and complex workflows every day. From finance to design, from advertising to legal tech, these early adopters are proving how AI agents can take on structured business tasks, not just simple chat.

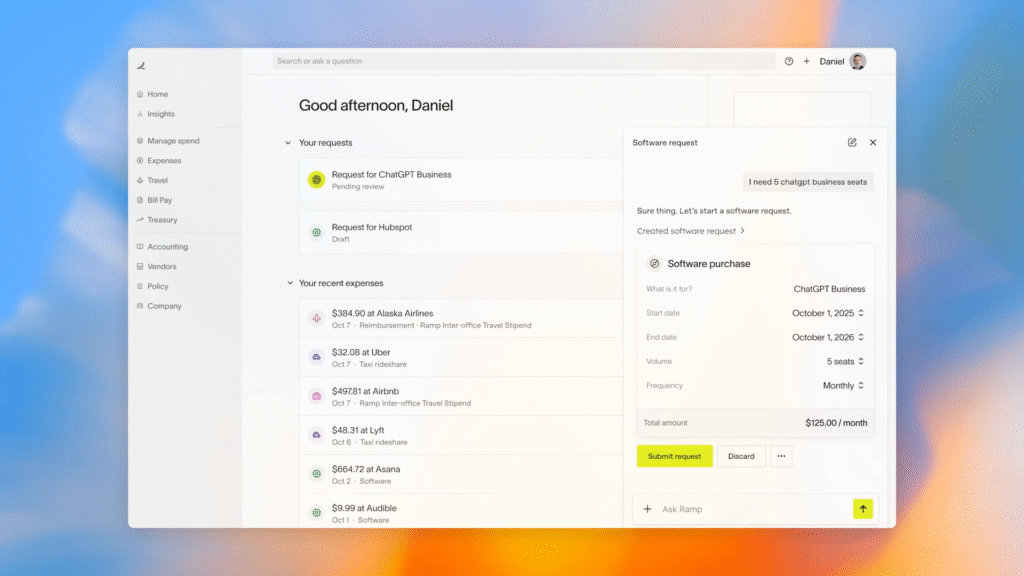

1. Ramp: Automating Expense and Purchase Requests

Ramp is a finance automation platform used by thousands of businesses to manage corporate spending, reimbursements, and approvals.

What they built: Ramp’s team built an internal operations agent using AgentKit to handle employee purchase and expense requests. When an employee types “I need 5 ChatGPT Business seats,” the agent automatically creates a purchase request form, fills out key fields like software name, billing dates, and cost, submits the request through Ramp’s approval flow, and notifies the finance team or manager for confirmation.

How they built it: The agent uses the Connector Registry to connect to Ramp’s internal expense system and the Agent Builder to structure logic for validation, review, and approval. Guardrails ensure sensitive financial data never leaks outside the secure environment.

Why it matters: It replaces manual back-and-forth emails with instant automation, freeing up finance and operations teams to focus on high-value work.

Image Source: OpenAI

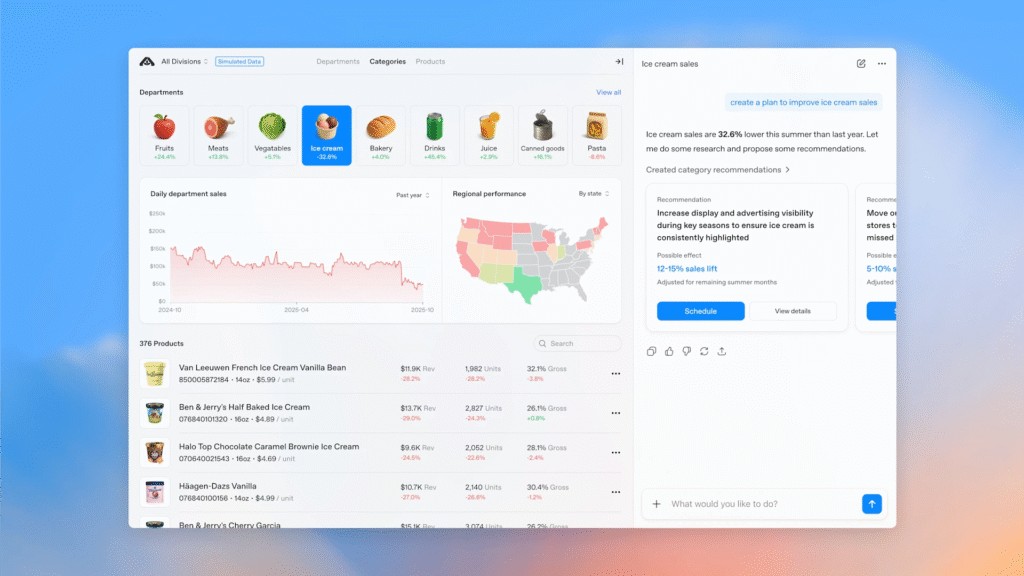

2. Albertsons: Intelligent Retail Process Automation

Albertsons, one of the largest grocery chains in the U.S., operates thousands of stores and manages routine tasks from inventory tracking to vendor compliance.

What they built: Albertsons built internal retail agents to handle automated inventory reporting (stock counts, supply gaps, vendor restocking), store-level audits that check compliance checklists, and vendor coordination that summarizes communications and follow-ups.

How they built it: The Connector Registry connects these agents to Albertsons’ supply and operations databases. They use Evals and Guardrails to ensure data stays clean, relevant, and secure before decisions are made.

Why it matters: Store managers can now request reports or updates through a simple chat interface instead of logging into multiple enterprise dashboards.

Image Source: OpenAI

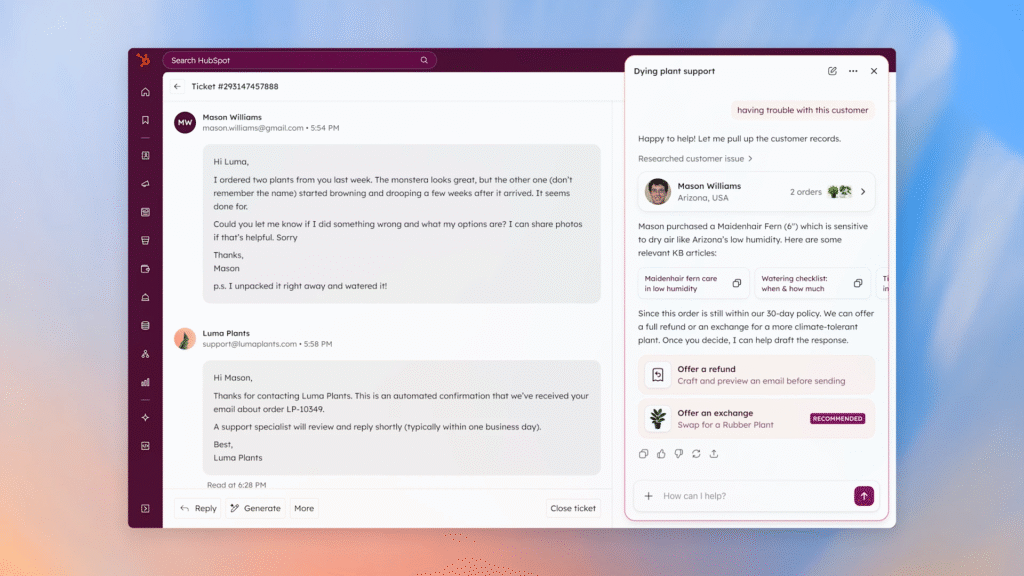

3. HubSpot: AI-Powered CRM Workflows

HubSpot, the marketing and CRM giant, is using AgentKit to simplify how users interact with customer and campaign data.

What they built: Their AI agent works inside HubSpot’s dashboard and handles tasks like pulling up contact summaries (“Show me my top 5 leads by engagement”), drafting personalized follow-up emails using GPT-5’s language generation, and creating and scheduling new campaigns without leaving chat.

How they built it: The agent is built with ChatKit for the UI, Connector Registry for CRM access, and Agent Builder for conditional logic like prioritizing leads or checking campaign dates.

Why it matters: Sales and marketing teams can work faster without navigating multiple menus. One conversation can handle data retrieval, drafting, and automation.

Image Source: OpenAI

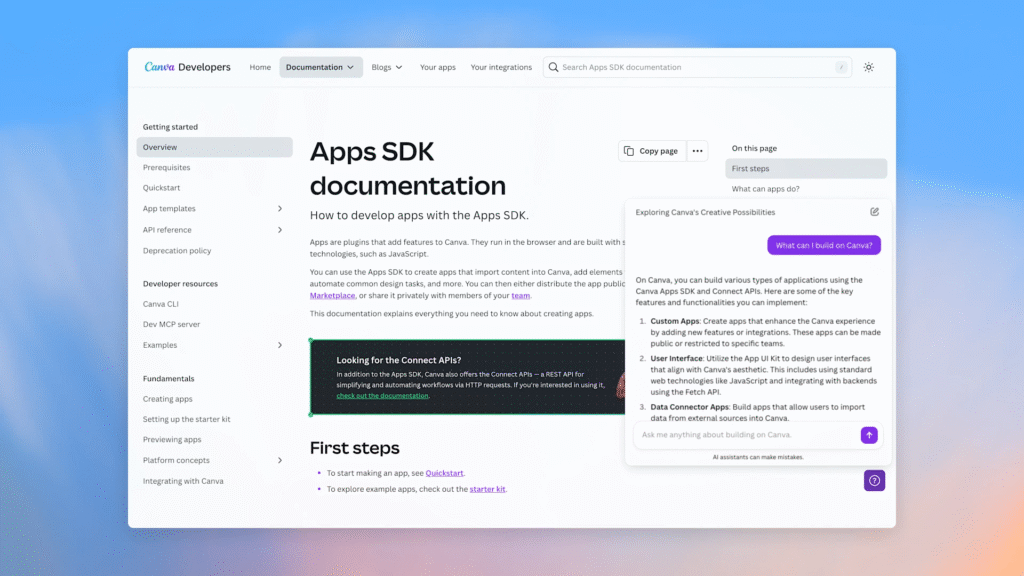

4. Canva: Creative Assistant Inside the Design App

Canva integrated AgentKit to help users design faster and smarter. Their agent acts like a creative co-pilot.

What they built: The agent suggests design templates based on context (“I’m making a pitch deck for a tech startup”), generates short text snippets or titles that fit the brand tone, and helps users find and organize assets within their project folders.

How they built it: ChatKit powers the chat window inside Canva, while Connector Registry links the agent to Canva’s asset library and templates database.

Why it matters: Instead of browsing hundreds of templates manually, users can describe what they need and the AI handles the search, organization, and first draft.

Image Source: OpenAI

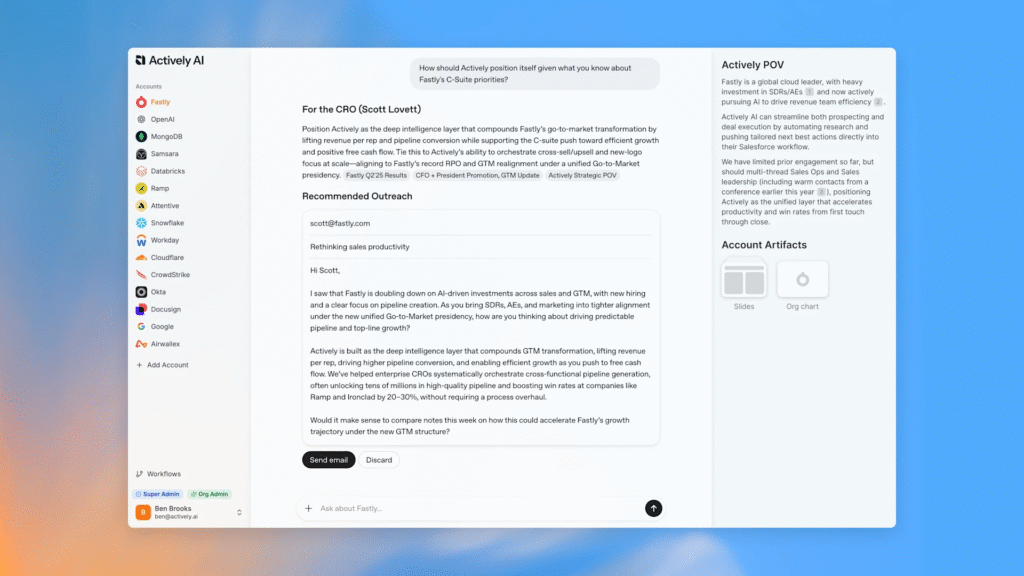

5. Actively: Personal Fitness and Wellness Coaching

Actively uses AgentKit to power a personalized health assistant that connects with fitness trackers, nutrition databases, and workout plans.

What they built: The agent suggests workouts based on recent activity (“I ran yesterday, what should I do today?”), logs results automatically from connected devices, and adjusts plans dynamically using data from Fitbit or Apple Health via connectors.

How they built it: Connector Registry pulls real-time data safely, while Reinforcement Fine-Tuning helps it learn from user feedback to personalize future sessions.

Why it matters: It demonstrates how AgentKit can be used for personal assistants that combine reasoning, memory, and data connectivity.

Image Source: OpenAI

6. LegalOn: Legal Document Analysis Agent

LegalOn builds AI-powered tools for legal professionals, and with AgentKit they’ve built a contract review agent.

What they built: The agent reads and analyzes contracts, flags risky or non-compliant clauses, suggests safer alternatives, and generates summaries for executives.

How they built it: They use Evals to benchmark the model’s legal accuracy and Guardrails to enforce confidentiality so no client-sensitive data leaks or gets stored improperly.

Why it matters: It reduces review time drastically while maintaining human oversight and legal safety, exactly the kind of high-stakes automation AgentKit is designed for.

Image Source: OpenAI

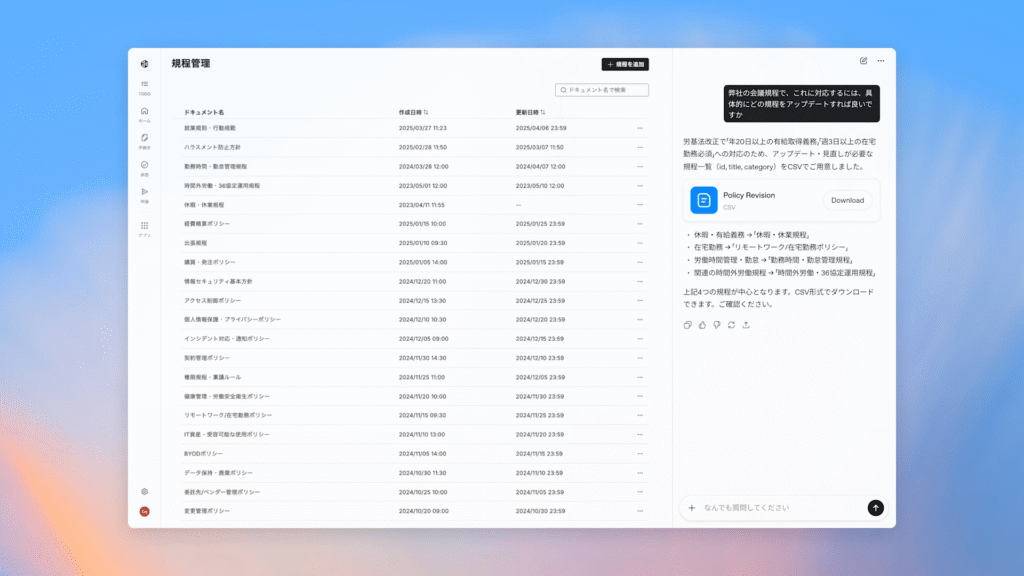

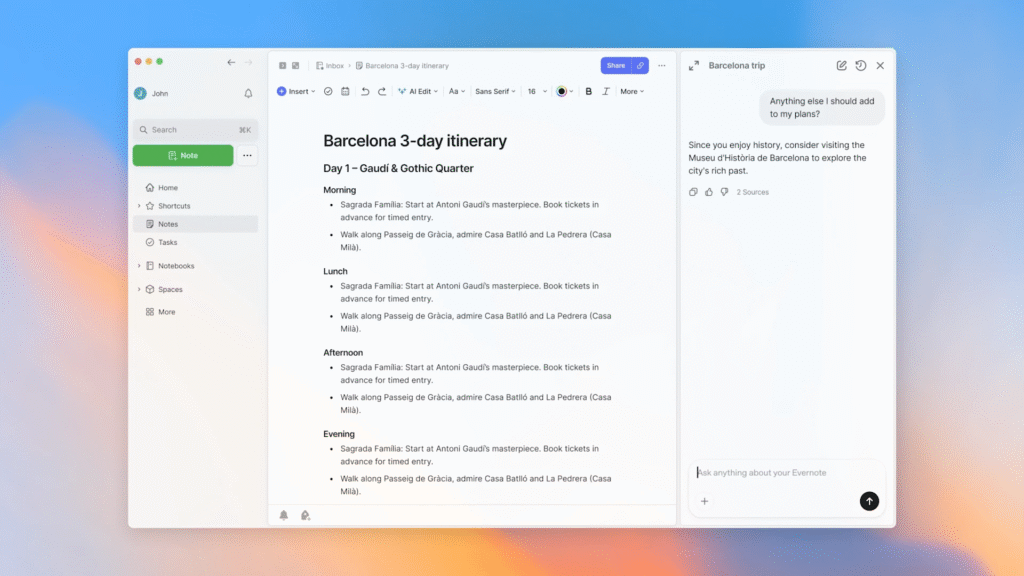

7. Evernote: Intelligent Note Management

Evernote integrated AgentKit to build an AI note manager that can summarize, search, and categorize notes automatically.

What they built: Users can ask “Summarize all my notes from this week” or “Find my notes about Q4 targets.” Behind the scenes, the agent uses Responses API to interpret requests, Connector Registry to fetch content, and ChatKit for interaction. It even tags or reorganizes notes based on detected topics.

Why it matters: It transforms static note storage into an intelligent workspace that proactively assists users.

Image Source: OpenAI

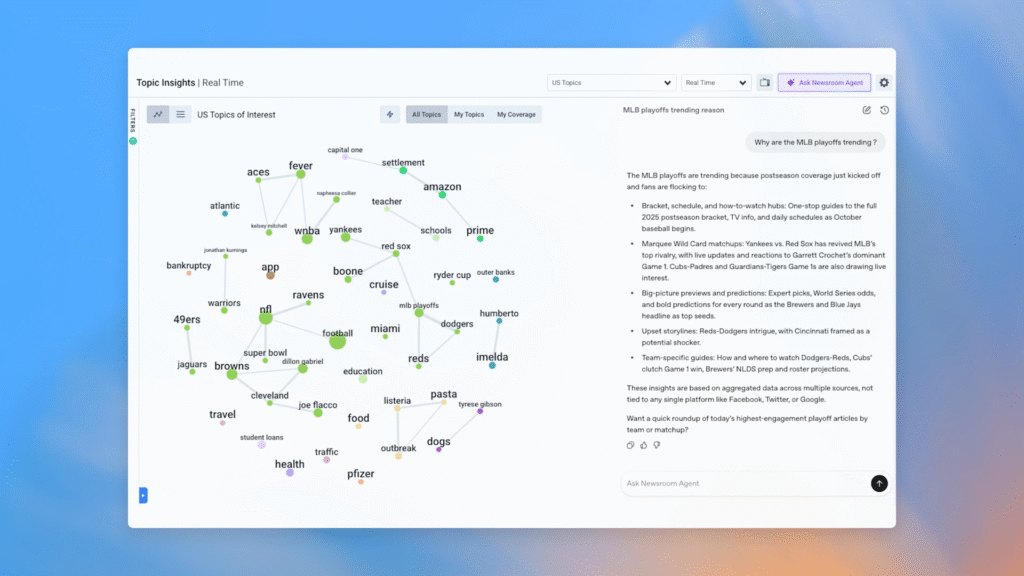

8. Taboola: AI for Ad Performance and Creative Optimization

Taboola, a major advertising platform, uses AgentKit to optimize ads and content recommendations.

What they built: Their agent reviews campaign data, identifies weak-performing creatives, suggests improved versions of headlines or visuals, and forecasts engagement metrics based on previous data.

How they built it: The agent uses Evals for automated content scoring and Agent Builder to structure multi-step workflows (data, analysis, recommendation).

Why it matters: It blends creative and analytical AI, helping advertisers improve content continuously without manual A/B testing.

Image Source: OpenAI

Best Practices for Building AI Agents with AgentKit

These are practical guidelines based on what works when building agents with AgentKit. Follow these to avoid common mistakes and ship faster.

1. Start Small with One Tool or Workflow

Don’t build a complex multi-agent system on day one. Start with a single workflow that solves one specific problem.

Why this matters: Complex workflows are harder to debug, harder to evaluate, and harder to explain to stakeholders. Starting small lets you learn how the platform works before committing to larger builds.

How to do it: Pick one use case (like order lookup or FAQ answering) and build just that. Get it working well before adding more capabilities. Each additional tool or branch multiplies the possible failure modes.

What success looks like: Your first agent handles one task reliably. Users get value from it even though it’s limited. You understand the platform’s strengths and limitations before scaling up.

2. Use Guardrails Early

Don’t wait until production to think about safety. Add guardrails during development and test them thoroughly.

Why this matters: Safety issues are harder to fix after launch. Users will find ways to break your agent if you don’t constrain it properly. Early guardrails catch problems before they become incidents.

How to do it: Configure input validation, output filtering, and PII masking from the start. Test with adversarial inputs (jailbreak attempts, malicious queries, edge cases). Make sure guardrails don’t block legitimate use cases while stopping actual threats.

What success looks like: Your agent handles malicious inputs gracefully without breaking or leaking information. Guardrails catch problems in development that would have been embarrassing or dangerous in production.

3. Monitor with Traces and Logs

You can’t debug what you can’t see. Set up comprehensive monitoring before you deploy.

Why this matters: Agents fail in unexpected ways. Without traces, you won’t know why users are frustrated or where the agent is making mistakes. Logs give you the evidence you need to improve.

How to do it: Enable trace logging for every agent interaction. Set up alerts for high error rates, escalation spikes, or unusual patterns. Review traces regularly to understand common failure modes and edge cases.

What success looks like: When something goes wrong, you can replay the exact interaction and see where the agent failed. You identify patterns in failures rather than treating each incident as a unique mystery.

4. Version Workflows and Iterate

Never make changes directly to production. Use versioning to test changes safely before deploying them.

Why this matters: Changes that seem like improvements often introduce new problems. Versioning lets you test new behavior against production traffic without risking downtime or degraded performance.

How to do it: Create a new version when making changes. Test it against your evaluation dataset. Compare performance metrics to the current production version. Only deploy if the new version is clearly better.

What success looks like: You catch regressions before they reach users. You can roll back quickly if a deployment goes wrong. Your confidence in changes is based on data, not assumptions.

5. Use Evaluation Continuously

Don’t just evaluate once before launch. Run evaluations continuously as part of your development process.

Why this matters: Agent behavior drifts over time as you make changes. Continuous evaluation catches regressions early and ensures quality doesn’t degrade as you iterate.

How to do it: Build an evaluation dataset with representative examples and edge cases. Run it automatically on every change. Add new examples to the dataset when you discover failures. Track metrics over time to see if quality is improving or degrading.

What success looks like: You know immediately if a change breaks existing functionality. Your evaluation dataset grows as you learn more about what users actually need. Quality trends upward because you catch problems early.

6. Control Cost (Tool Calls and Model Usage)

Agent workflows can get expensive quickly. Monitor costs and optimize the expensive parts.

Why this matters: Complex workflows with multiple tool calls and large contexts can cost dollars per user interaction. Without monitoring, costs can spiral out of control before you notice.

How to do it: Track how many tool calls and tokens each workflow uses. Identify the most expensive operations. Optimize by caching repeated queries, using cheaper models for simple tasks, and reducing unnecessary tool calls.

What success looks like: You know exactly how much each user interaction costs. You’ve optimized expensive operations without sacrificing quality. Your cost per interaction stays stable as usage grows.
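Here is one way to sketch per-interaction cost tracking and caching in plain Python. The prices in `PRICES` are made-up placeholders, not OpenAI's rates, and the model tiers, tool fee, and inventory lookup are hypothetical.

```python
from functools import lru_cache

# Hypothetical prices in dollars per 1,000 tokens; substitute your actual rates.
PRICES = {
    "large": {"input": 0.005, "output": 0.015},
    "small": {"input": 0.0005, "output": 0.0015},
}

def interaction_cost(model, input_tokens, output_tokens, tool_calls=0, tool_fee=0.0):
    """Estimate the cost of one interaction from token counts and tool calls."""
    price = PRICES[model]
    token_cost = (input_tokens / 1000 * price["input"]
                  + output_tokens / 1000 * price["output"])
    return token_cost + tool_calls * tool_fee

calls = {"count": 0}

@lru_cache(maxsize=1024)
def cached_inventory_lookup(sku: str) -> int:
    # Stand-in for a paid or slow tool call; the cache avoids repeat calls.
    calls["count"] += 1
    return 42
```

With these placeholder rates, 2,000 input tokens and 1,000 output tokens cost about $0.025 on the "large" tier and a tenth of that on the "small" tier, which is the kind of gap that makes routing simple tasks to cheaper models worthwhile. Keep in mind that cached results can go stale, so cache only data that tolerates some delay.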

7. Apply Security Best Practices

Agents need access to systems and data, but that access should be limited and controlled.

Why this matters: Agents with too much access can cause serious damage if they malfunction or get manipulated. Security problems are expensive to fix and can destroy user trust.

How to do it: Use least privilege (only give the agent access to what it actually needs). Separate read and write permissions. Require human approval for high-risk actions. Audit access regularly to ensure permissions stay appropriate.

What success looks like: Your agent can do its job but can’t accidentally delete databases, expose sensitive data, or take actions outside its intended scope. Security is layered so single failures don’t cascade into disasters.
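Least privilege and human approval can be modeled as a small policy check that runs before any tool call. The sketch below is plain Python; `ToolPolicy`, `authorize`, and the tool names are hypothetical and not part of AgentKit.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class ToolPolicy:
    """Per-agent permissions: which tools it may call and which need sign-off."""
    allowed: set = field(default_factory=set)
    needs_approval: set = field(default_factory=set)

def authorize(policy: ToolPolicy, tool: str, approved_by: Optional[str] = None) -> str:
    """Decide whether a tool call may proceed under the given policy."""
    if tool not in policy.allowed:
        return "denied"
    if tool in policy.needs_approval and approved_by is None:
        return "pending_approval"
    return "allowed"

# Hypothetical support agent: read access is free, refunds need a human.
support_policy = ToolPolicy(
    allowed={"read_order", "issue_refund"},
    needs_approval={"issue_refund"},
)
```

Denying by default means a tool the agent was never granted, such as a database delete, is rejected even if a manipulated prompt asks for it.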

8. Document Your Workflows

Write down what each workflow does, why you made specific design choices, and what assumptions you’re making.

Why this matters: Agents are complex systems that other people (or future you) need to understand and maintain. Without documentation, knowledge lives in one person’s head and disappears when they leave.

How to do it: Document the purpose of each workflow, the expected inputs and outputs, what tools it uses and why, edge cases you’re aware of, and known limitations. Keep documentation close to the code or workflow definition.

What success looks like: New team members can understand what an agent does without asking the original builder. When something breaks, anyone can debug it because the intended behavior is documented.

Kanerika: Building Scalable AI Solutions for Smarter Business Decisions

Kanerika builds AI-powered data analytics systems that help businesses turn raw data into useful insights. Using Microsoft tools like Power BI, Azure ML, and Microsoft Fabric, we create dashboards, predictive models, and automated reports that support faster decisions across industries like healthcare, finance, retail, and logistics.

Our AI strategy consulting & delivery services cover predictive analytics, agentic AI, and marketing automation. We help teams forecast trends, understand customer behavior, and automate routine work. We also support cloud migration, hybrid setups, and strong data governance. With ISO 27701 and 27001 certifications, privacy is built into every solution.

Kanerika’s AI agents—DokGPT, Jennifer, Alan, Susan, Karl, and Mike Jarvis—are designed for tasks such as document processing, risk scoring, customer analytics, and voice data analysis. They’re trained on structured data and fit easily into enterprise workflows.

We also offer data engineering and low-code automation. Our systems are modular and scalable, so teams can start small and expand as needed. Whether upgrading legacy tools or building new AI capabilities, Kanerika helps businesses move faster with less friction.

FAQs

How is AgentKit different from ChatGPT?

ChatGPT is a conversational interface for end users. AgentKit is a development platform for building custom AI agents. With AgentKit, you create agents that connect to your own systems, follow your specific workflows, and handle tasks unique to your business. ChatGPT answers questions. AgentKit helps you build systems that take action.

Do I need to know how to code to use AgentKit?

Not for basic workflows. The Agent Builder provides a visual, drag-and-drop interface where you can design agent workflows without writing code. However, for complex logic, custom integrations, or fine-grained control, coding with the Agents SDK gives you more flexibility. AgentKit works for both technical and non-technical users depending on what you’re building.

What systems can AgentKit connect to?

The Connector Registry includes prebuilt integrations for Dropbox, Google Drive, SharePoint, Microsoft Teams, and other common services. You can also build custom connectors for any system with an API. The registry provides a standard interface so custom connectors work the same way as prebuilt ones. If you need to connect to internal databases or proprietary systems, you’ll need to create custom integrations.

How long does it take to build an agent with AgentKit?

Simple agents can be built in hours. Complex multi-agent systems take weeks or months depending on requirements. At OpenAI’s DevDay, an engineer built a workflow and two agents live onstage in under eight minutes to demonstrate the speed. The visual tools and prebuilt components significantly reduce development time compared to building everything from scratch, but production-ready agents still require testing, evaluation, and refinement.

Can I migrate from AgentKit to another platform later?

Partially. Your workflow logic and prompts are portable. But you’ll be tightly coupled to OpenAI’s APIs and infrastructure. If you want to avoid lock-in, use the Agents SDK directly rather than the visual tools, keep your integration logic separate, and design workflows that could run on other orchestration platforms. The more you use AgentKit-specific features, the harder migration becomes.