In 2025, OpenAI demonstrated a powerful multimodal RAG (Retrieval-Augmented Generation) system capable of answering questions using both text and images from large knowledge bases. According to a report by MarketsandMarkets, the multimodal AI market is expected to grow at a staggering CAGR of 35%, reaching $4.5 billion by 2028. Such growth highlights the pressing demand for systems capable of leveraging diverse data types.

Recent IDC findings indicate that as much as 90% of enterprise data is unstructured, made up of images, videos, audio, and text documents. As organizations struggle to make sense of this diverse data landscape, multimodal RAG has emerged as a game-changing solution.

While traditional RAG systems excel at processing text, they fall short when dealing with product images, technical diagrams, video tutorials, or voice recordings. This critical limitation has pushed forward the development of multimodal RAG architectures that can process, understand, and generate insights from multiple data types simultaneously. Let’s explore why Multimodal RAG is the future of AI innovation and how it’s reshaping industries worldwide.

Optimize Resources and Drive Business Growth With AI!

Partner with Kanerika for Expert AI implementation Services

What is Multimodal RAG?

Multimodal RAG (Retrieval Augmented Generation) is an advanced AI system that processes and understands multiple types of data, including text, images, audio, and video, simultaneously. Unlike traditional RAG, which works only with text, multimodal RAG can retrieve relevant information across different formats, enabling more comprehensive and context-aware responses. This technology enhances AI applications by bridging the gap between various forms of digital content and human communication patterns.

Here’s an example scenario to understand multimodal RAG better.

Every day, your customer service team faces a familiar challenge: a customer sends a photo of a malfunctioning product, a voice message describing the issue, and screenshots of error messages from your app. A text-only system would struggle to piece this puzzle together, but this is exactly where multimodal RAG shines: it can interpret the photo, the transcript of the voice message, and the screenshots together, then retrieve the matching product documentation to provide a comprehensive, context-aware response.

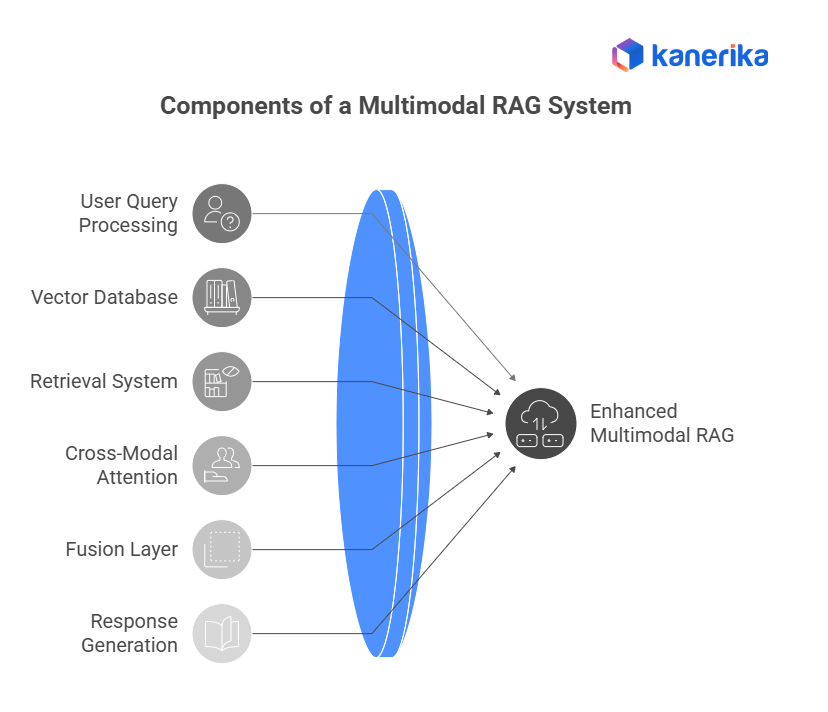

Multimodal RAG Architecture: Key Components and Their Functions

1. User Query Processing & Multimodal Encoders

The process begins with user input, which could be text, images, audio, or video. Specialized encoders then convert each data type into vector embeddings. For instance, BERT may be used for text and CLIP for images, while audio is often transcribed with Whisper and the transcript embedded as text. This step turns every input into vector representations the system can compare; searching directly across modalities additionally requires encoders that share an embedding space, as CLIP does for text and images.

2. Vector Database / Knowledge Store

All multimodal embeddings are stored in a vector database, which acts as the knowledge repository. This database enables efficient similarity search across data types, making it possible to locate the most relevant information for any query.

3. Retrieval System

The retrieval engine searches the vector database to fetch the most relevant items. It applies similarity metrics and often uses hybrid methods: semantic search for meaning and keyword-based search for precision. This ensures the retrieved data is both accurate and contextually appropriate.

4. Cross-Modal Attention Mechanisms

Once retrieved, the system uses cross-modal attention to align and link data across modalities. This allows it to connect related elements (for example, matching a section of descriptive text with a corresponding image region), ensuring coherence between different data types.

5. Fusion Layer (Context Integration)

The retrieved multimodal data is then fused into a unified context. This integration step balances the contribution of each modality and prepares the data for generation, ensuring the response is holistic rather than fragmented.

6. Response Generation & Post-Processing

Finally, a Large Language Model (LLM) generates a coherent, human-like response grounded in the fused multimodal data. Post-processing may refine the output by re-ranking, filtering irrelevant details, or formatting results into text, visuals, or multimodal explanations, depending on the user’s needs.
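As a rough illustration of the hybrid retrieval described under the Retrieval System component, the sketch below blends a semantic score (cosine similarity) with a simple keyword-overlap score. The item IDs, the hand-written three-dimensional vectors, and the `alpha` weight are all assumptions made for the example; a real system would take its vectors from trained encoders and would likely use a stronger keyword scorer such as BM25.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def keyword_score(query, text):
    """Fraction of query terms that appear verbatim in the text."""
    q_terms = query.lower().split()
    t_terms = set(text.lower().split())
    if not q_terms:
        return 0.0
    return sum(1 for t in q_terms if t in t_terms) / len(q_terms)


def hybrid_search(query, query_vec, items, alpha=0.7, top_k=2):
    """Rank items by alpha * semantic score + (1 - alpha) * keyword score."""
    scored = []
    for item in items:
        semantic = cosine(query_vec, item["vector"])
        lexical = keyword_score(query, item["text"])
        scored.append((alpha * semantic + (1 - alpha) * lexical, item["id"]))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


# Toy knowledge store: the text field holds a passage, caption, or transcript.
items = [
    {"id": "manual.pdf#p3", "text": "reset the router using the rear button",
     "vector": [0.9, 0.1, 0.0]},
    {"id": "photo_017.jpg", "text": "router rear panel photo",
     "vector": [0.8, 0.2, 0.1]},
    {"id": "call_42.wav", "text": "customer asks about billing",
     "vector": [0.0, 0.1, 0.9]},
]

# The query vector is hand-written here; normally it comes from the text encoder.
results = hybrid_search("reset router", [1.0, 0.0, 0.0], items)
for score, item_id in results:
    print(f"{item_id}: {score:.3f}")
```

Raising `alpha` favors semantic matches, while lowering it favors exact term matches, which can help with part numbers and error codes.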

Types of Modalities Supported

1. Text Documents

Text documents encompass written content like articles, documentation, emails, and chat logs that form the foundation of traditional RAG systems. The system processes these using advanced language models to understand context, semantics, and relationships within the text. Natural Language Processing (NLP) techniques help extract key information and maintain the original meaning during retrieval.

2. Images and Diagrams

Visual content includes photographs, illustrations, technical diagrams, charts, and infographics that contain important visual information. Vision-language models like CLIP encode these images so that their visual elements can be matched against text, and they are often paired with OCR or layout-aware models to capture text within images and spatial relationships. Together, these let the system identify objects, read text, and interpret relationships within diagrams.

3. Audio Files

Audio content includes voice recordings, meetings, calls, podcasts, and other sound-based data that contain valuable information. Speech-to-text models like Whisper convert audio into text across many languages; because a plain transcript drops cues such as tone and emphasis, those must be captured by separate audio models when they matter. Handling multiple speakers likewise usually relies on an additional diarization step.

4. Video Content

Video files combine visual and audio elements, requiring sophisticated processing to extract meaningful information from both streams. The system analyzes frame sequences, motion, scene changes, and synchronized audio to understand the complete context. Key frame extraction and temporal understanding help manage the complexity of video data.
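One simple way to picture key frame extraction is to keep a frame only when it differs enough from the last frame that was kept. The sketch below does this on tiny made-up "frames" (flat lists of pixel intensities); the threshold value and the mean-absolute-difference measure are assumptions chosen for illustration, and production systems typically use more robust scene-change detectors.

```python
def mean_abs_diff(frame_a, frame_b):
    """Average absolute per-pixel difference between two frames."""
    return sum(abs(x - y) for x, y in zip(frame_a, frame_b)) / len(frame_a)


def select_key_frames(frames, threshold=20.0):
    """Return indices of frames that differ from the previous key frame
    by more than `threshold`. The first frame is always kept."""
    if not frames:
        return []
    keys = [0]
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i], frames[keys[-1]]) > threshold:
            keys.append(i)
    return keys


# Five toy frames of four pixels each: two near-duplicates, a scene change,
# another near-duplicate, then a second scene change.
frames = [
    [10, 10, 10, 10],
    [12, 11, 10, 9],
    [200, 190, 210, 205],
    [198, 192, 209, 204],
    [50, 60, 55, 52],
]

key_frames = select_key_frames(frames)
print(key_frames)
```

Only the selected frames would then be passed to the image encoder, which keeps embedding cost roughly proportional to the number of scenes rather than the number of frames.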

5. Structured Data

Structured data includes databases, spreadsheets, JSON files, and other formally organized information with clear relationships and hierarchies. The system preserves the inherent structure and relationships while converting this data into vector representations. This enables integration with other data types while maintaining the original organizational context.
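A common, simple approach to embedding structured data is to serialize each record into a short text string that keeps the field names, and to store the original keys as metadata alongside it. The sketch below shows that step only; the table name, field names, and the `row_to_text` helper are hypothetical, and the resulting text would still need to be passed to an embedding model.

```python
def row_to_text(table, row):
    """Serialize one record as 'field: value' pairs, skipping empty fields,
    so the embedding model sees the column names as context."""
    parts = [f"{key}: {value}" for key, value in row.items() if value is not None]
    return f"[{table}] " + "; ".join(parts)


rows = [
    {"sku": "A-100", "name": "Router X", "price_usd": 79.0, "discontinued": None},
    {"sku": "A-200", "name": "Router Y", "price_usd": 129.0, "discontinued": "2024-01"},
]

# Each document pairs the text to embed with metadata that points back
# to the source record, preserving the original organizational context.
docs = [
    {"text": row_to_text("products", row),
     "metadata": {"table": "products", "sku": row["sku"]}}
    for row in rows
]

for doc in docs:
    print(doc["text"])
```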

Advanced Features of Multimodal RAG

Cross-Modal Search

1. Image-to-text Search

Image-to-text search allows users to query using images to find relevant textual information in the knowledge base. The system analyzes visual elements and converts them into semantic embeddings that can be matched against text vectors. This enables use cases like finding documentation related to product images or retrieving text descriptions matching visual diagrams.

2. Text-to-image Search

Text-to-image search enables natural language queries to locate relevant images and visual content in the database. The system uses cross-modal embeddings to bridge the semantic gap between textual descriptions and visual features. These capabilities power applications like finding product images based on specifications or locating diagrams matching technical descriptions.

3. Audio-visual Search Capabilities

Audio-visual search combines audio and visual processing to enable complex multimodal queries across multimedia content. Users can search using combinations of speech, sound, and visual elements to find relevant content across video libraries and mixed-media databases. This enables sophisticated use cases like finding video segments based on spoken keywords and visual events.
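The cross-modal searches above all rest on the same idea: items from different modalities live in one shared embedding space, and a query from one modality is compared against items of another. A minimal sketch, assuming hand-written toy vectors in place of real encoder output (the file names and vectors are invented for the example):

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def cross_modal_search(query_vec, index, target_modality, top_k=1):
    """Return the IDs of the top_k items of the target modality,
    ranked by similarity to a query from any modality."""
    candidates = [entry for entry in index if entry["modality"] == target_modality]
    ranked = sorted(candidates,
                    key=lambda entry: cosine(query_vec, entry["vector"]),
                    reverse=True)
    return [entry["id"] for entry in ranked[:top_k]]


# Toy shared embedding space holding both images and text.
index = [
    {"id": "red_sneaker.jpg", "modality": "image", "vector": [0.9, 0.1, 0.1]},
    {"id": "blue_jacket.jpg", "modality": "image", "vector": [0.1, 0.9, 0.2]},
    {"id": "sneaker_care_guide.txt", "modality": "text", "vector": [0.8, 0.2, 0.1]},
    {"id": "jacket_sizing.txt", "modality": "text", "vector": [0.2, 0.8, 0.3]},
]

# Pretend these came from the text encoder and the image encoder respectively.
text_query_vec = [0.85, 0.15, 0.1]   # e.g. the phrase "red running shoes"
image_query_vec = [0.15, 0.9, 0.25]  # e.g. an uploaded photo of a jacket

text_to_image = cross_modal_search(text_query_vec, index, "image")
image_to_text = cross_modal_search(image_query_vec, index, "text")
print(text_to_image, image_to_text)
```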

Context Window Management

1. Handling Multiple Data Types

The system intelligently manages different data types within the context window to maintain coherent relationships between modalities. Priority algorithms determine how to balance text, image, audio, and video information within memory constraints. This ensures that the system maintains appropriate context across different data types without losing critical information.

2. Priority-based Retrieval

Priority-based retrieval uses intelligent algorithms to rank and select the most relevant pieces of information across different modalities. The system weighs factors like relevance scores, data freshness, and information density to optimize retrieval results. This ensures that the most important context is preserved regardless of data type or source.
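One possible way to combine relevance, freshness, and information density into a single priority score is a weighted sum, with freshness decaying exponentially by age. The weights, the 30-day half-life, and the item fields below are assumptions for illustration, not values prescribed by any particular system.

```python
def priority_score(item, now_day, w_rel=0.6, w_fresh=0.25, w_dens=0.15,
                   half_life_days=30.0):
    """Weighted sum of relevance, freshness, and density (each in [0, 1]).
    Freshness halves every `half_life_days` days."""
    age_days = now_day - item["created_day"]
    freshness = 0.5 ** (age_days / half_life_days)
    return (w_rel * item["relevance"]
            + w_fresh * freshness
            + w_dens * item["density"])


# Toy candidates from three modalities; days are counted from an arbitrary origin.
items = [
    {"id": "old_manual", "relevance": 0.90, "created_day": 0, "density": 0.5},
    {"id": "new_screenshot", "relevance": 0.80, "created_day": 295, "density": 0.6},
    {"id": "podcast_clip", "relevance": 0.40, "created_day": 290, "density": 0.2},
]

ranked = sorted(items, key=lambda item: priority_score(item, now_day=300),
                reverse=True)
ranking = [item["id"] for item in ranked]
print(ranking)
```

In this toy data the recent screenshot outranks the slightly more relevant but much older manual, which is the kind of trade-off the weights are meant to control.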

3. Context Window Optimization

Context window optimization involves dynamically adjusting how different types of information are stored and processed in the system’s working memory. The system uses techniques like sliding windows, chunking, and compression to maximize the effective use of the context window. This enables handling of longer sequences and complex multimodal interactions while maintaining performance.
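Two of the techniques named above, sliding windows and fitting content into a fixed budget, can be sketched in a few lines. This example is a simplification under stated assumptions: "tokens" are approximated by whitespace-separated words, the scores and token counts are invented, and the greedy packing strategy is just one reasonable choice.

```python
def sliding_chunks(words, size, overlap):
    """Split a word list into windows of `size` words that overlap by
    `overlap` words, so context is not cut off at chunk boundaries."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("require size > 0 and 0 <= overlap < size")
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(words[start:start + size])
        if start + size >= len(words):
            break
    return chunks


def pack_context(candidates, budget):
    """Greedily add the highest-scoring candidates that still fit in the
    token budget. Returns the chosen IDs and the tokens used."""
    chosen, used = [], 0
    for cand in sorted(candidates, key=lambda c: c["score"], reverse=True):
        if used + cand["tokens"] <= budget:
            chosen.append(cand["id"])
            used += cand["tokens"]
    return chosen, used


chunks = sliding_chunks("a b c d e f g".split(), size=4, overlap=2)
print(chunks)

candidates = [
    {"id": "text_passage", "score": 0.9, "tokens": 300},
    {"id": "image_caption", "score": 0.8, "tokens": 500},
    {"id": "audio_transcript", "score": 0.7, "tokens": 400},
    {"id": "table_summary", "score": 0.6, "tokens": 150},
]
chosen, used = pack_context(candidates, budget=1000)
print(chosen, used)
```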

Benefits of Multimodal RAG

Multimodal RAG brings together the strengths of text, images, audio, and video to deliver deeper, more reliable insights than traditional RAG systems. By integrating multiple data sources, it provides a richer understanding of context and improves the quality of responses. Key benefits include:

1. Deeper Context Understanding

Instead of relying solely on text, multimodal RAG combines visual, auditory, and textual inputs. This allows AI to capture nuances and deliver answers that reflect the full context of a query.

Example: In healthcare, a system can analyze a patient’s MRI scan alongside lab reports and doctors’ notes, offering more precise diagnostic support.

2. Smarter Decision-Making

With access to multiple forms of evidence, such as documents, charts, and images, multimodal RAG produces more accurate and well-rounded outputs that support faster and more confident business decisions.

Example: A customer support system can understand a user’s spoken issue along with screenshots of an error, providing faster, tailored resolutions.

3. Improved Accuracy and Relevance

By combining modalities, the system cross-validates information. This reduces the risk of incomplete or misleading results, ensuring that responses are both precise and relevant.

Example: An e-commerce chatbot can check both product descriptions and uploaded customer photos to give more accurate product matches.

4. Enhanced User Experience

Multimodal RAG enhances interactions by accommodating various input types, including voice queries with image attachments. This leads to more intuitive, user-friendly AI applications.

5. Cross-Industry Versatility

From healthcare (medical reports and scans) to finance (reports and graphs) and retail (product descriptions and images), multimodal RAG adapts to diverse domains and delivers value across various industries.

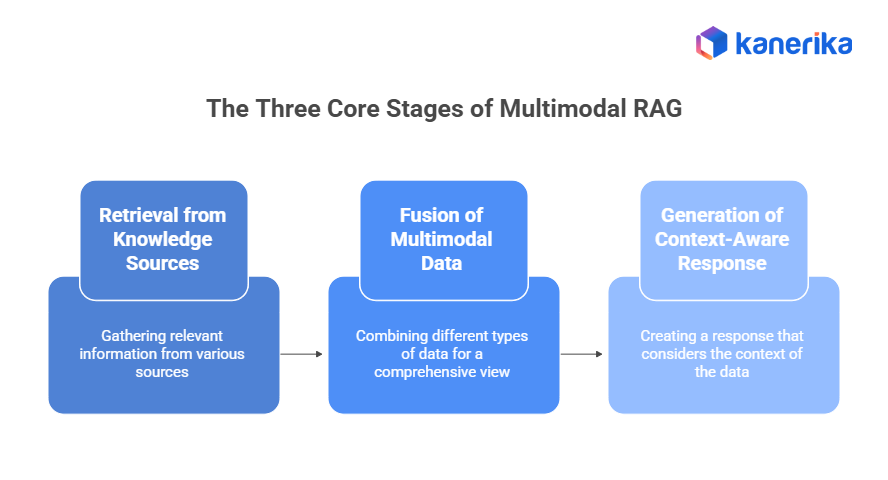

The Three Core Stages of Multimodal RAG

Multimodal RAG builds on the concept of Retrieval-Augmented Generation by enabling AI systems to use more than just text. Instead of relying solely on language data, it can process and combine text, images, audio, and video. This enables AI to comprehend queries in a richer context and produce more accurate, fact-based responses. The process can be explained in three key stages:

1. Retrieval from Knowledge Sources

- The system begins by converting user queries and multimodal data into vector embeddings.

- These embeddings are stored and organized in a vector database, allowing the AI to quickly find relevant information across text, visuals, or speech.

- For example, if the query relates to healthcare, it may pull lab reports, diagnostic notes, and medical scans from the knowledge base.

2. Fusion of Multimodal Data

- Once relevant items are retrieved, the AI fuses them into a unified context.

- This involves aligning information from different modalities so they complement one another.

- Specialized models help connect the dots—ensuring that a chart, an image, or a transcript is interpreted together with related text.

- The outcome is a coherent, enriched dataset that captures the full meaning of the query.

3. Generation of Context-Aware Response

- The large language model (LLM) takes the fused multimodal data and uses it to generate a final answer.

- Because the response is grounded in retrieved evidence, it is more accurate and relevant than text-only outputs.

- The system can present the answer in multiple formats—text summaries, visual explanations, or even multimodal outputs, depending on the need.

In short, Multimodal RAG works by retrieving information across different formats, fusing it into a single context, and generating responses that are precise, well-supported, and practical for real-world use.
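The three stages can be sketched as a small pipeline in which each stage is a pluggable function. Everything below is a stand-in: the knowledge items, the word-matching retriever, and the `generate_stub` function (which only formats a string instead of calling an LLM) are hypothetical and exist only to show how the stages hand data to one another.

```python
from typing import Callable, Dict, List

Item = Dict[str, str]


def run_pipeline(query: str,
                 retrieve: Callable[[str], List[Item]],
                 fuse: Callable[[List[Item]], str],
                 generate: Callable[[str, str], str]) -> str:
    """Stage 1: retrieve items. Stage 2: fuse them into one context.
    Stage 3: generate a response grounded in that context."""
    items = retrieve(query)
    context = fuse(items)
    return generate(query, context)


# Toy knowledge base: non-text items are represented by captions/transcripts.
KNOWLEDGE: List[Item] = [
    {"modality": "text", "content": "Error E42 means the filter is clogged."},
    {"modality": "image", "content": "Screenshot caption: display shows E42 warning icon."},
    {"modality": "audio", "content": "Transcript: customer reports a rattling noise."},
]


def retrieve_stub(query: str) -> List[Item]:
    """Stand-in retriever: keep items sharing any word with the query."""
    words = query.lower().split()
    return [item for item in KNOWLEDGE
            if any(word in item["content"].lower() for word in words)]


def fuse_by_modality(items: List[Item]) -> str:
    """Stand-in fusion: label each item with its modality and join them."""
    ordered = sorted(items, key=lambda item: item["modality"])
    return "\n".join(f"[{item['modality']}] {item['content']}" for item in ordered)


def generate_stub(query: str, context: str) -> str:
    """Stand-in generator: a real system would send this to an LLM."""
    return f"Question: {query}\nEvidence:\n{context}"


answer = run_pipeline("error E42", retrieve_stub, fuse_by_modality, generate_stub)
print(answer)
```

Keeping the stages as separate callables makes it straightforward to swap a stub for a real vector search or model call without changing the orchestration.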

Key Technologies

1. CLIP and Vision-Language Models

CLIP (Contrastive Language-Image Pre-training) enables powerful capabilities for understanding image-text relationships. The model learns joint representations of images and text through a contrastive learning approach. This allows sophisticated cross-modal search and understanding.

- Zero-shot image classification

- Cross-modal similarity matching

- Visual semantic understanding
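Zero-shot classification with a CLIP-style model works by embedding the image and a set of candidate label prompts, then applying a softmax over their scaled cosine similarities. The sketch below shows only that scoring step on hand-written toy vectors; the vectors, label prompts, and the `scale=100.0` value (an assumed stand-in for the model's learned temperature) are illustrative, and no real model is loaded.

```python
import math


def normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def softmax(logits):
    """Numerically stable softmax."""
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


def zero_shot_classify(image_vec, label_vecs, scale=100.0):
    """Return a probability per label from scaled cosine similarities
    between one image embedding and each label-prompt embedding."""
    image = normalize(image_vec)
    labels = list(label_vecs)
    logits = [
        scale * sum(a * b for a, b in zip(image, normalize(label_vecs[label])))
        for label in labels
    ]
    return dict(zip(labels, softmax(logits)))


# Toy embeddings; a real system would obtain these from the image and text encoders.
label_vecs = {
    "a photo of a cat": [0.9, 0.2, 0.1],
    "a photo of a dog": [0.1, 0.95, 0.05],
    "a photo of a car": [0.1, 0.1, 0.95],
}
probs = zero_shot_classify([0.2, 0.9, 0.1], label_vecs)
best_label = max(probs, key=probs.get)
print(best_label)
```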

2. Whisper and Audio Processing

Whisper handles speech recognition with high accuracy across many languages and is fairly robust to background noise. It converts audio into text, optionally with timestamps; acoustic features such as tone, and speaker labels, are not part of the transcript and need companion models when required.

- Multilingual speech recognition

- Speaker diarization (via a separate diarization model)

- Acoustic feature extraction (via separate audio models)

3. Vector Databases

Specialized databases optimize storage and retrieval of high-dimensional vectors. These systems employ sophisticated indexing structures for efficient similarity search. Real-time updates and scaling capabilities support production deployments.

- Efficient similarity search

- Horizontal scaling

- Transactional (ACID) guarantees where the underlying engine provides them, which varies by product

4. Large Language Models (LLMs)

LLMs serve as the orchestration layer, coordinating between different modalities. They interpret queries, combine information, and generate coherent responses. Advanced models, such as GPT-4, enable sophisticated reasoning across multiple data types.

- Cross-modal reasoning

- Response generation

- Context management

Multimodal RAG: Implementation Guide

1. Choosing the Right Embedding Models

Embedding models translate different data types (such as text, images, and audio) into numerical formats that machines can process. Selecting the appropriate models ensures accurate representation and compatibility across modalities. Key considerations include:

- Text embeddings: Use models like BERT or OpenAI’s embeddings for contextual text understanding.

- Image embeddings: Leverage models like CLIP or Vision Transformers for image representation.

- Multimodal embeddings: Opt for unified models, such as FLAVA, for seamless data integration.
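Whichever models are chosen, it can help to hide them behind one entry point that dispatches on modality, so models can be swapped without touching the rest of the pipeline. The sketch below assumes a simple registry pattern; the `register` decorator, the `embed` function, and both placeholder encoders are hypothetical and return meaningless numbers where a real embedding model would be called.

```python
from typing import Callable, Dict, List

Encoder = Callable[[bytes], List[float]]
ENCODERS: Dict[str, Encoder] = {}


def register(modality: str) -> Callable[[Encoder], Encoder]:
    """Decorator that records an encoder under a modality name."""
    def wrap(fn: Encoder) -> Encoder:
        ENCODERS[modality] = fn
        return fn
    return wrap


@register("text")
def encode_text(payload: bytes) -> List[float]:
    # Placeholder: a real system would call a text embedding model here.
    text = payload.decode("utf-8")
    return [float(len(text)), float(text.count(" "))]


@register("image")
def encode_image(payload: bytes) -> List[float]:
    # Placeholder: a real system would call an image encoder here.
    return [float(len(payload)), float(sum(payload) % 256)]


def embed(modality: str, payload: bytes) -> List[float]:
    """Look up the encoder for a modality and apply it."""
    try:
        encoder = ENCODERS[modality]
    except KeyError:
        raise ValueError(f"no encoder registered for modality {modality!r}") from None
    return encoder(payload)


text_vec = embed("text", b"hello world")
print(text_vec)
```

Note that separately chosen text and image models generally produce vectors in different spaces; the registry only organizes the encoders and does not make their outputs comparable.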

2. Vector Database Selection

A vector database stores embeddings and retrieves the most relevant data during queries. Picking the right database affects performance and scalability. Consider the following:

- Performance: Evaluate options like Pinecone or Weaviate for fast similarity searches.

- Scalability: Ensure the database supports large datasets as your needs grow.

- Integration: Choose databases with API support for smooth LLM integration.
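When evaluating databases, a small in-memory stand-in with the same two operations (write vectors, query by similarity with a metadata filter) can be useful for prototyping and tests. The class below is such a stand-in under stated assumptions: its name, method names, and brute-force search are invented for this sketch and do not mirror the API of Pinecone, Weaviate, or any other product.

```python
import math
from typing import Dict, List, Optional, Tuple


class InMemoryVectorStore:
    """Brute-force cosine-similarity store for prototyping only."""

    def __init__(self, dim: int) -> None:
        self.dim = dim
        self._rows: Dict[str, Tuple[List[float], Dict[str, str]]] = {}

    def _unit(self, vector: List[float]) -> List[float]:
        if len(vector) != self.dim:
            raise ValueError(f"expected {self.dim} dimensions, got {len(vector)}")
        norm = math.sqrt(sum(x * x for x in vector))
        if norm == 0:
            raise ValueError("zero vector cannot be normalized")
        return [x / norm for x in vector]

    def upsert(self, item_id: str, vector: List[float],
               metadata: Dict[str, str]) -> None:
        """Insert or overwrite an item, storing a unit-length vector."""
        self._rows[item_id] = (self._unit(vector), dict(metadata))

    def query(self, vector: List[float], top_k: int = 3,
              where: Optional[Dict[str, str]] = None) -> List[Tuple[str, float]]:
        """Return (id, score) pairs, optionally filtered by exact metadata match."""
        q = self._unit(vector)
        hits = []
        for item_id, (vec, meta) in self._rows.items():
            if where and any(meta.get(k) != v for k, v in where.items()):
                continue
            hits.append((sum(a * b for a, b in zip(q, vec)), item_id))
        hits.sort(reverse=True)
        return [(item_id, score) for score, item_id in hits[:top_k]]


store = InMemoryVectorStore(dim=2)
store.upsert("doc1", [1.0, 0.0], {"modality": "text"})
store.upsert("img1", [0.9, 0.1], {"modality": "image"})
store.upsert("doc2", [0.0, 1.0], {"modality": "text"})

all_hits = store.query([1.0, 0.0], top_k=2)
text_hits = store.query([1.0, 0.0], top_k=2, where={"modality": "text"})
print(all_hits, text_hits)
```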

3. LLM Integration

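Large Language Models (LLMs) generate responses by synthesizing the content that retrieval returns (text passages, images or their captions, transcripts) rather than the raw embeddings, which serve only to find that content. Integrating the right LLM ensures coherent and accurate outputs across modalities. Steps include: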

- LLM choice: Select models like GPT-4 or BLOOM, depending on your use case.

- Customization: Fine-tune the LLM with multimodal data to improve response quality.

- Latency management: Optimize pipelines to minimize response delays.

4. API Design

APIs connect your Multimodal RAG system with external applications, enabling smooth interaction. A well-designed API ensures ease of use and system efficiency. Focus on:

- Endpoints: Define endpoints for querying, uploading, and retrieving multimodal data.

- Error handling: Build robust mechanisms for incomplete or invalid input scenarios.

- Scalability: Design APIs to handle increasing query loads without performance drops.
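For the error-handling point, a query endpoint might validate its payload before any retrieval work begins. The sketch below is framework-agnostic and rests on assumptions: the payload shape (`query` plus a list of `attachments` with `modality` and `uri`), the allowed modality names, and the use of HTTP-style status codes 200 and 400 are all choices made for this example.

```python
from typing import Any, Dict, List, Tuple

ALLOWED_MODALITIES = {"text", "image", "audio", "video"}


def validate_query(payload: Dict[str, Any]) -> Tuple[int, Dict[str, Any]]:
    """Return (status, body). 200 carries the cleaned request;
    400 carries a list of human-readable validation errors."""
    errors: List[str] = []
    query = payload.get("query")
    attachments = payload.get("attachments", [])

    if not isinstance(attachments, list):
        errors.append("'attachments' must be a list")
        attachments = []

    has_query = isinstance(query, str) and bool(query.strip())
    if not has_query and not attachments:
        errors.append("provide a non-empty 'query' or at least one attachment")

    for i, att in enumerate(attachments):
        if not isinstance(att, dict):
            errors.append(f"attachments[{i}] must be an object")
            continue
        if att.get("modality") not in ALLOWED_MODALITIES:
            errors.append(
                f"attachments[{i}].modality must be one of {sorted(ALLOWED_MODALITIES)}"
            )
        if not att.get("uri"):
            errors.append(f"attachments[{i}].uri is required")

    if errors:
        return 400, {"errors": errors}
    clean_query = query.strip() if isinstance(query, str) else ""
    return 200, {"query": clean_query, "attachments": attachments}


ok_status, ok_body = validate_query({
    "query": "  why is the light blinking?  ",
    "attachments": [{"modality": "image", "uri": "uploads/photo.jpg"}],
})
bad_status, bad_body = validate_query({"attachments": [{"modality": "hologram"}]})
print(ok_status, bad_status, bad_body["errors"])
```

Returning every validation error at once, rather than failing on the first, tends to make the API easier for client developers to work with.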

Business Use Cases of Multimodal RAG

1. E-commerce Product Discovery

Multimodal RAG revolutionizes online shopping by enabling sophisticated product search and recommendations. Customers can search using images of products they like, combined with text descriptions of desired modifications. The system processes product photos, descriptions, customer reviews, and technical specifications to provide comprehensive results. This leads to improved conversion rates and customer satisfaction by bridging the visual-textual gap in product discovery.

- Visual similarity search

- Product attribute extraction

- Cross-category recommendations

- Style and design matching

2. Technical Documentation and Support

Engineering and IT teams can leverage multimodal RAG to streamline access to technical documentation. The system processes technical diagrams, code snippets, video tutorials, and written documentation simultaneously. Support teams can quickly find relevant solutions by combining error screenshots, log files, and problem descriptions, significantly reducing resolution time.

- Troubleshooting guides

- Equipment maintenance manuals

- API documentation

- System architecture documents

3. Healthcare Information Management

Healthcare providers can use multimodal RAG to process and retrieve information from medical records, imaging data, and clinical notes. The system can analyze medical images (X-rays, MRIs), patient records, doctors’ notes, and lab results together to provide comprehensive patient information. This enables faster diagnosis, better treatment planning, and improved patient care.

- Integrated patient records analysis

- Medical image interpretation

- Clinical decision support

- Research and case study retrieval

4. Legal Document Analysis

Law firms and legal departments can process various types of legal documents, including contracts, court recordings, evidence photos, and video depositions. Multimodal RAG helps analyze complex cases by connecting information across different types of evidence and documentation. This leads to more thorough case preparation and efficient legal research.

- Contract analysis

- Evidence correlation

- Case law research

- Compliance documentation

5. Customer Service Enhancement

Contact centers can leverage multimodal RAG to improve customer support by processing customer queries across multiple channels. The system can handle customer photos, voice recordings, chat transcripts, and product documentation simultaneously. This enables more accurate and faster problem resolution while maintaining context across customer interactions.

- Omnichannel support

- Visual problem diagnosis

- Voice and text integration

- Automated response suggestion

6. Market Research and Competitive Analysis

Marketing teams can analyze competitor products, marketing materials, and customer feedback across various media types. The system processes social media posts, product images, video advertisements, and customer reviews to provide comprehensive market insights. This enables better strategic planning and product positioning.

- Brand sentiment analysis

- Product comparison

- Marketing campaign analysis

- Trend identification

7. Educational Content Management

Educational institutions and corporate training departments can organize and retrieve learning materials across different formats. The system processes lecture videos, presentation slides, textbook content, and interactive materials to provide comprehensive learning resources. This enables personalized learning experiences and efficient knowledge management.

- Course material organization

- Learning path creation

- Content recommendation

- Assessment support

How Kanerika Uses RAG to Make LLMs Smarter and More Reliable

Kanerika, a globally recognized tech services provider, utilizes Retrieval-Augmented Generation (RAG) to enhance the accuracy and reliability of large language models (LLMs). RAG connects generative AI with real-time data sources, helping reduce hallucinations and improve context-aware responses. We go beyond basic RAG by offering advanced techniques, such as multimodal RAG and Agentic RAG, which enable AI agents to retrieve and act on data autonomously. These capabilities are also part of our data governance tools—KANGovern, KANGuard, and KANComply.

With experience across various industries, including BFSI, healthcare, logistics, and telecom, we build AI systems that solve real business problems. Our LLM-powered models support tasks like demand forecasting, vendor evaluation, and cost optimization. By combining generative AI with structured and unstructured data, we enable businesses to automate repetitive tasks and make more informed decisions. These systems are designed to handle complex, context-rich workflows with high accuracy.

Our solutions are built for scalability and reliability. Whether it’s optimizing supply chains, improving strategic planning, or reducing operational costs, our AI tools deliver measurable impact. As a featured Microsoft Fabric partner, we support enterprises in adopting AI that’s practical, efficient, and ready for real-world challenges.

Frequently Asked Questions

What is Multimodal RAG and how is it different from traditional RAG?

Multimodal RAG extends traditional RAG by using not only text but also images, audio, and video as inputs. It retrieves and fuses knowledge from multiple data types to generate richer, more accurate responses.

What is an example of a multimodal RAG?

An example is a healthcare assistant combining patient records, diagnostic images (X-rays, MRIs), and lab reports to suggest potential diagnoses. By integrating multimodal data, the system enhances accuracy and supports medical professionals in making informed decisions.

How does a RAG work?

A Retrieval-Augmented Generation (RAG) system retrieves relevant information from a knowledge base using embeddings and passes it to a large language model as context. The LLM uses this retrieved data to generate detailed and accurate responses, ensuring outputs are grounded in up-to-date and domain-specific knowledge.

How to create a RAG model?

To create a RAG model:

- Select an embedding model for encoding queries and data.

- Set up a vector database to store and retrieve embeddings.

- Integrate a large language model (LLM) like GPT.

- Design APIs for seamless querying and response generation.

Why is RAG used?

RAG is used to generate accurate, context-rich responses by combining generative AI with real-time retrieval from external knowledge bases. It overcomes limitations of static models, providing updated and relevant information, making it ideal for dynamic domains like customer support, research, and decision-making.

What is the RAG framework?

The RAG framework combines a retrieval mechanism with a generative language model. It retrieves the most relevant data from a vector database based on user queries and integrates this data into the generative process, ensuring responses are accurate, grounded, and contextually appropriate.

What is the benefit of RAG?

The key benefit of RAG is its ability to deliver accurate and contextually relevant outputs by grounding generative responses in real-world data. It enhances reliability, ensures up-to-date information, and makes AI applications more effective across industries like healthcare, education, and customer service.