Companies like Uber handle millions of trips and user interactions every day, relying heavily on optimized data pipelines to provide real-time ride data and recommendations. A slow or inefficient data pipeline could disrupt their entire service, leading to customer dissatisfaction and lost revenue. While many enterprises struggle with data bottlenecks, slow insights, and escalating costs, companies that master data pipeline optimization gain a decisive competitive edge.

What separates organizations drowning in data from those turning it into strategic value? The answer lies not in more tools but in smarter pipelines. As data volumes grow exponentially, the efficiency of your data infrastructure becomes the hidden factor determining whether your analytics deliver timely insights or outdated noise.

This guide unpacks the essential practices reshaping data pipeline optimization in 2025, revealing how modern enterprises can transform data infrastructure from a cost center into their most valuable competitive advantage.

What is Data Pipeline Optimization?

Data pipeline optimization is the process of refining data pipelines, the systems that move data from its sources to its destinations, so that they operate efficiently, accurately, and at scale. By streamlining how data is collected, processed, and analyzed, businesses can turn raw data into actionable insights faster, driving smarter decisions and more effective outcomes.

Data Pipelines: An Overview

1. Components and Architecture

A data pipeline is a crucial system that automates the collection, organization, movement, transformation, and processing of data from a source to a destination. The primary goal of a data pipeline is to ensure data arrives in a usable state that enables a data-driven culture within your organization. A standard data pipeline consists of the following components:

- Data Source: The origin of the data, which can be structured, semi-structured, or unstructured data

- Data Integration: The process of ingesting and combining data from various sources

- Data Transformation: Converting data into a common format for improved compatibility and ease of analysis

- Data Processing: Handling the data based on specific computations, rules, or business logic

- Data Storage: A place to store the results, typically in a database, data lake, or data warehouse

- Data Presentation: Providing the processed data to end-users through reports, visualizations, or other means

The architecture of a data pipeline varies depending on specific requirements and the technologies utilized. However, the core principles remain the same, ensuring seamless data flow and maintaining data integrity and consistency.

2. Types of Data Handled

Data pipelines handle various types of data, which can be classified into three main categories:

- Structured Data: Data that is organized in a specific format, such as tables or spreadsheets, making it easier to understand and process. Examples include data stored in relational databases (RDBMS) and CSV files

- Semi-structured Data: Data that has some structure but may lack strict organization or formatting. Examples include JSON, XML, and YAML files

- Unstructured Data: Data without any specific organization or format, such as text documents, images, videos, or social media interactions

These different data formats require custom processing and transformation methods to ensure compatibility and usability within the pipeline. By understanding the various components, architecture, and data types handled within a data pipeline, you can more effectively optimize and scale your data processing efforts to meet the needs of your organization.

Identifying Data Pipeline Inefficiencies

1. Performance Bottlenecks

CPU and memory constraints: Insufficient computing power or RAM limits pipeline throughput, causing processing queues and delays when handling complex transformations or large datasets.

I/O limitations: Slow disk operations create bottlenecks as data moves between storage and processing layers, particularly with high-volume batch processes or frequent small reads/writes.

Network transfer issues: Bandwidth constraints and latency problems slow data movement between distributed systems, especially in cross-region or multi-cloud architectures.

Poor query performance: Inefficient SQL queries or unoptimized NoSQL operations drain resources and create processing delays, often due to missing indexes or suboptimal join operations.

2. Cost Inefficiencies

Overprovisioned resources: Allocating excessive computing capacity “just in case” leads to significant waste, commonly seen when static resource allocation doesn’t match actual workload patterns.

Underutilized compute power: Purchased computing resources sit idle during low-demand periods, particularly problematic with fixed-capacity clusters that can’t scale down automatically.

Redundant data processing: Multiple teams unknowingly reprocess the same data multiple times, duplicating effort and creating unnecessary copies that waste storage and compute resources.

Storage inefficiencies: Improper data formats, compression settings, and retention policies bloat storage costs for data that delivers minimal or no business value.

3. Reliability Issues

Single points of failure: Critical pipeline components without redundancy create vulnerability to outages, commonly seen in master-node dependencies or singleton service architectures.

Error handling weaknesses: Poor exception management leads to silent failures or pipeline crashes, causing data loss or quality issues when unexpected input formats appear.

Monitoring blind spots: Insufficient observability into pipeline operations prevents early problem detection, leaving teams reacting to failures rather than preventing them.

Data quality problems: Lack of validation at pipeline entry points allows corrupted or non-conforming data to pollute downstream systems, creating cascading reliability issues.

Best Data Pipeline Optimization Strategies

1. Optimize Resource Allocation

Resource allocation optimization ensures you’re using exactly what you need, when you need it. By right-sizing compute resources and implementing auto-scaling, organizations can significantly reduce costs while maintaining performance. This approach aligns computing power with actual workload demands rather than peak requirements.

- Implement auto-scaling based on workload patterns

- Use spot/preemptible instances for non-critical workloads

- Right-size resources based on historical usage patterns
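Here is a minimal Python sketch of right-sizing. It assumes you have historical CPU usage samples, in cores, for a job. The idea is to provision for the 95th percentile of observed usage plus 20% headroom, rather than for the absolute peak. The percentile, the headroom, the sample data, and the function names are illustrative assumptions, not tuned recommendations.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct between 0 and 100) of a non-empty sample list."""
    if not samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))  # 1-based nearest rank
    return ordered[rank - 1]


def recommend_cores(usage_cores: list[float], pct: float = 95.0, headroom: float = 0.2) -> int:
    """Size for the pct-th percentile of observed usage plus a safety margin."""
    target = percentile(usage_cores, pct) * (1 + headroom)
    return max(1, math.ceil(target))


# Hypothetical hourly CPU usage (in cores) for a job provisioned with 16 cores.
history = [2.1, 3.4, 2.8, 5.9, 4.2, 3.3, 6.5, 2.9, 3.1, 4.8]
print(recommend_cores(history))  # 8
```

For this sample history, the sketch recommends 8 cores for a job currently given 16. Short spikes above the chosen percentile are exactly what auto-scaling is meant to absorb.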

2. Improve Data Processing Efficiency

Efficient data processing minimizes the work required to transform raw data into valuable insights. By implementing incremental processing and optimizing data formats, organizations can dramatically reduce processing time and resource consumption while maintaining or improving output quality.

- Convert to columnar formats (Parquet, ORC) for analytical workloads

- Implement data partitioning strategies for faster query performance

- Use appropriate compression algorithms based on access patterns
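Incremental processing, mentioned above, usually means handling only the rows that changed since the last successful run. The sketch below assumes two things for illustration: each source row carries an `updated_at` timestamp, and the pipeline stores a high-watermark between runs.

```python
from datetime import datetime


def incremental_batch(rows: list[dict], watermark: datetime) -> tuple[list[dict], datetime]:
    """Select rows changed after the watermark and return the advanced watermark."""
    new_rows = [r for r in rows if r["updated_at"] > watermark]
    # Advance only if new rows arrived; otherwise keep the previous watermark.
    next_watermark = max((r["updated_at"] for r in new_rows), default=watermark)
    return new_rows, next_watermark


source = [
    {"id": 1, "updated_at": datetime(2025, 1, 1, 9, 0)},
    {"id": 2, "updated_at": datetime(2025, 1, 1, 10, 30)},
    {"id": 3, "updated_at": datetime(2025, 1, 1, 11, 15)},
]

# Previous successful run stopped at 10:00, so only rows 2 and 3 are processed.
first_batch, watermark = incremental_batch(source, datetime(2025, 1, 1, 10, 0))
print([r["id"] for r in first_batch])  # [2, 3]

# The next run starts from the stored watermark and finds nothing new.
second_batch, watermark = incremental_batch(source, watermark)
print(second_batch, watermark)  # [] 2025-01-01 11:15:00
```

In practice, persist the watermark only after the batch is committed downstream. A strict `>` comparison can miss late-arriving rows stamped with an older time, so many pipelines re-read a small overlap window and deduplicate.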

3. Enhance Pipeline Architecture

Architectural improvements focus on the structural design of your data pipelines for better scalability and maintainability. Modern pipeline architectures leverage parallelization and modular components to process data more efficiently and adapt to changing requirements with minimal disruption.

- Break monolithic pipelines into modular, reusable components

- Implement parallel processing where dependencies allow

- Select appropriate processing frameworks for specific workload types
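Running independent stages in parallel where dependencies allow amounts to walking a dependency graph. The sketch below does this with Python's standard-library `graphlib.TopologicalSorter` and a thread pool. The stage names and the sleep-based workloads are hypothetical stand-ins for real extract and transform steps.

```python
import time
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter


def run_dag(tasks: dict, deps: dict, max_workers: int = 4) -> list[str]:
    """Run callables in dependency order, executing independent tasks in parallel.

    tasks: stage name -> zero-argument callable
    deps:  stage name -> set of stage names that must finish first
    Returns stage names in the order they completed.
    """
    sorter = TopologicalSorter({name: deps.get(name, set()) for name in tasks})
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a cycle
    completed: list[str] = []
    running = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while sorter.is_active():
            # Submit every stage whose dependencies have all finished.
            for name in sorter.get_ready():
                running[pool.submit(tasks[name])] = name
            finished, _ = wait(running, return_when=FIRST_COMPLETED)
            for future in finished:
                name = running.pop(future)
                future.result()  # re-raise any exception from the stage
                sorter.done(name)
                completed.append(name)
    return completed


def stage(seconds: float):
    """Hypothetical stage: sleeps to simulate I/O-bound work."""
    return lambda: time.sleep(seconds)


tasks = {
    "extract_orders": stage(0.05),
    "extract_customers": stage(0.05),
    "clean_orders": stage(0.01),
    "join_orders_customers": stage(0.01),
}
deps = {
    "clean_orders": {"extract_orders"},
    "join_orders_customers": {"clean_orders", "extract_customers"},
}
order = run_dag(tasks, deps)
print(order)  # the two extracts run concurrently; the join always finishes last
```

Threads suit I/O-bound stages such as API calls or database queries. CPU-heavy transformations are better served by processes or a distributed engine such as Spark.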

4. Streamline Data Workflows

Streamlining workflows eliminates unnecessary steps and optimizes the path data takes through your systems. By reducing transformation complexity and optimizing job scheduling, organizations can minimize processing time while maintaining data quality and integrity.

- Eliminate redundant transformations and unnecessary data movement

- Implement checkpoints for efficient failure recovery

- Optimize job scheduling based on dependencies and resource availability
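Checkpointing lets a rerun resume from the first unfinished stage instead of starting over. The minimal sketch below assumes that stages run sequentially on one machine. It also assumes that a local JSON file (a hypothetical `checkpoint.json`) is an acceptable checkpoint store.

```python
import json
import tempfile
from pathlib import Path


def run_with_checkpoints(stages, checkpoint_file: Path) -> list[str]:
    """Run (name, callable) stages in order, skipping stages already checkpointed.

    After each stage succeeds, the set of completed stage names is saved, so a
    rerun after a failure resumes at the first unfinished stage.
    """
    done = set(json.loads(checkpoint_file.read_text())) if checkpoint_file.exists() else set()
    executed = []
    for name, fn in stages:
        if name in done:
            continue  # finished in an earlier run
        fn()
        done.add(name)
        # Write to a temp file, then rename, so a crash never leaves a half-written checkpoint.
        tmp = checkpoint_file.with_suffix(".tmp")
        tmp.write_text(json.dumps(sorted(done)))
        tmp.replace(checkpoint_file)
        executed.append(name)
    return executed


# Simulate a load step that fails once (e.g., the warehouse is briefly unavailable).
attempts = {"load": 0}


def flaky_load():
    attempts["load"] += 1
    if attempts["load"] == 1:
        raise RuntimeError("warehouse unavailable")


stages = [("extract", lambda: None), ("transform", lambda: None), ("load", flaky_load)]
checkpoint = Path(tempfile.mkdtemp()) / "checkpoint.json"

try:
    run_with_checkpoints(stages, checkpoint)
except RuntimeError as exc:
    print("first run failed:", exc)

resumed = run_with_checkpoints(stages, checkpoint)
print("rerun executed:", resumed)  # only "load" runs again
```

If the process crashes after a stage finishes but before the checkpoint write, that stage runs again, so stages should be idempotent. Orchestrators such as Airflow track task state and retries, and engines such as Flink provide their own checkpointing.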

5. Implement Caching Strategies

Strategic caching reduces redundant processing by storing frequently accessed or expensive-to-compute results. Properly implemented caching layers can dramatically improve response times and reduce computational load, especially for read-heavy analytical workloads.

- Cache frequently accessed or computation-heavy results

- Implement appropriate invalidation strategies to maintain freshness

- Use distributed caching for scalability in high-volume environments
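Below is a minimal in-process sketch of caching with both time-based expiry and explicit invalidation. The `TTLCache` class and the 60-second TTL are assumptions for illustration. So is the injectable clock, which is used here only to make the example deterministic.

```python
import time
from collections.abc import Callable, Hashable
from typing import Any


class TTLCache:
    """Small in-process cache: entries expire after `ttl` seconds or on invalidation."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock  # injectable so tests can control time
        self._store: dict = {}  # key -> (stored_at, value)

    def get_or_compute(self, key: Hashable, compute: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1]  # fresh hit: skip the expensive computation
        value = compute()  # miss or stale entry: recompute and store
        self._store[key] = (now, value)
        return value

    def invalidate(self, key: Hashable) -> None:
        """Drop one entry, e.g. when the upstream table it depends on is reloaded."""
        self._store.pop(key, None)


fake_now = [0.0]
cache = TTLCache(ttl=60, clock=lambda: fake_now[0])
calls = []


def daily_revenue() -> float:
    calls.append(1)  # count how often the expensive query actually runs
    return 1234.5


key = "revenue:2025-01-01"
cache.get_or_compute(key, daily_revenue)  # miss: computes
cache.get_or_compute(key, daily_revenue)  # hit: served from cache
fake_now[0] = 61.0
cache.get_or_compute(key, daily_revenue)  # expired after 60 s: recomputes
cache.invalidate(key)
cache.get_or_compute(key, daily_revenue)  # invalidated: recomputes
print(len(calls))  # 3
```

This cache lives in a single process. For the distributed case in the last bullet, the same get-or-compute and invalidate pattern is typically implemented on a shared store such as Redis.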

6. Adopt Data Quality Management

Proactive data quality management prevents downstream issues that can cascade into major pipeline failures. By implementing validation at ingestion points and throughout the pipeline, organizations can catch and address problems before they impact business decisions.

- Implement schema validation at data entry points

- Create automated data quality checks with alerting

- Develop clear protocols for handling non-conforming data
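Schema validation at an entry point can be as simple as checking each incoming record against its expected fields and types. Records that fail are routed to a quarantine area instead of being dropped. This is a minimal sketch; `ORDER_SCHEMA` and the sample records are hypothetical.

```python
from datetime import date

# Hypothetical schema for an incoming orders feed: field -> required Python type.
ORDER_SCHEMA = {"order_id": int, "customer_id": int, "amount": float, "order_date": date}


def validate(record: dict, schema: dict) -> list[str]:
    """Return a list of problems; an empty list means the record conforms."""
    errors = [f"missing field: {field}" for field in schema if field not in record]
    for field, expected in schema.items():
        if field in record and not isinstance(record[field], expected):
            errors.append(
                f"{field}: expected {expected.__name__}, got {type(record[field]).__name__}"
            )
    extra = set(record) - set(schema)
    if extra:
        errors.append(f"unexpected fields: {sorted(extra)}")
    return errors


def route(records: list[dict], schema: dict) -> tuple[list[dict], list[dict]]:
    """Split records into clean rows and quarantined rows annotated with their errors."""
    clean, quarantine = [], []
    for rec in records:
        problems = validate(rec, schema)
        if problems:
            quarantine.append({"record": rec, "errors": problems})
        else:
            clean.append(rec)
    return clean, quarantine


batch = [
    {"order_id": 1, "customer_id": 7, "amount": 19.99, "order_date": date(2025, 3, 1)},
    {"order_id": "2", "customer_id": 8, "amount": 5.0, "order_date": date(2025, 3, 1)},
    {"order_id": 3, "amount": 12.5, "order_date": date(2025, 3, 2)},
]
clean, quarantined = route(batch, ORDER_SCHEMA)
print(len(clean), [q["errors"] for q in quarantined])
```

Note that `isinstance` is strict in some ways and loose in others. An integer `amount` such as `10` fails the `float` check, while Python's `bool` passes an `int` check. Libraries such as pydantic or Great Expectations offer richer validation if you need coercion rules, value ranges, or statistical checks.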

7. Implement Continuous Monitoring

Comprehensive monitoring provides visibility into pipeline performance and helps identify optimization opportunities. With proper observability tooling, organizations can detect emerging issues before they become critical and measure the impact of optimization efforts.

- Monitor end-to-end pipeline health with key performance indicators

- Set up alerting for performance degradation and failures

- Implement logging that facilitates root cause analysis
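Below is a minimal sketch of stage-level monitoring using only Python's standard library. A context manager times each stage and logs its status in a key=value format that is easy to search during root cause analysis. It also emits a warning when a stage exceeds its time budget. The budget values and the log-based alert are placeholder assumptions. A real setup would export these measurements to a monitoring backend such as Prometheus or Datadog.

```python
import logging
import time
from contextlib import contextmanager

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("pipeline")


@contextmanager
def monitored_stage(name: str, max_seconds: float, metrics: dict):
    """Time a pipeline stage, log the outcome, and warn when it exceeds its budget."""
    start = time.perf_counter()
    status = "failed"
    try:
        yield
        status = "succeeded"
    except Exception:
        log.exception("stage=%s failed", name)  # full traceback for root cause analysis
        raise
    finally:
        elapsed = time.perf_counter() - start
        metrics[name] = {"seconds": elapsed, "status": status}
        log.info("stage=%s status=%s seconds=%.3f", name, status, elapsed)
        if elapsed > max_seconds:
            # Placeholder alert hook: swap in a pager, chat webhook, or metrics backend.
            log.warning("ALERT stage=%s exceeded budget of %.2fs", name, max_seconds)


metrics: dict = {}
with monitored_stage("transform", max_seconds=0.01, metrics=metrics):
    time.sleep(0.05)  # simulate a slow stage: logs an ALERT warning

try:
    with monitored_stage("load", max_seconds=5.0, metrics=metrics):
        raise ConnectionError("warehouse refused connection")
except ConnectionError:
    pass  # the failure was logged and recorded; a real pipeline might retry here

print(metrics["transform"]["status"], metrics["load"]["status"])  # succeeded failed
```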

8. Leverage Infrastructure as Code

Infrastructure as Code (IaC) brings consistency and repeatability to pipeline deployment and management. This approach enables organizations to version-control their infrastructure configurations and quickly deploy optimized pipeline components across environments.

- Use templates to ensure consistent resource provisioning

- Version-control infrastructure configurations

- Automate deployment and scaling operations

Implementing a Data Pipeline Optimization Framework

1. Assessment Phase

Pipeline performance auditing: Systematically measure and analyze current pipeline metrics against benchmarks to identify bottlenecks, using tools like execution logs, resource utilization monitors, and end-to-end latency trackers.

Identifying optimization opportunities: Map pipeline components against performance data to pinpoint specific areas for improvement, focusing on resource utilization gaps, processing inefficiencies, and architectural limitations.

Prioritizing improvements by impact: Evaluate potential optimizations based on business impact, implementation effort, and resource requirements to create a ranked priority list that delivers maximum value first.
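One simple way to turn these criteria into a ranked list is a value-per-cost score. The 1 to 5 scales, the scoring formula, and the backlog items below are all hypothetical. Teams often weight the factors differently.

```python
from dataclasses import dataclass


@dataclass
class Opportunity:
    name: str
    impact: int     # 1-5: expected business impact (e.g., latency or cost saved)
    effort: int     # 1-5: engineering effort to implement
    resources: int  # 1-5: extra infrastructure or budget required

    @property
    def score(self) -> float:
        # Hypothetical weighting: value delivered per unit of total cost.
        return self.impact / (self.effort + self.resources)


backlog = [
    Opportunity("Add partition pruning to nightly sales job", impact=4, effort=1, resources=1),
    Opportunity("Migrate batch ETL to streaming", impact=5, effort=5, resources=4),
    Opportunity("Convert CSV landing zone to Parquet", impact=3, effort=2, resources=1),
]

ranked = sorted(backlog, key=lambda o: o.score, reverse=True)
for opp in ranked:
    print(f"{opp.score:.2f}  {opp.name}")
```

The partition-pruning change ranks first (score 2.00) because it combines solid impact with low effort. The streaming migration ranks last (0.56) despite having the highest impact.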

2. Implementation Roadmap

Quick wins vs. long-term improvements: Balance immediate high-ROI optimizations like query tuning against strategic architectural changes, allowing for visible progress while building toward sustainable improvements.

Phased implementation approach: Break optimization efforts into sequenced sprints that minimize disruption to production environments, starting with low-risk components and gradually addressing more complex pipeline segments.

Testing and validation strategies: Implement rigorous testing protocols including performance benchmarking, regression testing, and canary deployments to verify optimizations deliver expected improvements without introducing new issues.
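Performance benchmarking and regression testing can be combined into a simple gate. First confirm that the optimized code produces identical output, then compare median runtimes against the baseline. This is a minimal sketch; the two deduplication functions and the 10% tolerance are illustrative assumptions.

```python
import statistics
import time


def benchmark(fn, runs: int = 5) -> float:
    """Median wall-clock seconds of fn over several runs; the median damps one-off noise."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def regression_gate(baseline_s: float, candidate_s: float, tolerance: float = 0.10) -> bool:
    """Pass if the candidate is at most `tolerance` (10% by default) slower than baseline."""
    return candidate_s <= baseline_s * (1 + tolerance)


def old_dedup(rows):
    out = []
    for r in rows:
        if r not in out:  # linear scan per row
            out.append(r)
    return out


def new_dedup(rows):
    return list(dict.fromkeys(rows))  # hash-based, keeps first-seen order


data = [i % 300 for i in range(2_000)]

# Regression check first: the optimization must not change the output.
assert new_dedup(data) == old_dedup(data)

baseline = benchmark(lambda: old_dedup(data))
candidate = benchmark(lambda: new_dedup(data))
print(f"baseline={baseline:.5f}s candidate={candidate:.5f}s pass={regression_gate(baseline, candidate)}")
```

Benchmarks in CI are more stable when they run on dedicated, consistently sized runners.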

3. Continuous Optimization Culture

Establishing pipeline performance SLAs: Define clear, measurable performance targets for each pipeline component, creating accountability and objective criteria for ongoing optimization efforts.

Creating feedback loops: Implement systematic review cycles where pipeline performance data feeds back into planning, ensuring optimization becomes an iterative process rather than a one-time project.

Building optimization into the development cycle: Integrate performance considerations into development practices through code reviews, performance testing gates, and optimization-focused training for engineering teams.

Handling Data Quality and Consistency

1. Ensuring Accuracy and Reliability

Maintaining high data quality and consistency is essential for your data pipeline’s efficiency and effectiveness. To ensure accuracy and reliability, conduct regular data quality audits. These audits involve a detailed examination of the data within your system to ensure it adheres to quality standards, compliance requirements, and business requirements. Schedule them at regular intervals to check your data’s accuracy, completeness, and consistency.

Another strategy for improving data quality is to monitor and log the flow of data through the pipeline. Monitoring also reveals bottlenecks that may be slowing the data flow or consuming excess resources. By identifying these issues, you can optimize your pipeline and improve your data’s reliability.

2. Handling Redundancy and Deduplication

Data pipelines often encounter redundant data and duplicate records, and handling them properly plays a vital role in ensuring data consistency and compliance. Redundancy in the infrastructure sense matters too: design your pipeline for fault tolerance by running multiple instances of critical components and resources. This approach improves the resiliency of your pipeline and helps it handle failures without introducing data inconsistencies.

Implement data deduplication techniques to remove duplicate records and maintain data quality. This process involves:

- Identifying duplicates: Use matching algorithms to find similar records

- Merging duplicates: Combine the information from the duplicate records into a single, accurate record

- Removing duplicates: Eliminate redundant records from the dataset
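The three steps above can be sketched in Python. As a simplified stand-in for a matching algorithm, this version uses exact matching on normalized fields (lower-cased, trimmed name and email). Conflicts are resolved by letting the newest non-empty value win. The field names and the merge rule are assumptions for illustration.

```python
from collections import defaultdict


def match_key(rec: dict) -> tuple:
    """Normalize name and email so trivially different spellings match exactly."""
    return (rec["email"].strip().lower(), rec["name"].strip().lower())


def merge(group: list[dict]) -> dict:
    """Combine duplicates into one record: the newest non-empty value wins per field."""
    merged: dict = {}
    for rec in sorted(group, key=lambda r: r["updated_at"]):
        for field, value in rec.items():
            if value not in (None, ""):
                merged[field] = value  # newer records overwrite older values
    return merged


def deduplicate(records: list[dict]) -> list[dict]:
    groups: dict = defaultdict(list)
    for rec in records:  # 1. identify duplicates by shared match key
        groups[match_key(rec)].append(rec)
    # 2. merge each group into one record; 3. the remaining copies are dropped.
    return [merge(group) for group in groups.values()]


customers = [
    {"name": "ana silva ", "email": "ANA@example.com", "phone": "555-0101", "updated_at": 1},
    {"name": "Ana Silva", "email": "ana@example.com", "phone": None, "updated_at": 2},
    {"name": "Raj Patel", "email": "raj@example.com", "phone": "555-0199", "updated_at": 1},
]
result = deduplicate(customers)
print(result[0])
# {'name': 'Ana Silva', 'email': 'ana@example.com', 'phone': '555-0101', 'updated_at': 2}
```

Fuzzy matching catches near-duplicates, such as typos, that exact key matching misses, at a higher computational cost. Examples include string-similarity scores and dedicated entity-resolution tools.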

Security, Privacy, and Compliance of Data Pipelines

1. Data Governance and Compliance

Effective data governance plays a crucial role in ensuring compliance with various regulations such as GDPR and CCPA. It is essential for your organization to adopt a robust data governance framework, which typically includes:

- Establishing data policies and standards

- Defining roles and responsibilities related to data management

- Implementing data classification and retention policies

- Regularly auditing and monitoring data usage and processing activities

By adhering to data governance best practices, you can reduce your organization’s exposure to data breaches, misconduct, and non-compliance penalties.

2. Security Measures and Data Protection

In order to maintain the security and integrity of your data pipelines, it is essential to implement appropriate security measures and employ effective data protection strategies. Some common practices include:

- Encryption: Use encryption techniques to safeguard data throughout its lifecycle, both in transit and at rest. This ensures that sensitive information remains secure even if unauthorized access occurs

- Access Control: Implement strict access control management to limit data access based on the specific roles and responsibilities of employees in your organization

- Data Sovereignty: Consider data sovereignty requirements when building and managing data pipelines, especially for cross-border data transfers. Be aware of the legal and regulatory restrictions concerning the storage, processing, and transfer of certain types of data

- Anomaly Detection: Implement monitoring and anomaly detection tools to identify and respond swiftly to potential security threats or malicious activities within your data pipelines

- Fraud Detection: Leverage advanced analytics and machine learning techniques to detect fraud patterns or unusual behavior in your data pipeline

Tools and Technologies for Data Pipeline Optimization

Data Processing Frameworks

1. Apache Spark

A unified analytics engine whose in-memory processing significantly accelerates data processing tasks. Spark supports pipeline optimization through two components. Its Catalyst query optimizer analyzes query plans and chooses an efficient execution path. Its DAG scheduler pipelines consecutive transformations into stages. Its ability to cache intermediate results in memory dramatically reduces I/O bottlenecks for iterative workflows.

2. Apache Flink

A stream processing framework built for high-throughput, low-latency data streaming applications. Flink optimizes data pipelines through stateful computations, exactly-once processing semantics, and advanced windowing capabilities. Its checkpoint mechanism ensures fault tolerance without sacrificing performance, making it ideal for real-time pipelines.

3. Databricks

A unified data analytics platform built on Spark that enhances pipeline optimization through its Delta Lake architecture. Databricks offers automatic cluster management, query optimization, and Delta caching for improved performance. Its optimized runtime provides significant speed improvements over standard Spark deployments and integrates ML workflows seamlessly.

4. Google Cloud Dataflow

A fully managed service implementing Apache Beam for both batch and streaming workloads. Dataflow optimizes pipelines by dynamically rebalancing work across compute resources, auto-scaling resources to match processing demands, and offering templates for common pipeline patterns. Its serverless approach eliminates cluster management overhead.

Orchestration Platforms

1. Apache Airflow

An open-source workflow management platform that optimizes pipeline orchestration through directed acyclic graphs (DAGs). Airflow enables pipeline optimization by allowing detailed dependency management, task parallelization, automatic retries, and resource pooling to prevent overloading downstream systems.

2. Dagster

A data orchestrator focused on developer productivity and observability. Dagster optimizes pipelines through its asset-based approach, type checking, and structured error handling. Its ability to track data dependencies and visualize lineage helps identify optimization opportunities and eliminate redundant processing.

3. Prefect

A workflow management system designed for modern infrastructure. Prefect optimizes pipelines through dynamic task mapping, caching mechanisms, and state handlers. Its hybrid execution model allows seamless scaling between local development and distributed production environments, with detailed visibility into task performance.

4. AWS Step Functions

A serverless orchestration service that coordinates multiple AWS services. Step Functions optimizes pipelines by managing state transitions, handling error conditions, and enabling parallel processing branches. Its visual workflow editor and built-in integrations simplify complex pipeline management without infrastructure overhead.

Monitoring Solutions

1. Prometheus

An open-source monitoring and alerting toolkit designed for reliability and scalability. Prometheus supports pipeline optimization by providing detailed time-series metrics, its own query language (PromQL) for analysis, and targeted alerts for performance degradation. Its pull-based architecture is lightweight and adaptable to diverse pipeline environments.

2. Datadog

A comprehensive monitoring platform that unifies metrics, traces, and logs. Datadog enables pipeline optimization through end-to-end visibility, anomaly detection, and correlation analysis across distributed systems. Its pre-built integrations with data processing tools provide immediate insights into pipeline performance without extensive setup.

3. New Relic

An observability platform with deep application performance monitoring capabilities. New Relic optimizes data pipelines through distributed tracing, real-time analytics, and ML-powered anomaly detection. Its ability to connect pipeline performance directly to business metrics helps prioritize optimization efforts based on impact.

4. Grafana

An open-source analytics and visualization platform. Grafana optimizes pipelines by consolidating metrics from multiple sources, enabling custom visualizations tailored to specific pipeline components, and supporting alerting based on complex conditions. Its flexible dashboard system adapts to different team needs and monitoring requirements.

Informatica to DBT Migration

When optimizing your data pipeline, migrating from Informatica to DBT can provide significant benefits in terms of efficiency and modernization.

Informatica has long been a staple for data management, but as technology evolves, many companies are transitioning to DBT (data build tool) for more agile, version-controlled data transformation. This migration reflects a shift toward modern, code-first approaches that enhance collaboration and adaptability in data teams.

Moreover, the move replaces a traditional ETL (Extract, Transform, Load) platform with a modern transformation framework that defines transformations in SQL and runs them directly on top of the data warehouse. The aim is to modernize the data stack with a more agile, transparent, and collaborative approach to data engineering.

Key Benefits of the Migration

- Enhanced Agility and Innovation: DBT transforms how data teams operate, enabling faster insights delivery and swift adaptation to evolving business needs. Its developer-centric approach and use of familiar SQL syntax foster innovation and expedite data-driven decision-making

- Scalability and Elasticity: DBT runs transformations inside modern cloud data warehouses, so it scales with the warehouse’s compute. This lets organizations manage large data volumes and expand their analytics capabilities as their warehouse grows

- Cost Efficiency and Optimization: Switching to DBT, whose core is open source, reduces reliance on the dedicated infrastructure and licensing fees associated with traditional ETL tools like Informatica. This shift trims costs and streamlines data transformations, improving the ROI of data infrastructure investments

- Improved Collaboration and Transparency: DBT encourages better teamwork across data teams by centralizing SQL transformation logic and utilizing version-controlled coding. This environment supports consistent, replicable, and dependable data pipelines, enhancing overall effectiveness and data value delivery

Key Areas to Focus On

- Innovation: Embrace new technologies and methods to enhance your data pipeline. Adopting cutting-edge tools can result in improvements related to data quality, processing time, and scalability

- Compatibility: Ensure that your chosen technology stack aligns with your organization’s data infrastructure and can be integrated seamlessly

- Scalability: When selecting new technologies, prioritize those that can handle growing data volumes and processing requirements with minimal performance degradation

When migrating your data pipeline, keep in mind that DBT also emphasizes testing and documentation. Make use of DBT’s built-in features to validate your data sources and transformations, ensuring data correctness and integrity. Additionally, maintain well-documented data models, allowing for easier collaboration amongst data professionals in your organization.

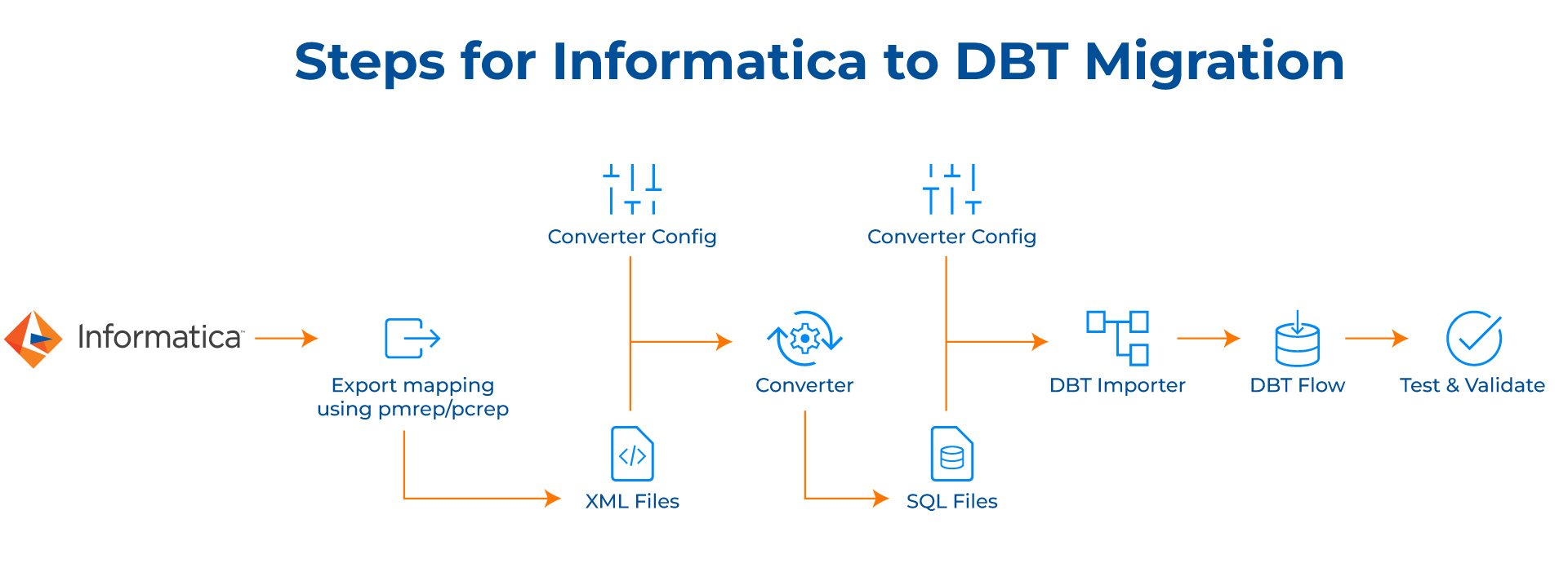

Migration Approach for Transitioning from Informatica to DBT

1. Inventory and Analysis

Catalog all Informatica mappings, including both PowerCenter and IDQ. Perform a detailed analysis of each mapping to decipher its structure, dependencies, and transformation logic.

2. Export Informatica Mappings

Utilize the pmrep command for PowerCenter and pcrep for IDQ mappings to export them to XML format. Organize the XML files into a structured directory hierarchy for streamlined access and processing.

3. Transformation to SQL Conversion

Develop a conversion tool or script to parse XML files and convert each transformation into individual SQL files. Ensure the conversion script accounts for complex transformations by mapping Informatica functions to equivalent Snowflake functions. Structure SQL files using standardized naming conventions and directories for ease of management.
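The sketch below illustrates the function-mapping step. It uses regular expressions to rewrite a few Informatica expression constructs into Snowflake SQL, and it refuses expressions it cannot translate. The rule list is deliberately partial and illustrative:

- `IIF` becomes Snowflake's `IFF`.
- `SYSDATE` becomes `CURRENT_TIMESTAMP()`.
- `ISNULL(x)` becomes `(x IS NULL)`.

The list of unsupported functions is likewise an assumption. A production converter would parse expressions properly rather than rely on regexes.

```python
import re

# Illustrative, partial mapping of Informatica expression syntax to Snowflake SQL.
RULES = [
    (re.compile(r"\bIIF\s*\(", re.IGNORECASE), "IFF("),
    (re.compile(r"\bSYSDATE\b", re.IGNORECASE), "CURRENT_TIMESTAMP()"),
    # ISNULL(x) -> (x IS NULL), only when x contains no nested parentheses.
    (re.compile(r"\bISNULL\s*\(\s*([^()]+?)\s*\)", re.IGNORECASE), r"(\1 IS NULL)"),
]

# Hypothetical set of functions this sketch leaves for manual conversion
# (their argument order or semantics differ from any simple rename).
UNSUPPORTED = re.compile(r"\b(ADD_TO_DATE|DATE_DIFF|REG_EXTRACT)\s*\(", re.IGNORECASE)


def to_snowflake(expr: str) -> str:
    """Rewrite one Informatica expression; raise if it needs manual conversion."""
    hit = UNSUPPORTED.search(expr)
    if hit:
        raise ValueError(f"manual review needed for {hit.group(1).upper()}: {expr}")
    for pattern, replacement in RULES:
        expr = pattern.sub(replacement, expr)
    return expr


print(to_snowflake("IIF(ISNULL(DISCOUNT), PRICE, PRICE - DISCOUNT)"))
# IFF((DISCOUNT IS NULL), PRICE, PRICE - DISCOUNT)

try:
    to_snowflake("ADD_TO_DATE(SHIP_DATE, 'DD', 1)")
except ValueError as exc:
    print(exc)  # flagged for manual conversion
```

Regex rules cannot tell function names from text inside string literals. Verify type and time-zone semantics for each mapping, for example `SYSDATE` versus `CURRENT_TIMESTAMP()`, against both platforms' documentation before relying on it.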

4. DBT Importer Configuration

Create a DBT importer script to facilitate the loading of SQL files into DBT. Configure the importer to sequence SQL files based on dependencies, drawing from a configuration file with Snowflake connection details.
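Dependency sequencing can be derived from the converted SQL itself, provided each model refers to its upstream models through DBT's `{{ ref('...') }}` syntax. This minimal sketch assumes the SQL has already been rewritten to use `ref()` and is held in memory as a name-to-SQL mapping. The model names are hypothetical.

```python
import re
from graphlib import TopologicalSorter

# Matches {{ ref('model') }} or {{ ref("model") }} (single-argument form only).
REF = re.compile(r"""\{\{\s*ref\(\s*['"]([\w.]+)['"]\s*\)\s*\}\}""")


def load_order(models: dict[str, str]) -> list[str]:
    """Order model names so every model comes after the models it ref()s.

    models: model name -> SQL text (e.g., read from the converted .sql files).
    """
    graph = {name: set(REF.findall(sql)) for name, sql in models.items()}
    # static_order() raises graphlib.CycleError on circular references.
    return list(TopologicalSorter(graph).static_order())


models = {
    "fct_orders": "select * from {{ ref('stg_orders') }} "
                  "join {{ ref('dim_customers') }} using (customer_id)",
    "stg_orders": "select * from raw.orders",
    "dim_customers": "select * from {{ ref('stg_customers') }}",
    "stg_customers": "select * from raw.customers",
}
order = load_order(models)
print(order)  # upstream staging models first, fct_orders last
```

Once the files are in a DBT project, DBT builds the same dependency graph from `ref()` on its own. An explicit ordering like this is mainly useful for validating the import, for example to catch circular references early.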

5. Data Model and Project Setup

Define the data model and organize the DBT project structure, including schemas, models, and directories, adhering to DBT best practices.

6. Test and Validate

Conduct comprehensive testing of the SQL files and DBT project setup to confirm their correctness and efficiency. Validate all data transformations and ensure seamless integration with the Snowflake environment.

7. Migration Execution

Proceed with the migration, covering the export of mappings, their conversion to SQL, and importing them into DBT, while keeping transformations well-sequenced. Monitor the process actively, addressing any issues promptly to maintain migration integrity.

8. Post-Migration Validation

Perform a thorough validation to verify data consistency and system performance post-migration. Undertake performance tuning and optimizations to enhance the efficiency of the DBT setup.

9. Monitoring and Maintenance

Establish robust monitoring systems to keep a close watch on DBT workflows and performance. Schedule regular maintenance checks to preemptively address potential issues.

10. Continuous Improvement

Foster a culture of continuous improvement by regularly updating the DBT environment and processes based on new insights, business needs, and evolving data practices.

Choosing Kanerika for Efficient Data Modernization Services

Businesses today face critical challenges when operating with legacy data systems. Outdated infrastructure limits data accessibility, compromises reporting accuracy, prevents real-time analytics, and incurs excessive maintenance costs. Kanerika, a leading data and AI solutions firm, helps organizations transform these limitations into competitive advantages through modern data platforms that enable advanced analytics, cloud scalability, and AI-driven insights.

The migration journey, however, presents significant risks. Traditional manual approaches are resource-intensive and error-prone, potentially disrupting business continuity. Even minor mistakes in data mapping or transformation logic can cascade into serious problems—inconsistent outputs, permanent data loss, or extended system downtime.

Kanerika addresses these challenges through purpose-built automation solutions that streamline complex migrations with precision. Our specialized tools facilitate seamless transitions across multiple platform pairs: SSRS to Power BI, SSIS/SSAS to Fabric, Informatica to Talend/DBT, and Tableau to Power BI. This automation-first approach dramatically reduces manual effort while maintaining data integrity throughout the migration process.

Frequently Asked Questions

What are the 5 steps of data pipeline?

Data pipelines typically involve five key steps: ingestion (collecting raw data), transformation (cleaning and structuring it), validation (ensuring data quality), loading (placing it into a target system), and monitoring (tracking pipeline health and performance). These steps aren’t always rigidly sequential; some overlap, and iterations are common. The exact steps and their details can vary depending on the specific pipeline and its purpose.

What are the data pipeline optimization techniques?

Data pipeline optimization boils down to making your data flow faster, cheaper, and more reliable. This involves strategies like choosing the right tools (e.g., faster databases, optimized cloud services), streamlining data transformations (reducing redundancy and unnecessary steps), and implementing robust error handling and monitoring. Ultimately, it’s about maximizing efficiency throughout the entire data journey.

How to optimize an ETL pipeline?

Optimizing your ETL pipeline means making it faster, more reliable, and more efficient. Focus on data volume reduction (e.g., filtering unnecessary data early), parallel processing where possible, and efficient data storage choices. Regular monitoring and profiling will pinpoint bottlenecks needing attention, ultimately saving resources and improving data delivery time.

What are the main 3 stages in data pipeline?

Data pipelines typically involve three core stages: ingestion (gathering raw data from various sources), transformation (cleaning, processing, and enriching the data into a usable format), and loading (storing the prepared data in its final destination, such as a data warehouse). These stages are iterative and often involve feedback loops for quality control. Efficient pipeline design prioritizes speed and reliability while handling different data volumes and types.

What is pipeline in ETL?

In ETL (Extract, Transform, Load), a pipeline is the automated workflow. It’s a sequence of steps, like an assembly line, that moves data from its source, cleans and modifies it, and finally deposits it into its destination. Think of it as a pre-programmed recipe for data processing, ensuring consistency and efficiency. Each step is meticulously defined and executed in order.

What are the best ETL tools?

The “best” ETL tool depends entirely on your specific needs and technical expertise. Consider factors like data volume, complexity, budget, and your team’s familiarity with programming languages. Popular choices range from fully managed cloud services offering ease-of-use to powerful, customizable open-source options. Ultimately, the ideal tool maximizes efficiency and reliability for *your* data integration process.

What are the 5 steps in data preparation?

Data prep isn’t a rigid 5-step process, but key phases include: gathering and cleaning your raw data (handling missing values and outliers); transforming it (e.g., scaling, encoding categorical variables); exploring patterns (visualizations and summary stats); integrating diverse data sources; and finally, validating your prepared dataset for accuracy and consistency before analysis. This iterative cycle ensures reliable insights.