Are you wondering how AI is evolving so quickly from simple chatbots into fully autonomous systems? As businesses race to adopt advanced automation, the debate around AI Agent vs LLM is becoming more important than ever. According to McKinsey, generative AI alone could add $2.6 trillion to $4.4 trillion in economic value annually across industries. With growth at that scale, organisations must understand the difference between these two key technologies to make smarter investments.

Many people confuse Large Language Models (LLMs) with AI agents, but the two serve different purposes. LLMs such as GPT-4 or Claude excel at understanding and generating language. AI agents go further: they can plan tasks, use tools, take actions, and work autonomously.

Understanding this difference is crucial for designing the right AI strategy, improving automation, and maximising ROI.

This blog will break down AI agents vs LLMs in simple terms, explore their architectures, compare their strengths, and show real-world use cases to help you decide which one your organisation needs.

Streamline, Optimize, and Scale with AI-Powered Agents!

Partner with Kanerika for Expert AI implementation Services

Key Learnings

- LLMs generate language, while AI agents use LLMs to plan, act, and complete tasks autonomously.

- AI agents handle multi-step workflows, whereas LLMs work best for single-step text tasks.

- Agents require components like memory, decision engines, feedback loops, and external tools.

- LLMs have limitations such as no built-in memory, no ability to act on their own, and no persistent goals.

- The future of AI lies in hybrid systems where LLMs and agents work together for scalable automation.

What Is an AI Agent?

An AI agent is an autonomous system designed to perform tasks, make decisions, and take actions with minimal human input. While an LLM can generate text or answer questions, an AI agent goes a step further. It uses one or more LLMs as its “thinking engine,” but it also includes additional components that allow it to plan steps, use tools, access data, and complete tasks from start to finish. In simple terms, you can think of an AI agent as a smart digital worker that can understand instructions, break them into steps, and then act on them.

To understand how an AI agent functions, it helps to look at its architecture. An effective AI agent typically includes:

- Reasoning/Planning module: Breaks a goal into smaller, manageable actions.

- Memory: Stores information for later use. This can include short-term memory for ongoing tasks, long-term memory for past interactions, and vector store memory for semantic search.

- Tools: Allows the agent to interact with real systems. These may include APIs, RPA scripts, databases, browsers, ERP connectors, or email services.

- Decision engine: Helps the agent choose the next best step based on logic and context.

- Feedback loop: Enables self-correction by checking results and adjusting plans.

- Execution engine: Carries out tasks until the goal is completed.
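To make these components concrete, here is a minimal Python sketch of an agent loop. It is illustrative only and assumes a very simplified design: `plan_goal` is a hypothetical stand-in for an LLM-backed planner, the two tools are stubs rather than real integrations, and the "decision engine" is reduced to a tool lookup.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Toy agent wiring together planning, tools, memory and a feedback check."""
    planner: Callable[[str], list[tuple[str, str]]]  # planning module: goal -> [(tool, arg)]
    tools: dict[str, Callable[[str], str]]           # tools the agent is allowed to call
    memory: list[str] = field(default_factory=list)  # short-term memory of results
    max_retries: int = 2                             # retry budget for the feedback loop

    def run(self, goal: str) -> list[str]:
        for tool_name, arg in self.planner(goal):    # execution engine walks the plan
            tool = self.tools.get(tool_name)         # decision engine (here: just a lookup)
            if tool is None:
                self.memory.append(f"skipped unknown tool {tool_name}")
                continue
            for _ in range(self.max_retries + 1):
                result = tool(arg)
                if result:                           # feedback: accept any non-empty output
                    break
            self.memory.append(f"{tool_name}({arg}) -> {result}")
        return self.memory


def plan_goal(goal: str) -> list[tuple[str, str]]:
    # Hypothetical planner; a real agent would ask an LLM to produce these steps.
    return [("lookup_order", "A-123"), ("send_email", "order A-123 has shipped")]


tools = {
    "lookup_order": lambda order_id: f"{order_id}: shipped",  # stub for an order API
    "send_email": lambda body: f"email queued: {body}",       # stub for an email service
}

agent = Agent(planner=plan_goal, tools=tools)
for entry in agent.run("Tell the customer their order has shipped"):
    print(entry)
```

In a production agent the interesting work lives inside these stubs: the planner is an LLM prompt, the tools are real connectors, and the feedback check is a proper validator rather than a non-empty test.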

Because of these components, AI agents offer a wide range of capabilities. They can handle multi-step planning, call APIs and apps, access or update databases, send emails, browse the internet, or work inside enterprise systems. They also learn from context and memory, allowing them to work autonomously for long periods without constant prompting.

What Is an LLM?

A Large Language Model (LLM) is an advanced type of artificial intelligence designed to understand and produce text that reads naturally and clearly. Popular examples include GPT-4, Claude, Gemini, and Llama. These models are trained on massive collections of text from books, articles, websites, code repositories, and other public sources. Because of this large-scale training, an LLM can recognise patterns in language and produce responses that sound natural, coherent, and contextually relevant.

To understand what an LLM can do, it helps to break down its core capabilities:

- Text generation: Creates paragraphs, answers, stories, explanations, and more.

- Summarisation: Condenses long content into short, clear summaries.

- Classification: Categorises text, identifies sentiment, or labels content.

- Code generation: Writes functions, scripts, or suggests improvements for developers.

- Reasoning: Handles logical tasks to a degree, although its reasoning can sound plausible without being fully reliable.

Because of these abilities, LLMs have several key strengths. To begin with, they offer broad knowledge across many topics because they are trained on massive datasets. In addition, they excel at pattern recognition, which helps them predict text and create structured, meaningful responses. They are also useful for general problem-solving, especially when tasks involve language, explanation, or creativity.

On the other hand, LLMs come with important limitations. They do not have built-in memory unless a separate system provides it. Similarly, they cannot perform long-term planning and lack goal persistence beyond the current prompt. Moreover, they are unable to use tools such as APIs, databases, or browsers unless external integrations are added. Most importantly, an LLM cannot take actions on its own; it only produces text and suggestions.
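The memory limitation is easiest to see in code. The sketch below uses `toy_llm`, a hypothetical, deterministic stand-in for a real model API (not any vendor's SDK). Like a real LLM call, it can only use the text it is given, so any "memory" has to be stored and re-sent by the calling application.

```python
def toy_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: it only knows what is in the prompt."""
    if "My name is Priya" in prompt and "What is my name?" in prompt:
        return "Your name is Priya."
    if "What is my name?" in prompt:
        return "I don't know your name."
    return "Noted."


# Two independent calls: the second call cannot see the first.
toy_llm("My name is Priya.")
print(toy_llm("What is my name?"))   # I don't know your name.

# A separate system (here, a plain list) stores the history and re-injects it.
history: list[str] = []


def chat(message: str) -> str:
    history.append(f"User: {message}")
    reply = toy_llm("\n".join(history))  # the whole transcript is sent every time
    history.append(f"Assistant: {reply}")
    return reply


chat("My name is Priya.")
print(chat("What is my name?"))      # Your name is Priya.
```

Chat products that appear to "remember" a conversation work roughly this way: the application, not the model, keeps the transcript and re-sends it within the context window.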

AI Agents: A Promising New-Era Finance Solution

Explore how AI agents are transforming finance, offering innovative solutions for smarter decision-making and efficiency.

AI Agent vs LLM: Key Differences

Understanding the distinctions between Large Language Models (LLMs) and AI Agents helps organizations choose the right technology for specific business needs. While related, these technologies serve different purposes and operate in fundamentally different ways.

Comprehensive Comparison Table

| Aspect | LLM (Large Language Model) | AI Agent |
| --- | --- | --- |
| 1. Purpose | Understands and generates text based on patterns in training data | Achieves specific goals through autonomous actions and tool usage |
| 2. Execution | Responds to input passively; waits for prompts | Operates autonomously and actively pursues objectives |
| 3. Memory | No built-in persistent memory; forgets context after conversation ends | Uses long-term memory stores to track progress and recall past interactions |
| 4. Planning Ability | Limited to short reasoning chains within single responses | Performs multi-step planning and task decomposition for complex workflows |
| 5. Tool Usage | Requires external integration to access tools or data | Designed natively for actions and tool execution across systems |
| 6. Autonomy | Zero autonomy; must be prompted by users for every action | Can run tasks without supervision once goals are defined |
| 7. Use Cases | Writing content, conversational chat, text summarization, translation | Workflow automation, autonomous decision-making, process orchestration |
| 8. Error Handling | Cannot self-correct or retry; requires new prompt if output unsatisfactory | Detects failures, adjusts approach, retries actions until goals achieved |
| 9. State Management | Stateless; each interaction is independent unless context manually provided | Maintains state across sessions tracking task progress and intermediate results |
| 10. Learning & Adaptation | Static after training; doesn’t learn from individual user interactions | Can learn from outcomes, improving performance through experience |

Detailed Explanation of Key Differences

1. Purpose

LLMs function as sophisticated text processing engines. They excel at understanding language, generating coherent responses, and completing language-based tasks. However, on their own they remain confined to text, without the ability to affect real-world systems.

In contrast, AI Agents use LLMs as one component within broader systems designed to accomplish concrete objectives. Agents combine language understanding with the ability to take actions, make decisions, and manipulate external systems to achieve defined goals.

2. Execution

When you interact with an LLM, it responds to your prompt and then stops, waiting for your next input. This passive approach means LLMs cannot initiate actions or continue work independently.

AI Agents operate proactively. Once given objectives, agents develop plans, execute steps, check results, and continue working without constant human intervention. This active execution model makes agents suitable for automation scenarios where continuous operation is required.

3. Memory

LLMs process each conversation within a limited context window. Once that conversation ends, the LLM retains no memory of what was discussed. While techniques like RAG (Retrieval-Augmented Generation) can give the model access to external information at query time, the LLM itself doesn’t remember past interactions.
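To show how retrieval supplies outside information without the model remembering anything, here is a toy retrieval step. It is a sketch under simplifying assumptions: notes are scored by word overlap with the question, a crude stand-in for the embedding similarity a real vector store would use, and the best match is prepended to the prompt. The notes and function names are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lower-case word set; a crude substitute for an embedding vector."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, notes: list[str], k: int = 1) -> list[str]:
    """Return the k notes sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(notes, key=lambda note: len(q & tokenize(note)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, notes: list[str]) -> str:
    """Prepend retrieved context so the LLM can answer from it."""
    context = "\n".join(retrieve(question, notes))
    return f"Context:\n{context}\n\nQuestion: {question}"


notes = [
    "Refund policy: refunds are issued within 14 days of purchase.",
    "Shipping: orders ship from the central warehouse.",
    "Support hours are 9am to 6pm on weekdays.",
]
print(build_prompt("How many days do refunds take?", notes))
```

The retrieved text travels inside the prompt, so the "knowledge" lives in the external store, not in the model.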

AI Agents maintain persistent memory across sessions. They remember previous tasks, outcomes, user preferences, and learned patterns. This memory enables agents to build on past work, avoid repeating mistakes, and provide increasingly personalized assistance over time.

4. Planning Ability

LLMs can reason within the scope of a single response, handling relatively straightforward chains of logic. Complex problems that require extended, multi-step planning generally exceed their capabilities unless the task is broken into smaller, separately prompted steps.

AI Agents decompose complex goals into manageable subtasks automatically. They create plans spanning multiple steps, understand dependencies between tasks, and adjust plans dynamically as circumstances change. This planning ability enables agents to handle sophisticated workflows without human guidance at each step.
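Dependency-aware planning can be sketched with Python's standard `graphlib` module (Python 3.9+). In a real agent the subtasks and their dependencies would come from the LLM's plan; here they are hard-coded, hypothetical examples for a month-end report.

```python
from graphlib import TopologicalSorter


def execution_batches(plan: dict[str, set[str]]) -> list[list[str]]:
    """Group subtasks into batches so every task runs after its dependencies."""
    sorter = TopologicalSorter(plan)
    sorter.prepare()                        # raises graphlib.CycleError on circular plans
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose dependencies are all done
        batches.append(ready)               # tasks within one batch could run in parallel
        sorter.done(*ready)                 # a real agent marks done only after success
    return batches


# Hypothetical plan for "prepare and send the month-end report".
plan = {
    "pull_sales_data": set(),
    "pull_expense_data": set(),
    "reconcile_accounts": {"pull_sales_data", "pull_expense_data"},
    "draft_report": {"reconcile_accounts"},
    "email_report": {"draft_report"},
}

for i, batch in enumerate(execution_batches(plan), start=1):
    print(f"Step {i}: {batch}")
```

Adjusting a plan "dynamically" then amounts to editing this dependency map (adding, removing, or re-linking subtasks) and recomputing the order.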

5. Tool Usage

LLMs require developers to build integrations that connect them to external tools, APIs, or databases. The LLM itself cannot invoke functions or interact with systems directly; it only generates text (or, with function-calling features, a structured request) describing the desired action, which other software must then carry out.

AI Agents are architected from the ground up to use tools. They understand which tools are available, when to use them, how to call them with appropriate parameters, and how to interpret results. This native tool integration makes agents practical for real-world automation.
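Many LLM APIs offer a "function calling" or "tool use" feature, where the model returns a structured request instead of prose and the surrounding application executes it. The sketch below shows only the agent side of that handshake: a tool registry plus a dispatcher that validates and runs a JSON tool call. The JSON shape and the `get_invoice_total` tool are assumptions for illustration, not any specific vendor's format.

```python
import json
from typing import Any, Callable

TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the agent can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_invoice_total(invoice_id: str) -> float:
    # Hypothetical lookup; a real tool would query an ERP system or database.
    return {"INV-1": 1200.0, "INV-2": 310.5}.get(invoice_id, 0.0)


def dispatch(model_output: str) -> Any:
    """Parse a tool call like {"name": ..., "arguments": {...}} and run it."""
    call = json.loads(model_output)
    name = call.get("name")
    if name not in TOOLS:  # never execute tools the agent was not given
        raise ValueError(f"Model requested unknown tool: {name!r}")
    return TOOLS[name](**call.get("arguments", {}))


# Text an LLM might emit when it decides a tool is needed:
llm_output = '{"name": "get_invoice_total", "arguments": {"invoice_id": "INV-2"}}'
print(dispatch(llm_output))  # 310.5
```

Keeping an explicit registry means the model can only request actions the developers have exposed, which is also where permission checks would go.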

6. Autonomy

The fundamental limitation of LLMs is their complete lack of autonomy. They cannot decide to perform actions, check results, or adjust approaches independently. Every step requires explicit human prompting.

AI Agents operate with substantial autonomy. Once objectives are set, agents work independently: they gather information, make decisions, execute actions, and handle exceptions without needing approval or guidance for routine steps. Humans define goals and constraints, but agents determine how to achieve them.

7. Use Cases

LLMs shine in content creation, conversational interfaces, document summarization, translation, and other language-centric applications. They excel when the task involves understanding or generating text without requiring actions beyond producing that text.

AI Agents suit scenarios requiring autonomous operation: customer service workflows routing inquiries and taking actions, business process automation handling multi-step procedures, data analysis pipelines extracting insights and generating reports, and system monitoring identifying and resolving issues automatically.

8. Error Handling

When an LLM generates incorrect or unsatisfactory output, users must identify the problem and craft new prompts requesting corrections. A standalone LLM does not check its own output against the goal or retry on its own.

AI Agents incorporate error detection and recovery mechanisms. They validate outputs against expected results, recognize when actions fail, adjust strategies, and retry with different approaches. This self-correction capability enables reliable autonomous operation.
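A simple version of this detect, adjust, and retry pattern is shown below. `fake_generate` is a hypothetical stand-in for an LLM or tool call; the agent validates each result and, on failure, retries with feedback appended to the instruction. The validation rule (an ISO 8601 date) is an arbitrary example.

```python
from datetime import date
from typing import Callable, Optional


def is_valid_iso_date(text: str) -> bool:
    """Example validator: accept ISO 8601 dates such as 2024-03-31."""
    try:
        date.fromisoformat(text)
        return True
    except ValueError:
        return False


def run_with_retries(generate: Callable[[str], str], instruction: str,
                     validate: Callable[[str], bool],
                     max_attempts: int = 3) -> Optional[str]:
    """Call generate(), check the result, and retry with feedback if it fails."""
    prompt = instruction
    for _ in range(max_attempts):
        output = generate(prompt)
        if validate(output):
            return output
        # Adjust the approach: tell the model what went wrong and try again.
        prompt = f"{instruction}\nYour previous answer {output!r} failed validation. Please correct it."
    return None  # out of attempts: escalate to a human


def fake_generate(prompt: str) -> str:
    # Hypothetical generator that only formats correctly after receiving feedback.
    return "2024-03-31" if "failed validation" in prompt else "March 31st"


print(run_with_retries(fake_generate, "When does Q1 2024 end?", is_valid_iso_date))
```

Returning `None` after a bounded number of attempts, rather than looping forever, is a deliberate design choice: it gives the agent a clear point at which to hand the task to a person.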

9. State Management

LLMs are stateless, treating each interaction independently. Maintaining context across conversations requires external systems to store and inject conversation history into prompts.

AI Agents maintain state inherently. They track where they are in multi-step processes, remember intermediate results, know which tasks are complete and which remain, and pick up where they left off if interrupted. This state management is essential for complex, long-running workflows.
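Resumable state can be as simple as writing progress to durable storage after every step. The sketch below assumes a JSON file as the store and uses hypothetical step names; production agents would more likely use a database, but the idea is the same: completed steps are recorded, so a restarted run skips them.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable


def run_resumable(steps: dict[str, Callable[[], str]], state_file: Path) -> dict[str, str]:
    """Run steps in order, skipping any already recorded in state_file."""
    state = json.loads(state_file.read_text()) if state_file.exists() else {}
    for name, step in steps.items():
        if name in state:
            continue                                # finished in an earlier run
        state[name] = step()                        # store the intermediate result
        state_file.write_text(json.dumps(state))    # checkpoint after each step
    return state


path = Path(tempfile.mkdtemp()) / "agent_state.json"
attempts = {"extract": 0, "load": 0}


def extract() -> str:
    attempts["extract"] += 1
    return "extracted 3 files"


def load() -> str:
    attempts["load"] += 1
    if attempts["load"] == 1:
        raise RuntimeError("warehouse timeout")     # simulated interruption
    return "loaded 3 files"


steps = {"extract": extract, "load": load}
try:
    run_resumable(steps, path)                      # first run stops during "load"
except RuntimeError:
    pass
print(run_resumable(steps, path))                   # resumes at "load"; "extract" is not rerun
```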

10. Learning & Adaptation

LLMs remain static after training. While they can simulate learning by incorporating feedback into context windows, they don’t actually improve their capabilities through individual user interactions.

AI Agents can implement learning loops. They analyze outcomes, identify successful and unsuccessful strategies, and adjust their approaches based on experience. Over time, agents become more effective at their assigned tasks by learning from what works in real-world scenarios.
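One lightweight form of this learning loop is to record the outcome of each strategy and prefer the one with the best track record. The sketch below is a simplified, greedy version with hypothetical strategy names. Note that it changes the agent's choices, not the underlying LLM's weights, which is consistent with the point above that the model itself stays static.

```python
from collections import defaultdict


class StrategyTracker:
    """Record success/failure per strategy and prefer the best performer."""

    def __init__(self, strategies: list[str]):
        self.strategies = strategies
        self.attempts: dict[str, int] = defaultdict(int)
        self.successes: dict[str, int] = defaultdict(int)

    def record(self, strategy: str, succeeded: bool) -> None:
        self.attempts[strategy] += 1
        self.successes[strategy] += int(succeeded)

    def success_rate(self, strategy: str) -> float:
        n = self.attempts[strategy]
        return self.successes[strategy] / n if n else 0.0

    def choose(self) -> str:
        # Try every strategy at least once, then pick the highest success rate.
        for s in self.strategies:
            if self.attempts[s] == 0:
                return s
        return max(self.strategies, key=self.success_rate)


tracker = StrategyTracker(["email_reminder", "sms_reminder"])
for strategy, ok in [("email_reminder", False), ("sms_reminder", True),
                     ("email_reminder", True), ("sms_reminder", True)]:
    tracker.record(strategy, ok)
print(tracker.choose())  # sms_reminder (2/2 beats 1/2)
```

A purely greedy rule can lock in too early; a real system would usually keep exploring occasionally (for example with an epsilon-greedy policy) so that a strategy with an unlucky start still gets retested.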

Choosing Between LLMs and AI Agents

Use LLMs when:

- Tasks involve primarily language understanding or generation

- No actions beyond producing text are required

- Human oversight and control are desired at every step

- Complexity remains within single-interaction scope

Use AI Agents when:

- Goals require sequences of actions across multiple systems

- Autonomous operation without constant supervision is beneficial

- Tasks involve decision-making and adaptive planning

- Long-running workflows need persistent state tracking

Many practical applications combine both: AI Agents use LLMs as their reasoning engines while adding the planning, tool usage, memory, and autonomy that transform language understanding into goal-directed action.

Understanding these differences helps organizations deploy the appropriate technology, avoiding both the limitations of using LLMs where agents are needed and the unnecessary complexity of deploying agents for simple language tasks.


How AI Agents Use LLMs Internally

AI agents are not replacements for Large Language Models (LLMs). Instead, they build on top of them. An agent uses one or more LLMs as its core reasoning engine, while adding the missing layers required for real-world action. You can think of the LLM as the brain, responsible for understanding language and generating ideas, while the AI agent is the full body, equipped with memory, tools, planning ability, and the power to execute tasks.

In practice, an agent wraps around an LLM and extends its abilities. LLMs alone cannot take actions, maintain long-term memory, or use tools. Agents fill these gaps by connecting LLM outputs to workflows, APIs, databases, applications, and feedback loops. This combination allows the system to work autonomously and complete multi-step goals.

Agents can also use multiple LLMs for different tasks, depending on each model's strengths. For example, a research agent may use:

- one LLM to read and interpret academic papers,

- another LLM to generate structured summaries,

- and external tools to extract citations, check sources, or store notes.
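The research-agent example amounts to routing each subtask to a suitable model or tool. A minimal routing table might look like the sketch below; the model names are placeholders, and `call_model` is a hypothetical stub rather than a real client library.

```python
import re
from typing import Union

# Placeholder model identifiers; substitute whichever models you actually use.
ROUTES = {
    "interpret_paper": "reasoning-model",
    "summarise": "fast-summary-model",
}


def call_model(model: str, text: str) -> str:
    # Hypothetical stub; a real agent would call an LLM API here.
    return f"[{model}] {text[:40]}"


def extract_citations(text: str) -> list[str]:
    # Deterministic non-LLM tool: pull bracketed references such as [12].
    return re.findall(r"\[\d+\]", text)


def handle(task: str, text: str) -> Union[str, list[str]]:
    """Send each subtask to the model or tool best suited to it."""
    if task == "extract_citations":
        return extract_citations(text)   # no LLM needed for this step
    model = ROUTES.get(task)
    if model is None:
        raise ValueError(f"No route for task {task!r}")
    return call_model(model, text)


paper = "Transformers scale well [3] but need large datasets [7]."
print(handle("extract_citations", paper))  # ['[3]', '[7]']
print(handle("summarise", paper))
```

Routing purely mechanical steps to plain code, as with citation extraction here, also keeps those steps cheaper and more predictable than sending them to an LLM.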

By coordinating these models and tools, AI agents transform raw language understanding into meaningful action. This layered architecture is what makes agents far more capable and autonomous than a standalone LLM.

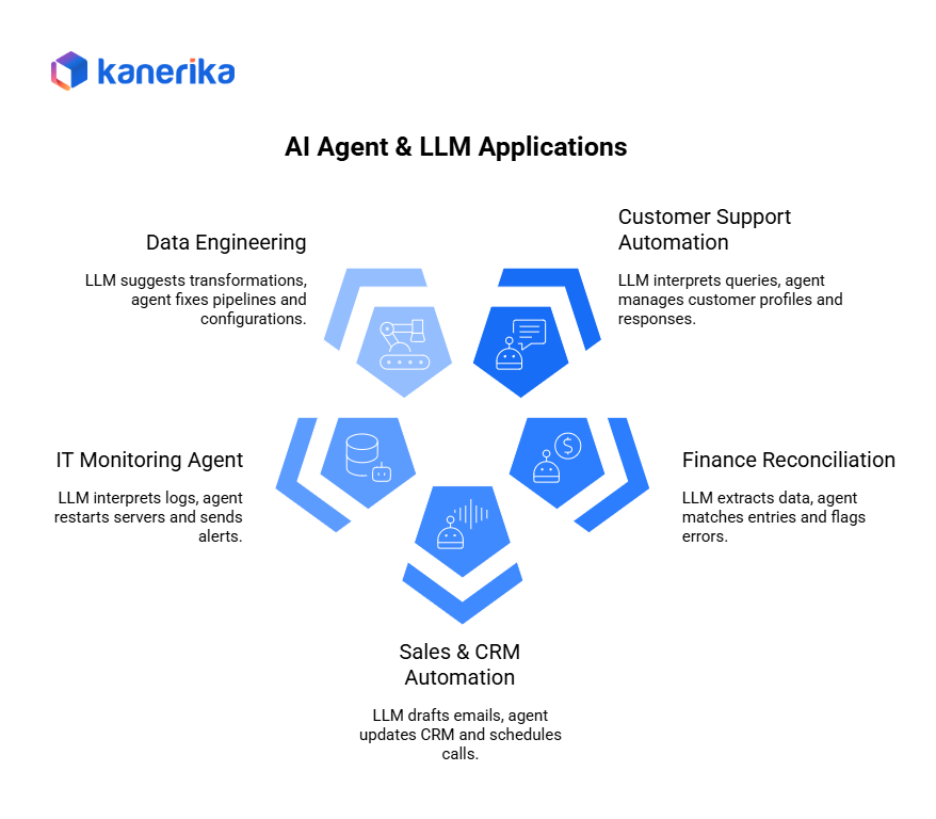

Real-World Examples

AI agents are becoming essential across industries because they combine the intelligence of LLMs with the ability to take real actions. While an LLM understands language, the agent uses that understanding to execute tasks, connect to systems, and complete workflows. The examples below show how this partnership works in real business scenarios.

1. Customer Support Automation

In this case, the LLM reads and interprets customer queries, whether they are complaints, status requests, or general questions. After understanding the message, the AI agent steps in. It identifies the customer profile, checks order or ticket status, updates the CRM with new information, and sends the correct response. As a result, the entire support process becomes faster and more accurate.

2. Finance Reconciliation

Here, the LLM extracts key details from invoices, purchase orders, or receipts. Next, the AI agent checks ERP data, matches entries, flags mismatches, and triggers alerts when something looks incorrect. This reduces manual effort and speeds up month-end closing cycles.
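The matching step in this workflow is ordinary, deterministic code; the LLM's job is to turn messy documents into structured fields first. The sketch below assumes invoices have already been extracted into dictionaries and compares them with hypothetical ERP records by invoice number, flagging missing entries and amount mismatches beyond a small tolerance.

```python
from decimal import Decimal


def reconcile(extracted: list[dict], erp: list[dict],
              tolerance: Decimal = Decimal("0.01")) -> list[str]:
    """Compare LLM-extracted invoices with ERP records and list the exceptions."""
    erp_by_id = {row["invoice_id"]: row for row in erp}
    issues = []
    for inv in extracted:
        match = erp_by_id.get(inv["invoice_id"])
        if match is None:
            issues.append(f"{inv['invoice_id']}: not found in ERP")
        elif abs(Decimal(inv["amount"]) - Decimal(match["amount"])) > tolerance:
            issues.append(f"{inv['invoice_id']}: amount {inv['amount']} vs ERP {match['amount']}")
    seen = {inv["invoice_id"] for inv in extracted}
    for row in erp:
        if row["invoice_id"] not in seen:
            issues.append(f"{row['invoice_id']}: in ERP but no matching document")
    return issues


extracted = [  # fields an LLM might pull from scanned invoices
    {"invoice_id": "INV-101", "amount": "1200.00"},
    {"invoice_id": "INV-102", "amount": "349.99"},
    {"invoice_id": "INV-104", "amount": "80.00"},
]
erp = [
    {"invoice_id": "INV-101", "amount": "1200.00"},
    {"invoice_id": "INV-102", "amount": "394.99"},
    {"invoice_id": "INV-103", "amount": "55.00"},
]
for issue in reconcile(extracted, erp):
    print(issue)  # an agent would raise alerts or open tickets for these
```

Amounts are kept as strings and compared as `Decimal` values to avoid floating-point rounding surprises in financial data.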

3. Sales & CRM Automation

The LLM drafts personalised emails or follow-up messages. Then the agent updates Salesforce, logs meeting notes, schedules calls, and moves leads through the pipeline. This ensures a smoother sales process with minimal manual work.

4. IT Monitoring Agent

In IT operations, the LLM interprets logs, error messages, and alerts. After that, the agent restarts servers, creates helpdesk tickets, sends alerts to engineers, or runs diagnostic scripts. This improves uptime and reduces response times.

5. Data Engineering (Fabric / Databricks / Snowflake)

In data platforms, the LLM suggests transformations or highlights errors. The agent then checks pipelines, retries failed jobs, fixes schema mismatches, or adjusts configurations. This keeps data workflows stable and efficient.

Future of AI: Agents + LLMs Together

The future of AI is moving toward a powerful combination of agents and LLMs working together, rather than one replacing the other. As multi-agent systems continue to emerge, organisations are beginning to use several agents that collaborate, share tasks, and solve complex problems as a team. At the same time, memory-rich LLMs are rapidly evolving, allowing models to hold context longer, recall past interactions, and make more accurate decisions.

Because of these advancements, enterprises are shifting from simple chatbots to fully autonomous AI systems that can plan, act, and self-correct. In these systems, agents will increasingly take the lead in orchestrating workflows, handling tools, and executing tasks, while LLMs will continue to provide the intelligence needed for understanding, reasoning, and generating content.

As a result, hybrid architectures where LLMs serve as the “brain” and agents serve as the “body” will become the standard approach for building scalable, reliable, and enterprise-ready AI solutions.

Kanerika’s AI Expertise: Harnessing AI Agents and LLMs to Transform Enterprise Intelligence

At Kanerika, we leverage the combined power of AI agents and Large Language Models (LLMs) to create intelligent, scalable, and cost-effective solutions that solve real business challenges. While LLMs provide deep language understanding and reasoning, AI agents bring autonomy, planning, and action, allowing enterprises to go far beyond basic chatbot experiences.

Kanerika also builds LLM-powered AI agents that don’t just respond; they act. These agents understand context, plan multi-step tasks, use enterprise tools, and execute actions with minimal human intervention. This makes them ideal for automating complex workflows across departments.

Our Specialized AI Agents Powered by LLMs and Agentic Intelligence

- DokGPT: Retrieves accurate information from documents using natural language queries.

- Karl: Analyzes data and generates visual insights for business users.

- Alan: Summarizes lengthy legal contracts into clear, easy-to-read formats.

- Susan: Removes sensitive information to ensure compliance and data privacy.

- Mike: Verifies formulas, calculations, and document accuracy.

- Jennifer: Manages scheduling, meetings, calls, and routine daily tasks.

- Jarvis: Supports IT operations with ticket triage, root-cause suggestions, and faster resolution.

Every agent is modular, secure, scalable, and easy to integrate into enterprise systems, making them ideal for modern digital workflows.

With a balanced use of LLMs for intelligence and agents for autonomous action, Kanerika builds AI solutions that deliver measurable impact—helping businesses operate more efficiently, adapt faster, and scale with confidence.

Partner with Kanerika and unlock the future of enterprise AI powered by the perfect synergy of AI agents and LLMs.


FAQs

1. What is the difference between an AI Agent and an LLM?

An LLM generates and understands language, while an AI agent uses one or more LLMs to plan tasks, use tools, take actions, and work autonomously.

2. Is an AI agent the same as a chatbot?

No. A chatbot only responds to messages. An AI agent can plan steps, access external systems, update data, and complete tasks without constant input.

3. Can an LLM act on its own?

No. An LLM cannot take actions independently. It needs an AI agent or an external system to perform tasks, use tools, or trigger workflows.

4. How do AI agents use LLMs internally?

Agents rely on LLMs for reasoning, language understanding, and decision-making. The agent then handles memory, tools, execution, and feedback loops.

5. When should I use an LLM instead of an AI agent?

Use an LLM for single-step language tasks like summarisation, writing, sentiment analysis, and code suggestions. Use an AI agent for multi-step workflows.

6. What are common use cases for AI agents?

AI agents support customer service, finance reconciliation, CRM automation, IT operations, data pipeline monitoring, and enterprise workflow automation.

7. Will AI agents replace LLMs?

No. AI agents depend on LLMs. The future involves hybrid systems where LLMs provide intelligence and agents manage planning and execution.