As large language models (LLMs) become more embedded in everyday applications — from chatbots to enterprise automation — inference performance, scalability, and cost are becoming mission-critical. While vLLM has quickly risen as a leading high-throughput inference engine, organizations are increasingly evaluating vLLM alternatives to align with their specific infrastructure and workload needs.

vLLM is known for its efficient GPU memory usage, dynamic batching, and strong support for distributed serving — making it a top choice for many production deployments. However, not every use case fits the same mold. Some environments demand tighter hardware alignment, such as NVIDIA-specific optimizations, while others require lightweight solutions for edge devices, easier integration with cloud infrastructures, or reduced operational complexity and costs.

This blog explores what vLLM is, why alternatives are gaining attention, and how different inference engines compare in real-world scenarios. By the end, you’ll have a clear understanding of how to evaluate the right LLM serving framework for 2025 and beyond.

Key Learnings

- vLLM is a leading high-throughput LLM inference engine, but not always the best fit for every organization or hardware environment.

- Different workloads require different performance trade-offs — some prioritize latency, others prioritize cost efficiency or multi-model scalability.

- Hardware alignment plays a major role: NVIDIA-optimized engines like TensorRT-LLM often outperform general-purpose systems such as vLLM on specific GPU architectures.

- Edge, local, and CPU-based deployments benefit from lightweight engines such as Ollama (or Exllama on consumer GPUs), which have lower resource demands and simpler setup.

- DeepSpeed Inference excels at distributed scaling — ideal for extremely large LLMs that require multi-node GPU orchestration.

- Ecosystem and integration matter — tools like Hugging Face TGI provide smoother deployment for teams already using HF models and pipelines.

- A structured evaluation checklist ensures organizations choose the right engine based on latency, throughput, model compatibility, setup complexity, and cost.

- The future of inference will move toward multi-engine flexibility, automated optimization, and hybrid cloud/edge deployment models.

What Is vLLM?

vLLM is an open-source inference engine designed for large language models (LLMs). Developed originally at the UC Berkeley Sky Computing Lab, it has become a high-throughput, memory-efficient system for LLM serving.

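Key features of vLLM include PagedAttention, which stores the attention key-value (KV) cache in fixed-size blocks, much like virtual-memory pages, so GPU memory is allocated on demand rather than reserved up front for the maximum sequence length; continuous batching, which admits new requests into the running batch at every decoding step instead of waiting for a static batch to finish; and multi-GPU and multi-node support for distributed inference.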
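To make the paging idea behind PagedAttention concrete, here is a minimal pure-Python sketch of paged KV-cache bookkeeping. It is not vLLM's code: the `BlockAllocator` class, the 16-token block size, and the 8-block pool are hypothetical values chosen for illustration, and no attention math is performed. It only shows how a sequence claims a new physical block when its token count crosses a block boundary and returns all of its blocks when it finishes.

```python
import math


class BlockAllocator:
    """Toy paged KV-cache allocator (hypothetical, for illustration only)."""

    def __init__(self, num_blocks: int, block_size: int = 16) -> None:
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))      # physical block ids
        self.block_tables: dict[str, list[int]] = {}    # seq id -> physical blocks
        self.seq_lens: dict[str, int] = {}              # seq id -> tokens stored

    def append_tokens(self, seq_id: str, n_tokens: int) -> None:
        """Grow a sequence by n_tokens, allocating new blocks only when needed."""
        table = self.block_tables.setdefault(seq_id, [])
        new_len = self.seq_lens.get(seq_id, 0) + n_tokens
        needed = math.ceil(new_len / self.block_size) - len(table)
        if needed > len(self.free_blocks):
            raise MemoryError("KV cache exhausted; a real scheduler would preempt")
        for _ in range(needed):
            table.append(self.free_blocks.pop())
        self.seq_lens[seq_id] = new_len

    def free(self, seq_id: str) -> None:
        """Return all blocks of a finished sequence to the pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.seq_lens.pop(seq_id, None)


alloc = BlockAllocator(num_blocks=8, block_size=16)
alloc.append_tokens("req-a", 20)  # 20 tokens -> 2 blocks
alloc.append_tokens("req-b", 5)   # 5 tokens -> 1 block
alloc.append_tokens("req-a", 12)  # 32 tokens total -> still 2 blocks
print(len(alloc.block_tables["req-a"]), len(alloc.free_blocks))  # 2 5
alloc.free("req-a")
print(len(alloc.free_blocks))  # 7
```

Because blocks are allocated on demand, the only waste per sequence is the unused tail of its last block, which is why paging lets more concurrent requests fit into the same GPU memory.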

Its strengths lie in scalability and flexibility: vLLM supports a wide range of model architectures, multi-GPU setups, quantization workflows (e.g., INT8/FP8), and even heterogeneous hardware (GPU, CPU) environments. These capabilities make it well suited to high-traffic, real-time use cases.

However, there are trade-offs. Being hardware-agnostic, vLLM may not match the ultra-optimized performance of vendor-specific inference engines tailored for a single GPU architecture. Additionally, initial setup and tuning may require expertise in batching, memory management, and distributed configuration — making it less ideal for edge or highly constrained hardware environments.

Why Look for Alternatives to vLLM?

As organizations adopt LLMs at scale, the choice of inference engine becomes critical. While vLLM offers strong capabilities, many enterprises are exploring vLLM alternatives because of evolving business and technical drivers. These include hardware specialization (such as NVIDIA vs. Intel vs. Apple Silicon), ease of deployment in edge or embedded environments, cost constraints when scaling large model footprints, and the need to support a wide variety of model types, including smaller models and quantized versions.

For example, some teams require inference on Apple Silicon or heterogeneous hardware stacks with tight power or latency constraints, scenarios where vLLM’s general-purpose optimizations may not be ideal. Benchmark comparisons often show that frameworks like TensorRT-LLM (optimized for NVIDIA GPUs) deliver lower latency than vLLM on that hardware, while more lightweight engines support edge deployments more efficiently.


Top vLLM Alternatives

Organizations seeking alternatives to vLLM have numerous options depending on their specific requirements for performance, hardware compatibility, and operational complexity. Here’s a comprehensive breakdown of the leading alternatives.

1. Text Generation Inference (TGI – Hugging Face)

Open-source inference toolkit from Hugging Face designed for production deployment of large language models. TGI supports numerous model architectures including Llama, Mistral, Falcon, and GPT-based models with optimized token processing and horizontal scaling capabilities.

Key Strengths:

- Seamless integration with Hugging Face ecosystem and model hub

- Strong community support with active development and regular updates

- Compatible with diverse hardware including NVIDIA, AMD, and Intel GPUs

- Production-ready features like token streaming, safetensors loading, and dynamic batching

- REST API compatible with OpenAI specifications for easy migration

- Built-in monitoring and observability tools
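Because TGI exposes an OpenAI-style chat completions route (its Messages API), a plain HTTP client is enough to call it. The sketch below uses only the Python standard library; the `http://localhost:8080` address assumes a local TGI container with its port mapped to 8080, and `build_chat_request` and `chat` are hypothetical helper names, not part of any TGI SDK.

```python
import json
import urllib.request

# Assumption: a TGI server is reachable here (e.g. a container with port 80 mapped to 8080).
TGI_BASE_URL = "http://localhost:8080"


def build_chat_request(prompt: str, max_tokens: int = 128,
                       base_url: str = TGI_BASE_URL) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for TGI's Messages API."""
    payload = {
        "model": "tgi",  # value used in TGI's own examples
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "stream": False,  # ask for one JSON response instead of a token stream
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request and return the first choice's text (needs a live server)."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With a server running, `chat("Summarize PagedAttention in one sentence.")` returns the generated text. Existing OpenAI client libraries can usually be pointed at the same `/v1` base URL instead, which is what keeps migration between compatible engines comparatively cheap.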

Trade-offs:

- May not achieve ultra-low latency compared to vendor-specific optimized engines

- Memory efficiency may trail vLLM’s on some workloads, even though TGI has also adopted PagedAttention, so benchmark both on your own models

- Throughput can be lower for extremely high-concurrency scenarios

- Limited support for some cutting-edge optimization techniques

Ideal Use-Case: Teams already invested in the Hugging Face ecosystem, organizations prioritizing model variety and community support, companies needing flexible hardware options without vendor lock-in.

2. DeepSpeed Inference (Microsoft)

Scalable inference and training library from Microsoft Research featuring ZeRO optimization, memory offloading techniques, and comprehensive multi-node support for distributed inference workloads.

Key Strengths:

- Exceptional support for massive models (100B+ parameters) through intelligent memory management

- ZeRO-Inference reduces GPU memory footprint by keeping model weights in CPU or NVMe memory and streaming them to the GPU, freeing room for larger batch sizes

- Efficient distributed computing across multiple GPUs and nodes

- Kernel fusion optimizations for improved performance

- Integrated with Azure ML for cloud-native deployments

- Strong enterprise support and documentation from Microsoft
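The reason 100B+ models push teams toward DeepSpeed-style multi-GPU sharding or offloading is visible from simple arithmetic: weight memory is roughly parameter count times bytes per parameter. The sketch below is a generic back-of-envelope estimate, not a DeepSpeed API. It counts weights only (no KV cache, activations, or runtime overhead), assumes an even tensor-parallel split, and the 80% headroom factor and power-of-two GPU counts are illustrative assumptions.

```python
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str = "fp16", tp_degree: int = 1) -> float:
    """Approximate per-GPU memory for model weights only, in decimal GB.

    Assumes weights are split evenly across tp_degree GPUs (tensor parallelism).
    """
    if tp_degree < 1:
        raise ValueError("tp_degree must be >= 1")
    total_bytes = num_params * BYTES_PER_PARAM[dtype]
    return total_bytes / tp_degree / 1e9


def min_gpus_for_weights(num_params: float, gpu_mem_gb: float,
                         dtype: str = "fp16", headroom: float = 0.8) -> int:
    """Smallest power-of-two GPU count whose weight shard fits in headroom * gpu_mem_gb.

    The remaining (1 - headroom) share is assumed to be left for KV cache and overhead.
    """
    if gpu_mem_gb <= 0 or headroom <= 0:
        raise ValueError("gpu_mem_gb and headroom must be positive")
    tp = 1
    while weight_memory_gb(num_params, dtype, tp) > gpu_mem_gb * headroom:
        tp *= 2
    return tp


print(weight_memory_gb(175e9, "fp16"))               # 350.0
print(weight_memory_gb(175e9, "fp16", tp_degree=8))  # 43.75
print(min_gpus_for_weights(175e9, gpu_mem_gb=80))    # 8
```

Under these assumptions, a 175B-parameter model in FP16 needs about 350 GB for weights alone, so even 80 GB GPUs require an 8-way split. Offloading, as in ZeRO-Inference, reduces that GPU count at the cost of slower weight transfers from CPU or NVMe.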

Trade-offs:

- Steeper learning curve requiring deeper understanding of distributed systems

- Higher implementation complexity compared to simpler alternatives

- Configuration can be intricate for optimal performance

- May be overkill for smaller models or single-GPU deployments

Ideal Use-Case: Enterprise-scale deployments with multi-billion parameter models, organizations with multi-GPU clusters, companies requiring distributed inference across data centers, Azure cloud users.

3. TensorRT-LLM / NVIDIA Ecosystem

NVIDIA’s purpose-built inference library optimized exclusively for NVIDIA hardware, featuring advanced quantization techniques (INT4, INT8, FP8), in-flight batching, and kernel-level optimizations.
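In-flight batching (TensorRT-LLM's name for what vLLM calls continuous batching) matters most when output lengths vary. The toy simulation below counts decoding steps under static and continuous batching. It assumes every step costs the same, ignores prefill and memory limits, and the function names are hypothetical.

```python
from collections import deque


def static_batching_steps(lengths: list[int], batch_size: int) -> int:
    """Each batch runs until its longest request finishes, then the next batch starts."""
    steps = 0
    for i in range(0, len(lengths), batch_size):
        steps += max(lengths[i:i + batch_size])
    return steps


def continuous_batching_steps(lengths: list[int], batch_size: int) -> int:
    """Finished requests leave after every step and queued ones take their slots."""
    queue = deque(lengths)
    running: list[int] = []  # remaining decode steps for each active request
    steps = 0
    while queue or running:
        while queue and len(running) < batch_size:  # backfill free slots
            running.append(queue.popleft())
        steps += 1  # one decode step for every active request
        running = [r - 1 for r in running if r > 1]  # drop requests that just finished
    return steps


lengths = [8, 2, 2, 2, 8, 2, 2, 2]  # decode steps each request needs
print(static_batching_steps(lengths, batch_size=4))      # 16
print(continuous_batching_steps(lengths, batch_size=4))  # 10
```

With four slots and a mix of long and short requests, static batching holds each batch until its longest request finishes, while continuous batching backfills freed slots and completes the same work in fewer steps.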

Key Strengths:

- Ultra-low latency achieving industry-leading inference speeds

- Exceptional throughput on NVIDIA GPU architectures (A100, H100, L40S)

- Advanced optimization techniques including multi-head attention fusion

- Native support for NVIDIA Triton Inference Server for production deployment

- Comprehensive profiling and optimization tools

- Regular updates aligned with new NVIDIA hardware releases

Trade-offs:

- Complete vendor lock-in to NVIDIA ecosystem

- Limited portability to AMD, Intel, or other GPU vendors

- Requires NVIDIA-specific expertise for optimal configuration

- Higher infrastructure costs due to premium GPU requirements

- Complex setup process with multiple dependencies

Ideal Use-Case: Latency-sensitive production inference workloads, real-time applications requiring sub-100ms response times, organizations standardized on NVIDIA infrastructure, high-throughput serving at scale.

4. FasterTransformer

Open-source high-performance transformer inference library originally developed by NVIDIA. Provides optimized implementations of popular transformer architectures with a focus on GPU acceleration. NVIDIA has since shifted development to TensorRT-LLM, so FasterTransformer is best treated as a legacy option for new projects.

Key Strengths:

- Exceptional performance for transformer-based models on GPUs

- Supports various model architectures including BERT, GPT, T5

- Multi-GPU and multi-node inference capabilities

- Efficient memory management and kernel optimizations

- C++ and Python API flexibility

- Established codebase with existing support for many models, though new development now happens in TensorRT-LLM

Trade-offs:

- Requires more manual customization and tuning than managed solutions

- Documentation can be sparse for advanced configurations

- Less user-friendly than higher-level frameworks

- May need significant engineering effort for production deployment

- Limited built-in serving infrastructure

Ideal Use-Case: Research teams needing maximum performance flexibility, organizations with strong ML engineering capabilities, projects requiring custom transformer optimizations, high-performance inference pipelines.


5. Lightweight Alternatives / Edge-focused (Ollama, Exllama, vLite)

Specialized frameworks optimized for resource-constrained environments including CPUs, Apple Silicon (M1/M2/M3), and edge devices. These tools prioritize accessibility and hardware flexibility over maximum throughput.

Ollama:

- Dead-simple local model deployment with single-command installation

- Optimized for MacBooks and consumer hardware

- Built-in model quantization (4-bit, 8-bit)

- Perfect for development and prototyping
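Ollama's local server also exposes a small REST API, which is handy for scripting prototypes. The sketch below assumes Ollama is running on its default port, 11434, and that a model has already been pulled (for example with `ollama pull llama3.2`; the model name is an assumption). The helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    # "stream": False asks for one JSON reply instead of a stream of partial ones.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Return the generated text (requires Ollama to be running locally)."""
    with urllib.request.urlopen(build_generate_request(model, prompt), timeout=120) as resp:
        return json.load(resp)["response"]
```

Calling `generate("llama3.2", "Explain quantization in one sentence.")` returns the model's reply as a string.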

Exllama:

- Extremely fast inference for quantized models

- Optimized specifically for consumer GPUs

- Excellent performance-per-dollar ratio

- Popular for hobbyist and small-scale deployments

vLite:

- Lightweight inference focused on minimal dependencies

- Efficient CPU inference capabilities

- Ideal for edge computing scenarios

Key Strengths:

- Cost-effective requiring no specialized hardware

- Flexible deployment across diverse hardware platforms

- Quick setup and minimal configuration complexity

- Low operational overhead and maintenance

- Excellent for experimentation and rapid prototyping

Trade-offs:

- Significantly lower throughput for large models compared to GPU solutions

- Limited scalability for production workloads

- May struggle with models exceeding 13B parameters

- Not suitable for high-concurrency serving requirements

- Performance depends heavily on local hardware capabilities

Ideal Use-Case: Local inference on developer machines, edge device deployments, prototyping and experimentation, cost-sensitive applications, offline environments, small-scale production for lightweight models.


6. Ray Serve with Ray AI Runtime

Scalable serving framework built on the Ray distributed computing platform, providing flexible model serving with native support for LLMs and multi-model deployments.

Key Strengths:

- Unified framework for serving multiple models simultaneously

- Excellent horizontal scaling across clusters

- Built-in load balancing and auto-scaling capabilities

- Framework-agnostic supporting PyTorch, TensorFlow, and custom models

- Strong observability with metrics and logging

- Seamless integration with Ray ecosystem for end-to-end ML pipelines
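Ray Serve's built-in load balancing and autoscaling follow a familiar pattern: spread requests across replicas and size the replica count to the queue. The framework-free sketch below illustrates that pattern with a round-robin dispatcher and a queue-depth scaling rule. It is not Ray Serve's API or its exact algorithm; the target number of in-flight requests per replica and the replica bounds are assumptions you would tune.

```python
import math
from itertools import cycle


def assign_round_robin(replicas: list[str], requests: list[str]) -> dict[str, list[str]]:
    """Hand requests to replicas in turn (the simplest load-balancing policy)."""
    if not replicas:
        raise ValueError("at least one replica is required")
    assignment: dict[str, list[str]] = {r: [] for r in replicas}
    for replica, request in zip(cycle(replicas), requests):
        assignment[replica].append(request)
    return assignment


def desired_replicas(ongoing_requests: int, target_per_replica: float,
                     min_replicas: int = 1, max_replicas: int = 8) -> int:
    """Scale so each replica handles about target_per_replica in-flight requests."""
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    wanted = math.ceil(ongoing_requests / target_per_replica)
    # Clamp to the configured bounds so bursts cannot scale without limit.
    return max(min_replicas, min(max_replicas, wanted))


print(assign_round_robin(["replica-0", "replica-1"], ["a", "b", "c"]))
# {'replica-0': ['a', 'c'], 'replica-1': ['b']}
print(desired_replicas(ongoing_requests=9, target_per_replica=2))  # 5
```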

Trade-offs:

- Additional complexity from Ray cluster management

- Learning curve for Ray programming model

- May have higher latency than specialized inference engines

- Resource overhead from Ray runtime

Ideal Use-Case: Organizations running multiple models in production, teams needing unified serving infrastructure, companies already using Ray for data processing, hybrid deployments mixing LLMs with traditional ML models.

7. Triton Inference Server

NVIDIA’s open-source inference serving platform supporting multiple frameworks (TensorFlow, PyTorch, ONNX, TensorRT) with advanced features for production deployment.

Key Strengths:

- Framework-agnostic model serving

- Dynamic batching and model ensembles

- Concurrent model execution on a single GPU

- HTTP/gRPC and C API interfaces

- Kubernetes-native with Helm charts

- Comprehensive metrics and monitoring
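Triton's dynamic batcher trades a little queueing delay for larger batches: requests wait up to a configured delay (`max_queue_delay_microseconds` in the model configuration) so they can be grouped. The offline sketch below reproduces only that grouping logic over known arrival times. The function name is hypothetical, it uses milliseconds for readability, and it ignores execution time and Triton's preferred batch sizes.

```python
def form_batches(arrivals_ms: list[float], max_batch: int,
                 max_delay_ms: float) -> list[list[float]]:
    """Group arrival times into batches.

    A batch is dispatched as soon as it holds max_batch requests, or once its
    oldest request has waited max_delay_ms, whichever comes first.
    """
    batches: list[list[float]] = []
    current: list[float] = []
    for t in sorted(arrivals_ms):
        # The open batch was dispatched at its deadline if this request arrives later.
        if current and t > current[0] + max_delay_ms:
            batches.append(current)
            current = []
        current.append(t)
        if len(current) == max_batch:  # full batch: dispatch immediately
            batches.append(current)
            current = []
    if current:
        batches.append(current)
    return batches


print(form_batches([0, 1, 2, 10, 11, 30], max_batch=4, max_delay_ms=5))
# [[0, 1, 2], [10, 11], [30]]
```

Raising the delay produces fuller batches (better throughput) at the cost of higher tail latency, which is the main knob to tune when configuring dynamic batching.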

Trade-offs:

- Configuration complexity for optimal performance

- Primarily optimized for NVIDIA hardware

- Requires understanding of model formats and conversions

- Steeper learning curve than simpler alternatives

Ideal Use-Case: Multi-model production environments, microservices architectures, Kubernetes deployments, organizations needing framework flexibility, enterprises requiring robust monitoring and observability.

8. OpenLLM (BentoML)

Open-source platform for operating LLMs in production, providing simplified deployment, scaling, and monitoring with support for various model architectures.

Key Strengths:

- User-friendly API for model deployment

- Built-in support for popular open-source models

- Containerized deployments with BentoML

- Auto-scaling and load balancing

- Simple CLI and Python SDK

- Good documentation and examples

Trade-offs:

- Less mature than established alternatives

- May have lower raw performance than specialized engines

- Smaller community compared to major frameworks

- Limited advanced optimization features

Ideal Use-Case: Rapid prototyping to production, teams wanting simplicity over maximum performance, startups needing fast time-to-market, organizations standardizing on BentoML infrastructure.

9. MLC LLM (Machine Learning Compilation)

Universal LLM deployment engine that uses the Apache TVM compiler stack to run models natively on diverse hardware, including WebGPU, mobile devices, and embedded systems.

Key Strengths:

- Broad hardware portability (iOS, Android, WebGPU, embedded)

- Compilation-based optimization for target hardware

- Enables browser-based LLM inference

- Mobile-first optimization techniques

- No server infrastructure required for edge deployment

Trade-offs:

- Compilation process adds deployment complexity

- Performance may not match hardware-specific solutions

- Smaller ecosystem and community

- Limited enterprise support options

Ideal Use-Case: Mobile applications requiring on-device inference, web applications with in-browser LLMs, IoT and embedded systems, privacy-sensitive applications avoiding cloud dependencies.

10. CTranslate2

Fast inference engine for Transformer models that implements a custom runtime optimized for CPU and GPU, with an emphasis on efficiency and quantization.

Key Strengths:

- Strong CPU performance that can make GPU-free serving practical for smaller models

- Aggressive quantization support (INT8, INT16)

- Low memory footprint

- Simple Python API

- Production-ready with minimal dependencies

- Strong translation model support
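The core of INT8 weight quantization is a scale factor that maps floating-point values onto the integer range. The sketch below shows symmetric per-tensor quantization in plain Python. It is a conceptual illustration, not CTranslate2's implementation, which selects quantized execution through its `compute_type` setting and runs optimized kernels; production engines also often use finer-grained (for example per-channel) scales.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor INT8: scale = max|x| / 127, q = round(x / scale)."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero input
    # Clamp to [-127, 127] so the integer range stays symmetric around zero.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Map integers back to approximate floating-point values."""
    return [x * scale for x in q]


weights = [-1.0, 0.0, 0.25, 1.0]
q, scale = quantize_int8(weights)
print(q)  # [-127, 0, 32, 127]
restored = dequantize_int8(q, scale)
print(max(abs(a - b) for a, b in zip(weights, restored)) <= scale / 2)  # True
```

Each value now takes 1 byte instead of 4 (FP32), and the rounding error per value is at most half a scale step.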

Trade-offs:

- Limited to specific model architectures

- Smaller model zoo compared to comprehensive frameworks

- Less flexibility for custom model modifications

- Community smaller than major alternatives

Ideal Use-Case: CPU-based inference deployments, translation services, cost-optimized serving, edge computing with limited resources, applications prioritizing efficiency over cutting-edge features.


Comprehensive Comparison Table

| Platform | Hardware Support | Latency | Throughput | Ease of Use | Best For | License |
|---|---|---|---|---|---|---|
| vLLM | NVIDIA GPUs (plus AMD GPUs, CPUs, and others) | Very Low | Very High | Medium | High-concurrency production | Apache 2.0 |
| TGI (Hugging Face) | Multi-vendor GPUs | Low | High | High | Hugging Face users | Apache 2.0 |
| DeepSpeed | NVIDIA/AMD GPUs | Medium | Very High | Low | Large-scale distributed | Apache 2.0 |
| TensorRT-LLM | NVIDIA only | Ultra Low | Very High | Low | NVIDIA hardware | Apache 2.0 |
| FasterTransformer | NVIDIA GPUs | Very Low | High | Medium | Custom optimization | Apache 2.0 |
| Ollama | CPU/GPU/Apple Silicon | High | Low | Very High | Local development | MIT |
| Ray Serve | Multi-platform | Medium | High | Medium | Multi-model serving | Apache 2.0 |
| Triton | NVIDIA GPUs, CPUs (multi-framework) | Low | High | Medium | Enterprise multi-model | BSD 3-Clause |
| OpenLLM | Multi-platform | Medium | Medium | High | Rapid deployment | Apache 2.0 |
| MLC LLM | Universal (mobile/web) | Medium | Medium | Medium | Edge/mobile inference | Apache 2.0 |
| CTranslate2 | CPU/GPU | Low | Medium | High | CPU-optimized serving | MIT |

Key Selection Criteria

- Choose vLLM when: Maximum throughput and concurrency are critical, using NVIDIA infrastructure, need PagedAttention memory efficiency.

- Choose TGI when: Already using Hugging Face, want broad model support, need hardware flexibility, prioritize ease of use.

- Choose DeepSpeed when: Working with 100B+ parameter models, running multi-GPU clusters, needing distributed inference, or relying on Azure integration.

- Choose TensorRT-LLM when: Latency under 100ms is required, standardized on NVIDIA, willing to invest in optimization.

- Choose lightweight alternatives when: Deploying locally or on-device, cost-sensitive, prototyping, CPU-only environments.

- Choose Ray Serve when: Running multiple models, need unified serving infrastructure, already using Ray ecosystem.

- Choose Triton when: Multi-framework support needed, Kubernetes deployment, require comprehensive monitoring.

- Choose OpenLLM when: Rapid prototyping to production, prefer simplicity, using BentoML infrastructure.

- Choose MLC LLM when: Targeting mobile/web deployment, need browser-based inference, IoT applications.

- Choose CTranslate2 when: Optimizing CPU inference, translation workloads, minimal dependencies required.

How to Choose the Right Inference Engine

Selecting the right inference engine is not a one-size-fits-all decision. The ideal alternative to vLLM depends on the hardware you run, the scale of deployment, your latency vs. throughput needs, and overall operational cost. Start by evaluating your hardware environment — whether you rely on NVIDIA GPUs, CPUs, Apple Silicon, data center clusters, or edge devices. Hardware alignment can drastically impact performance and cost-efficiency.

Next, consider model size and workload characteristics. Some engines are optimized for large-parameter LLMs requiring distributed computing, while others excel in compact or quantized model serving. Assess whether your application demands ultra-low latency (e.g., conversational chatbots) or high-throughput batch processing (e.g., document analysis).

A useful evaluation checklist:

- Does the engine fully support your hardware (NVIDIA / Apple / CPU / TPU)?

- Does it support your model types and required quantization formats?

- Are documentation, community, and ecosystem integrations strong?

- Is setup manageable and tuning straightforward?

- How well does cost scale as workload increases?

- Is there a risk of vendor lock-in?

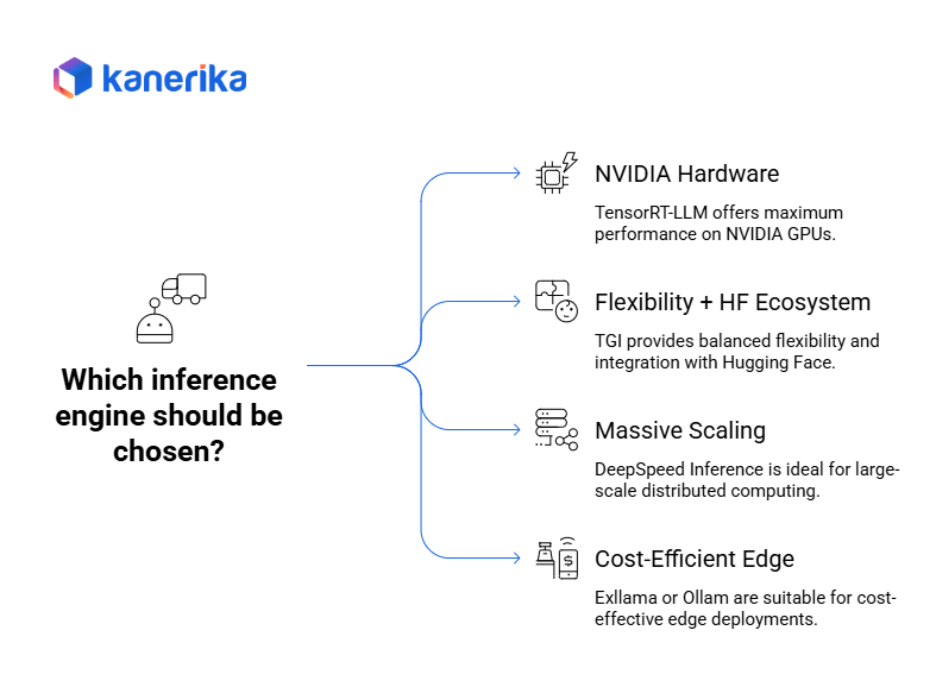

General guidance based on use-case:

- NVIDIA hardware, max performance → TensorRT-LLM

- Balanced flexibility + HF ecosystem → TGI (Text Generation Inference)

- Massive distributed scaling → DeepSpeed Inference

- Cost-efficient edge/local deployments → Exllama or Ollama

By aligning these factors to business priorities, organizations can confidently choose from strong vLLM alternatives that better match their operational and performance goals.

Migration & Deployment Considerations

When migrating from vLLM to an alternative inference engine, organizations should begin by evaluating the cost of change, including licensing, engineering effort, and potential downtime. Identify any capability gaps such as unsupported model architectures, quantization features, or missing API hooks. Consider the integration overhead: will the new engine seamlessly fit into your pipelines and deployment automation?

Deployment success relies heavily on benchmarking and validation. Before full rollout, run pilot tests to compare latency, throughput, GPU/CPU utilization, and memory behavior against vLLM. Always validate output quality to ensure responses remain accurate and aligned with model expectations.
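A pilot benchmark is easier to compare across engines when every candidate is scored with the same metrics. The sketch below computes time to first token (TTFT), mean time per output token (TPOT), and aggregate token throughput from per-request timestamps. How you collect those timestamps (for example from a streaming client) is left as an assumption, the class and function names are hypothetical, and it uses the simple nearest-rank percentile.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestTrace:
    start_s: float              # when the request was sent
    token_times_s: list[float]  # when each output token arrived

    @property
    def ttft(self) -> float:
        """Time to first token."""
        return self.token_times_s[0] - self.start_s

    @property
    def tpot(self) -> float:
        """Mean time per output token after the first."""
        n = len(self.token_times_s)
        if n < 2:
            return 0.0
        return (self.token_times_s[-1] - self.token_times_s[0]) / (n - 1)


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile for p in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(traces: list[RequestTrace], wall_time_s: float) -> dict[str, float]:
    """Aggregate latency and throughput metrics for one benchmark run."""
    ttfts = [t.ttft for t in traces]
    total_tokens = sum(len(t.token_times_s) for t in traces)
    return {
        "ttft_p50_s": percentile(ttfts, 50),
        "ttft_p95_s": percentile(ttfts, 95),
        "mean_tpot_s": sum(t.tpot for t in traces) / len(traces),
        "throughput_tok_per_s": total_tokens / wall_time_s,
    }


# Toy timestamps, only to show the call shape.
traces = [RequestTrace(0.0, [0.25, 0.5, 0.75]), RequestTrace(0.0, [0.5, 1.0])]
print(summarize(traces, wall_time_s=2.0))
```

Running the same script against vLLM and each candidate engine, on the same prompts and concurrency level, gives directly comparable numbers; tail percentiles (p95) usually matter more than averages for user-facing latency.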

Performance optimization techniques — such as quantization, tensor parallelism, and dynamic batching — should be enabled early to realize cost and efficiency gains.

Compatibility matters: ensure the new engine works smoothly with Hugging Face model hubs, REST/gRPC APIs, and your compute environment (cloud, on-prem, or edge devices). Continuous monitoring of resource consumption and user load is essential to avoid production bottlenecks.

With a structured approach, enterprises can deploy new inference engines with confidence — unlocking better performance and lower operational cost while maintaining reliability.

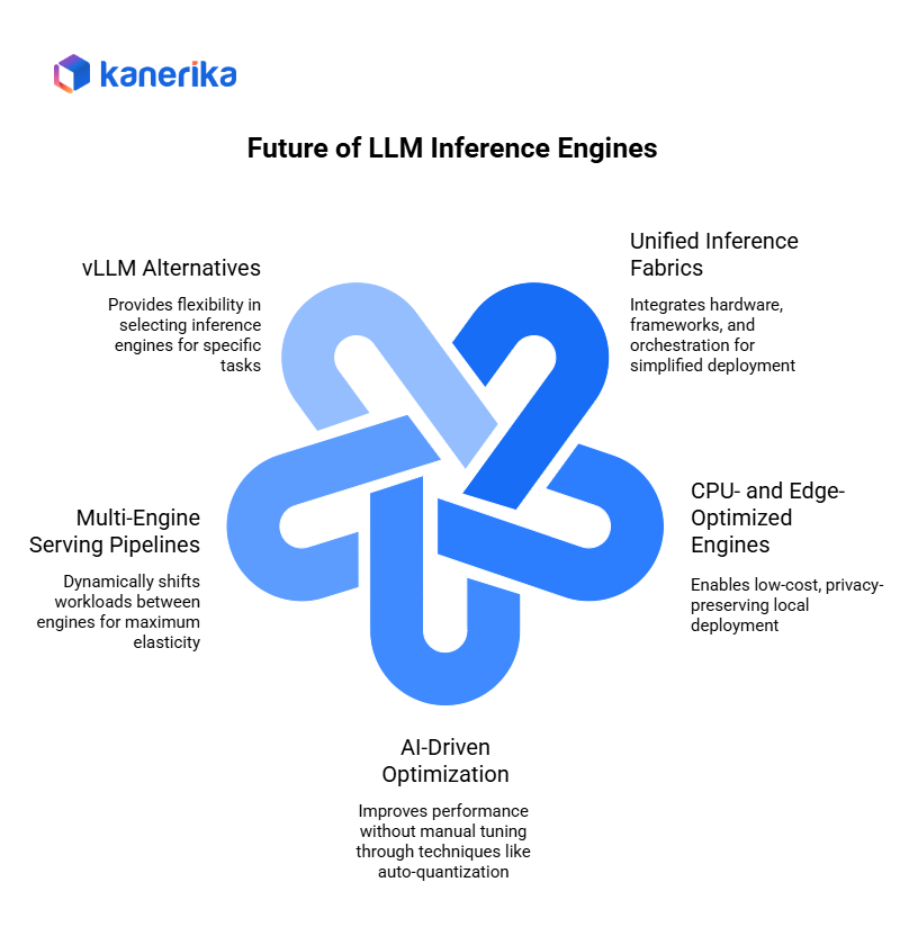

Future Trends in LLM Inference Engines

The next generation of LLM inference will shift toward unified inference fabrics that tightly integrate hardware accelerators, serving frameworks, and orchestration layers — simplifying deployment across diverse environments. As organizations move beyond GPU-only strategies, we will see rapid growth in CPU- and edge-optimized inference engines, enabling low-cost, privacy-preserving, local deployment for real-time applications such as retail automation and on-device assistants.

AI-driven optimization techniques, including auto-quantization, dynamic batching, and KV-cache sharing, will increasingly automate tuning in the serving layer, improving performance without manual effort. (Source: arXiv:2510.09665)

Another key trend is the rise of multi-engine serving pipelines, where workloads dynamically shift between inference engines based on performance targets, user traffic, or available hardware — ensuring maximum elasticity and cost efficiency.
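A multi-engine pipeline needs a routing policy. One simple possibility, sketched below under assumed inputs, sends each request to the cheapest healthy backend whose recently observed p95 latency meets the latency target and falls back to the fastest healthy backend otherwise. The `Backend` fields, names, and cost units are hypothetical; a real router would refresh these figures from live monitoring.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    name: str
    p95_latency_ms: float      # recently observed tail latency (from monitoring)
    cost_per_1k_tokens: float  # hypothetical unit cost
    healthy: bool = True


def pick_backend(backends: list[Backend], latency_slo_ms: float) -> Backend:
    """Cheapest healthy backend meeting the SLO; otherwise the fastest healthy one."""
    healthy = [b for b in backends if b.healthy]
    if not healthy:
        raise RuntimeError("no healthy inference backend available")
    within_slo = [b for b in healthy if b.p95_latency_ms <= latency_slo_ms]
    if within_slo:
        return min(within_slo, key=lambda b: b.cost_per_1k_tokens)
    # No backend meets the target: degrade gracefully to the lowest latency.
    return min(healthy, key=lambda b: b.p95_latency_ms)
```

Preferring cost within the latency target, then speed when nothing qualifies, is one way to express the elasticity described above; other policies might weigh queue depth or model quality instead.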

As these innovations converge, enterprises will gain greater flexibility when selecting vLLM alternatives — choosing the right inference engine for the right task, while maintaining consistency, control, and high-performance serving across hybrid and distributed infrastructures.

Kanerika: Your Reliable Partner for Efficient LLM-based Solutions

Kanerika offers innovative solutions leveraging Large Language Models (LLMs) to address business challenges effectively. By harnessing the power of LLMs, Kanerika enables intelligent decision-making, enhances customer engagement, and drives business growth. These solutions utilize LLMs to process vast amounts of text data, enabling advanced natural language processing capabilities that can be tailored to specific business needs, ultimately leading to improved operational efficiency and strategic decision-making.

Why Choose Us?

1. Expertise: With extensive experience in AI, machine learning, and data analytics, the team at Kanerika offers exceptional LLM-based solutions. We develop strategies tailored to address your unique business needs and deliver high-quality results.

2. Customization: Kanerika understands that one size does not fit all. So, we offer LLM-based solutions that are fully customized to solve your specific challenges and achieve your business objectives effectively.

3. Ethical AI: Trust in Kanerika’s commitment to ethical AI practices. We prioritize fairness, transparency, and accountability in all our solutions, ensuring ethical compliance and building trust with clients and other stakeholders.

4. Continuous Support: Beyond implementation, Kanerika provides ongoing support and guidance to optimize LLM-based solutions. Our team remains dedicated to your success, helping you navigate complexities and maximize the value of AI technologies.

Elevate your business with Kanerika’s LLM-based solutions. Contact us today to schedule a consultation and explore how our innovative approach can transform your organization.

Visit our website to access informative resources, case studies, and success stories showcasing the real-world impact of Kanerika’s LLM-based solutions.


FAQs

1. What are vLLM alternatives?

vLLM alternatives are other inference engines and optimization frameworks that provide high-performance large language model (LLM) serving, often with different trade-offs in speed, memory usage, and deployment options. Common examples include TensorRT-LLM, DeepSpeed, FasterTransformer, and LMDeploy.

2. Why should I consider alternatives to vLLM?

Although vLLM is fast and supports continuous batching, some alternatives may offer better hardware utilization, quantization support, integration with specific platforms (like Nvidia GPUs), or improved cost efficiency for large-scale deployments.

3. Which alternatives are best for Nvidia GPU optimization?

TensorRT-LLM and FasterTransformer are widely recommended for Nvidia hardware because they take full advantage of CUDA, Tensor Cores, and GPU-optimized kernels to maximize throughput and reduce latency.

4. Are any vLLM alternatives designed for CPU-based inference?

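Yes. Intel’s OpenVINO and Microsoft’s ONNX Runtime offer strong CPU optimizations, allowing models, particularly smaller or quantized ones, to run cost-effectively without GPUs. CTranslate2 and Ollama, covered above, are also practical CPU-friendly options.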

5. Do these alternatives support quantization and memory compression?

Most modern inference engines support quantization techniques such as INT8, FP8, and 4-bit methods. DeepSpeed, TensorRT-LLM, and LMDeploy provide advanced quantization and sparsity support to lower memory requirements.

6. Can vLLM alternatives deploy models across distributed environments?

Many alternatives, including DeepSpeed and Ray Serve, support distributed inference and multi-GPU clustering, enabling scalability for high-traffic applications and large parameter models.

7. Which alternative is best for open-source and production-grade usage?

The choice depends on priorities: vLLM excels at high-throughput serving with continuous batching; TensorRT-LLM is ideal for NVIDIA performance; DeepSpeed is strong for distributed and training-to-inference workflows. Organizations typically benchmark multiple frameworks to find the best fit for their hardware and latency targets.