In 2025, the success of AI and machine learning models still hinges on one core factor: the quality and volume of training data. Yet, many organizations continue to struggle with limited, imbalanced, or noisy datasets. That’s where data augmentation has become a game-changing technique. By generating new training samples from existing data, it allows models to learn more effectively without the need for costly data collection.

A recent IDC report states that over 70% of AI failures in production environments are linked to poor or insufficient training data. Companies like Tesla use data augmentation to simulate different driving conditions—such as night, fog, or rain—so their autonomous systems can handle real-world complexities more reliably.

In a world where more data means better AI, what if you could create new training examples without collecting more data? That’s the promise—and power—of data augmentation.

What Is Data Augmentation?

Data augmentation is the process of creating additional training data by applying transformations or modifications to existing datasets. Instead of collecting more raw data—which can be time-consuming, expensive, or even impractical—data augmentation generates new, synthetic variations that preserve the underlying patterns and labels.

For image data, these modifications may include:

- Rotating the image by a certain angle

- Flipping the image horizontally or vertically

- Scaling the image up or down

- Cropping a portion of the image

- Changing the color attributes of the image

For text data, augmentation might include replacing words with synonyms or changing word order without altering the meaning.

The main goal of data augmentation is to improve model generalization and robustness. By exposing machine learning models to a wider variety of training examples, it helps reduce overfitting—a common problem where models perform well on training data but fail to generalize to unseen data. Augmentation is also widely used to address class imbalance, where certain categories may have significantly fewer examples than others.


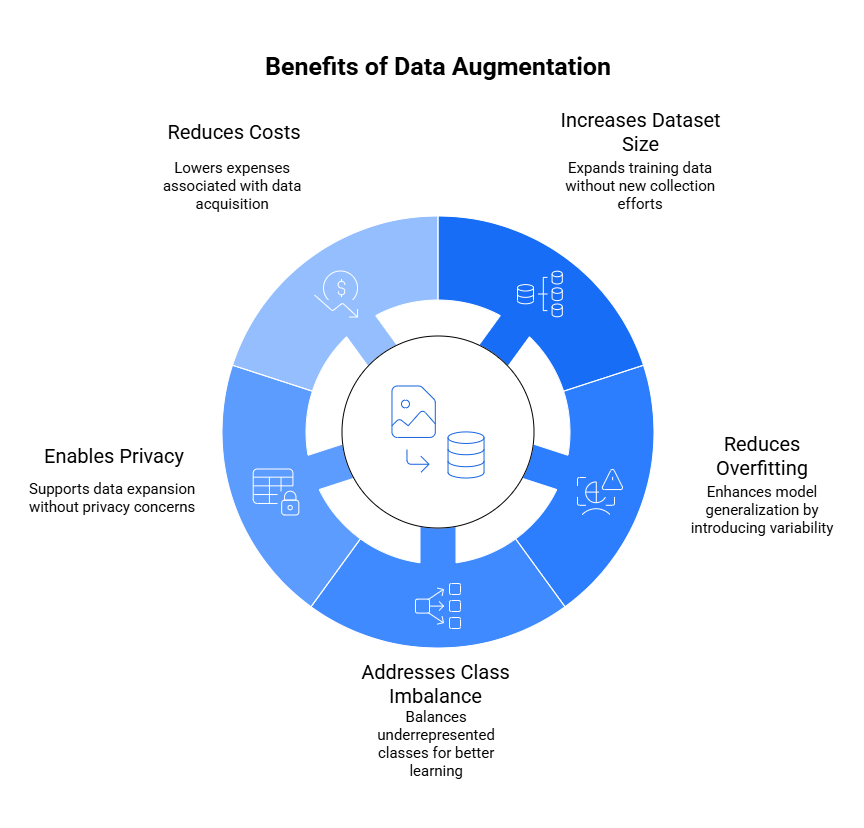

Benefits of Data Augmentation

1. Increases Dataset Size Without New Data Collection

One of the most immediate advantages of data augmentation is that it allows you to expand your training dataset without the need for additional data collection. By applying controlled transformations to existing samples—such as rotating images or paraphrasing text—you can generate diverse variations that help the model learn better. This is particularly useful when collecting new data is expensive, time-consuming, or simply not feasible.

2. Reduces Overfitting and Improves Generalization

When models are trained on small or repetitive datasets, they often memorize patterns rather than learning generalizable insights—a problem known as overfitting. Data augmentation introduces variability into the training process, which helps models generalize more effectively to unseen data. This leads to more robust performance in real-world scenarios.

3. Helps Address Class Imbalance

In many real-world datasets, some classes are underrepresented compared to others. Data augmentation helps balance these classes by generating synthetic samples for the minority categories. This gives the model enough examples to learn from in every class, reducing bias toward the majority class and often improving performance on the minority classes, which plain overall accuracy can hide when one class dominates.

4. Enables Privacy-Conscious Data Expansion

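In sensitive domains like healthcare or finance, collecting new data may raise privacy concerns or regulatory issues. Data augmentation allows teams to enhance datasets without requesting additional personal information, which can ease compliance with data protection laws. Augmented samples derived from personal records may still count as personal data, however, so they typically need the same protections as the originals.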

5. Reduces Data Collection Costs

Lastly, data augmentation significantly lowers the cost associated with acquiring or labeling new data. It enables teams to build high-performing models using fewer resources—making it a cost-effective solution, especially for startups and research-driven organizations.

Data Augmentation Techniques by Domain

Data augmentation strategies vary significantly depending on the type of data involved. Whether you’re working with images, text, audio, tabular data, or time series, there are well-established techniques and tools tailored to each domain. Below is an overview of common augmentation methods categorized by data type.

1. Computer Vision

In computer vision, data augmentation is widely used to improve the robustness of models by simulating various real-world image conditions. Common techniques include image rotation, horizontal or vertical flipping, random cropping, scaling, brightness or contrast adjustments, and adding Gaussian noise. These transformations help the model become invariant to changes in orientation, lighting, and size.

Popular tools:

- Albumentations – A fast, flexible library for image augmentation

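- TensorFlow ImageDataGenerator – Built-in support for common transformations (deprecated in recent TensorFlow releases in favor of Keras preprocessing layers such as RandomFlip and RandomRotation)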

- PyTorch torchvision – Offers transformation pipelines integrated with PyTorch models
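The transformations described above are straightforward to express directly on pixel arrays. The following is a minimal NumPy sketch of three of them (horizontal flip, random crop, and additive Gaussian noise), assuming images are float arrays of shape (height, width, channels) with values in [0, 1]. The function names are illustrative and not part of Albumentations, TensorFlow, or torchvision, which provide tested equivalents.

```python
import numpy as np


def random_horizontal_flip(image: np.ndarray, rng: np.random.Generator, p: float = 0.5) -> np.ndarray:
    """Mirror an (H, W, C) image left-to-right with probability p."""
    if rng.random() < p:
        return image[:, ::-1, :]
    return image


def random_crop(image: np.ndarray, crop_h: int, crop_w: int, rng: np.random.Generator) -> np.ndarray:
    """Cut a crop_h x crop_w window from a random position inside the image."""
    h, w = image.shape[:2]
    top = int(rng.integers(0, h - crop_h + 1))
    left = int(rng.integers(0, w - crop_w + 1))
    return image[top:top + crop_h, left:left + crop_w, :]


def add_gaussian_noise(image: np.ndarray, sigma: float, rng: np.random.Generator) -> np.ndarray:
    """Add zero-mean Gaussian noise and clip back to the valid [0, 1] range."""
    noisy = image + rng.normal(0.0, sigma, size=image.shape)
    return np.clip(noisy, 0.0, 1.0)


rng = np.random.default_rng(seed=0)
image = rng.random((32, 32, 3))  # stand-in for a real RGB image scaled to [0, 1]
augmented = add_gaussian_noise(
    random_crop(random_horizontal_flip(image, rng), 28, 28, rng), sigma=0.05, rng=rng
)
print(augmented.shape)  # (28, 28, 3)
```

Passing one shared `np.random.Generator` through every function keeps the whole pipeline reproducible from a single seed.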

2. Natural Language Processing (NLP)

Text data poses unique challenges, but several augmentation techniques help diversify language input while preserving meaning. Methods include synonym replacement, random word insertion or deletion, back translation (translating to another language and back), and paraphrasing using language models.

Popular tools:

- NLPAug – A Python library for NLP-based augmentation

- TextAttack – Designed for adversarial training and augmentation

- OpenAI GPT-based paraphrasing – For generating diverse language variations
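Synonym replacement is the simplest of these methods to sketch. The version below is a minimal pure-Python illustration, not the NLPAug API: `SYNONYMS` is a hypothetical toy dictionary (real tools can draw synonyms from WordNet or embeddings), and tokenization is a plain whitespace split, so punctuation attached to a word prevents a match.

```python
import random

# Hypothetical toy synonym table; real pipelines use a lexical resource such as WordNet.
SYNONYMS: dict[str, list[str]] = {
    "quick": ["fast", "speedy"],
    "happy": ["glad", "cheerful"],
    "big": ["large", "huge"],
}


def synonym_replacement(sentence: str, n: int, rng: random.Random) -> str:
    """Replace up to n distinct words that have an entry in SYNONYMS."""
    words = sentence.split()
    # Positions of words we know synonyms for, visited in random order
    candidates = [i for i, w in enumerate(words) if w.lower() in SYNONYMS]
    rng.shuffle(candidates)
    for i in candidates[:n]:
        words[i] = rng.choice(SYNONYMS[words[i].lower()])
    return " ".join(words)


rng = random.Random(42)
print(synonym_replacement("the quick dog looked happy", n=2, rng=rng))
```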

3. Audio and Speech

In speech and audio applications, augmentation enhances model robustness against background noise and different speaking conditions. Techniques include pitch shifting, injecting background noise, time stretching, and volume scaling.

Popular tools:

- Torchaudio – PyTorch-native audio augmentation tools

- Audiomentations – A lightweight library for common audio transformations
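Noise injection and volume scaling can be sketched in a few lines of NumPy. This is an illustrative version, not the Torchaudio or Audiomentations API; it assumes mono waveforms stored as float arrays and a noise clip at least as long as the signal, and it uses a synthetic tone and white noise as hypothetical stand-ins for real speech and background recordings.

```python
import numpy as np


def add_noise_at_snr(signal: np.ndarray, noise: np.ndarray, snr_db: float) -> np.ndarray:
    """Mix noise into signal so the result has the requested signal-to-noise ratio in dB."""
    noise = noise[: len(signal)]  # assumes the noise clip is at least as long as the signal
    signal_power = np.mean(signal ** 2)
    noise_power = np.mean(noise ** 2)
    # Choose scale so that 10 * log10(signal_power / (scale**2 * noise_power)) == snr_db
    scale = np.sqrt(signal_power / (noise_power * 10 ** (snr_db / 10)))
    return signal + scale * noise


def scale_volume(signal: np.ndarray, gain_db: float) -> np.ndarray:
    """Apply a gain in decibels (positive = louder, negative = quieter)."""
    return signal * 10 ** (gain_db / 20)


sr = 16_000
t = np.arange(sr) / sr
speech_like = 0.5 * np.sin(2 * np.pi * 220 * t)  # 1-second tone standing in for speech
background = np.random.default_rng(0).normal(size=sr)  # white noise standing in for background audio
noisy = add_noise_at_snr(speech_like, background, snr_db=10.0)
quieter = scale_volume(noisy, gain_db=-6.0)
```

Specifying the noise level as a signal-to-noise ratio rather than a raw amplitude keeps the difficulty consistent across recordings of different loudness.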

4. Tabular Data

For structured datasets, data augmentation helps with class imbalance and model stability. Techniques such as SMOTE (Synthetic Minority Over-sampling Technique), ADASYN, noise injection, and value permutation are commonly used to generate realistic synthetic data.

Popular tools:

- imbalanced-learn – Implements SMOTE, ADASYN, and other resampling techniques

- pandas-aug – Lightweight tool for tabular transformations

- SDV (Synthetic Data Vault) – A powerful toolkit for generating synthetic tabular data
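To show what SMOTE actually does, here is a from-scratch sketch of its core step: pick a minority-class sample, pick one of its k nearest minority neighbours, and create a new point at a random position on the line segment between them. It assumes numeric features in a NumPy array and a small minority class (all pairwise distances are computed at once); for real work, imbalanced-learn's `SMOTE` class and its `fit_resample` method are the practical route.

```python
import numpy as np


def smote(minority: np.ndarray, n_new: int, k: int, rng: np.random.Generator) -> np.ndarray:
    """Generate n_new synthetic rows by interpolating between minority samples and their nearest minority neighbours."""
    if k >= len(minority):
        raise ValueError("k must be smaller than the number of minority samples")
    # Pairwise Euclidean distances between all minority samples
    diffs = minority[:, None, :] - minority[None, :, :]
    dists = np.sqrt((diffs ** 2).sum(axis=-1))
    np.fill_diagonal(dists, np.inf)  # a sample is not its own neighbour
    neighbours = np.argsort(dists, axis=1)[:, :k]  # k nearest neighbours per sample

    synthetic = np.empty((n_new, minority.shape[1]))
    for i in range(n_new):
        base = rng.integers(len(minority))
        neighbour = neighbours[base, rng.integers(k)]
        gap = rng.random()  # uniform position along the segment, in [0, 1)
        synthetic[i] = minority[base] + gap * (minority[neighbour] - minority[base])
    return synthetic


rng = np.random.default_rng(0)
minority = rng.normal(loc=[5.0, 5.0], scale=0.5, size=(10, 2))  # toy minority class
new_rows = smote(minority, n_new=40, k=3, rng=rng)
```

Because every synthetic row lies between two real minority samples, SMOTE fills in the minority region rather than duplicating points, which is what distinguishes it from plain random oversampling.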

5. Time Series

Time series data requires techniques that maintain temporal consistency. Methods include window slicing (segmenting data), jittering (adding noise), scaling, and time warping (changing the speed of sequences).

Popular tools:

- tsaug – Tailored for time series augmentation

- augmenty – A spaCy-based augmentation library; it is designed for text rather than time series, so it fits better alongside the NLP tools above
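Jittering, scaling, window slicing, and time warping can be sketched in NumPy as follows. This is an illustrative sketch rather than the tsaug API; it assumes a univariate series in a 1-D array, and the random warping path here is a simple noisy one, whereas many implementations use smoother curves such as splines.

```python
import numpy as np


def jitter(x: np.ndarray, sigma: float, rng: np.random.Generator) -> np.ndarray:
    """Add small Gaussian noise to every time step."""
    return x + rng.normal(0.0, sigma, size=x.shape)


def magnitude_scale(x: np.ndarray, sigma: float, rng: np.random.Generator) -> np.ndarray:
    """Multiply the whole series by one random factor drawn around 1.0."""
    return x * rng.normal(1.0, sigma)


def window_slice(x: np.ndarray, ratio: float, rng: np.random.Generator) -> np.ndarray:
    """Take a random contiguous window covering `ratio` of the series and stretch it back to full length."""
    n = len(x)
    win = max(2, int(n * ratio))
    start = int(rng.integers(0, n - win + 1))
    window = x[start:start + win]
    # Linear interpolation resamples the window to the original length
    return np.interp(np.linspace(0, win - 1, n), np.arange(win), window)


def time_warp(x: np.ndarray, sigma: float, rng: np.random.Generator) -> np.ndarray:
    """Resample the series along a random monotonic time path that keeps both endpoints fixed."""
    n = len(x)
    speeds = np.clip(rng.normal(1.0, sigma, size=n), 0.1, None)  # local playback speed, kept positive
    path = np.cumsum(speeds)
    path = (path - path[0]) / (path[-1] - path[0]) * (n - 1)  # rescale to run from 0 to n - 1
    return np.interp(path, np.arange(n), x)


rng = np.random.default_rng(0)
series = np.sin(np.linspace(0, 4 * np.pi, 200))
augmented = [
    jitter(series, 0.03, rng),
    magnitude_scale(series, 0.1, rng),
    window_slice(series, 0.9, rng),
    time_warp(series, 0.2, rng),
]
```

Every function returns a series of the original length, so augmented samples can be fed to the same model without changing its input shape.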

Choosing the right data augmentation method depends on your domain, model architecture, and training goals. Properly applied, these techniques can significantly enhance model performance and reliability.


Types of Data Augmentation

Data augmentation methods vary by data type, but each one increases the size and variability of an existing dataset. These methods introduce perturbations or transformations that help make your models more generalizable and robust to changes in input data.

1. Image Data Augmentation

For your image datasets, data augmentation can include a range of transformations applied to original images. These manipulations often include:

- Rotations: Modifying the orientation of images.

- Flips: Mirroring images horizontally or vertically.

- Scaling: Enlarging or reducing the size of images.

- Cropping: Extracting subparts of images for training.

- Color Jittering: Adjusting image colors by changing brightness, contrast, saturation, and hue.
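Rotation and scaling are the entries in this list that move pixels rather than change their values. A minimal NumPy sketch, assuming (height, width, channels) arrays: rotation is restricted here to multiples of 90 degrees, which need no interpolation, and scaling uses nearest-neighbour sampling followed by a center crop or zero padding so the output keeps the input size. Arbitrary-angle rotation and smoother resampling are better left to an image library.

```python
import numpy as np


def random_rotate90(image: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Rotate by a random multiple of 90 degrees; for non-square images, 90/270 degrees swap height and width."""
    k = int(rng.integers(0, 4))
    return np.rot90(image, k=k, axes=(0, 1))


def zoom(image: np.ndarray, factor: float) -> np.ndarray:
    """Scale an (H, W, C) image by `factor` with nearest-neighbour sampling, then crop or pad to the original size."""
    h, w = image.shape[:2]
    new_h, new_w = max(1, int(round(h * factor))), max(1, int(round(w * factor)))
    # Map each output row/column back to its nearest source row/column
    rows = (np.arange(new_h) / factor).astype(int).clip(0, h - 1)
    cols = (np.arange(new_w) / factor).astype(int).clip(0, w - 1)
    scaled = image[rows][:, cols]
    out = np.zeros_like(image)
    if factor >= 1:
        # Zoom in: keep the central h x w region
        top, left = (new_h - h) // 2, (new_w - w) // 2
        out[:] = scaled[top:top + h, left:left + w]
    else:
        # Zoom out: place the smaller image in the center, surrounded by zeros
        top, left = (h - new_h) // 2, (w - new_w) // 2
        out[top:top + new_h, left:left + new_w] = scaled
    return out


rng = np.random.default_rng(0)
img = rng.random((8, 8, 3))
zoomed_in, zoomed_out = zoom(img, 2.0), zoom(img, 0.5)
rotated = random_rotate90(img, rng)
```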

2. Text Data Augmentation

When dealing with text, you can augment your data by altering the textual input to generate diverse linguistic variations. These alterations include:

- Synonym Replacement: Swapping words with their synonyms to vary the wording while preserving meaning.

- Back Translation: Translating text to a different language and back to the original language.

- Random Insertion: Adding new words that fit the context of the sentence.

- Random Deletion: Removing words without distorting the sentence’s overall sense.
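Random insertion and random deletion are two of the operations popularized by Easy Data Augmentation (EDA), alongside synonym replacement and random swap. A minimal pure-Python sketch, assuming whitespace-tokenized sentences; `SYNONYMS` is a hypothetical toy dictionary standing in for a real synonym source such as WordNet.

```python
import random

# Hypothetical toy synonym table used to choose words to insert.
SYNONYMS: dict[str, list[str]] = {"movie": ["film"], "great": ["excellent", "superb"], "plot": ["story"]}


def random_deletion(words: list[str], p: float, rng: random.Random) -> list[str]:
    """Drop each word with probability p, but never return an empty sentence."""
    kept = [w for w in words if rng.random() > p]
    return kept if kept else [rng.choice(words)]


def random_insertion(words: list[str], n: int, rng: random.Random) -> list[str]:
    """Insert n synonyms of words already in the sentence at random positions."""
    out = list(words)
    for _ in range(n):
        candidates = [w for w in out if w in SYNONYMS]
        if not candidates:
            break  # nothing we know a synonym for
        synonym = rng.choice(SYNONYMS[rng.choice(candidates)])
        out.insert(rng.randint(0, len(out)), synonym)  # randint is inclusive, so appending is possible
    return out


rng = random.Random(7)
tokens = "the movie had a great plot".split()
print(" ".join(random_insertion(tokens, n=1, rng=rng)))
print(" ".join(random_deletion(tokens, p=0.2, rng=rng)))
```

Keeping the deletion probability and insertion count small matters: the sentence should remain readable enough that its original label still applies.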

3. Audio Data Augmentation

To enhance your audio datasets, consider applying these common audio data augmentation techniques:

- Noise Injection: Adding background noise to audio clips to mimic real-life scenarios.

- Time Stretching: Changing the speed of the audio without affecting its pitch.

- Pitch Shifting: Altering the pitch of the audio, either higher or lower.

- Dynamic Range Compression: Reducing the volume of loud sounds or amplifying quiet sounds to normalize audio levels.
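Dynamic range compression is the least familiar item in this list, so here is a minimal sketch of a static downward compressor: any sample louder than a threshold is attenuated so that each decibel above the threshold yields only 1/ratio decibels of output. The threshold and ratio values are illustrative assumptions, and real compressors smooth the gain over time with attack and release settings instead of acting on each sample independently.

```python
import numpy as np


def compress_dynamic_range(signal: np.ndarray, threshold_db: float = -20.0, ratio: float = 4.0) -> np.ndarray:
    """Static compressor: above the threshold, output level rises only 1/ratio dB per input dB."""
    eps = 1e-10  # avoids log10(0) for silent samples
    level_db = 20 * np.log10(np.abs(signal) + eps)
    over = np.maximum(level_db - threshold_db, 0.0)  # how far each sample exceeds the threshold
    gain_db = -over * (1 - 1 / ratio)  # attenuation applied only above the threshold
    return signal * 10 ** (gain_db / 20)  # sign of each sample is preserved


x = np.array([0.01, 0.1, 1.0, -1.0])
y = compress_dynamic_range(x, threshold_db=-20.0, ratio=4.0)
```

Applying a makeup gain afterwards (for example with a simple decibel gain) brings the overall level back up, which is how compression narrows the gap between quiet and loud passages.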

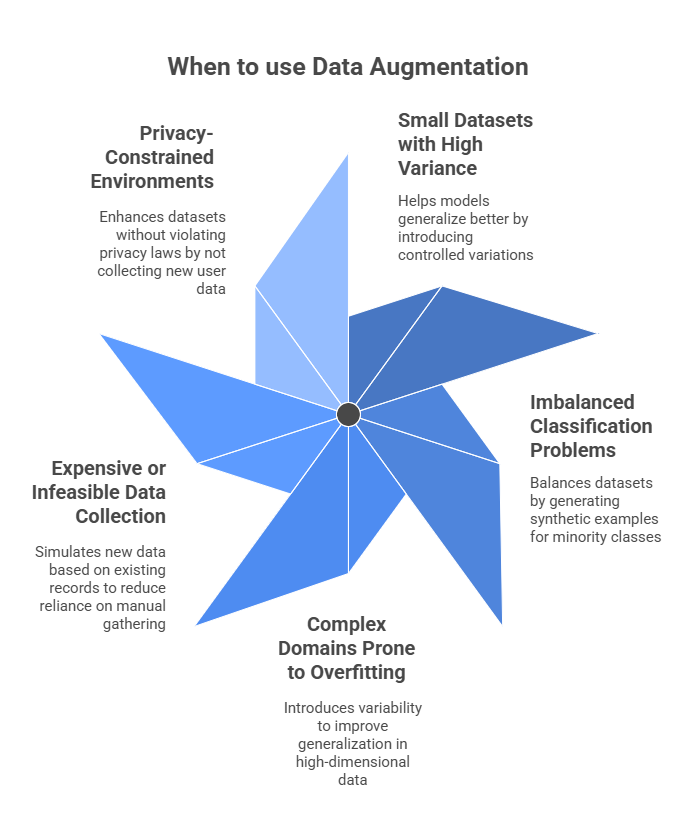

When to Use Data Augmentation

Data augmentation is a valuable technique in a range of real-world machine learning scenarios. Below are the most common situations where it becomes essential:

1. Small Datasets with High Variance

When training data is limited in size or contains high variability, models are more likely to overfit—memorizing patterns instead of generalizing. Data augmentation helps artificially expand the dataset by introducing controlled variations, enabling the model to learn more robust features without additional data collection.

2. Imbalanced Classification Problems

In classification tasks where one or more classes are significantly underrepresented, data augmentation can help balance the dataset. Techniques like SMOTE or targeted transformations generate synthetic examples for minority classes, improving the model’s ability to make accurate and unbiased predictions.

3. Complex Domains Prone to Overfitting

Fields such as medical imaging, speech recognition, and natural language processing often involve high-dimensional data and subtle distinctions between classes. These conditions make models prone to overfitting. Augmentation introduces variability that helps the model generalize better to unseen data.

4. When Data Collection Is Expensive or Infeasible

In industries like healthcare, aerospace, or the legal sector, collecting large volumes of labeled data can be costly, time-consuming, or restricted. Data augmentation allows teams to simulate new data based on existing records, reducing reliance on manual data gathering while still enhancing model performance.

5. Privacy-Constrained Environments

In highly regulated sectors such as finance or healthcare, data augmentation provides a way to enrich existing datasets without collecting new user data, which can make compliance with privacy laws easier. Augmented samples derived from personal records may still fall under those laws, however, so they should be handled with the same care as the source data.

Techniques and Methods for Data Augmentation

Data augmentation techniques are essential in improving the performance of your machine learning models by increasing the diversity of your training set. This section guides you through several key techniques that you can apply.

1. Geometric Transformations

You can use geometric transformations to alter the spatial structure of your image data. These include:

- Rotation: Rotating the image by a certain angle.

- Translation: Shifting the image horizontally or vertically.

- Scaling: Zooming in or out of the image.

- Flipping: Mirroring the image either vertically or horizontally.

- Cropping: Removing sections of the image.
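Translation is easy to implement directly. The sketch below assumes (height, width, channels) NumPy arrays and fills the vacated border with zeros; reflecting or repeating edge pixels are common alternatives. The function names are illustrative.

```python
import numpy as np


def translate(image: np.ndarray, dx: int, dy: int) -> np.ndarray:
    """Shift right by dx and down by dy pixels (negative values shift left/up); vacated pixels become zero."""
    h, w = image.shape[:2]
    out = np.zeros_like(image)
    if abs(dx) >= w or abs(dy) >= h:
        return out  # the image has been shifted completely out of frame
    # Source and destination slices describing the overlapping region
    src_y = slice(max(0, -dy), min(h, h - dy))
    dst_y = slice(max(0, dy), min(h, h + dy))
    src_x = slice(max(0, -dx), min(w, w - dx))
    dst_x = slice(max(0, dx), min(w, w + dx))
    out[dst_y, dst_x] = image[src_y, src_x]
    return out


def random_translate(image: np.ndarray, max_shift: int, rng: np.random.Generator) -> np.ndarray:
    """Shift by a random offset in [-max_shift, max_shift] along each axis."""
    dx, dy = (int(v) for v in rng.integers(-max_shift, max_shift + 1, size=2))
    return translate(image, dx, dy)


img = np.arange(25, dtype=float).reshape(5, 5, 1)
shifted = translate(img, dx=1, dy=2)
```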

2. Photometric Transformations

Photometric transformations adjust the color properties of images to create variant data samples. Consider the following:

- Adjusting Brightness: Changing the light levels of an image.

- Altering Contrast: Modifying the difference in luminance or color that makes an object distinguishable.

- Saturation Changes: Varying the intensity of color in the image.

- Noise Injection: Adding random noise to pixel values to simulate real-world imperfections such as sensor noise.
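The brightness, contrast, and saturation adjustments above reduce to simple arithmetic on pixel values. A minimal NumPy sketch, assuming RGB float images in [0, 1]: contrast is computed around the mean of the whole image, and saturation blends each pixel with its greyscale value using the ITU-R BT.601 luma weights, which is one common convention rather than the only one.

```python
import numpy as np


def adjust_brightness(image: np.ndarray, factor: float) -> np.ndarray:
    """Scale all pixel values; factor > 1 brightens, factor < 1 darkens."""
    return np.clip(image * factor, 0.0, 1.0)


def adjust_contrast(image: np.ndarray, factor: float) -> np.ndarray:
    """Push pixels away from (factor > 1) or toward (factor < 1) the image's mean value."""
    mean = image.mean()
    return np.clip(mean + factor * (image - mean), 0.0, 1.0)


def adjust_saturation(image: np.ndarray, factor: float) -> np.ndarray:
    """Blend each pixel with its greyscale value; factor 0 gives greyscale, 1 leaves the image unchanged."""
    grey = image @ np.array([0.299, 0.587, 0.114])  # ITU-R BT.601 luma weights
    grey = grey[..., None]  # add a channel axis so it broadcasts against RGB
    return np.clip(grey + factor * (image - grey), 0.0, 1.0)


rng = np.random.default_rng(0)
img = rng.random((4, 4, 3))
# In training, each factor would typically be sampled per image, e.g. uniformly from [0.8, 1.2]
jittered = adjust_saturation(adjust_contrast(adjust_brightness(img, 1.1), 0.9), 1.2)
```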

3. Random Erasing

Random erasing is a technique in which you randomly select a rectangular region of an image and overwrite its pixels with random values. Because part of the object is hidden, the model cannot rely on any single region and must also learn from less prominent features, which makes it more robust to occlusion.
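A minimal NumPy sketch of random erasing, loosely modeled on the idea behind `torchvision.transforms.RandomErasing` but not reproducing its API. It assumes float images of shape (height, width, channels); the area and aspect-ratio ranges are illustrative assumptions, and a few retries handle sampled rectangles that do not fit inside the image.

```python
import numpy as np


def random_erasing(
    image: np.ndarray,
    rng: np.random.Generator,
    p: float = 0.5,
    area_range: tuple[float, float] = (0.02, 0.33),
    aspect_range: tuple[float, float] = (0.3, 3.3),
) -> np.ndarray:
    """With probability p, overwrite one random rectangle of an (H, W, C) float image with uniform noise."""
    if rng.random() >= p:
        return image
    h, w = image.shape[:2]
    for _ in range(10):  # retry if the sampled rectangle does not fit
        area = rng.uniform(*area_range) * h * w  # target area as a fraction of the image
        aspect = rng.uniform(*aspect_range)  # height / width of the rectangle
        eh = int(round(np.sqrt(area * aspect)))
        ew = int(round(np.sqrt(area / aspect)))
        if 0 < eh < h and 0 < ew < w:
            top = int(rng.integers(0, h - eh + 1))
            left = int(rng.integers(0, w - ew + 1))
            out = image.copy()  # leave the caller's array untouched
            out[top:top + eh, left:left + ew] = rng.random((eh, ew, image.shape[2]))
            return out
    return image


rng = np.random.default_rng(0)
img = np.zeros((32, 32, 3))
erased = random_erasing(img, rng, p=1.0)
```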


Implementation Strategies

In implementing data augmentation, you need to decide between augmenting your data offline before training or online during the training process, as well as tailor strategies for deep learning applications.

Offline vs. Online Augmentation

Offline Augmentation: Here, you augment your dataset prior to training.

- Pros:

  - Predictable increase in dataset size.

  - One-time computational cost.

- Cons:

  - Increased storage requirements.

  - Limited variation, since every epoch sees the same fixed set of augmented samples.

Online Augmentation: This approach applies random transformations as new batches of data are fed into the model during training.

- Pros:

  - Effectively unlimited variation, since transformations are sampled anew for every batch.

  - No extra storage, because augmented samples are generated on the fly and then discarded.

- Cons:

  - Higher computational load during training.

  - Potentially slower training per epoch.
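The difference between the two strategies shows up clearly in code. In this hypothetical NumPy sketch, `augment` stands in for any random transform; the offline version materializes a fixed, enlarged dataset once, while the online generator samples fresh transformations each time a batch is requested, so different epochs see different variants.

```python
from collections.abc import Iterator

import numpy as np


def augment(image: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Hypothetical random transform: horizontal flip with probability 0.5 plus mild Gaussian noise."""
    if rng.random() < 0.5:
        image = image[:, ::-1]
    return np.clip(image + rng.normal(0.0, 0.02, size=image.shape), 0.0, 1.0)


def offline_augment(images: list[np.ndarray], copies: int, rng: np.random.Generator) -> list[np.ndarray]:
    """Offline: compute a fixed, enlarged dataset once; it must be stored, and every epoch reuses it."""
    return [augment(img, rng) for img in images for _ in range(copies)]


def online_batches(images: list[np.ndarray], batch_size: int, rng: np.random.Generator) -> Iterator[np.ndarray]:
    """Online: apply new random transforms every time a batch is produced."""
    order = rng.permutation(len(images))
    for start in range(0, len(images), batch_size):
        idx = order[start:start + batch_size]
        yield np.stack([augment(images[i], rng) for i in idx])


rng = np.random.default_rng(0)
dataset = [rng.random((8, 8)) for _ in range(10)]
stored = offline_augment(dataset, copies=3, rng=rng)  # 30 arrays that must be kept in memory or on disk
for epoch in range(2):
    for batch in online_batches(dataset, batch_size=4, rng=rng):
        pass  # a training step would consume `batch` here
```

In PyTorch, attaching random transforms to a `Dataset` gives the online pattern, because the transforms run every time a sample is loaded.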

Data Augmentation in Deep Learning

For deep learning, the strength of augmentation should match the model's capacity: large models trained on small datasets overfit easily and usually benefit from more aggressive augmentation, while overly strong augmentation can make a smaller model underfit.

- Image Data:

  - Rotation, Scaling, Cropping: These basic transformations help the model generalize across different angles and sizes of objects.

  - Color Jittering: Adjusting brightness, contrast, and saturation makes the model robust to changing lighting conditions.

- Text Data:

  - Synonym Replacement: Swapping words with their synonyms can broaden the model’s understanding of language nuances.

  - Back-translation: Translating text to another language and back to the original can create paraphrased versions, enhancing the model’s grasp of different forms of expression.

- Audio Data:

  - Noise Injection: Adding background noise trains the model to focus on the relevant parts of the audio.

  - Pitch Shifting: Varying the pitch helps the model recognize speech patterns across different voice pitches.

In each case, carefully choose augmentation techniques that preserve the meaning of the data. Excessive alteration can produce samples that no longer match their labels, which misleads training.

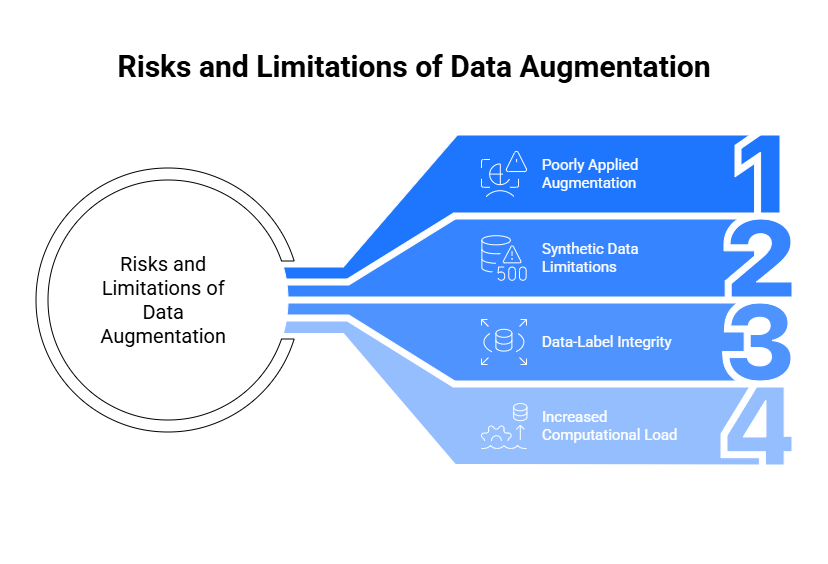

Risks and Limitations of Data Augmentation

While data augmentation offers clear advantages, it also comes with specific risks and constraints. Understanding these limitations is essential to applying augmentation techniques effectively.

1. Poorly Applied Augmentation Can Harm Model Performance

If transformations are overused or irrelevant to the task, they may introduce distortions or inconsistencies in the data. This can lead to reduced model accuracy and generalization.

2. Synthetic Data May Miss Real-World Edge Cases

Augmented data is generated based on existing patterns. As a result, it may fail to capture rare but important scenarios that only appear in real-world conditions. This limits the model’s ability to handle unexpected inputs.

3. Risk to Data-Label Integrity

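It’s critical that any augmentation preserves the relationship between input and label. A transformation that looks harmless can change what a sample means: rotating a handwritten 6 by 180 degrees makes it look like a 9, an aggressive rotation of a medical image can distort anatomically meaningful orientation, and a careless paraphrase can reverse the sentiment of a sentence. In each case the original label no longer applies, which introduces label noise and leads to incorrect learning.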
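When the label is spatial, as in object detection, preserving the input-label relationship means transforming the label together with the input. A minimal sketch, assuming axis-aligned boxes stored as `[x_min, y_min, x_max, y_max]` in pixel units with an exclusive right and bottom edge: a horizontal flip mirrors the image, and each box must be mirrored too, or it will point at the wrong region. Libraries such as Albumentations can apply these box updates automatically when boxes are passed alongside the image.

```python
import numpy as np


def hflip_with_boxes(image: np.ndarray, boxes: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Flip an (H, W, C) image left-to-right and update [x_min, y_min, x_max, y_max] boxes to match."""
    w = image.shape[1]
    flipped = image[:, ::-1]
    new_boxes = boxes.copy()
    # Column c moves to w - 1 - c, so a box covering [x_min, x_max) moves to [w - x_max, w - x_min)
    new_boxes[:, 0] = w - boxes[:, 2]
    new_boxes[:, 2] = w - boxes[:, 0]
    # y coordinates are unaffected by a horizontal flip
    return flipped, new_boxes


img = np.zeros((10, 20, 3))
img[2:5, 3:7] = 1.0  # an "object" occupying rows 2-4 and columns 3-6
boxes = np.array([[3, 2, 7, 5]])
flipped_img, flipped_boxes = hflip_with_boxes(img, boxes)
print(flipped_boxes.tolist())  # [[13, 2, 17, 5]]
```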

4. Increased Computational Load

Augmenting datasets can significantly increase their size, leading to longer training times and greater demand for computational resources. This can slow down development and deployment cycles if not managed properly.

Being aware of these risks allows practitioners to apply data augmentation more thoughtfully—maximizing benefits while avoiding common pitfalls.

Real-World Use Cases of Data Augmentation

Data augmentation is not just a theoretical concept—it’s actively transforming real-world applications across industries by improving model accuracy, reliability, and adaptability. Here are some notable use cases:

1. Healthcare

In medical imaging, collecting large, annotated datasets is both time-consuming and expensive. Data augmentation techniques like rotation, flipping, and contrast adjustment are used on X-ray and MRI scans to expand datasets and train more robust diagnostic models. These techniques improve model generalization, particularly when data from rare conditions is limited.

2. Autonomous Vehicles

Self-driving cars rely heavily on computer vision models for object detection and navigation. Augmentation methods simulate different weather, lighting, and road conditions to ensure models can perform safely across varied environments. These synthetic variations help reduce edge-case failures in real-world driving.

3. Retail

Fraud detection models often face class imbalance issues due to the rarity of fraudulent transactions. Data augmentation generates synthetic customer transaction records, helping to balance datasets and improve the model’s ability to detect anomalies.

4. Finance

In credit scoring, augmentation is used to simulate customer profiles and financial histories, helping to reduce bias and improve the accuracy of credit risk predictions, especially when historical data is limited or skewed.

Choose Kanerika as your AI/ML Implementation Partner

Kanerika has long acknowledged the transformative power of AI/ML, committing significant resources to assemble a seasoned team of AI/ML specialists. Our team, composed of dedicated experts, possesses extensive knowledge in crafting and implementing AI/ML solutions for diverse industries.

Our AI models are designed to help businesses automate complex tasks, enhance decision-making, and achieve significant cost savings. From optimizing financial forecasts to improving customer experiences in retail and streamlining workflows in manufacturing, our solutions are built to address real-world challenges and drive meaningful results.

Leveraging cutting-edge tools and technologies, we specialize in developing custom ML models that enable intelligent decision-making. With these models, our clients can adeptly navigate disruptions and adapt to the new normal, bolstered by resilience and advanced insights.

FAQs

What is meant by data augmentation?

Data augmentation is like taking existing photos and making slight changes to them, like rotating them, cropping them, or adjusting their brightness. This creates new, similar versions of your data without needing to collect more actual data. It helps machine learning models learn better by exposing them to a wider variety of examples.

What is augmentation? Can you give an example?

Augmentation means adding something to make it better or more effective. It’s like adding more ingredients to a recipe to make it more flavorful. For example, in machine learning, we augment data by adding variations to existing images, like rotating them or changing their brightness, to make our AI model more robust.

What is data augmentation in NLP?

Data augmentation in NLP is like adding more ingredients to your recipe to make it richer and more flavorful. It involves creating synthetic data from existing text by techniques like paraphrasing, replacing words with synonyms, or introducing noise. This helps train NLP models on a wider variety of data, leading to better performance and robustness.

What are the techniques of data augmentation?

Data augmentation is a clever way to expand your training dataset without collecting more data. It involves applying various transformations to existing data, like flipping images, adding noise, or changing colors, to create new, slightly different versions. This helps your model learn more robustly and generalize better to unseen data.

What is the principle of data augmentation?

Data augmentation is like taking a single photo and creating variations of it, like rotating it, flipping it, or adding noise. This helps train AI models by expanding the dataset, giving them more diverse examples and ultimately improving their performance. Essentially, it’s a way to “trick” the model into learning from more data than you actually have.

Is data augmentation an algorithm?

Data augmentation isn’t a single algorithm, but rather a collection of techniques used to increase the size and diversity of your training data. It involves modifying existing data in various ways, like flipping images, adding noise, or changing colors, to create new, synthetic data points. Essentially, it’s a strategy, not a specific algorithm.

How is generative AI used in data augmentation?

Generative AI acts like a creative artist for your data. It uses learned patterns to generate new, realistic data points that resemble your existing dataset. This expands your dataset, helping train AI models more effectively and address issues like limited data availability or class imbalance. Think of it as adding more brushstrokes to your data painting!

What is the difference between data augmentation and preprocessing?

Data augmentation artificially expands your dataset by creating variations of existing data, like flipping images or adding noise. Preprocessing, on the other hand, prepares your data for analysis by cleaning it, transforming it into a usable format, and standardizing it. Augmentation is usually applied only to the training set, while preprocessing is applied consistently to training, validation, and test data. Think of augmentation as making varied copies of your data, while preprocessing is like tidying up your data before you use it.

Does data augmentation reduce overfitting?

Generally, yes. Data augmentation helps reduce overfitting by artificially expanding your training dataset with variations of your existing data. This prevents the model from fitting too closely to specific training examples and makes it more robust to unseen data. In essence, it helps your model see the world from multiple angles, leading to better generalization.