You ask ChatGPT, “What’s the best way to improve customer retention?” and it replies with a generic list—loyalty programs, better support, maybe some automation. But when you provide your company’s churn data, customer feedback, and strategic goals, the answer shifts. It becomes specific, insightful, and aligned with your business. That’s the power of context engineering.

Context engineering is the practice of designing and delivering the correct background information to AI systems so they can generate smarter, more relevant outputs. Instead of relying solely on well-crafted prompts, it builds a rich environment—like giving AI a map before asking for directions. Whether it’s customizing chatbots for healthcare, training models with enterprise-specific data, or enhancing search relevance, context engineering helps AI align with your business reality—not just general assumptions.

In this post, we’ll explain what context engineering is, break down its main components, and show how it addresses the limitations of prompt engineering, helping organizations get the most out of AI in real-world use.

What Is Context Engineering?

Context engineering is the systematic design, management, and orchestration of information that influences how an AI model interprets, responds, and evolves. It goes beyond prompt engineering by incorporating multiple layers of context—each contributing to the model’s understanding of its environment, task, and user.

Rather than treating context as a static input, context engineering views it as a dynamic, modular framework that adapts to changing goals, data, and interactions.

Why It Matters

In enterprise settings, AI systems must operate across diverse workflows, departments, and compliance requirements. Context engineering ensures that these systems are:

- State-aware: Able to remember and adapt based on prior interactions

- Goal-aligned: Tuned to specific business objectives

- Secure and compliant: Governed by access controls and audit trails

- Scalable: Reusable across multiple use cases without retraining

Transform your business with context-engineered AI solutions!

Partner with Kanerika for Expert AI implementation Services

Key Components of Context Engineering

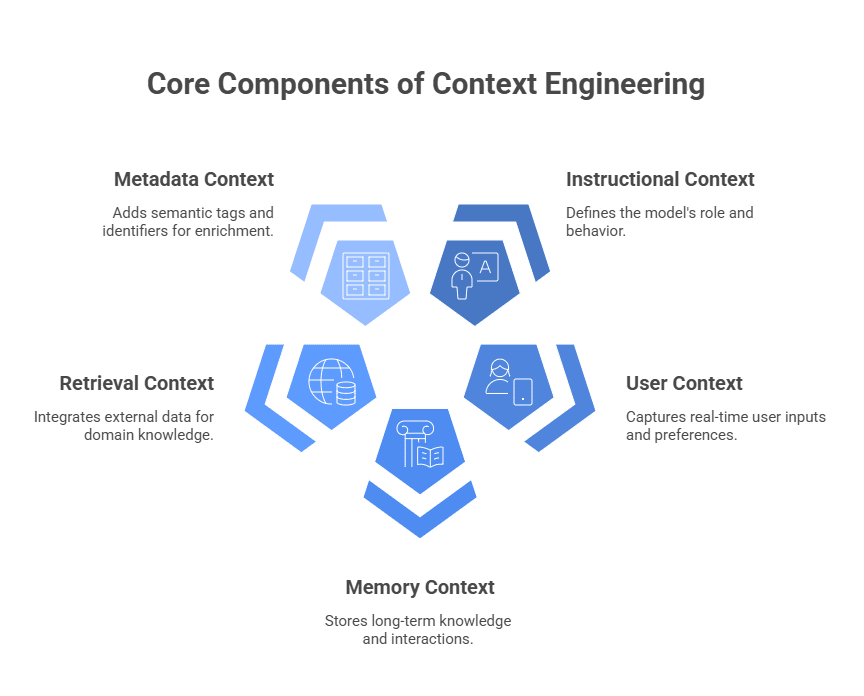

To build intelligent and reliable AI systems, context engineering orchestrates several distinct layers:

1. Instructional Context

Defines the model’s role, behavior, and constraints. This is the foundational layer that sets expectations for how the model should operate.

Example: You are a procurement advisor for a global manufacturing firm. Your goal is to optimize supplier selection based on cost, quality, and delivery timelines.

2. User Context

Captures real-time inputs, preferences, and session-specific data. This layer personalizes responses and ensures relevance.

Example: A user’s recent queries, selected filters, or geographic location.

3. Memory Context

Stores long-term knowledge and historical interactions. Enables continuity across sessions and supports personalization.

Example: Past conversations with a customer, previous recommendations, or resolved issues.

4. Retrieval Context (RAG)

Integrates external data sources using Retrieval-Augmented Generation. This allows the model to access up-to-date, domain-specific information.

Example: Pulling compliance guidelines from a secure enterprise database or retrieving product specs from a catalog.

5. Metadata Context

Includes semantic tags, timestamps, user identifiers, and contextual markers that enhance the model’s situational understanding and response relevance.

Example: A sales manager accessed this data during a quarterly performance review to identify key customer trends.

Together, these components form a contextual architecture that transforms generic LLMs into enterprise-grade AI systems capable of nuanced reasoning and decision-making.
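As a rough illustration of how the five layers could be combined, here is a minimal Python sketch. The `ContextBundle` class, its field names, and the sample values are assumptions made for this example; they do not come from any specific framework.

```python
from dataclasses import dataclass, field


@dataclass
class ContextBundle:
    """Hypothetical container holding the five context layers described above."""
    instructional: str
    user: dict = field(default_factory=dict)
    memory: list = field(default_factory=list)
    retrieved: list = field(default_factory=list)
    metadata: dict = field(default_factory=dict)

    def render(self) -> str:
        """Flatten the layers into one prompt string, skipping empty layers."""
        sections = [("Instructions", self.instructional)]
        if self.user:
            sections.append(("User context", "\n".join(f"{k}: {v}" for k, v in self.user.items())))
        if self.memory:
            sections.append(("Memory", "\n".join(f"- {m}" for m in self.memory)))
        if self.retrieved:
            sections.append(("Retrieved documents", "\n".join(f"- {d}" for d in self.retrieved)))
        if self.metadata:
            sections.append(("Metadata", "\n".join(f"{k}: {v}" for k, v in self.metadata.items())))
        return "\n\n".join(f"## {title}\n{body}" for title, body in sections)


# Illustrative values only.
bundle = ContextBundle(
    instructional="You are a procurement advisor for a global manufacturing firm.",
    user={"region": "EMEA", "filter": "ISO-certified suppliers"},
    memory=["Previously recommended Supplier A for fasteners."],
    retrieved=["Supplier B: average delivery time 12 days."],
    metadata={"role": "sales manager", "occasion": "quarterly review"},
)
prompt = bundle.render()
print(prompt)
```

Keeping each layer in its own field means one layer can be updated or swapped (for example, refreshing the retrieved documents) without touching the others.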

Techniques and Tools in Context Engineering

To build scalable, intelligent, and enterprise-ready AI systems, context engineering relies on a combination of strategic techniques and specialized tools. These enable AI to operate with awareness of user roles, business logic, and real-time data environments.

Key Techniques

- Context Layering

Breaking context into clear, modular layers (instructional guidance, user data, memory, retrieved content, and metadata) helps AI systems operate more effectively. This structured approach improves clarity, makes updates easier, and supports scalability across different workflows.

- Retrieval-Augmented Generation (RAG)

RAG strengthens AI by combining language models with external sources of knowledge. Instead of relying solely on internal training, it retrieves relevant information on the fly, making responses more accurate and tailored to specific domains.
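The retrieve-then-generate flow can be sketched in a few lines of Python. This is a simplified illustration: the `DOCUMENTS` list is made up, and the word-overlap scoring is a stand-in for a real retriever such as a vector database query. The final call to a language model is left out.

```python
import re

# Hypothetical in-memory document store; a real system would use a search index or vector database.
DOCUMENTS = [
    "Compliance guideline: supplier contracts above 50,000 USD need legal review.",
    "Product spec: the X200 pump operates between 5 and 40 degrees Celsius.",
    "HR policy: remote work requests are approved by the line manager.",
]


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list, k: int = 1) -> list:
    """Rank documents by word overlap with the query (a stand-in for real retrieval)."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list) -> str:
    """Place the retrieved passages in front of the question before calling a model."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


print(build_prompt("What temperature range does the X200 pump support?", DOCUMENTS))
```

The prompt that reaches the model now carries the product spec, so the answer can be grounded in that passage rather than in general training data.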

- Memory Compression and Token Optimization

To stay efficient within token limits, modern techniques such as summarization and embedding-based memory storage enable AI systems to retain key information over time without compromising performance.
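One simple way to stay within a token limit is to keep the most recent turns verbatim and collapse older ones into a summary. The sketch below assumes a crude one-token-per-word estimate and uses a placeholder `summarize` function; a production system would use the model's own tokenizer and a real summarization step.

```python
def estimate_tokens(text: str) -> int:
    """Crude token estimate: one token per whitespace-separated word (an assumption)."""
    return len(text.split())


def summarize(turns: list) -> str:
    """Placeholder summarizer; a real system would call an LLM or summarization model here."""
    return f"[Summary of {len(turns)} earlier turns]"


def fit_to_budget(turns: list, budget: int) -> list:
    """Keep the most recent turns that fit the budget and collapse the rest into one summary line."""
    kept = []
    used = 0
    for turn in reversed(turns):
        cost = estimate_tokens(turn)
        if used + cost > budget:
            break
        kept.append(turn)
        used += cost
    kept.reverse()
    dropped = turns[: len(turns) - len(kept)]
    return ([summarize(dropped)] if dropped else []) + kept


# Illustrative conversation history.
history = [
    "Customer asked about the refund policy for annual plans.",
    "Agent explained the 30 day refund window.",
    "Customer asked how to export invoices.",
    "Agent shared the export steps.",
]
trimmed = fit_to_budget(history, budget=12)
print(trimmed)
```

Note that the summary line itself consumes tokens, so a real budget calculation would reserve room for it.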

- Semantic Search and Embedding Matching

Rather than using a basic keyword search, semantic search taps into vector embeddings to understand the meaning behind queries. This allows AI to retrieve more relevant and context-aware information.
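The core of embedding matching is a similarity ranking over vectors. The following sketch uses tiny hand-written vectors purely for illustration; in practice the vectors would come from an embedding model and be stored in a vector database.

```python
import math

# Toy 3-dimensional embeddings, hand-written for illustration only.
# In practice these vectors would come from an embedding model.
INDEX = {
    "How to reset your password": [0.9, 0.1, 0.0],
    "Quarterly revenue report": [0.0, 0.2, 0.9],
    "Steps to recover account access": [0.8, 0.3, 0.1],
}


def cosine(a, b):
    """Cosine similarity between two vectors; returns 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_vec, index, k=2):
    """Return the k texts whose embeddings are most similar to the query vector."""
    scored = sorted(index.items(), key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in scored[:k]]


# Hypothetical embedding of the query "I forgot my login".
query_vec = [0.85, 0.2, 0.05]
results = search(query_vec, INDEX)
print(results)
```

Neither of the two account-related documents contains the word "login", yet both rank above the revenue report because their vectors point in a similar direction to the query.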

- Role-Based Context Switching

AI systems can now adapt based on who’s interacting with them. Whether it’s a customer, analyst, or admin, context switching ensures the response is personalized and aligned with their role—improving both relevance and security.
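A minimal way to picture role-based switching is a lookup from role to instructions and permitted data sources. The role names, instructions, and source names below are hypothetical examples, not a prescribed schema.

```python
# Hypothetical role profiles: each role gets its own instructions and allowed data sources.
ROLE_PROFILES = {
    "customer": {
        "instruction": "Answer in plain language and never reveal internal notes.",
        "sources": {"public_faq"},
    },
    "analyst": {
        "instruction": "Provide detailed figures and cite the data source.",
        "sources": {"public_faq", "sales_reports"},
    },
    "admin": {
        "instruction": "Include configuration details and audit references.",
        "sources": {"public_faq", "sales_reports", "system_logs"},
    },
}


def build_context(role: str, documents: dict) -> dict:
    """Select the instruction and the documents a role may see; unknown roles are rejected."""
    if role not in ROLE_PROFILES:
        raise ValueError(f"Unknown role: {role}")
    profile = ROLE_PROFILES[role]
    visible = {name: text for name, text in documents.items() if name in profile["sources"]}
    return {"instruction": profile["instruction"], "documents": visible}
```

Calling `build_context("customer", docs)` would return only the `public_faq` entry, while the same call with `"admin"` would return all three sources. Rejecting unknown roles outright is a deliberate choice here: failing closed is safer than falling back to a default profile.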

- Context Simulation and Testing

Before going live, it’s critical to test how AI performs in different real-world situations. Context simulation helps developers validate behaviors and fine-tune responses across various use cases.
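Context simulation can start as a table of scenarios checked against the assembled context before any model is called. The sketch below is one possible shape for such a check; the `assemble_prompt` function and the scenario data are invented for illustration.

```python
def assemble_prompt(role: str, question: str, facts: list) -> str:
    """Hypothetical prompt builder under test."""
    lines = [f"Role: {role}", "Facts:"] + [f"- {f}" for f in facts] + [f"Question: {question}"]
    return "\n".join(lines)


# Each scenario lists text that must and must not appear in the assembled prompt.
SCENARIOS = [
    {"name": "customer sees no salary data",
     "role": "customer", "question": "When will my order ship?",
     "facts": ["Order 1001 ships on Friday."],
     "must_include": ["Order 1001"], "must_exclude": ["salary"]},
    {"name": "analyst gets revenue figures",
     "role": "analyst", "question": "What was Q2 revenue?",
     "facts": ["Q2 revenue was 4.2M USD."],
     "must_include": ["Q2 revenue", "Role: analyst"], "must_exclude": []},
]


def run_scenarios(scenarios: list) -> dict:
    """Return a pass/fail result per scenario name."""
    results = {}
    for s in scenarios:
        prompt = assemble_prompt(s["role"], s["question"], s["facts"])
        included = all(text in prompt for text in s["must_include"])
        leaked = any(text in prompt for text in s["must_exclude"])
        results[s["name"]] = included and not leaked
    return results


print(run_scenarios(SCENARIOS))
```

Checks like these only validate what goes into the model. Evaluating the model's actual responses across scenarios would need a further layer, such as human review or automated scoring.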

- Graph-Based Context Modeling

By using knowledge graphs to map relationships between people, places, and concepts, AI can reason more effectively and retrieve answers that involve multiple steps or a deeper understanding.
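Multi-step retrieval over a knowledge graph can be illustrated with a breadth-first search. The tiny graph below is made up for the example; platforms such as graph databases would handle this with their own query languages.

```python
from collections import deque

# Hypothetical knowledge graph as adjacency lists of (relation, target) pairs.
GRAPH = {
    "Acme Corp": [("supplies", "Widget Inc")],
    "Widget Inc": [("located_in", "Germany")],
    "Germany": [("regulated_by", "EU REACH")],
}


def find_path(graph, start, goal):
    """Breadth-first search returning the shortest chain of (source, relation, target) hops."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for relation, target in graph.get(node, []):
            if target not in seen:
                seen.add(target)
                queue.append((target, path + [(node, relation, target)]))
    return None  # No connection found.


for hop in find_path(GRAPH, "Acme Corp", "EU REACH"):
    print(hop)
```

The returned chain of hops can be passed to the model as context, so it can explain why a regulation is relevant to a supplier rather than just asserting that it is.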

Tools Commonly Used

- LangChain: A framework for building context-aware LLM applications. It supports chaining, memory management, and RAG integrations.

- LlamaIndex (formerly GPT Index): Connects LLMs to structured and unstructured data sources, ideal for building retrieval systems and memory modules.

- Vector Databases: Tools like Pinecone, Weaviate, and FAISS store and retrieve embeddings for semantic search, crucial for scalable context retrieval.

- OpenAI Function Calling / Tool Use

Allows LLMs to interact with external APIs and tools, enriching context dynamically during runtime.
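On the application side, tool use usually comes down to mapping a model-produced call onto a real function. The sketch below is provider-agnostic: the JSON shape, the stub tools, and the `dispatch` helper are simplified assumptions, not the exact schema of OpenAI or any other vendor.

```python
import json


def get_weather(city: str) -> str:
    """Stub tool; a real implementation would call a weather API."""
    return f"Sunny in {city}"


def get_stock_level(sku: str) -> int:
    """Stub tool; a real implementation would query an inventory system."""
    return {"A-100": 42}.get(sku, 0)


# Registry mapping tool names to Python callables.
TOOLS = {"get_weather": get_weather, "get_stock_level": get_stock_level}


def dispatch(tool_call_json: str):
    """Run the tool named in a model-produced call and return its result.

    The {"name": ..., "arguments": {...}} shape is a simplified, hypothetical
    format, not the exact schema of any provider.
    """
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        raise KeyError(f"Tool not allowed: {name}")
    return TOOLS[name](**call.get("arguments", {}))


print(dispatch('{"name": "get_stock_level", "arguments": {"sku": "A-100"}}'))
```

The tool's result would then be appended to the conversation so the model can use it in its next response. Restricting calls to an explicit registry keeps the model from invoking anything the application has not approved.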

- PromptLayer / Guidance

Tools that help manage and test prompts, making AI responses more consistent and accurate.

- Knowledge Graph Platforms

Neo4j, TigerGraph, and others structure relationships in data, enabling AI to understand and reason more effectively.

- Custom Middleware & Orchestration

Workflow orchestrators like Airflow or Prefect schedule and coordinate the pipelines that move context across platforms, giving teams a single place to apply security and compliance checks.

The Limitations of Prompt Engineering

Prompt engineering involves creating specific inputs to elicit helpful responses from large language models (LLMs). It played a significant role in the early success of generative AI by helping users phrase tasks or questions effectively.

But prompts are limited—they’re static and can break easily with minor changes in wording or situation. They lack support for memory, personalization, and rules, all of which are essential for complex business use. As enterprises grow, prompt engineering alone is insufficient, leading to the rise of context engineering as a stronger, more reliable approach.

1. Static and Fragile

Prompts are often static and brittle. A slight change in phrasing or context can lead to drastically different outputs. This lack of robustness makes prompt engineering unsuitable for dynamic enterprise environments.

2. Lack of Personalization

Prompts do not retain memory or adapt to user behavior over time. This results in repetitive, impersonal interactions that fail to meet enterprise standards for customer experience or operational intelligence.

3. Scalability Challenges

Maintaining prompt libraries across departments and workflows becomes operationally expensive. Each use case requires manual tuning, leading to inefficiencies and inconsistencies.

4. Security and Governance Risks

Prompts can expose sensitive data when it is pasted in without access controls or masking. Without context-aware governance, enterprises risk violating compliance standards and data privacy regulations.

5. Limited Reasoning and Adaptability

Prompts alone cannot support multi-turn reasoning, goal alignment, or adaptive behavior. They lack the depth necessary for complex decision-making in domains such as finance, healthcare, or logistics.

How Context Engineering Resolves These Challenges

Context engineering addresses the shortcomings of prompt engineering by introducing modularity, memory, and governance into AI workflows.

From Static Prompts to Dynamic Context

Instead of relying on fixed instructions, context engineering enables AI to respond to changing inputs, user behavior, and business goals in real-time.

Example: A sales assistant AI that adjusts its pitch based on the customer’s industry, purchase history, and budget.

Personalized Interactions with Memory

With built-in memory, AI can remember past interactions, making future responses more personalized and meaningful.

Example: A healthcare chatbot that recalls a patient’s medical history to provide tailored advice.

Enterprise-Ready, Scalable Frameworks

Organizations can build reusable context modules that work across departments—saving time, ensuring consistency, and simplifying deployment.

Example: A common compliance layer that guides AI behavior across finance, HR, and procurement.

Built-In Governance and Security

Context engineering integrates rules and protections directly into the system. This includes role-based access, encryption, and full audit trails.

Example: Giving financial analysts access to sensitive data while restricting others—and keeping a log of every AI recommendation.
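A governance check of this kind can be sketched as an access decision that is always logged. The policy table, role names, and in-memory log below are assumptions for illustration; a real deployment would rely on an identity provider and a durable audit store.

```python
from datetime import datetime, timezone

AUDIT_LOG = []  # In-memory stand-in for a durable, append-only audit store.

# Hypothetical policy: which roles may read which datasets.
ACCESS_POLICY = {
    "financial_analyst": {"ledger", "forecasts"},
    "support_agent": {"faq"},
}


def fetch_for_model(user: str, role: str, dataset: str) -> bool:
    """Check access, record the attempt, and report whether the dataset may enter the context."""
    allowed = dataset in ACCESS_POLICY.get(role, set())
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "dataset": dataset,
        "allowed": allowed,
    })
    return allowed
```

With this policy, `fetch_for_model("ana", "financial_analyst", "ledger")` returns `True`, while the same request from a `support_agent` returns `False`. Both attempts are written to `AUDIT_LOG`, so denied requests are as traceable as granted ones.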

Smarter Decisions with Better Context

Structured context enables AI to think ahead, make informed decisions, and collaborate with other systems to achieve business objectives.

Example: An AI agent that collaborates with supply chain tools to optimize inventory based on demand forecasts and vendor performance.

The Strategic Role of Context Engineering in Enterprise AI

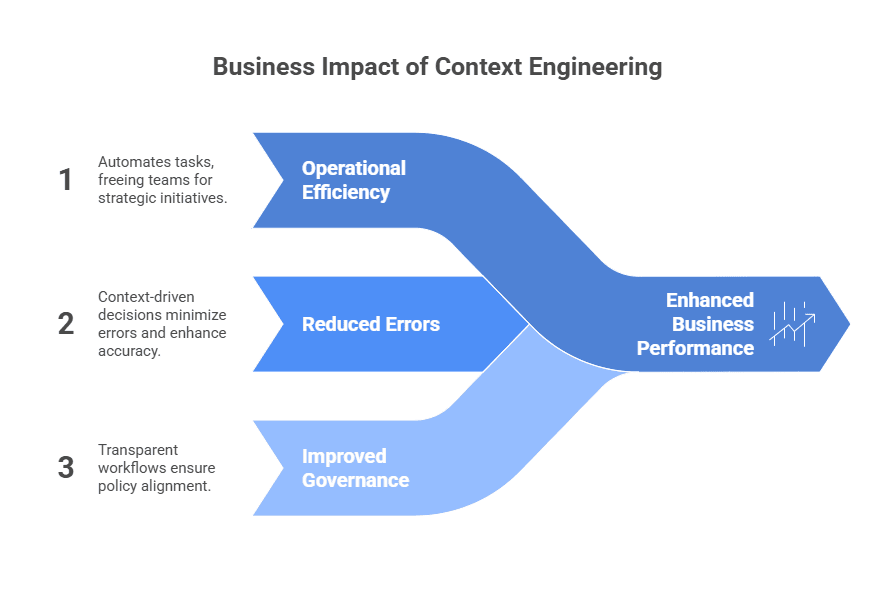

Enterprises are now shifting toward context-aware architectures using techniques like Retrieval-Augmented Generation (RAG), contextual memory, and agentic workflows.

These methods ensure that AI systems access relevant, up-to-date information at the time of inference, leading to more accurate and tailored outputs. By embedding business logic, historical data, and user-specific context into AI pipelines, organizations can reduce errors, increase personalization, and ensure better alignment with operational goals.

This shift is especially important in dynamic environments like finance, healthcare, and customer service, where real-time decisions depend on multiple context layers. For instance, agentic workflows allow AI to take informed actions based on user roles, prior interactions, and external data sources. As a result, enterprises benefit from AI that not only understands the “what” but also the “why” and “how” behind every decision.

Real-Life Examples of Context Engineering in Action

1. Healthcare: Personalized Patient Engagement

Hospitals using AI chatbots for patient interaction often face challenges with generic responses. Without access to patient history, medication records, and appointment schedules, the chatbot cannot provide meaningful support.

With context engineering:

- AI accesses EMR data, wearable device inputs, and treatment plans.

- Responses are personalized, timely, and clinically relevant.

- HIPAA compliance is supported through role-based access and audit trails.

2. Manufacturing: Intelligent Procurement

A global manufacturer uses AI to optimize supplier selection. Initially, the system relied on static prompts and failed to consider delivery timelines, supplier reliability, and inventory levels.

With context engineering:

- AI integrates with ERP, supplier portals, and logistics data.

- It recommends suppliers based on cost, quality, and delivery performance.

- Procurement decisions are faster, smarter, and aligned with business goals.

3. BFSI: Risk Assessment and Compliance

Banks deploying AI for loan approvals often struggle with regulatory compliance. Without context, AI may overlook regional rules or customer history.

With context engineering:

- AI accesses credit history, KYC data, and local regulations.

- It flags high-risk applications and routes them to compliance officers.

- Decisions are auditable and aligned with internal policies.

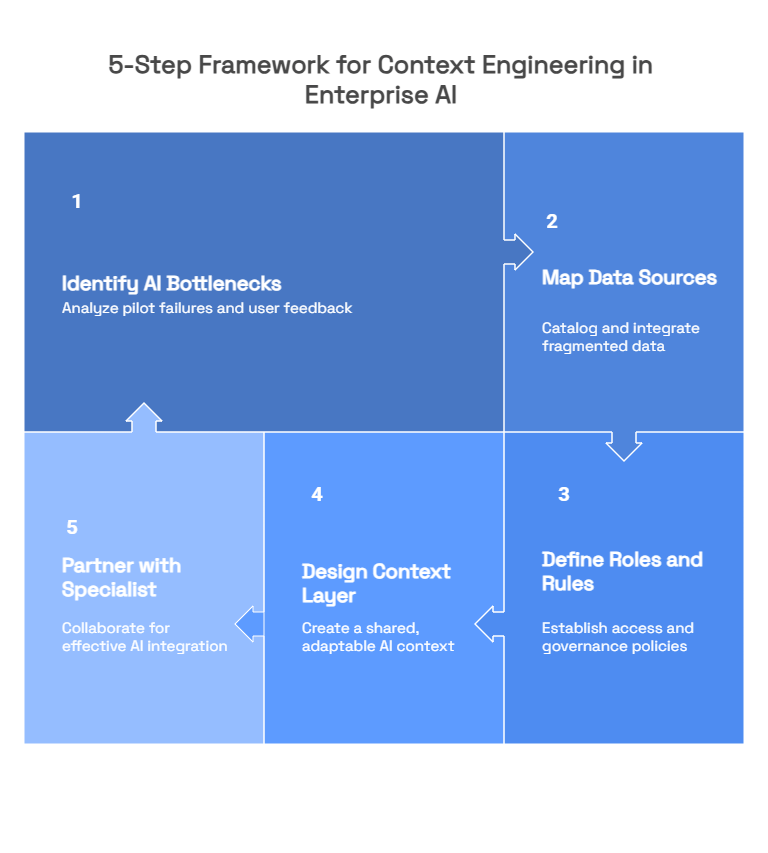

Step-by-Step Guide to Implementing Context Engineering

1. Identify AI Pilot Failures or Bottlenecks in Workflows

Start by auditing existing AI deployments. Look for:

- Inconsistent outputs

- Low user adoption

- Frequent manual overrides

- Compliance violations

Focus on workflows where AI is expected to make decisions or interact with users—these are most sensitive to context gaps.

2. Map Data Sources and Integration Gaps

Context engineering depends on access to relevant data. Map out:

- Internal systems (ERP, CRM, HRMS, ITSM)

- External APIs (weather, finance, logistics)

- Knowledge repositories (policy docs, product catalogs)

Goal: Identify where data silos exist and how they impact AI performance.

3. Define User Roles, Permissions, and Business Rules

Establish clear governance for how the AI operates by defining:

- Who interacts with the AI (agents, customers, managers)

- What data they can access

- What rules the AI must follow (e.g., escalation protocols, compliance filters)

Example: In a healthcare setup, a nurse and a doctor may access different patient data, and AI must respect these boundaries.

4. Design Context Layer Before Scaling AI

Before expanding AI across departments, build a modular context layer that includes:

- Instructional templates

- Memory modules

- Retrieval connectors

- Metadata governance

Benefit: Ensures consistency, scalability, and security across use cases.

5. Partner with a Trusted AI Integrator (Kanerika)

Context engineering is not just technical—it’s strategic. Partnering with an expert ensures:

- Deep integration with enterprise IT systems

- Custom orchestration tailored to business goals

- Compliance-first design aligned with industry standards

Kanerika’s Approach to Context Engineering

Kanerika is a global consulting and technology firm, committed to delivering enterprise-ready solutions across AI, analytics, automation, and data integration. Since 2015, we’ve partnered with organizations worldwide to drive digital transformation through intelligent, scalable, and secure systems. With a presence in both India and the United States, we blend deep technical expertise with strategic insight to support diverse industries.

What sets us apart is our ability to combine innovation with dependable execution. As a Microsoft Solutions Partner for Data and AI, specialized in Analytics on Azure, we also collaborate closely with leading platforms such as AWS, Databricks, and Informatica. Our adherence to high standards is reflected in certifications and compliance frameworks such as ISO 27701, SOC 2, GDPR, and CMMI Level 3, so our solutions meet global benchmarks for quality and compliance.

Context-aware AI is central to our innovation strategy. Our suite of intelligent tools includes DokGPT, a RAG-based chatbot for intelligent document search, and AI agents such as Alan (Legal Document Summarizer), Susan (PII Redactor), and Mike (Quantitative Proofreader). These solutions are purpose-built to reduce manual effort, increase accuracy, and minimize risk, enabling enterprises to make decisions that are not only faster but also more innovative and compliant.

Our Methodology: Context Engineering Built for Scale

Kanerika’s approach is built on three foundational pillars:

1. Deep Integration with Enterprise Systems

We connect AI models to:

- ERP (SAP, Oracle)

- CRM (Salesforce, Zoho)

- ITSM (ServiceNow)

- Cloud platforms (Azure, AWS)

Outcome: AI operates with full access to structured and unstructured enterprise data.

2. Custom AI Orchestration Layers

Our proprietary orchestration frameworks allow us to:

- Mirror business processes

- Automate tasks, approvals, and escalations

- Align AI behavior with business KPIs

Outcome: AI systems behave like intelligent teammates, not isolated bots.

3. Compliance-First Architecture

We embed governance and regulatory rules directly into AI logic:

- Role-based access controls

- Data encryption and masking

- Audit trails and explainability modules

Outcome: AI systems are secure, auditable, and aligned with industry standards.

Real Case Studies: Context Engineering in Action

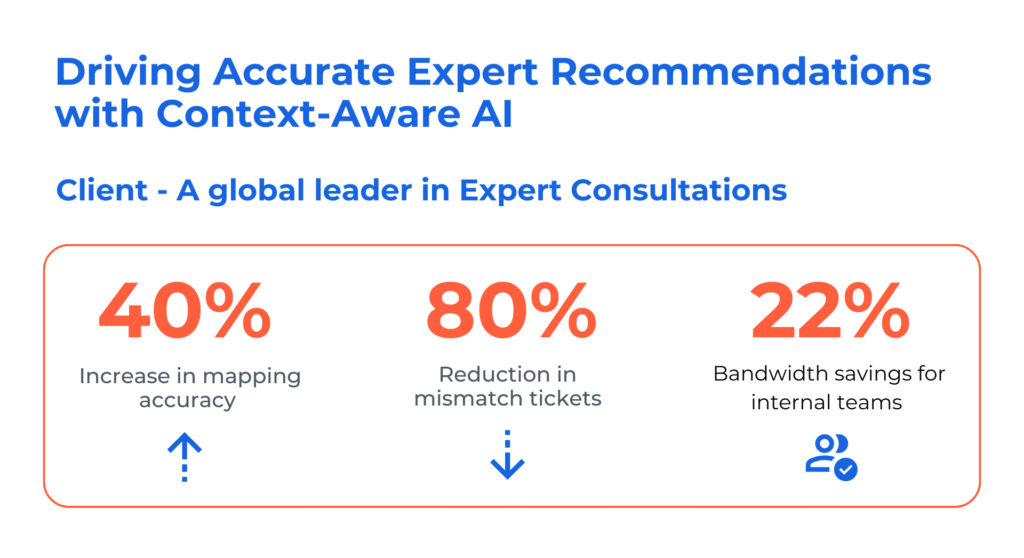

1. Driving Accurate Expert Recommendations with Context-Aware AI

A global leader in expert consultations faced challenges in identifying the right subject-matter experts for niche survey requests. Their manual process spanned three disconnected systems, resulting in poor matches, delays, and high support ticket volumes.

Kanerika’s Solution:

- Deployed a context-aware AI agent that analyzed survey context using semantic search across skills, domains, and expertise levels.

- Integrated past participation, survey history, and compliance data to validate expert matches.

- Delivered a unified dashboard with expert insights and source links.

Outcomes:

- 40% increase in mapping accuracy

- 80% reduction in mismatch tickets

- 22% bandwidth savings for internal teams

2. Delivering Contextual Query Resolution Through an AI Support Agent

A membership services provider struggled with repetitive queries and inconsistent support quality. Their AI chatbot lacked access to member history, policy documents, and escalation protocols.

Kanerika’s Solution:

- Implemented a contextual AI support agent that accessed member profiles, historical queries, and relevant documentation.

- Embedded escalation logic and compliance filters into the AI workflow.

Outcomes:

- Faster query resolution

- Improved member satisfaction

- Reduced support escalations

Conclusion

As AI becomes a core part of business operations, the way it’s designed and deployed matters more than ever. Context engineering offers a thoughtful, structured approach—shifting from static prompts to systems that can understand, adapt, and support real business needs over time.

Rather than treating each AI task in isolation, context-aware design allows for continuity, security, and relevance. It enables AI to remember, comply with policies, and respond in ways that are meaningful to both users and organizations.

At Kanerika, we see context not as an extra layer—but as the foundation for responsible, high-performing AI. By integrating it across systems, we enable enterprises to utilize generative AI with precision and purpose. From improving procurement to streamlining service and managing risk, context-aware AI supports smarter decisions—and better outcomes.

FAQs

1. What is context engineering?

Context engineering is the practice of designing AI systems that understand and respond to the specific environment they operate in, such as your company’s data, workflows, user roles, and goals. Instead of treating every task the same, these systems adapt their behavior based on how your business works, making them more valuable and reliable.

2. What are common techniques used in context engineering?

Key techniques include Retrieval-Augmented Generation (RAG), contextual memory, vector embeddings, metadata layering, and agentic workflows.

3. Does context engineering require custom tools or platforms?

Not necessarily. Many companies start with existing tools, but some customization—like APIs, role-based access, or workflow logic—is often needed to make AI systems truly context-aware. The goal is to shape the system around your business, not force your business to fit the system.

4. What tools or frameworks support context engineering?

Tools like MuleSoft, Apache NiFi, and Zapier help with integration; Azure AI and Google Vertex AI support custom AI workflows; Okta and Azure AD manage user roles; and platforms like Snowflake or Databricks unify data. The right mix depends on your company’s size, tech stack, and goals.

5. What is the difference between context engineering and prompt engineering?

Prompt engineering is about writing good inputs to get useful AI responses, which works well for one-off tasks. Context engineering goes deeper, building an environment where AI understands who’s asking, what systems are involved, and what rules apply. It’s the difference between giving instructions and designing the whole workspace.

6. Is context engineering relevant for small teams or only large enterprises?

It’s valuable for both. Small teams can use it to automate smarter and save time, while large enterprises benefit from scaling AI across departments and systems. Even basic context-aware setups—such as understanding user roles or workflows—can make a significant difference in how effective AI becomes.