Every day, AI creates over 34 million images using diffusion models, and Midjourney alone is reported to have generated over 984 million creations between its launch in 2022 and August 2023. From DALL-E’s photorealistic art to Stable Diffusion’s ability to transform text into stunning visuals, diffusion models have reshaped the AI landscape in just a few years. They have surpassed traditional generative models like GANs on several image-quality benchmarks, producing high-quality images with realistic detail. Inspired by the physics of diffusion, these models learn to reverse the way particles spread over time, applying that idea to data to produce crisp images and text.

Yet beneath the surface of these eye-catching images lies a fascinating mathematical framework that’s reshaping how AI learns to create. Diffusion models represent a fundamental shift from traditional generative approaches, offering unprecedented stability, quality, and control in AI-generated content.

Unlike their predecessors, diffusion models excel not just in creating images, but have shown promising results across various domains – from enhancing medical imaging to generating molecular structures for drug discovery. This versatility, combined with their robust training process, has made them critical for modern generative AI systems and a crucial technology for understanding the future of artificial creativity.

Transform Operational Efficiency with Custom AI Models!

Partner with Kanerika for Expert AI implementation Services

What Are Diffusion Models?

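Diffusion models are a type of generative model in artificial intelligence inspired by the way particles disperse, or “diffuse,” over time. Training data is progressively corrupted with noise, and the model learns to undo that corruption so that it can turn random noise into new samples. This method is particularly useful for generating data, like images or text, where realistic quality and diversity are essential.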

Why Diffusion Models Matter in AI

Diffusion models have become highly valuable in AI, especially for applications requiring high-quality, realistic data generation, such as images and text. These models are unique in their approach, gradually refining random noise into coherent data outputs. Unlike traditional generative models, like Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), diffusion models stand out for their output stability and diversity, which has made them a compelling alternative in numerous AI applications.

1. Stability and Diversity in Outputs

One of the primary advantages of diffusion models lies in their stability and ability to produce varied outputs. GANs, though effective, often encounter a limitation known as mode collapse, where the generator produces highly similar outputs repeatedly, limiting variability. Diffusion models bypass this by iteratively refining noise in a step-by-step manner, resulting in more diverse and nuanced results. On the other hand, Variational Autoencoders, while capable of creating varied data, often deliver lower-quality images due to inherent constraints in their data compression and reconstruction processes.

2. Rapid Adoption in Text-to-Image and Image Restoration

The versatility and effectiveness of diffusion models have driven their rapid adoption across several AI tasks, including text-to-image synthesis, super-resolution, and inpainting (filling in missing parts of images). Notable tools like Stable Diffusion and DALL-E showcase diffusion models’ potential in creative fields, generating high-resolution, detailed images based on textual inputs. Innovations in model architectures, like U-Net and autoencoder frameworks, have also improved the efficiency of diffusion models, reducing the computational load of their iterative processing steps.

3. Expanding Applications Beyond Images

Diffusion models are not limited to image generation; they’re also being explored in fields such as audio synthesis and medical imaging. In audio, diffusion models can generate or restore high-fidelity signals, while in medical imaging, they improve diagnostics by reconstructing realistic medical images. This adaptability across domains highlights diffusion models’ role as a foundational technology in generative AI, providing a robust alternative to models like GANs and VAEs for applications demanding both high quality and output diversity.

Types of Diffusion Models

1. Denoising Diffusion Probabilistic Models (DDPM)

Denoising Diffusion Probabilistic Models (DDPM) are among the most common diffusion models. They work by adding noise to data in a series of steps during training, gradually degrading it. During inference, the model reverses this process, removing noise step-by-step to reconstruct the data. This framework allows DDPMs to learn how to “denoise” each stage progressively, which is especially useful in generating high-quality images.

Advantages and Use Cases

- DDPMs are highly effective in image generation, achieving results comparable to GANs in terms of detail and realism.

- Due to their stable training process, DDPMs avoid common pitfalls like mode collapse found in GANs.

- These models are adaptable to various data types, including images, audio, and even 3D structures, making them versatile for diverse AI applications.

2. Score-Based Generative Models

Score-based generative models, also known as score matching models, are trained with a technique called score matching. Rather than modeling the data density directly, these models learn the “score,” which is the gradient of the log-density of the data with respect to the input. The model architecture often uses deep networks like U-Net to estimate these gradients across different noise levels, and samples are generated by following the estimated scores from noise toward regions where realistic data is likely.

Key Features of Score Matching

- These models are robust for high-dimensional data like images and audio, producing diverse outputs with few training instabilities.

- Score-based models leverage stochastic differential equations (SDEs) to model data, making them suitable for tasks that require high fidelity and realistic textures.

- They can match or exceed the image quality of traditional models like GANs, especially in tasks requiring intricate detail or high resolution.
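
To make the “score” concrete, here is a minimal sketch in plain Python (standard library only). It assumes a one-dimensional Gaussian target whose score is known in closed form, so nothing is trained; in a real score-based model, a neural network would estimate this function instead. The sampler is unadjusted Langevin dynamics, and the function names, step size, and target parameters are illustrative assumptions rather than part of any library.

```python
import math
import random


def gaussian_score(x, mean, std):
    """Score of N(mean, std^2): derivative of the log-density with respect to x."""
    return -(x - mean) / (std ** 2)


def langevin_sample(score_fn, n_steps=200, step_size=0.1, rng=None):
    """Draw one approximate sample by following the score plus injected noise."""
    rng = rng or random.Random()
    x = rng.gauss(0.0, 1.0)  # start from an arbitrary noise sample
    for _ in range(n_steps):
        noise = rng.gauss(0.0, 1.0)
        # Unadjusted Langevin update: drift along the score, then add noise.
        x = x + 0.5 * step_size * score_fn(x) + math.sqrt(step_size) * noise
    return x


rng = random.Random(0)
target_mean, target_std = 3.0, 1.0  # hypothetical target distribution
samples = [
    langevin_sample(lambda x: gaussian_score(x, target_mean, target_std), rng=rng)
    for _ in range(500)
]
sample_mean = sum(samples) / len(samples)
print(f"sample mean: {sample_mean:.2f} (target {target_mean})")
```

The samples start far from the target but drift toward it because the score always points toward higher density. Score-based diffusion models apply the same idea with a learned score at many noise levels.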

3. Latent Diffusion Models (LDM)

Latent Diffusion Models (LDMs) improve efficiency by performing the diffusion process in a lower-dimensional latent space rather than directly in pixel space. This reduction significantly decreases computational requirements, making LDMs more scalable for complex tasks. LDMs are widely used in popular applications, such as Stable Diffusion, where they enable fast, high-resolution image generation.

Advantages and Use Cases

- LDMs are efficient, making them ideal for high-dimensional data tasks, like generating large images or videos.

- They use autoencoders to encode data into a latent space, allowing diffusion models to focus on meaningful features rather than every pixel.
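
A rough sense of the savings comes from simply counting values. The sketch below assumes the shapes commonly cited for Stable Diffusion v1, where a 512×512 RGB image is encoded into a 64×64 latent with 4 channels. Other latent diffusion models use different shapes, and the helper name is illustrative.

```python
def num_values(height, width, channels):
    """Number of scalar values in a tensor of the given shape."""
    return height * width * channels


# Assumed shapes (Stable Diffusion v1 style); adjust for other models.
pixel_values = num_values(512, 512, 3)   # image in pixel space
latent_values = num_values(64, 64, 4)    # same image in latent space

reduction = pixel_values / latent_values
print(pixel_values, latent_values, reduction)  # 786432 16384 48.0
```

Under these assumptions, each denoising step operates on 48 times fewer values than it would in pixel space.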

Two Core Processes in Diffusion: Forward and Reverse Diffusion

Diffusion models operate through two main processes: forward diffusion and reverse diffusion. In the forward diffusion process, data (for example, an image) is gradually corrupted by adding small amounts of noise at each time step. This degradation continues until the data becomes mostly noise, which allows the model to “see” various versions of noisy data, preparing it for the reverse process.

Role of Markov Chains

This noise addition follows the structure of a Markov chain, where each state depends only on the previous one. This method ensures that the noise degradation remains predictable and manageable, allowing for smooth progression across noise levels.
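
The chain can be sketched in a few lines of plain Python (standard library only). This is a toy illustration, not production code: the four-value “image,” the linear schedule from 0.0001 to 0.02, and the function names are assumptions chosen for the example.

```python
import math
import random


def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances, one per time step."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def forward_step(x_prev, beta, rng):
    """One Markov transition: x_t depends only on x_{t-1} and fresh noise."""
    return [
        math.sqrt(1.0 - beta) * v + math.sqrt(beta) * rng.gauss(0.0, 1.0)
        for v in x_prev
    ]


rng = random.Random(0)
betas = linear_betas(1000)
x = [1.0, -0.5, 0.25, 0.8]  # toy "image" with four pixel values

signal_scale = 1.0  # how much of the original signal is still present
for beta in betas:
    x = forward_step(x, beta, rng)
    signal_scale *= math.sqrt(1.0 - beta)

print(f"remaining signal scale after {len(betas)} steps: {signal_scale:.4f}")
```

Each call to `forward_step` needs only the previous state, which is exactly the Markov property. By the last step, the original values contribute almost nothing and the sample is essentially pure noise.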

Reverse Diffusion Process: Reconstructing Data

After the data reaches full corruption, the reverse diffusion process begins. The model learns to remove noise step-by-step, reconstructing the original data from the noise. The reverse process uses Markov chains to structure these transformations, ensuring that each denoising step depends on the previous one.
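
The following plain-Python sketch shows the shape of the reverse loop using the standard DDPM sampling update. To keep it self-contained, it makes a strong simplifying assumption: the “dataset” is a single value (`DATA_VALUE`), so the ideal noise prediction can be written in closed form and stands in for a trained network. The schedule, step count, and all names are illustrative.

```python
import math
import random

DATA_VALUE = 2.0  # hypothetical dataset: every training example equals 2.0
NUM_STEPS = 50

# Linear noise schedule and its cumulative products (alpha_bar).
betas = [1e-4 + i * (0.2 - 1e-4) / (NUM_STEPS - 1) for i in range(NUM_STEPS)]
alphas = [1.0 - b for b in betas]
alpha_bars, running = [], 1.0
for a in alphas:
    running *= a
    alpha_bars.append(running)


def oracle_noise(x_t, t):
    """Stand-in for a trained network: exact noise when data is a point mass."""
    signal = math.sqrt(alpha_bars[t]) * DATA_VALUE
    return (x_t - signal) / math.sqrt(1.0 - alpha_bars[t])


def reverse_step(x_t, t, rng):
    """One denoising transition x_t -> x_{t-1} (DDPM ancestral sampling)."""
    eps = oracle_noise(x_t, t)
    mean = (x_t - betas[t] / math.sqrt(1.0 - alpha_bars[t]) * eps) / math.sqrt(alphas[t])
    if t == 0:
        return mean  # no noise is added on the final step
    # Posterior variance of q(x_{t-1} | x_t, x_0).
    variance = betas[t] * (1.0 - alpha_bars[t - 1]) / (1.0 - alpha_bars[t])
    return mean + math.sqrt(variance) * rng.gauss(0.0, 1.0)


rng = random.Random(0)
x = rng.gauss(0.0, 1.0)  # start from pure noise
for t in reversed(range(NUM_STEPS)):
    x = reverse_step(x, t, rng)

print(f"generated value: {x:.4f}")
```

With a perfect noise predictor, the loop lands on the data value. A real model replaces `oracle_noise` with a neural network, and the quality of its predictions determines the quality of the generated sample.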

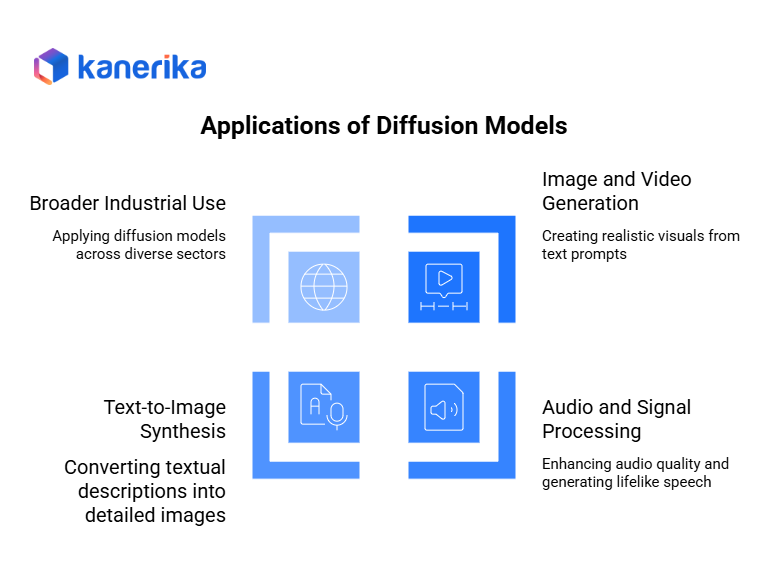

Key Applications of Diffusion Models

1. Image and Video Generation

Diffusion models are extensively used in image and video generation, particularly in applications that require realistic and high-quality outputs. For example, in text-to-image synthesis, models like DALL-E and Stable Diffusion generate images based on descriptive text prompts, transforming user inputs into visually coherent scenes. This capability has led to widespread adoption in creative industries, where artists and designers use diffusion-based tools to create content on demand. Video generation, though more complex, is also emerging, as researchers explore how diffusion models can generate smooth, coherent sequences frame-by-frame.

Examples of Tools

- Stable Diffusion allows users to input a prompt and get high-resolution, intricate images.

- DALL-E has become popular for its ability to create vivid, imaginative visuals from textual descriptions, enhancing workflows in fields like marketing and media.

2. Audio and Signal Processing

In audio and signal processing, diffusion models play a significant role in applications such as speech synthesis and noise reduction. By leveraging noise addition and removal, these models can produce high-fidelity audio from raw input signals, making them ideal for restoring old audio recordings or enhancing voice clarity in telecommunication. In speech synthesis, diffusion models generate lifelike speech patterns that can adapt to different vocal tones and accents, bringing improvements to virtual assistants and automated call centers.

Key Contributions

- Diffusion models have proven valuable in denoising tasks, where they can isolate and remove unwanted noise, improving sound quality in real-time applications.

- In synthetic voice generation, diffusion-based speech models create natural-sounding voices, advancing capabilities in virtual assistance and accessibility technology.

3. Text-to-Image Synthesis

In text-to-image synthesis, diffusion models excel at converting textual descriptions into vivid, coherent images. This application holds significant potential for content creation, as it enables users to generate visuals directly from descriptive language. By gradually refining random noise into an image that aligns with the given text prompt, diffusion models allow for highly customizable, detailed visuals that capture the nuances of the input description. This capability has made text-to-image synthesis popular in fields like digital marketing, content production, and entertainment, where quick and visually accurate output is crucial.

Key Contributions

- Versatility in Content Creation: Diffusion models in text-to-image synthesis empower creators to produce graphics, illustrations, or concept art quickly, reducing reliance on traditional design tools.

- High-Resolution Outputs: These models can generate high-resolution images suitable for commercial use, from marketing materials to social media visuals.

- Enhanced Creative Control: By refining images based on detailed text, diffusion models give creators control over aspects like style, color, and subject matter, allowing for unique, visually appealing results that resonate with audiences across industries.

4. Broader Use Cases Across Industries

Beyond creative fields, diffusion models are finding broader applications in industries like healthcare, finance, and environmental science. In healthcare, diffusion models aid in medical imaging, where they reconstruct detailed scans from noisy inputs, supporting more accurate diagnoses. Finance applications include generating realistic market data for simulations, which helps in stress testing and forecasting. Other industries, such as environmental science, benefit from diffusion models’ ability to create high-resolution geographical images or simulate environmental conditions for climate studies.

Examples of Industrial Use

- Healthcare: Improved diagnostic tools through noise-free imaging, such as MRI reconstruction.

- Finance: Simulating realistic market conditions to enhance financial modeling.

- Environmental Science: Creating accurate geographical data and climate models for research and planning.

Diffusion Models in AI: Key Features and Drawbacks

1. High-Quality Data Generation and Mode Coverage

Diffusion models excel in generating high-quality, realistic data across various domains. Their unique approach—where data is gradually refined from random noise—enhances diversity and quality by covering a wide range of potential outputs. This capability is especially advantageous in applications like image generation, where other models, such as GANs, may suffer from “mode collapse,” producing repetitive patterns instead of diverse images.

Diffusion models, with their controlled noise addition and removal process, avoid this issue, making them highly effective for applications requiring intricate details and variety.

2. Computational Costs and Extended Training Times

One challenge of diffusion models is their high computational cost, in both training and generation. Unlike GANs or VAEs, which produce a sample in a single forward pass, diffusion models require many iterative steps to gradually remove noise from data, which can lead to significant processing demands.

This issue can limit their use in environments where quick results are necessary or resources are limited, as the computational power required to reach optimal quality can be prohibitive.

3. Optimization and Performance Improvements

To mitigate these challenges, researchers are developing optimization techniques that reduce the computational load without compromising output quality. For instance, advancements in latent diffusion models shift processing to a compressed latent space, making the generation process faster and more efficient.

Additional approaches, like sampling with fewer time steps or using hybrid models, also offer promising avenues for enhancing performance in diffusion models.

Implementing Diffusion Models

A typical implementation of a diffusion model can be broken down into several core steps. The outline below follows the workflow you would use with PyTorch and the Hugging Face diffusers library.

1. Define the Model Architecture

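Start by creating a U-Net or autoencoder-based model that takes a noisy input and a time step and predicts the noise to remove. Noise addition itself is a fixed process with no learned parameters. The Hugging Face diffusers library provides pre-built U-Net architectures, such as UNet2DModel, designed specifically for diffusion models.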

2. Set Up the Forward Diffusion Process

Add Gaussian noise to the input data according to a noise schedule, so that the model sees training examples at many different noise levels.
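
Because a sequence of Gaussian noise steps is itself Gaussian, a sample at any noise level can be drawn in one shot instead of looping through every earlier step: x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps, where alpha_bar_t is the running product of (1 - beta). The sketch below shows this in plain Python (standard library only; it is not PyTorch or diffusers code). The function names, toy sample, and linear schedule are assumptions for illustration.

```python
import math
import random


def cumulative_alphas(betas):
    """alpha_bar_t: product of (1 - beta) up to and including step t."""
    out, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        out.append(running)
    return out


def add_noise(x0, t, alpha_bars, rng):
    """Jump straight to noise level t; returns the noisy sample and the noise used."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    scale_signal = math.sqrt(alpha_bars[t])
    scale_noise = math.sqrt(1.0 - alpha_bars[t])
    x_t = [scale_signal * v + scale_noise * e for v, e in zip(x0, eps)]
    return x_t, eps


rng = random.Random(0)
betas = [1e-4 + i * (0.02 - 1e-4) / 999 for i in range(1000)]
alpha_bars = cumulative_alphas(betas)

x0 = [0.9, -0.3, 0.1]           # toy clean sample
t = rng.randrange(len(betas))   # random noise level, as in training
x_t, eps = add_noise(x0, t, alpha_bars, rng)
print(t, x_t)
```

Returning `eps` alongside `x_t` is a deliberate choice: the noise that was added is what the model is usually asked to predict during training.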

3. Train the Model on Noisy Data

During training, the model learns to remove noise step-by-step. For each batch, select a random noise level, add the corresponding noise to the clean images, and have the model predict the noise that was added (or, equivalently, the clean image) from the noisy input.
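
The training loop can be sketched without any deep learning framework. The plain-Python example below is a toy under stated assumptions: the data is one-dimensional and drawn from a narrow Gaussian, and the “model” is just one weight and one bias per time step standing in for a U-Net. It is trained with stochastic gradient descent on the squared error between predicted and true noise. All names and hyperparameters are illustrative.

```python
import math
import random

NUM_STEPS = 10
betas = [0.05 + i * (0.5 - 0.05) / (NUM_STEPS - 1) for i in range(NUM_STEPS)]
alpha_bars, running = [], 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bars.append(running)


def noisy_pair(rng):
    """Sample clean data, a random noise level, and the resulting noisy input."""
    x0 = rng.gauss(0.0, 0.5)          # toy 1-D dataset (assumption)
    t = rng.randrange(NUM_STEPS)      # random noise level for this example
    eps = rng.gauss(0.0, 1.0)
    x_t = math.sqrt(alpha_bars[t]) * x0 + math.sqrt(1.0 - alpha_bars[t]) * eps
    return x_t, t, eps


def predict(params, x_t, t):
    """Tiny stand-in for a U-Net: a linear noise predictor per time step."""
    weight, bias = params[t]
    return weight * x_t + bias


def mean_loss(params, rng, n=5000):
    """Average squared error between predicted and true noise."""
    total = 0.0
    for _ in range(n):
        x_t, t, eps = noisy_pair(rng)
        total += (predict(params, x_t, t) - eps) ** 2
    return total / n


rng = random.Random(0)
params = [[0.0, 0.0] for _ in range(NUM_STEPS)]
loss_before = mean_loss(params, rng)

learning_rate = 0.05
for _ in range(20000):
    x_t, t, eps = noisy_pair(rng)
    error = predict(params, x_t, t) - eps
    # Gradient of 0.5 * error^2 with respect to weight and bias.
    params[t][0] -= learning_rate * error * x_t
    params[t][1] -= learning_rate * error

loss_after = mean_loss(params, rng)
print(f"loss before: {loss_before:.3f}, after: {loss_after:.3f}")
```

A real implementation swaps the linear predictor for a neural network and the manual update for an optimizer, but the loop keeps the same three moves: pick a noise level, corrupt the sample, and regress on the noise.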

4. Reverse Diffusion for Generation

After training, apply reverse diffusion by starting from random noise and iteratively passing it through the model to remove noise step-by-step, ultimately reconstructing the data.

Best Practices for Implementation

To achieve optimal performance, here are some best practices to consider:

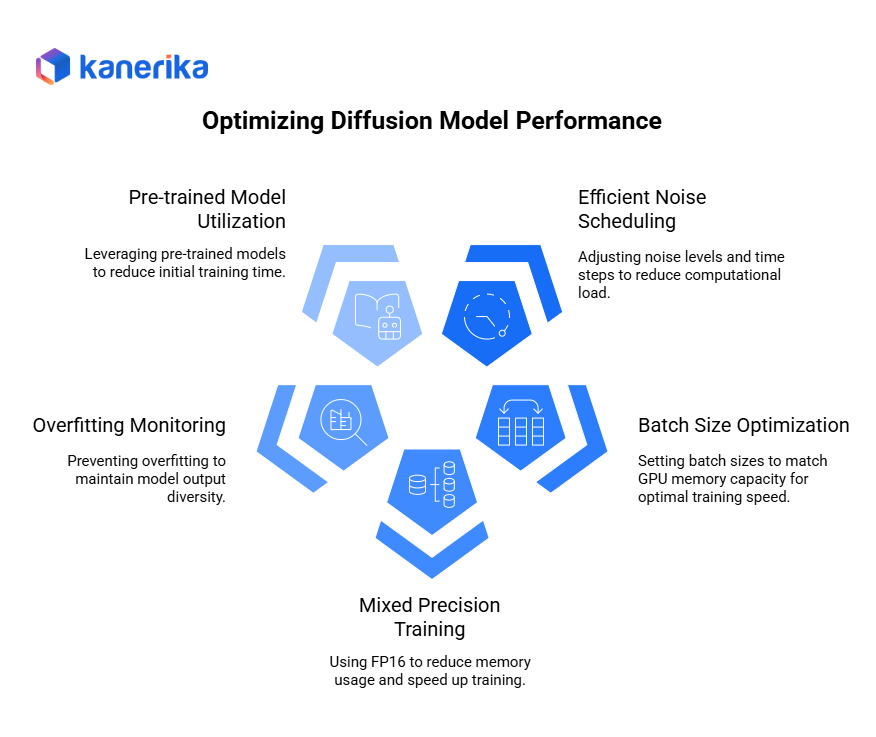

1. Efficient Noise Scheduling

Adjust noise levels and time steps thoughtfully to reduce computational load. Using a cosine schedule or scheduling fewer time steps can improve efficiency without sacrificing output quality.
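
As an illustration, here is a plain-Python sketch of a cosine schedule in the squared-cosine form popularized by the Improved DDPM work, compared with a linear schedule. The offset of 0.008 and the clipping value of 0.999 follow that formulation as commonly described; treat them, along with the function names, as assumptions to tune rather than fixed requirements.

```python
import math


def cosine_alpha_bar(t, num_steps, offset=0.008):
    """Cumulative signal level at step t under a squared-cosine schedule."""
    angle = ((t / num_steps) + offset) / (1.0 + offset) * math.pi / 2.0
    return math.cos(angle) ** 2


def cosine_betas(num_steps, max_beta=0.999):
    """Per-step noise variances from ratios of consecutive alpha_bar values."""
    betas = []
    for t in range(num_steps):
        ratio = cosine_alpha_bar(t + 1, num_steps) / cosine_alpha_bar(t, num_steps)
        betas.append(min(1.0 - ratio, max_beta))  # clip to avoid a singular last step
    return betas


def signal_at(betas, step):
    """Fraction of signal variance left after `step` steps."""
    remaining = 1.0
    for beta in betas[:step]:
        remaining *= 1.0 - beta
    return remaining


cosine = cosine_betas(1000)
linear = [1e-4 + i * (0.02 - 1e-4) / 999 for i in range(1000)]

print(f"signal left halfway, cosine: {signal_at(cosine, 500):.3f}")
print(f"signal left halfway, linear: {signal_at(linear, 500):.3f}")
```

The cosine schedule keeps more of the signal through the middle of the process, so fewer steps are spent on inputs that are already close to pure noise.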

2. Choose the Right Batch Size

Diffusion models are computationally intensive. Setting batch sizes to match GPU memory capacity can optimize training speed without running out of memory.

3. Use Mixed Precision Training

Training with mixed precision (FP16) significantly reduces memory usage and speeds up training, which is especially beneficial for diffusion models’ iterative processes.

4. Monitor for Overfitting

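Overfitting can be subtle but can reduce the diversity of model outputs, for example when generated samples start to resemble training examples too closely. Early stopping, data augmentation, and periodic checks of generated samples against a held-out set can help maintain generalization.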

5. Experiment with Pre-trained Models

Leverage Hugging Face’s pre-trained models and fine-tune them to fit your needs, reducing the initial training time required for high-quality results.

Achieve New Business Heights with Kanerika’s Custom AI Models

Kanerika, a top-rated AI company, empowers businesses to tackle operational challenges and boost outcomes with purpose-built AI solutions. Our models, crafted for real-world impact, support everything from sales and finance forecasting to smart product pricing and vendor selection, helping clients drive innovation and growth by cutting costs and optimizing resources. With deep expertise in AI, we empower businesses across industries like banking and finance, retail, manufacturing, healthcare, and logistics to seamlessly integrate AI into their operations. Our tailored AI solutions are designed to elevate operational efficiency, reduce costs, and drive impactful outcomes.

By developing advanced, industry-specific models, we help businesses automate complex processes, make data-driven decisions, and gain competitive advantages. Whether it’s optimizing financial forecasting, enhancing customer experiences in retail, streamlining manufacturing workflows, or advancing patient care, Kanerika’s AI solutions adapt to diverse requirements and challenges. Our commitment to client success has established us as a leader in the AI space, trusted by companies to transform their operations and realize measurable improvements through intelligent automation and analytics.

Frequently Asked Questions

What is the diffusion model?

A diffusion model is a deep learning technique that generates data by gradually denoising random noise into meaningful content. It works by learning to reverse a process where noise is systematically added to data. This approach has revolutionized AI-generated content creation, particularly in image generation, offering high-quality and stable results.

What are the different types of diffusion models?

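The main types include Denoising Diffusion Probabilistic Models (DDPMs), which learn to reverse a step-by-step noising process; Score-Based Generative Models, which learn the gradient of the data’s log-density; and Latent Diffusion Models (LDMs), which operate in a compressed latent space. Conditional diffusion models, which generate content based on specific inputs such as text prompts, can be built on any of these. Each type offers different trade-offs between quality, speed, and resource requirements for various applications.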

What are diffusion models in NLP?

In Natural Language Processing, diffusion models generate and modify text by treating words or tokens, usually through their embeddings, as data points that undergo the noise-adding and denoising process. They are being explored for text generation, translation, and text style transfer. Research examples include Diffusion-LM and DiffuSeq.

What is the best diffusion model?

There is no single best diffusion model; the right choice depends on specific needs. Stable Diffusion is among the most widely used thanks to its balance of quality, speed, and accessibility, DALL-E 3 is known for following complex prompts, and Midjourney is often favored for artistic quality. Each model has unique strengths for different use cases.

What are the applications of diffusion models?

Diffusion models power various applications including text-to-image generation, image editing, super-resolution, inpainting, music generation, and 3D model creation. They’re also used in scientific applications like molecular structure generation for drug discovery and medical image enhancement for improved diagnostics.

What are the advantages of diffusion models?

Diffusion models offer superior stability during training, high-quality output, better controllability, and more consistent results compared to GANs. They can generate diverse, realistic content with fewer artifacts. Their principled mathematical foundation makes them more reliable and easier to optimize for specific tasks.

What is the goal of diffusion models?

The primary goal of diffusion models is to learn a high-quality data generation process by reversing a gradual noise-addition process. They aim to create new, realistic data samples that match the training data distribution while offering controllable generation through conditioning and maintaining high output quality.

What are the limitations of diffusion models?

Key limitations include slow generation speed due to the iterative denoising process, high computational requirements, significant training time, and occasional issues with coherence in complex scenes. They can also struggle with global consistency and may produce artifacts in challenging scenarios.

What is an example of a diffusion model?

Stable Diffusion is a prominent example, capable of generating high-quality images from text descriptions. Released in 2022 by Stability AI in collaboration with the CompVis research group and Runway, it can create artwork, photographs, and illustrations based on text prompts. Its code and model weights are openly available, and it has been integrated into numerous applications and services.

How do diffusion models generate images?

Diffusion models generate images through a step-by-step denoising process. Starting with random noise, they gradually refine it into a clear image using learned patterns. At each step, the model predicts and removes noise, eventually producing a coherent image matching the desired output.