In 2024, the PGA Tour tackled AI-generated content accuracy issues by implementing Retrieval-Augmented Generation (RAG), integrating a 190-page rulebook to provide precise, real-time golf statistics. Meanwhile, Bayer leveraged fine-tuning, training AI models on proprietary agricultural data to enhance domain-specific insights. These real-world applications highlight the ongoing RAG vs Fine Tuning debate.

While RAG offers adaptability by fetching up-to-date information during inference, fine-tuning embeds domain expertise directly into the model. Both methods have distinct advantages, but how do you decide which one is right for your needs? In this blog, we’ll break down the key differences between RAG and fine-tuning, exploring their strengths, limitations, and ideal use cases.

What is Retrieval-Augmented Generation (RAG)?

Retrieval-Augmented Generation (RAG) is an advanced AI framework that improves text generation by incorporating external information retrieval. Instead of relying solely on a model’s pre-trained knowledge, RAG dynamically fetches relevant data from an external source (such as a database, document collection, or the web) before generating a response.

How RAG Works

- Query Processing: A user inputs a query.

- Retrieval: A retriever searches an external knowledge base for passages relevant to the query, using methods such as dense vector (semantic) search, keyword ranking like BM25, or a hybrid of both.

- Augmentation: The retrieved passages are added to the model’s prompt as additional context.

- Generation: The model generates a response based on both the retrieved information and its pre-trained knowledge.
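The four steps above can be sketched in a few dozen lines of Python. This is a minimal illustration, not a production pipeline: it uses a small hand-written Okapi BM25 ranker in place of a vector database, the three golf-rule passages are made-up sample data, and `generate` is a hypothetical placeholder for whatever LLM client you use.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split on non-alphanumeric characters."""
    return re.findall(r"[a-z0-9]+", text.lower())


class BM25Retriever:
    """Minimal Okapi BM25 ranking over an in-memory list of documents."""

    def __init__(self, docs: list[str], k1: float = 1.5, b: float = 0.75):
        self.docs = docs
        self.k1, self.b = k1, b
        self.tokens = [tokenize(d) for d in docs]
        self.avgdl = sum(len(t) for t in self.tokens) / len(self.tokens)
        # Document frequency: how many documents contain each term.
        df = Counter(term for toks in self.tokens for term in set(toks))
        n = len(docs)
        # BM25 IDF with +1 inside the log so scores stay non-negative.
        self.idf = {t: math.log(1 + (n - f + 0.5) / (f + 0.5)) for t, f in df.items()}

    def score(self, query: str, i: int) -> float:
        tf = Counter(self.tokens[i])
        dl = len(self.tokens[i])
        s = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            f = tf[term]
            norm = f + self.k1 * (1 - self.b + self.b * dl / self.avgdl)
            s += self.idf[term] * f * (self.k1 + 1) / norm
        return s

    def retrieve(self, query: str, top_k: int = 2) -> list[str]:
        """Retrieval step: return the top_k highest-scoring documents."""
        ranked = sorted(range(len(self.docs)), key=lambda i: self.score(query, i), reverse=True)
        return [self.docs[i] for i in ranked[:top_k]]


def build_prompt(query: str, passages: list[str]) -> str:
    """Augmentation step: place retrieved passages ahead of the user's question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}\nAnswer:"


def generate(prompt: str) -> str:
    """Generation step: hypothetical placeholder; swap in a real LLM call here."""
    return f"[LLM response to a {len(prompt)}-character prompt]"


docs = [
    "A player may take relief from an abnormal course condition without penalty.",
    "The maximum number of clubs allowed in a bag is fourteen.",
    "Scores are recorded on a scorecard and certified by the marker.",
]
retriever = BM25Retriever(docs)
query = "How many clubs are allowed?"              # query processing
passages = retriever.retrieve(query, top_k=1)      # retrieval
prompt = build_prompt(query, passages)             # augmentation
answer = generate(prompt)                          # generation
print(prompt)
```

Because the knowledge lives in `docs` rather than in model weights, changing what the system "knows" only requires editing that list or the index built from it.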

Kanerika’s RAG-Based LLM Chatbot: DokGPT

What is DokGPT?

DokGPT is a RAG-based LLM chatbot that allows users to interact with enterprise data, document repositories, and collections of files. It retrieves precise and relevant information without manual searching, ensuring efficiency and accuracy across various business applications.

Core Functionalities

- Customizable Data Modularity – Users can adjust retrieval settings to either prioritize accuracy (fewer but highly relevant results) or increase data volume (broader information with possible redundancies).

- Multilingual Query & Response – DokGPT can process queries in one language while retrieving answers from documents in another.

- Cross-Document Consolidation – Instead of returning separate results, DokGPT merges relevant information from multiple documents into a single, well-structured response, improving readability and context retention.

- Advanced Media & Data Handling – Supports not only text but also structured tables, images, and videos. If needed, it can extract visuals, summarize video content, or convert numerical data into easy-to-read charts for better insights.
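DokGPT’s internal implementation is not described here, but the accuracy-versus-volume trade-off it exposes maps onto two common retrieval knobs: how many passages to return (top-k) and the minimum relevance score a passage needs. The sketch below is a generic illustration only; `RetrievalSettings`, `select`, and the scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RetrievalSettings:
    top_k: int        # maximum number of passages to return
    min_score: float  # discard passages scoring below this relevance threshold


def select(scored: list[tuple[str, float]], settings: RetrievalSettings) -> list[str]:
    """Keep the highest-scoring passages that clear the threshold, up to top_k."""
    ranked = sorted(scored, key=lambda pair: pair[1], reverse=True)
    return [text for text, score in ranked if score >= settings.min_score][: settings.top_k]


# Hypothetical relevance scores from any retriever (higher = more relevant).
scored = [("policy A", 0.91), ("policy B", 0.78), ("memo C", 0.52), ("email D", 0.31)]

precise = RetrievalSettings(top_k=2, min_score=0.75)  # fewer, highly relevant results
broad = RetrievalSettings(top_k=10, min_score=0.30)   # more context, possible redundancy

print(select(scored, precise))  # ['policy A', 'policy B']
print(select(scored, broad))    # ['policy A', 'policy B', 'memo C', 'email D']
```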

Key Business Use Cases

- Enterprise Knowledge Base: Enables quick access to project documents, policies, and operational insights.

- HR & Employee Support: Provides instant responses to onboarding, policy, and process-related queries.

- Manufacturing & Operations: Helps workers retrieve equipment manuals, troubleshooting steps, and training content.

- Customer Support Automation: Assists users by providing real-time product guidance, troubleshooting, and FAQs.

What is Fine-Tuning?

Fine-tuning is the process of further training a pre-trained model on a smaller, task- or domain-specific dataset so that its weights adapt to that task. It is especially valuable for specialized tasks that require a deeper understanding of context than a general model can provide. By training on domain-specific data, the model learns to recognize patterns, terminology, and nuances that are crucial for accurate responses.

By tailoring a model to industry-specific jargon, customer interactions, or unique problem-solving scenarios, fine-tuning ensures that AI systems provide more relevant, reliable, and context-aware responses.

How Fine-Tuning Works

- Start with a Pre-trained Model: Use a large model (like GPT, BERT, or ResNet) that has already been trained on massive datasets.

- Select a Domain-Specific Dataset: Gather a smaller dataset relevant to the target task (e.g., legal documents for a legal AI assistant).

- Adjust Model Weights: Train the model on the new dataset, updating its weights while retaining prior knowledge.

- Optimize & Validate: Tune hyperparameters (such as learning rate and number of epochs) and evaluate performance on held-out validation data to detect and prevent overfitting.
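The loop below is a deliberately tiny stand-in for the fine-tuning steps above, written in plain Python so it runs anywhere. A two-feature logistic model plays the role of the pre-trained network, its starting weights are assumed values rather than real pre-trained parameters, and the training and validation sets are toy data. Real LLM fine-tuning uses a deep-learning framework and far larger models, but the pattern is the same: start from existing weights, update them on domain data with a modest learning rate, and keep the checkpoint that scores best on validation data.

```python
import math


def predict(w: list[float], b: float, x: list[float]) -> float:
    """Logistic model: probability that x belongs to the positive class."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(w: list[float], b: float, data) -> float:
    """Average binary cross-entropy over (features, label) pairs."""
    eps = 1e-12  # avoid log(0)
    total = 0.0
    for x, y in data:
        p = predict(w, b, x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)


def fine_tune(w, b, train, val, lr=0.1, epochs=50):
    """Continue training from existing weights; return the best validation checkpoint."""
    w = list(w)  # copy so the original (pre-trained) weights stay untouched
    best = (log_loss(w, b, val), list(w), b)
    for _ in range(epochs):
        for x, y in train:
            err = predict(w, b, x) - y  # gradient of the loss w.r.t. the logit
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
        val_loss = log_loss(w, b, val)
        if val_loss < best[0]:  # keep the checkpoint that generalizes best
            best = (val_loss, list(w), b)
    return best


# Assumed starting weights standing in for a general-purpose pre-trained model.
pretrained_w, pretrained_b = [0.5, -0.2], 0.0
# Toy domain data: (features, label) pairs.
train = [([1.0, 0.0], 1), ([0.9, 0.1], 1), ([0.0, 1.0], 0), ([0.2, 0.8], 0)]
val = [([0.8, 0.2], 1), ([0.1, 0.9], 0)]

before = log_loss(pretrained_w, pretrained_b, val)
after, tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, train, val)
print(f"validation loss: {before:.3f} -> {after:.3f}")
```

Keeping the best validation checkpoint is a simple form of early stopping, one common guard against overfitting a small domain dataset.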

Key Differences: RAG Vs Fine Tuning

| Feature | Retrieval-Augmented Generation (RAG) | Fine-Tuning |
|---|---|---|
| Approach | Retrieves external data before generating a response | Trains a model further on a specific dataset |
| Data Source | Uses an external knowledge base or document store | Uses labeled training data specific to the task |
| Flexibility | Dynamic; adapts to new data without retraining | Static; requires retraining for updates |
| Accuracy | Improves factual correctness by retrieving fresh data | Enhances the model’s understanding of a domain |
| Computational Cost | Lower upfront, as it avoids retraining, but adds retrieval overhead at inference | Higher upfront, due to additional training steps |
| Best For | Tasks requiring up-to-date or broad knowledge (e.g., chatbots, research tools) | Tasks needing deep understanding of specialized data (e.g., legal AI, medical diagnosis) |
| Example Use Cases | Real-time Q&A, search-augmented assistants | Domain-specific AI models, customer support bots |
| Updates | Easily updated by modifying external knowledge sources | Requires new training when data changes |

RAG Vs Fine Tuning: A Detailed Comparison

1. Model Adaptability

RAG (Retrieval-Augmented Generation)

- Flexibility: The model does not need retraining when new information becomes available. It dynamically retrieves relevant data at inference time.

- Continuously Updated Knowledge: Because the knowledge base can be updated independently, RAG keeps responses current without modifying the model itself.

- Example: A legal AI assistant that references the latest legal documents and case law without requiring frequent retraining.

Fine-Tuning

- Static Knowledge: Once fine-tuned, the model remains fixed until it is explicitly retrained with new data.

- Requires Periodic Updates: To stay relevant, the model must be retrained whenever new domain knowledge is introduced.

- Example: A medical AI model that has been fine-tuned on past medical journals but requires updates when new treatments or diseases emerge.

2. Handling Domain-Specific Knowledge

RAG (Retrieval-Augmented Generation)

- Broad Knowledge Scope: Works well with general or structured knowledge but may struggle with deeply specialized topics unless retrieval is optimized.

- External Data Access: Can fetch domain-specific information but requires a well-maintained external knowledge source.

- Example: A tech support chatbot retrieving real-time hardware troubleshooting steps from an external product manual.

Fine-Tuning

- Deep Specialization: Fine-tuning helps the model internalize domain-specific jargon and nuances.

- Tailored for Accuracy: The model can be customized with industry-specific datasets to improve contextual understanding.

- Example: A scientific research assistant AI fine-tuned on biomedical literature to understand complex genetic interactions.

3. Latency and Response Time

RAG (Retrieval-Augmented Generation)

- Higher Latency: Since it fetches external data in real-time, responses may take longer, especially if retrieval involves large datasets.

- Dependency on Retrieval System: The speed of response depends on how quickly the system can fetch relevant information.

- Example: A news summarization AI retrieving and summarizing the latest headlines from various sources before generating a response.

Fine-Tuning

- Lower Latency: There is no retrieval step, and prompts are shorter because they carry no retrieved context, so responses are typically faster.

- No External Calls: Because learned information is stored in the model’s parameters, there is no need to query an external data source at inference time.

- Example: A financial trading assistant that quickly generates stock market insights from the historical data it was fine-tuned on.

4. Interpretability and Transparency

RAG (Retrieval-Augmented Generation)

- Clear Citation of Sources: Can display the original sources of retrieved information, increasing trust and interpretability.

- Easy Fact Verification: Users can verify data since RAG retrieves content from known databases or documents.

- Example: A research assistant AI providing article summaries along with source links for easy verification.
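Source attribution in RAG largely comes down to bookkeeping: number the retrieved passages in the prompt, ask the model to cite those numbers, then map the citations in its answer back to the original documents. A minimal sketch, assuming each retrieved passage carries `text` and `url` fields; the HR snippets, example.com URLs, and the model answer are all hypothetical.

```python
import re


def build_cited_prompt(question: str, sources: list[dict]) -> str:
    """Number each retrieved passage so the model can cite it as [1], [2], ..."""
    lines = [f"[{i}] {s['text']} (source: {s['url']})" for i, s in enumerate(sources, start=1)]
    return (
        "Answer using only the numbered sources and cite them like [1].\n\n"
        + "\n".join(lines)
        + f"\n\nQuestion: {question}"
    )


def resolve_citations(answer: str, sources: list[dict]) -> list[str]:
    """Map citation markers in the model's answer back to source URLs."""
    cited = sorted({int(n) for n in re.findall(r"\[(\d+)\]", answer)})
    # Ignore out-of-range numbers the model may have invented.
    return [sources[n - 1]["url"] for n in cited if 1 <= n <= len(sources)]


sources = [
    {"text": "Employees accrue 1.5 vacation days per month.", "url": "https://example.com/hr/leave"},
    {"text": "Remote work requires written manager approval.", "url": "https://example.com/hr/remote"},
]
prompt = build_cited_prompt("Do I need approval to work remotely?", sources)

# Hypothetical model output; in practice this comes back from the LLM.
answer = "Yes, you need written approval from your manager [2]."
print(resolve_citations(answer, sources))  # ['https://example.com/hr/remote']
```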

Fine-Tuning

- Opaque Decision-Making: The model’s outputs are based on stored knowledge without showing where the information originated.

- Difficult to Trace Errors: If the model generates incorrect responses, it’s harder to determine where the mistake came from.

- Example: A legal AI model trained on past cases that generates legal arguments without showing sources, making it less transparent.

5. Maintenance and Updates

RAG (Retrieval-Augmented Generation)

- Minimal Model Updates: Since knowledge is external, updates only require modifying the knowledge base rather than retraining the model.

- Less Downtime: Businesses can continuously update information without taking the model offline.

- Example: A customer service AI that pulls information from an updated FAQ database, ensuring users always get the latest answers.
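The key maintenance point is that updating a RAG system means writing to the document store, not retraining the model. The class below is a hypothetical in-memory stand-in for a real vector database or search index, with naive keyword matching instead of embeddings; it shows an FAQ entry being replaced so the very next query sees the new answer.

```python
import re


def _words(text: str) -> set[str]:
    """Lowercased alphanumeric tokens, used for naive keyword matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class KnowledgeBase:
    """Hypothetical in-memory document store keyed by ID (stand-in for a vector DB)."""

    def __init__(self) -> None:
        self._docs: dict[str, str] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        # Insert a new entry or overwrite an existing one; no model retraining involved.
        self._docs[doc_id] = text

    def delete(self, doc_id: str) -> None:
        self._docs.pop(doc_id, None)

    def search(self, query: str) -> list[str]:
        # Return every document that shares at least one word with the query.
        q = _words(query)
        return [text for text in self._docs.values() if q & _words(text)]


kb = KnowledgeBase()
kb.upsert("faq-returns", "Returns are accepted within 30 days of purchase.")
before = kb.search("returns policy")

# The policy changes: overwrite the FAQ entry. The LLM itself is untouched.
kb.upsert("faq-returns", "Returns are accepted within 60 days of purchase.")
after = kb.search("returns policy")

print(before)  # ['Returns are accepted within 30 days of purchase.']
print(after)   # ['Returns are accepted within 60 days of purchase.']
```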

Fine-Tuning

- Frequent Retraining Required: Incorporating new information requires another fine-tuning run, which can be costly and time-consuming.

- Risk of Outdated Responses: If not updated frequently, the model may provide obsolete information.

- Example: A medical diagnosis AI that needs retraining every year with new clinical research findings to stay accurate.

6. Use in Regulated or Sensitive Environments

RAG (Retrieval-Augmented Generation)

- Preferred for Auditable Fields: Suitable for industries where it is crucial to trace information back to its source (e.g., healthcare, law, finance).

- Dynamic Compliance Management: Can draw on compliance databases at query time, helping keep responses aligned with current regulations.

- Example: A healthcare chatbot retrieving medical guidelines from an official database to provide accurate, up-to-date advice.
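One way RAG supports auditability is by filtering what the retriever may pass to the model. The sketch below assumes each passage carries metadata for its source, effective date, and whether it has been superseded; the field names, the `APPROVED_SOURCES` allow-list, and the sample passages are hypothetical. Because the filter runs before generation, every passage the model sees comes from an approved, current document.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Passage:
    text: str
    source: str        # hypothetical metadata: who published the document
    effective: date    # date the guidance took effect
    superseded: bool   # True once a newer version replaces it


# Hypothetical allow-list of sources approved by a compliance team.
APPROVED_SOURCES = {"official-guidelines", "internal-compliance"}


def compliant(passages: list[Passage], today: date) -> list[Passage]:
    """Keep only current guidance from approved sources before it reaches the model."""
    return [
        p for p in passages
        if p.source in APPROVED_SOURCES and not p.superseded and p.effective <= today
    ]


retrieved = [
    Passage("Dosage guidance v3", "official-guidelines", date(2024, 1, 1), False),
    Passage("Dosage guidance v2", "official-guidelines", date(2022, 1, 1), True),
    Passage("Forum post about dosage", "web-forum", date(2024, 3, 1), False),
    Passage("Draft guidance v4", "official-guidelines", date(2030, 1, 1), False),
]
allowed = compliant(retrieved, today=date(2025, 1, 1))
print([p.text for p in allowed])  # ['Dosage guidance v3']
```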

Fine-Tuning

- Better for Controlled Environments: Works well when information should not change frequently or when direct AI-generated responses are required.

- More Secure but Less Transparent: Since fine-tuning doesn’t rely on external sources, it may reduce security risks but lacks traceability.

- Example: A banking AI fine-tuned to generate financial reports based on pre-approved company policies, reducing external data dependencies.

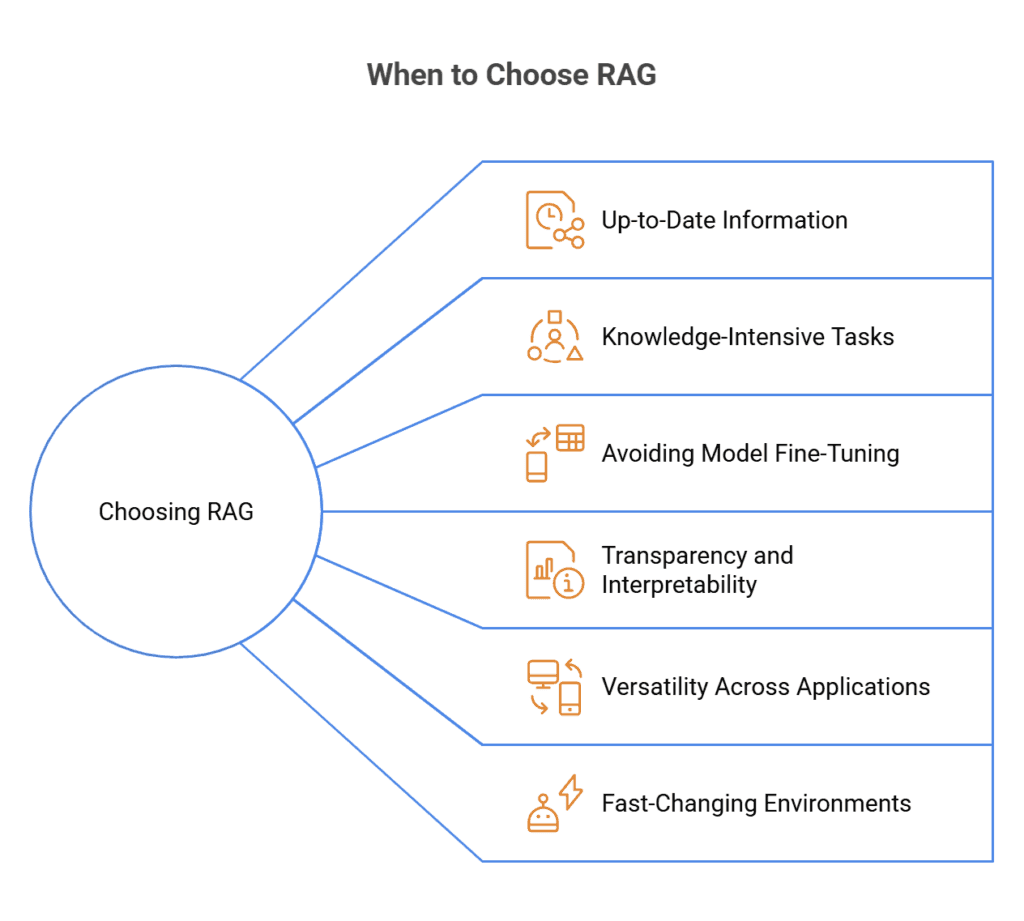

When to Choose RAG (Retrieval-Augmented Generation)

Retrieval-Augmented Generation (RAG) is a powerful approach for enhancing the capabilities of large language models (LLMs) by integrating external knowledge sources. Here are the key scenarios where RAG is the ideal choice:

1. Need for Up-to-Date Information

RAG excels in environments where real-time or frequently updated data is critical. By connecting the model to live databases, APIs, or web sources, it ensures access to the latest information. This makes it particularly useful for applications like:

- News summarization

- Research assistants

- Customer support systems that rely on current policies or product details.

2. Handling Knowledge-Intensive Tasks

If your application requires detailed factual knowledge or domain-specific expertise that is not included in the model’s training data, RAG is a better option. It dynamically retrieves relevant documents or data from external sources, making it suitable for:

- Question answering

- Knowledge-intensive content generation

- Technical or academic writing

3. Avoiding Model Fine-Tuning

RAG does not require modifying the underlying model, which reduces complexity and resource requirements. This makes it ideal when:

- You lack the computational resources or expertise for fine-tuning.

- The task involves diverse or rapidly changing datasets that would make fine-tuning impractical.

4. Ensuring Transparency and Interpretability

RAG allows users to trace the sources of retrieved information, enhancing trust and accountability in generated outputs. This is particularly important in applications like:

- Legal or financial advisory systems

- Medical decision support tools

5. Versatility Across Applications

RAG supports a wide range of use cases by combining generative and retrieval-based techniques. It balances creativity with factual accuracy, making it suitable for:

- Conversational AI

- Summarization tools

- Educational platforms requiring accurate and context-aware responses.

6. Fast-Changing Environments

In industries where knowledge evolves rapidly, such as technology or healthcare, RAG ensures adaptability by continuously incorporating new information without retraining the model.

By leveraging external knowledge dynamically, RAG offers a scalable and flexible solution for applications that demand accuracy, relevance, and up-to-date responses.

When to Choose Fine-Tuning

Fine-tuning a large language model (LLM) involves customizing a pre-trained model for a specific task or domain by training it further on specialized datasets. Below are the key scenarios where fine-tuning is the optimal choice:

1. Task or Domain-Specific Requirements

Fine-tuning is ideal when your application requires the model to handle highly specialized tasks or domains. By training the model on domain-specific data, it can better understand unique terminology, language patterns, and contextual nuances. Examples include:

- Legal document analysis

- Medical diagnosis support

- Industry-specific chatbots.

2. High Accuracy and Performance Needs

When precision is critical, fine-tuning enhances the model’s ability to generate accurate and contextually relevant outputs. This is particularly beneficial for:

- Sentiment analysis

- Named entity recognition

- Document summarization.

3. Proprietary or Confidential Data

If your application relies on proprietary or sensitive data that is not publicly available, fine-tuning lets you incorporate this data directly into the model, better aligning it with your organization’s unique knowledge base. Because models can memorize training examples, sensitive records should still be reviewed or anonymized before training to protect privacy.

4. Frequent Use of a Specific Task

For tasks that are repeated often within a business or workflow, fine-tuning can significantly improve efficiency and consistency. For instance:

- Automated customer support responses

- Product recommendation systems.

5. Custom Style or Output Requirements

Fine-tuning is effective when you need the model to generate outputs in a specific tone, style, or format. This is useful for applications such as:

- Creative writing tools (e.g., generating poetry or scripts)

- Tailored marketing content creation.
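Style fine-tuning is driven mostly by the training examples themselves. Many hosted fine-tuning services accept chat-formatted JSON Lines, one example per line with a `messages` list of `role`/`content` turns, though the exact schema varies by provider, so check its documentation. This sketch assumes that format; the system instruction and product blurbs are invented sample data, and a real dataset would need many more examples.

```python
import json

# Hypothetical target style the model should learn.
SYSTEM = "You write upbeat, two-sentence product blurbs."

# Invented sample pairs: (product, blurb written in the desired style).
examples = [
    ("Noise-cancelling headphones",
     "Silence the commute and turn up the joy. Your playlist has never sounded this close."),
    ("Insulated water bottle",
     "Ice-cold at noon, still icy at dusk. Hydration just got an upgrade."),
]


def to_record(product: str, blurb: str) -> dict:
    """One chat-formatted training example: the prompt plus the exact reply we want."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM},
            {"role": "user", "content": f"Write a blurb for: {product}"},
            {"role": "assistant", "content": blurb},
        ]
    }


# JSON Lines: one JSON object per line, ready to save as a .jsonl training file.
jsonl = "\n".join(json.dumps(to_record(p, b)) for p, b in examples)
print(jsonl.splitlines()[0])
```

Writing `jsonl` to a file and uploading it to your provider’s fine-tuning endpoint is the usual next step.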

6. Limited Generalization Needs

If your use case does not require broad generalization across diverse topics but instead focuses on a narrow scope, fine-tuning ensures the model performs optimally within that scope.

Combining RAG and Fine-Tuning

In many scenarios, combining Retrieval-Augmented Generation (RAG) and fine-tuning can yield superior results by leveraging the strengths of both approaches. This hybrid strategy is particularly useful when addressing complex or dynamic use cases. Below are the key methods and benefits of combining these techniques:

1. Retrieval-Augmented Fine-Tuning (RAFT)

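- RAFT fine-tunes a model on training examples that pair each question with retrieved documents, including both the relevant document and irrelevant "distractor" documents, so the model learns to draw on useful context and ignore noise.

- This approach enhances the model’s ability to generate accurate and contextually relevant outputs from retrieved content while tailoring it to specific tasks or domains.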

- Example: A legal AI assistant could retrieve case law using RAG and then be fine-tuned on this data for improved accuracy in legal reasoning.
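A simplified sketch of how RAFT-style training examples can be assembled: each question is paired with the document that answers it (the "oracle") plus randomly chosen distractor documents, and in some examples the oracle is left out so the model also learns not to over-trust retrieval. The function name, the `p_oracle` parameter, and the `<doc>` tags are illustrative assumptions, and the corpus is invented placeholder text, not real case law. The published RAFT recipe additionally trains on chain-of-thought answers that quote the relevant passage.

```python
import random


def make_raft_example(question, oracle, corpus, answer,
                      num_distractors=2, p_oracle=0.8, rng=None):
    """Build one RAFT-style example: question + shuffled documents -> target answer."""
    rng = rng or random.Random()
    # Distractors: documents that do not answer this question.
    distractors = rng.sample([d for d in corpus if d != oracle], num_distractors)
    # Include the oracle only some of the time, so the model also learns to
    # cope when retrieval misses the right document.
    docs = distractors + ([oracle] if rng.random() < p_oracle else [])
    rng.shuffle(docs)
    context = "\n".join(f"<doc>{d}</doc>" for d in docs)
    return {"prompt": f"{context}\nQuestion: {question}\nAnswer:", "completion": answer}


# Invented placeholder corpus, e.g. case-law summaries for a legal assistant.
corpus = [
    "Ruling A: notice of termination must be given in writing.",
    "Ruling B: late fees above the contract rate are unenforceable.",
    "Ruling C: oral agreements can be binding for short-term services.",
    "Ruling D: warranties cannot be waived for consumer goods.",
]
example = make_raft_example(
    question="Does a termination notice need to be in writing?",
    oracle=corpus[0],
    corpus=corpus,
    answer="Yes. According to Ruling A, notice of termination must be given in writing.",
    rng=random.Random(42),
)
print(example["prompt"])
```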

2. Fine-Tuning RAG Components

- Instead of fine-tuning the entire model, specific components of a RAG system, such as the retriever or generator, can be fine-tuned to address performance gaps.

- This targeted fine-tuning improves the system’s ability to retrieve and process domain-specific information effectively.
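When the retriever is the weak link, a common approach is to fine-tune its embedding model with a contrastive objective: each query should score its matching passage higher than the other passages in the same batch (so-called in-batch negatives). The function below computes that InfoNCE-style loss for toy two-dimensional embeddings; in practice the vectors come from the encoder being fine-tuned, and a framework such as PyTorch minimizes the loss by gradient descent. The vectors and the `temperature` value are assumptions for illustration.

```python
import math


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def in_batch_contrastive_loss(queries, passages, temperature=0.05):
    """InfoNCE loss with in-batch negatives.

    queries[i] should match passages[i]; every other passage in the batch acts
    as a negative. Lower loss means the retriever ranks the right passage higher.
    """
    total = 0.0
    for i, q in enumerate(queries):
        logits = [dot(q, p) / temperature for p in passages]
        m = max(logits)  # subtract the max before exp() for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_sum_exp - logits[i]  # negative log-softmax of the true pair
    return total / len(queries)


# Toy embeddings: in practice these come from the encoder being fine-tuned.
queries = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[0.9, 0.1], [0.1, 0.9]]     # each query's true passage is closest to it
misaligned = [[0.1, 0.9], [0.9, 0.1]]  # true passages point the wrong way

print(in_batch_contrastive_loss(queries, aligned))     # close to 0
print(in_batch_contrastive_loss(queries, misaligned))  # large (about 16)
```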

3. Balancing Dynamic and Static Knowledge

- RAG is ideal for accessing dynamic, real-time information, while fine-tuning enables specialization in static or domain-specific tasks.

- Combining both ensures that the model remains adaptable to new data while excelling in specialized tasks.

4. Improved Scalability and Accuracy

- By integrating RAG’s retrieval capabilities with fine-tuned domain expertise, organizations can scale their AI systems without sacrificing accuracy.

- This hybrid approach is particularly valuable for applications requiring both up-to-date knowledge and deep contextual understanding, such as customer support or research tools.

Kanerika: Your Trusted Partner for AI-Driven Business Transformation

Kanerika is a fast-growing tech services company specializing in AI and data-driven solutions that help businesses overcome challenges, enhance operations, and drive measurable results. We design and deploy custom AI models tailored to specific business needs, boosting productivity, efficiency, and cost optimization.

With a proven track record of successful AI implementations across industries like finance, healthcare, logistics, and retail, we empower organizations with scalable, intelligent solutions that transform decision-making, automate processes, and enhance customer experiences.

Our team of AI experts works closely with clients to deliver actionable insights and build solutions that drive growth. Whether you’re looking to streamline operations, improve efficiency, or stay ahead in a competitive landscape, Kanerika is here to help.

Transform Challenges Into Growth With AI Expertise!

Partner with Kanerika for Expert AI implementation Services

FAQs

Is fine-tuning better than RAG?

Fine-tuning is better for domain-specific accuracy, where models need deep expertise in a specialized field. RAG is better for real-time and dynamic knowledge retrieval without retraining. The choice depends on your use case.

What is the difference between RAG, fine-tuning, and prompt engineering?

RAG retrieves external knowledge dynamically at inference time, while fine-tuning modifies the model’s internal weights to improve performance on specific tasks. Prompt engineering optimizes the input prompt to guide the model’s response without modifying the model or its weights.

Can RAG and fine-tuning be used together?

Yes, combining RAG and fine-tuning creates a powerful AI system. Fine-tuning enhances domain expertise, while RAG ensures real-time access to updated information, reducing outdated responses.

Is RAG better than fine-tuning for hallucinations?

Generally, yes. RAG tends to reduce hallucinations because it grounds responses in information retrieved from trusted sources rather than relying only on pre-trained knowledge, although the model can still misread or ignore the retrieved context. Fine-tuned models can also hallucinate, especially if trained on biased or incomplete data.

Does fine-tuning improve accuracy?

Yes, fine-tuning improves accuracy for specific tasks and specialized knowledge areas by training the model on carefully curated datasets. However, it does not guarantee real-time accuracy if external information changes.

When should I use RAG instead of fine-tuning?

Use RAG when you need real-time, up-to-date responses, especially in fields like news, legal research, and finance. Fine-tuning is preferable for well-defined, static tasks where the knowledge base doesn’t change frequently.

What are the main challenges of RAG?

RAG requires a high-quality retrieval system, structured databases, and efficient indexing. If the retrieval mechanism is weak, responses may be irrelevant or incomplete.

Does RAG require more computational resources than fine-tuning?

RAG requires fast retrieval systems and external databases, which can increase processing time and infrastructure costs. Fine-tuning, on the other hand, requires heavy GPU resources for model training but is computationally lighter during inference.