In March 2025, a Chinese startup launched Manus AI — a fully autonomous agent that doesn’t just reply, it acts. Give it a vague task like “plan a trip,” and it books flights, checks the weather, compares hotels, and sends a final itinerary, all without step-by-step prompts. That’s the idea behind LLM-powered autonomous agents. They utilize large language models to think, make decisions, and execute tasks with minimal human intervention.

Big players are moving fast. Nvidia’s new Nemotron models, built on Meta’s LLaMA, are designed to power agents for fraud detection, customer support, and factory operations. According to OpenAI, millions of autonomous AI agents are expected to operate “somewhere in the cloud” in just a few years, working under human supervision to generate economic value.

In this blog, we’ll break down how LLM-powered autonomous agents work, where they’re being used, and why they’re more than just chatbots. Keep reading.

Key Takeaways

- LLM-powered autonomous agents go beyond basic bots by understanding context, reasoning, and executing multi-step tasks.

- They combine large language models with memory, planning, and tool integration to automate complex workflows.

- Key characteristics include adaptability, continuous learning, multimodal capabilities, and secure integration.

- The technological architecture involves core LLMs, memory modules, APIs, and monitoring systems.

- These agents are already delivering real-world impact in industries such as customer service, finance, legal, HR, and healthcare.

- Benefits include improved efficiency, reduced costs, faster decision-making, and scalability.

- Implementation requires defining goals, choosing the right LLM, integrating with tools, and setting up human-in-the-loop oversight.

- Challenges such as bias, security, and accountability require careful handling through effective monitoring and governance.

- LLM-powered agents are a strategic enabler for enterprises, driving intelligent automation at scale.

What Are LLMs?

Large Language Models (LLMs) are AI models trained on vast amounts of text to understand and generate human-like language. They form the backbone of LLM-powered autonomous agents, allowing machines to interpret instructions, process information, and produce coherent text. LLMs enable agents to understand context, reason through tasks, and communicate effectively, bridging the gap between human instructions and automated action.

Examples:

- ChatGPT, built on OpenAI's GPT models such as GPT-4, can answer questions, generate content, or simulate conversations.

- Google's Gemini (formerly Bard) and Anthropic's Claude can provide insights, summaries, and suggestions from text prompts.

- Meta's LLaMA and MosaicML's MPT models are used in research and enterprise applications for language understanding and generation.

What Are LLM-Powered Autonomous Agents?

LLM-powered autonomous agents are AI systems that combine Large Language Models (LLMs) with autonomous decision-making. Unlike traditional rule-based bots, which follow predefined scripts, these agents can understand instructions, plan multi-step tasks, and execute actions independently. They use memory to maintain context and tools to interact with other systems, which lets them run complex workflows with minimal human intervention. By integrating reasoning, planning, and action, they can operate in dynamic environments, making them a significant advance in automation.

Key Characteristics:

- Autonomous Decision-Making: They leverage LLMs to understand and generate human language, enabling them to make informed decisions independently.

- Integration with Tools and Memory: These agents utilize tools and memory to enhance their functionality, allowing them to perform complex tasks and retain context over time.

- Sequential Reasoning and Planning: They can handle tasks that require sequential reasoning, planning, and memory, and they often ground each step in external documents using techniques like Retrieval-Augmented Generation (RAG).
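To make the RAG idea concrete, here is a minimal Python sketch of the retrieve-then-augment step. It assumes a tiny in-memory document list and scores documents by simple word overlap, a stand-in for the embedding search a production system would typically use; `DOCS`, `retrieve`, and `build_prompt` are hypothetical names.

```python
import re

# Hypothetical in-memory knowledge base; production systems usually search embeddings in a vector store.
DOCS = [
    "Refunds are processed within 5 business days.",
    "Our support desk is open Monday to Friday.",
    "Premium plans include priority support.",
]

def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Rank documents by word overlap with the query (a stand-in for vector similarity)."""
    q = tokenize(query)
    return sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)[:k]

def build_prompt(query: str, docs: list[str]) -> str:
    """Augment the question with retrieved context before it is sent to the LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k=2))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

print(build_prompt("How long do refunds take?", DOCS))
```

The LLM then answers from the retrieved context rather than from its training data alone, which is what keeps each reasoning step grounded.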

Technological Architecture of LLM Agents

LLM agents go beyond basic chatbots by offering reasoning and task-completion capabilities. Their architecture reflects a core LLM working alongside other modules to create an intelligent system.

1. Agent Core

This module acts as the central coordinator, receiving user input and directing the agent’s response. It leverages the LLM to process the input, retrieve relevant information from memory, and decide on the most appropriate action based on the agent’s goals and tools available.

The agent core relies on carefully crafted prompts and instructions to guide the LLM’s responses and shape the agent’s behavior. These prompts encode the agent’s persona, expertise, and desired actions.
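The loop below is a minimal sketch of how an agent core might coordinate these pieces. It assumes a hypothetical `fake_llm` function in place of a real model API, a one-tool registry, and a made-up `TOOL:<name>:<arg>` / `FINAL:<answer>` reply convention; real frameworks use their own structured formats.

```python
from typing import Callable

# System prompt that encodes the agent's persona, expertise, and allowed actions.
SYSTEM_PROMPT = (
    "You are a travel assistant. Reply with 'TOOL:<name>:<arg>' to use a tool "
    "or 'FINAL:<answer>' when you are done."
)

def fake_llm(system: str, history: list[str]) -> str:
    """Hypothetical stand-in for a real LLM call; decides based on the last message."""
    last = history[-1]
    if last.startswith("OBSERVATION:"):
        return "FINAL:" + last.removeprefix("OBSERVATION:").strip()
    return "TOOL:weather:Paris"

# Hypothetical tool the agent is allowed to call.
TOOLS: dict[str, Callable[[str], str]] = {
    "weather": lambda city: f"Sunny in {city}",
}

def run_agent(user_input: str, max_steps: int = 5) -> str:
    """Coordinate the loop: ask the LLM, run the chosen tool, feed the result back."""
    history = [f"USER: {user_input}"]
    for _ in range(max_steps):
        reply = fake_llm(SYSTEM_PROMPT, history)
        if reply.startswith("FINAL:"):
            return reply.removeprefix("FINAL:")
        _, name, arg = reply.split(":", 2)
        history.append(f"OBSERVATION: {TOOLS[name](arg)}")
    return "Stopped: step limit reached."

print(run_agent("What's the weather at my destination?"))
```

The `max_steps` cap is a simple guard against an agent that never decides it is finished.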

2. Memory Module

An effective LLM agent requires a robust memory system to store past interactions and relevant data. This memory usually includes:

- Dialogue history: Past conversations with users provide context for ongoing interactions.

- Internal logs: Information about the agent’s actions and performance can be used for self-improvement.

- External knowledge base: Facts, figures, and domain-specific knowledge relevant to the agent’s tasks.
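One way to model these three stores is a small Python dataclass. This is a sketch under simplifying assumptions: everything lives in process memory, and `AgentMemory`, `log_action`, and `success_rate` are hypothetical names rather than any framework's API.

```python
from dataclasses import dataclass, field

@dataclass
class AgentMemory:
    """Hypothetical in-process memory mirroring the three stores listed above."""
    dialogue_history: list[tuple[str, str]] = field(default_factory=list)  # (role, text)
    internal_logs: list[dict] = field(default_factory=list)                 # action records
    knowledge_base: dict[str, str] = field(default_factory=dict)            # fact lookup

    def add_turn(self, role: str, text: str) -> None:
        self.dialogue_history.append((role, text))

    def log_action(self, action: str, success: bool) -> None:
        self.internal_logs.append({"action": action, "success": success})

    def success_rate(self) -> float:
        """Share of logged actions that succeeded; a simple self-improvement signal."""
        if not self.internal_logs:
            return 0.0
        return sum(e["success"] for e in self.internal_logs) / len(self.internal_logs)

memory = AgentMemory(knowledge_base={"refund_window": "30 days"})
memory.add_turn("user", "What is the refund window?")
memory.add_turn("agent", memory.knowledge_base["refund_window"])
memory.log_action("lookup_refund_window", success=True)
memory.log_action("send_email", success=False)
print(memory.success_rate())  # 0.5
```

A production agent would typically persist these stores in a database or vector store so they survive restarts.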

3. Planning Module

The planning module lets the agent reason before it acts. It breaks tasks into subgoals, generates plans with or without external feedback (such as tool results or user corrections), and supports multi-step decision-making. It can employ techniques like chain-of-thought prompting, which asks the model to reason step by step, to improve the quality of its plans.
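As a rough illustration, the sketch below builds a chain-of-thought style planning prompt and parses the model's numbered reply into ordered subgoals. The model reply is hard-coded here as an assumption; in practice it would come from an LLM call, and the parser would need to tolerate messier output.

```python
import re

def planning_prompt(task: str) -> str:
    """Chain-of-thought style request: reason first, then list subgoals."""
    return (
        f"Task: {task}\n"
        "Think step by step about what the task requires, "
        "then list the subgoals as a numbered list, one per line."
    )

# Matches lines such as "1. Search flights" or "2) Compare hotels".
STEP_PATTERN = re.compile(r"^[ \t]*\d+[.)][ \t]*(.+)$", re.MULTILINE)

def parse_plan(llm_output: str) -> list[str]:
    """Turn the model's numbered reply into an ordered list of subgoals."""
    return [m.group(1).strip() for m in STEP_PATTERN.finditer(llm_output)]

# Hypothetical model reply; a real agent would get this from an LLM call.
reply = """The trip needs transport, lodging, and a schedule.
1. Search flights for the chosen dates
2. Compare hotels near the city center
3. Check the weather forecast
4. Assemble the final itinerary"""

print(planning_prompt("Plan a three-day trip to Lisbon"))
for number, subgoal in enumerate(parse_plan(reply), start=1):
    print(number, subgoal)
```

The free-text reasoning line is ignored by the parser, so the agent keeps the model's thinking for logging while acting only on the numbered subgoals.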

4. Tools

Tools are external resources that the agent can use to interact with other software, databases, or APIs when a task requires more than generating text. They typically take the following forms:

- External Functions: These are functions or services that are external to the LLM agent but can be accessed and utilized by the agent to perform specific tasks.

- Webhooks: Webhooks are automated messages sent from web applications when specific events occur. They can trigger actions in external systems based on certain conditions or events detected by the agent.

- Plugins: These can extend the agent’s capabilities by providing additional tools or services that enhance its performance in handling complex tasks.
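For the external-functions case, a common pattern is a registry that maps tool names to Python functions plus short descriptions the LLM can read. The sketch below assumes that pattern; `tool`, `TOOL_REGISTRY`, and the two example tools are hypothetical, and webhooks or plugins could sit behind the same `call_tool` interface.

```python
from typing import Callable

TOOL_REGISTRY: dict[str, dict] = {}

def tool(description: str):
    """Decorator that registers a function as a tool the agent may call."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOL_REGISTRY[fn.__name__] = {"fn": fn, "description": description}
        return fn
    return register

@tool("Convert an amount between two currencies at a given rate.")
def convert_currency(amount: float, rate: float) -> str:
    return f"{amount * rate:.2f}"

@tool("Look up an order status in a (hypothetical) order database.")
def order_status(order_id: str) -> str:
    fake_db = {"A100": "shipped", "A101": "processing"}
    return fake_db.get(order_id, "not found")

def describe_tools() -> str:
    """Text the agent core can place in the prompt so the LLM knows what it may call."""
    return "\n".join(f"{name}: {meta['description']}" for name, meta in TOOL_REGISTRY.items())

def call_tool(name: str, **kwargs) -> str:
    """Dispatch a tool call by name, returning an error string for unknown tools."""
    if name not in TOOL_REGISTRY:
        return f"error: unknown tool '{name}'"
    return TOOL_REGISTRY[name]["fn"](**kwargs)

print(describe_tools())
print(call_tool("order_status", order_id="A100"))          # shipped
print(call_tool("convert_currency", amount=10, rate=1.1))  # 11.00
```

Returning an error string instead of raising lets the agent show the failure to the LLM, which can then pick a different tool.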

5. API Integration

APIs play a crucial role in this architecture, acting as a bridge between LLM agents and external applications or tools. Integration with various APIs lets agents access databases, use calculators for mathematical operations, or run code in an interpreter such as Python to carry out dynamic actions.

LangChain, for example, is an open-source framework that wraps an LLM with tools, memory, and integrations so that agents can extend their functionality. By combining APIs with open-source models, engineering teams can build custom solutions that pair LLMs with external tools.

A high-level flow for LLM agent API integration could be outlined as follows:

- Input Reception: The agent receives a prompt or request.

- Processing: The LLM interprets the input and determines which external tool or data source, if any, it needs.

- API Interaction: The agent interacts with external tools or databases through APIs.

- Response Generation: Based on the processed data and API interactions, the agent produces a response or carries out an action.
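The four steps can be sketched in a few lines of Python. This is an illustration under stated assumptions: `fake_llm` returns a hard-coded JSON tool request in place of a real model, and `exchange_rate_api` is a hypothetical stand-in for an external HTTP API with fixed demo rates, not live data.

```python
import json

def exchange_rate_api(base: str, target: str) -> dict:
    """Stand-in for an external rates API (hypothetical fixed rates, not live data)."""
    rates = {("USD", "EUR"): 0.9, ("USD", "GBP"): 0.8}
    return {"rate": rates[(base, target)]}

def fake_llm(prompt: str) -> str:
    """Hypothetical LLM reply: a JSON tool request, similar in spirit to function calling."""
    return '{"tool": "exchange_rate_api", "args": {"base": "USD", "target": "EUR"}, "amount": 100}'

API_TOOLS = {"exchange_rate_api": exchange_rate_api}

def handle_request(user_request: str) -> str:
    # 1. Input reception: wrap the raw request in an instruction for the model.
    prompt = f"Choose an API for this request and reply in JSON: {user_request}"
    # 2. Processing: the LLM interprets the request and returns a structured call.
    call = json.loads(fake_llm(prompt))
    # 3. API interaction: dispatch to the named external function.
    result = API_TOOLS[call["tool"]](**call["args"])
    # 4. Response generation: turn the raw API result into a user-facing answer.
    converted = call["amount"] * result["rate"]
    base, target = call["args"]["base"], call["args"]["target"]
    return f"{call['amount']} {base} is about {converted:.2f} {target}."

print(handle_request("Convert 100 USD to EUR"))
```

In a fuller implementation, step 4 would usually pass the API result back to the LLM so it can phrase the final answer.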

Key Capabilities of LLM Agents

LLM (Large Language Model) agents are designed with advanced AI capabilities that enable seamless interaction and autonomy within digital environments. The capabilities discussed in this section revolve around processing natural language, reasoning, and learning from interactions.

1. Contextual Understanding and Reasoning

LLM agents possess advanced natural language comprehension that goes beyond simple pattern matching. They can interpret complex, nuanced contexts, understand implicit meanings, and generate responses that demonstrate deep contextual awareness. This capability allows them to grasp intricate scenarios, extract meaningful insights, and provide intelligent, relevant solutions across diverse communication contexts.

2. Multi-Step Problem Solving

These intelligent agents excel at breaking down complex problems into manageable steps, developing strategic approaches to challenge resolution. They can create detailed action plans, anticipate potential obstacles, and dynamically adjust their problem-solving strategies. By combining logical reasoning with creative thinking, LLM agents can tackle intricate challenges that require sophisticated cognitive processing.

3. Tool and API Integration

LLM agents can seamlessly interact with external tools, APIs, and software systems, extending their capabilities beyond language processing. They can retrieve information, perform calculations, generate code, and execute complex workflows across different platforms. This integration enables agents to transform abstract instructions into concrete actions, bridging the gap between natural language understanding and practical task execution.

4. Memory and Context Retention

Unlike traditional chatbots, LLM agents maintain context throughout extended interactions, within the limits of the model's context window and any external memory they are connected to. They can recall previous conversation details, track ongoing tasks, and maintain coherent conversational threads. This memory capability enables more natural, continuous interactions, allowing agents to provide personalized and contextually relevant responses that evolve throughout the conversation.
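Because a model's context window is finite, agents typically decide which turns to keep. The sketch below assumes a crude word-count proxy for tokens (real tokenizers count differently) and keeps the system prompt plus the most recent turns that fit a budget; `trim_history` is a hypothetical helper.

```python
def approx_tokens(text: str) -> int:
    """Rough proxy: one token per whitespace-separated word (real tokenizers differ)."""
    return len(text.split())

def trim_history(system: str, turns: list[str], budget: int) -> list[str]:
    """Keep the system prompt and as many of the most recent turns as fit in the budget."""
    used = approx_tokens(system)
    kept: list[str] = []
    for turn in reversed(turns):  # walk from newest to oldest
        cost = approx_tokens(turn)
        if used + cost > budget:
            break
        kept.append(turn)
        used += cost
    return [system] + list(reversed(kept))  # restore chronological order

turns = [
    "user: I want to fly to Lisbon in May",
    "agent: Noted, Lisbon in May",
    "user: Budget is 800 euros",
    "agent: Got it, 800 euros",
    "user: Prefer morning flights",
]
print(trim_history("system: You are a travel agent", turns, budget=20))
```

In this example the Lisbon destination falls out of the window, which is why production agents often summarize older turns or store key facts in an external memory instead of relying on the raw transcript alone.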

5. Adaptive Learning and Optimization

LLM agents can refine their performance over time when they are paired with feedback loops. Teams analyze interaction outcomes, identify areas for improvement, and adjust prompts, retrieval data, or fine-tuning data accordingly; the underlying model does not retrain itself after each conversation. This optimization cycle helps agents become more efficient, accurate, and sophisticated over time, using lessons from past interactions to enhance their problem-solving and communication abilities.

6. Cross-Domain Knowledge Synthesis

These agents can integrate knowledge from multiple domains, creating unique insights by connecting information across different fields. They can understand and generate content in various disciplines, translate complex concepts, and provide interdisciplinary perspectives. This capability enables LLM agents to offer comprehensive, nuanced understanding that transcends traditional disciplinary boundaries.

7. Generative and Creative Capabilities

LLM agents can generate original content, from written text to code, demonstrating remarkable creative potential. They can produce high-quality, contextually appropriate outputs across various formats, including technical documentation, creative writing, and problem-solving scenarios. This generative ability allows them to create novel solutions and innovative approaches to complex challenges.

8. Multimodal Interaction

Advanced LLM agents can process and generate multiple types of input and output, including text, images, and potentially audio and video. They can interpret visual information, describe images, and generate multimodal content. This capability enables more comprehensive and flexible interactions, expanding the potential applications of AI assistants across different communication mediums.

9. Ethical Reasoning and Bias Mitigation

LLM agents are increasingly designed with built-in safety guidelines and guardrails to recognize and mitigate potential biases. They can flag ethical concerns with proposed actions, provide balanced perspectives, and avoid generating harmful or inappropriate content. These safeguards support more responsible and trustworthy AI interactions, although they reduce bias rather than eliminate it.

10. Autonomous Task Execution

These intelligent agents can independently break down complex tasks, develop execution strategies, and carry out multi-step processes with minimal human intervention. They can manage workflows, coordinate multiple actions, and adapt to changing requirements, demonstrating a level of autonomy that goes beyond traditional automated systems.

How to Implement LLM Agents in Your Projects?

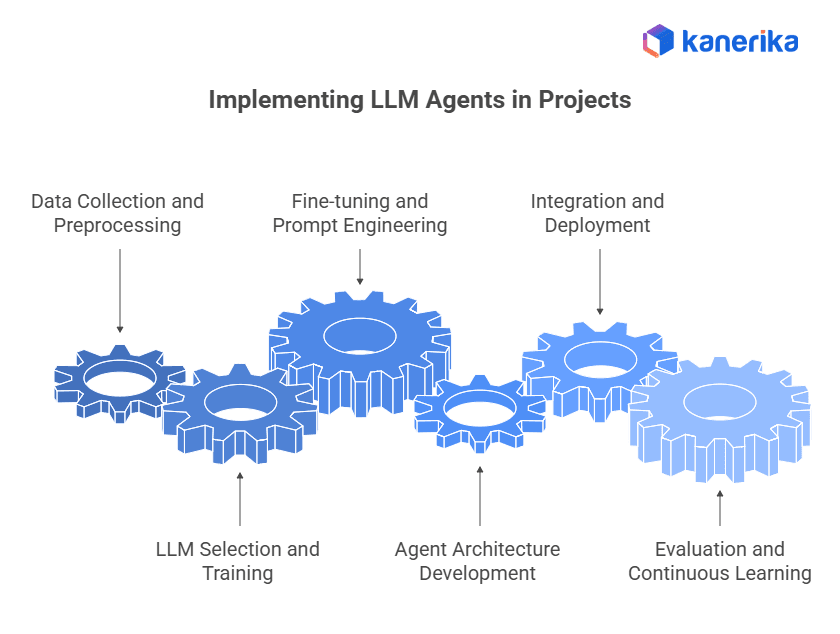

Implementing LLM agents involves several steps, from gathering data to deployment and ongoing improvement.

1. Data Collection and Preprocessing

The foundation of any LLM agent is the data on which it’s trained. This data should be relevant to the specific tasks the agent will perform and should be carefully curated to minimize bias and inaccuracies.

Preprocessing involves cleaning and organizing the data so that it is suitable for training or fine-tuning the LLM. This may involve tasks such as removing irrelevant information, formatting text consistently, and handling missing data points.
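A minimal preprocessing pass might look like the sketch below. It assumes records arrive as dictionaries with a `text` field (a hypothetical schema) and applies the steps just described: stripping HTML remnants, normalizing whitespace, skipping missing entries, and removing case-insensitive duplicates.

```python
import re
import unicodedata

def clean_text(text: str) -> str:
    """Normalize Unicode, strip leftover HTML tags, and collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)
    text = re.sub(r"<[^>]+>", " ", text)  # remove HTML remnants
    return re.sub(r"\s+", " ", text).strip()

def preprocess(records: list[dict]) -> list[dict]:
    """Drop records with missing text, clean the rest, and remove duplicates."""
    seen: set[str] = set()
    cleaned = []
    for rec in records:
        raw = rec.get("text")
        if not raw:  # handle missing data points
            continue
        text = clean_text(raw)
        key = text.lower()
        if not text or key in seen:  # skip empty results and duplicates
            continue
        seen.add(key)
        cleaned.append({**rec, "text": text})
    return cleaned

sample = [
    {"id": 1, "text": "<p>Reset your   password from Settings.</p>"},
    {"id": 2, "text": None},
    {"id": 3, "text": "Reset your password from Settings."},
    {"id": 4, "text": "Invoices are emailed monthly.\n\n"},
]
print(preprocess(sample))
```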

2. LLM Selection and Training

Choosing the right LLM for your project depends on factors like the size and complexity of your dataset, computational resources available, and desired functionalities. Popular LLM options include GPT-3, Jurassic-1 Jumbo, and Megatron-Turing NLG.

Training involves feeding the preprocessed data into the chosen LLM architecture. This computationally intensive process can take days or even weeks, depending on the model size and the availability of hardware resources.

3. Fine-tuning and Prompt Engineering

While pre-trained LLMs offer a strong foundation, fine-tuning is often necessary to optimize performance for specific tasks. This involves training the LLM on a smaller, more targeted dataset related to the agent’s domain.
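Fine-tuning data is often stored as JSONL, one example per line. The sketch below assumes a chat-style `messages` layout (system, user, and assistant turns) such as the one used by OpenAI's fine-tuning API; check your provider's documentation for its exact schema. The persona and examples are hypothetical.

```python
import json
import tempfile
from pathlib import Path

SYSTEM = "You are a support agent for an online store."  # hypothetical persona

# Hypothetical domain examples: (customer question, ideal agent answer).
examples = [
    ("Where is my order?", "Please share your order ID and I will check its status."),
    ("Can I return a gift?", "Yes, gifts can be returned within 30 days with a receipt."),
]

def to_chat_record(question: str, answer: str) -> dict:
    """One training example in the chat-style 'messages' layout."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }

def write_jsonl(path: Path, rows: list[dict]) -> None:
    """Write one JSON object per line, the usual JSONL layout for fine-tuning uploads."""
    with path.open("w", encoding="utf-8") as f:
        for row in rows:
            f.write(json.dumps(row, ensure_ascii=False) + "\n")

dataset_path = Path(tempfile.gettempdir()) / "support_finetune.jsonl"
write_jsonl(dataset_path, [to_chat_record(q, a) for q, a in examples])
print(dataset_path.read_text(encoding="utf-8").splitlines()[0])
```

Real fine-tuning sets usually need far more examples than this; the point here is the shape of each record.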

Prompt engineering is crucial for effective communication with the LLM. Well-designed prompts guide the LLM toward the desired outputs, ensuring the agent stays on track during interactions.
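Here is one way to structure a prompt along those lines: a role, explicit rules, a few worked examples (few-shot prompting), and a strict output format, plus a parser that falls back safely when the model strays. The triage labels and examples are hypothetical.

```python
# Hypothetical worked examples that show the model the expected format.
FEW_SHOT = [
    ("My card was charged twice.", "billing"),
    ("The app crashes on login.", "technical"),
]
ALLOWED_LABELS = ("billing", "technical", "other")

def build_classification_prompt(message: str) -> str:
    """Combine a role, explicit rules, worked examples, and the new input."""
    lines = [
        "You are a support triage agent.",
        "Classify the message as exactly one of: billing, technical, other.",
        "Answer with the label only.",
        "",
    ]
    for text, label in FEW_SHOT:  # few-shot examples anchor the output format
        lines += [f"Message: {text}", f"Label: {label}", ""]
    lines += [f"Message: {message}", "Label:"]
    return "\n".join(lines)

def parse_label(reply: str) -> str:
    """Guard against off-format replies by falling back to 'other'."""
    label = reply.strip().lower()
    return label if label in ALLOWED_LABELS else "other"

print(build_classification_prompt("I was billed for a plan I cancelled."))
```

Validating the reply in code keeps a single off-format answer from derailing the rest of the agent's workflow.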

4. Agent Architecture Development

Beyond the LLM, the agent needs an architecture to handle user input, manage memory, and potentially interact with external tools or knowledge bases. This architecture will vary depending on the complexity of the agent’s functionalities.

5. Integration and Deployment

Once the agent is trained and fine-tuned, it must be integrated with the platform on which it will be used. This might involve connecting the agent to a chatbot interface, website, or mobile application.

Deployment involves making the agent accessible to users. This could include launching it on a cloud platform or integrating it into existing systems.

6. Evaluation and Continuous Learning

Monitoring the agent’s performance after deployment is crucial. This involves collecting user feedback, analyzing the agent’s outputs, and identifying areas for improvement.
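Those signals can be rolled up into a few simple health metrics. The sketch assumes a hypothetical log format with one record per conversation; the metric names are illustrative, not a standard.

```python
from collections import Counter

# Hypothetical post-deployment log: one record per conversation.
feedback_log = [
    {"resolved": True,  "rating": "up",   "escalated": False},
    {"resolved": True,  "rating": "up",   "escalated": False},
    {"resolved": False, "rating": "down", "escalated": True},
    {"resolved": True,  "rating": None,   "escalated": False},
]

def summarize(log: list[dict]) -> dict:
    """Compute simple health metrics for a deployed agent."""
    n = len(log)
    ratings = Counter(r["rating"] for r in log if r["rating"] is not None)
    rated = sum(ratings.values())
    return {
        "resolution_rate": sum(r["resolved"] for r in log) / n,
        "escalation_rate": sum(r["escalated"] for r in log) / n,
        # Only conversations that received a rating count toward this share.
        "thumbs_up_share": ratings["up"] / rated if rated else None,
    }

print(summarize(feedback_log))
```

Tracking these numbers over time shows whether prompt or data changes actually improved the agent.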

LLM agents can continuously learn and improve over time. By feeding them new data and refining prompts, you can enhance their accuracy, expand their capabilities, and adapt them to evolving user needs.

Real-world Impact: Practical Applications of LLM Agents

Large language model (LLM) agents are transforming various industries by offering a unique blend of communication and task-completion skills. Their ability to understand natural language, access information, and automate tasks makes them valuable tools across diverse fields. Here’s a closer look at some of the most impactful applications of LLM agents:

1. Customer Support

LLM agents can manage entire customer support workflows. They interpret queries, search relevant knowledge bases, provide accurate responses, and escalate complex cases only when necessary. By handling routine tickets and interactions, these agents significantly reduce response times, freeing human agents for higher-value tasks. Some enterprises deploy them to process thousands of tickets daily, ensuring consistent support quality without scaling human resources proportionally.
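The "escalate only when necessary" rule can be expressed as a small routing function. This sketch assumes each drafted reply comes with a confidence score in [0, 1] and that some topics always go to a human; how that score is produced (model self-assessment, retrieval similarity, or a separate classifier) is left open, and the 0.75 threshold is an arbitrary example.

```python
from dataclasses import dataclass

# Hypothetical topics that always go to a human, regardless of confidence.
ALWAYS_ESCALATE = {"legal", "fraud"}

@dataclass
class Draft:
    topic: str
    answer: str
    confidence: float  # assumed score in [0, 1]; its source is a design choice

def route(draft: Draft, threshold: float = 0.75) -> str:
    """Send routine, confident answers directly; escalate everything else."""
    if draft.topic in ALWAYS_ESCALATE:
        return "escalate: sensitive topic"
    if draft.confidence < threshold:
        return "escalate: low confidence"
    return "reply: " + draft.answer

print(route(Draft("shipping", "Your parcel ships tomorrow.", 0.92)))
print(route(Draft("billing", "You may be eligible for a refund.", 0.41)))
print(route(Draft("fraud", "Your account looks fine.", 0.99)))
```

Checking sensitive topics before confidence means even a very confident draft on a fraud case still reaches a person.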

2. IT and DevOps

In IT operations and DevOps, LLM agents monitor system health, detect anomalies, and automatically trigger corrective actions. They can restart services, run diagnostic scripts, debug code snippets, and even optimize deployment pipelines. This automation not only speeds up troubleshooting but also minimizes human error, allowing teams to focus on strategic initiatives rather than repetitive operational tasks.

3. Sales and Marketing

LLM agents assist sales and marketing teams by automating repetitive workflows. They can draft personalized outreach emails, schedule follow-ups, update CRM systems, and provide insights on client engagement. In marketing, these agents analyze campaign performance, suggest optimizations, generate content, and track trends. Their support ensures faster, data-driven decisions and consistent messaging, which is crucial for scaling business growth.

4. Healthcare

In healthcare, LLM agents streamline administrative and clinical workflows. They assist with patient intake, summarize medical histories, schedule appointments, and even provide reminders. For clinicians, agents can retrieve the latest research papers, compare treatment options, and draft clinical notes. By reducing administrative burdens and improving access to relevant information, these agents enable healthcare professionals to focus more on patient care and informed decision-making.

5. Finance and Accounting

LLM agents help finance teams by automating routine tasks like invoice processing, transaction reconciliation, and report generation. They can answer queries about budgets, expenses, and compliance rules, ensuring accuracy and consistency throughout the process. With faster report preparation and real-time insights, these agents support better financial planning, risk management, and operational efficiency.

6. Legal and Compliance

In legal and compliance functions, LLM agents review contracts, identify potential risks, and draft legal documents efficiently. Compliance teams leverage them to monitor policy changes, detect violations, and maintain audit-ready records. This reduces manual workloads and ensures that regulatory requirements are met promptly, minimizing legal and operational risks.

7. Data and Analytics

LLM agents help organizations make sense of complex data. They clean datasets, run queries, interpret results, and present insights in clear, actionable language. Non-technical teams can rely on them to understand trends and metrics without needing advanced analytics expertise. Some agents even generate dashboards or SQL queries directly from natural language prompts, democratizing data access and decision-making.
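The sketch below shows the natural-language-to-SQL pattern against an in-memory SQLite database, assuming a hypothetical `fake_text_to_sql` function in place of the LLM. It also adds a minimal read-only check before execution; a string check like this is not a complete defense, so production setups typically also connect with read-only database credentials.

```python
import sqlite3

def fake_text_to_sql(question: str) -> str:
    """Hypothetical stand-in for an LLM that translates a question into SQL."""
    return "SELECT region, SUM(amount) AS total FROM sales GROUP BY region ORDER BY total DESC"

def is_read_only(sql: str) -> bool:
    """Minimal safety check: a single statement, and it must be a SELECT."""
    stripped = sql.strip().rstrip(";")
    return stripped.lower().startswith("select") and ";" not in stripped

def answer(question: str, conn: sqlite3.Connection) -> list[tuple]:
    sql = fake_text_to_sql(question)
    if not is_read_only(sql):
        raise PermissionError(f"refusing to run non-SELECT SQL: {sql}")
    return conn.execute(sql).fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("North", 120.0), ("South", 80.0), ("North", 30.0)],
)
print(answer("Which region sold the most?", conn))
```

The agent would then hand these rows back to the LLM to explain the result in plain language.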

8. Human Resources

HR teams utilize LLM-powered autonomous agents to streamline recruiting, onboarding, and employee engagement processes. They can screen resumes, schedule interviews, answer HR-related queries, and guide new hires through company policies and systems. By automating routine HR tasks, these agents enable HR professionals to focus on enhancing the employee experience, developing talent, and driving strategic initiatives.

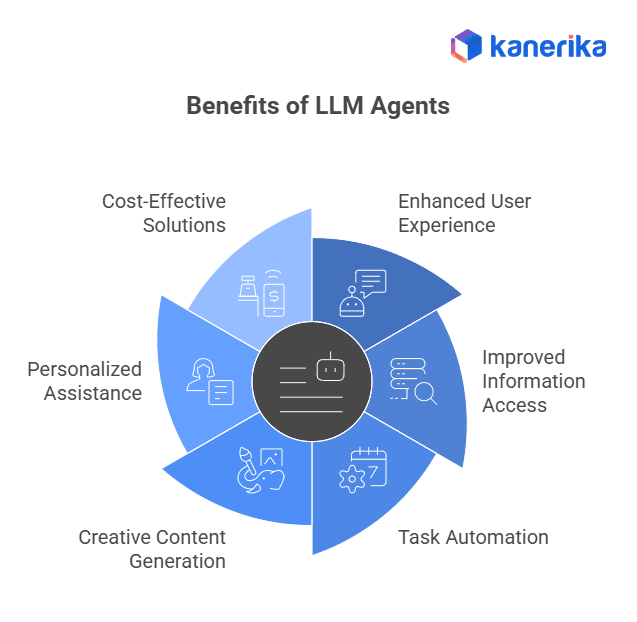

What Are the Advantages of Using LLM Agents?

LLM agents, which combine large language models with additional functionalities, offer several advantages over traditional approaches to human-computer interaction. Here are some key benefits:

1. Cost-Effective Solutions

By automating tasks such as customer support, content creation, and language translation, LLM agents offer cost-effective solutions for businesses across multiple domains.

2. Enhanced User Experience

LLM agents excel at natural language processing, enabling them to engage in natural, intuitive conversations. They can understand nuances, humor, and intent, creating a more user-friendly and interactive experience.

3. Improved Information Access and Retrieval

By integrating with search engines and domain-specific knowledge bases, LLM-powered autonomous agents can access and process real-time information. This enables them to answer questions more accurately and act as knowledgeable assistants in their respective fields.

4. Task Automation and Efficiency

LLM agents can handle routine tasks, such as scheduling appointments, making reservations, or sending reminders, thereby freeing up human resources. Through API integrations, they can interact with external systems to perform actions like booking flights or controlling smart devices.

5. Creative Content Generation

Some agents can produce creative content in various formats, supporting storytelling, scriptwriting, or marketing campaigns. They can also manage to-do lists and schedules, boosting overall productivity.

6. Personalized Assistance

LLM agents can provide personalized recommendations and guidance based on user interactions, enhancing experiences in customer service, education, or personal productivity.

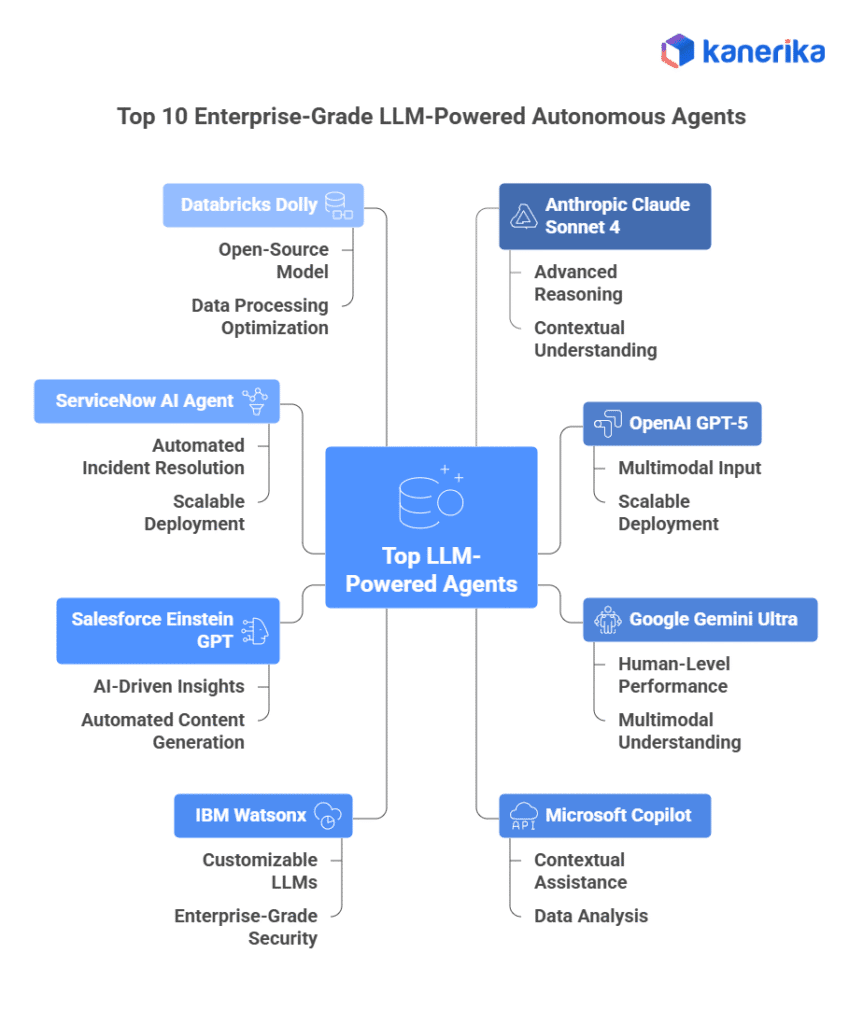

Top 10 LLM-Powered Autonomous Agents That Can Elevate Your Business

1. Anthropic Claude Sonnet 4

Overview: Claude Sonnet 4 is a state-of-the-art LLM designed for safe and reliable enterprise applications. It excels in complex reasoning tasks and is integrated into various enterprise tools.

Features:

- Advanced reasoning capabilities

- High contextual understanding

- Integration with enterprise applications

- Safety-focused design

2. OpenAI GPT-5

Overview: GPT-5 is OpenAI’s latest model, offering enhanced performance for enterprise applications. It supports a wide range of tasks, from content generation to complex problem-solving.

Features:

- Multimodal input processing

- Advanced natural language understanding

- Integration with enterprise systems

- Scalable deployment options

3. Google Gemini Ultra

Overview: Gemini Ultra is the largest model in Google's Gemini 1.0 family; Google reported that it was the first model to outperform human experts on the MMLU benchmark. It's tailored for enterprise needs, offering robust AI capabilities.

Features:

- Human-expert-level MMLU score (as reported by Google)

- Multimodal understanding

- Seamless integration with Google Cloud

- Advanced reasoning and decision-making

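4. Microsoft 365 Copilot

Overview: Microsoft 365 Copilot integrates large language models into Microsoft 365 applications, enhancing productivity with AI-driven assistance. It runs primarily on OpenAI's GPT models, and Microsoft has added Anthropic's Claude models, including Claude Sonnet 4, as an option in some Copilot features.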

Features:

- Contextual assistance in Microsoft 365

- Advanced document drafting and summarization

- Data analysis and visualization

- Seamless integration with enterprise workflows

5. IBM Watsonx

Overview: IBM Watsonx is an enterprise-focused AI platform that provides tools for building and deploying LLM-powered agents.

Features:

- Customizable LLMs

- Integration with IBM Cloud

- Advanced analytics capabilities

- Enterprise-grade security

6. Salesforce Einstein GPT

Overview: Einstein GPT integrates generative AI into Salesforce’s CRM platform, enhancing customer interactions with AI-driven insights.

Features:

- AI-driven customer insights

- Integration with Salesforce CRM

- Automated content generation

- Advanced analytics and reporting

7. ServiceNow AI Agent

Overview: ServiceNow’s AI Agent automates IT service management tasks, improving efficiency and response times.

Features:

- Automated incident resolution

- Integration with ITSM workflows

- Advanced natural language understanding

- Scalable deployment options

8. Databricks Dolly

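Overview: Dolly is an open-source, instruction-following LLM released by Databricks. Dolly 2.0 was fine-tuned on a human-generated instruction dataset (databricks-dolly-15k) and is licensed for research and commercial use.

Features:

- Open-source model

- Instruction-tuned on the databricks-dolly-15k dataset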

- Integration with the Databricks platform

- Scalable and customizable

9. Haptik AI Agent

Overview: Haptik offers AI agents specifically designed for customer support, enabling enterprises to automate interactions across multiple channels.

Features:

- Omnichannel support

- Advanced natural language processing

- Integration with CRM systems

- Real-time analytics and reporting

10. Teneo AI Agent

Overview: Teneo provides a platform for developing enterprise-grade AI agents, with a focus on complex, multi-step reasoning tasks.

Features:

- Multi-step reasoning capabilities

- Integration with enterprise systems

- Customizable workflows

Kanerika’s LLM-Powered Autonomous Agents: Built for Real Business Impact

At Kanerika, we’re building the next generation of enterprise automation with LLM-powered autonomous agents. Unlike basic bots, our agents understand context, plan multi-step tasks, and act across systems without constant human input. By combining large language models with memory, reasoning, and tool integration, we enable businesses to automate complex workflows that were previously manual and time-consuming.

Our specialized agents address real business needs:

- DokGPT – Retrieves information from documents using natural language queries.

- Karl – Analyzes data and generates charts or trends for easy interpretation.

- Alan – Summarizes lengthy legal contracts into concise, actionable insights.

- Susan – Automatically redacts sensitive data to ensure GDPR/HIPAA compliance.

- Mike – Checks documents for mathematical errors and formatting accuracy.

- Jennifer – Manages phone calls, scheduling, and routine interactions.

Each agent is modular, API-ready, and built for seamless integration into enterprise workflows. What sets Kanerika apart is our blend of LLM intelligence with enterprise-grade engineering—secure, scalable, and continuously improving with real-time monitoring and human-in-the-loop options. With Kanerika, you don’t just get automation—you get intelligent systems that adapt, learn, and deliver measurable results.

Frequently Asked Questions

1. What is an LLM agent for code generation?

An LLM code generation agent acts like a highly skilled programmer assistant. It uses a large language model (LLM) to understand your coding needs, translate them into code, and even debug the resulting program. Essentially, it automates parts of the software development process, boosting efficiency and potentially reducing errors. Think of it as a powerful tool to help you write better code faster.

2. Is ChatGPT an LLM?

Essentially, yes. Strictly speaking, ChatGPT is a chat application powered by OpenAI's GPT family of large language models (LLMs). Those models are trained on massive amounts of text data to understand and generate human-like text, which allows ChatGPT to perform tasks like conversation, translation, and summarization. Think of it as a highly advanced autocomplete on steroids.

3. What is an LLM in a chatbot?

LLMs, or Large Language Models, are the brains behind sophisticated chatbots. They’re vast neural networks trained on massive amounts of text data, allowing them to understand and generate human-like text. This enables chatbots to engage in more natural, nuanced conversations and perform complex tasks like summarizing information or translating languages. Essentially, they’re the key ingredient that makes a chatbot smart.

4. What is the use of LLM?

LLMs, or Large Language Models, are incredibly versatile. They excel at understanding and generating human-like text, powering everything from chatbots and creative writing tools to advanced search and code generation. Essentially, they’re sophisticated pattern-recognizers that can learn from massive datasets to perform a wide range of language-based tasks. Their use is rapidly expanding across many industries.

5. Are LLM-powered autonomous agents secure for enterprise use?

Yes, many enterprise-grade LLM agents are designed with security in mind. They can comply with data privacy standards, encrypt sensitive information, and integrate with secure enterprise systems. However, security also depends on proper configuration, access controls, and monitoring by the organization to prevent data leaks or misuse.
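Access controls for agents often take the form of a deny-by-default allowlist of tools per role, with every decision logged for monitoring. The sketch below assumes hypothetical roles and tool names; in practice the permissions would come from your identity and access management system.

```python
# Hypothetical role-to-tool permissions; in practice these would come from your IAM system.
PERMISSIONS = {
    "support_agent": {"search_kb", "read_order"},
    "finance_agent": {"read_invoice", "create_report"},
}

audit_log: list[tuple[str, str, bool]] = []

def authorize(role: str, tool_name: str) -> bool:
    """Deny by default: allow a tool call only if the role explicitly grants it."""
    allowed = tool_name in PERMISSIONS.get(role, set())
    audit_log.append((role, tool_name, allowed))  # record every decision for audits
    return allowed

print(authorize("support_agent", "read_order"))    # True
print(authorize("support_agent", "read_invoice"))  # False
print(authorize("unknown_role", "search_kb"))      # False
```

Because unknown roles get an empty permission set, a misconfigured agent fails closed rather than gaining access.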