Imagine waking up to your dream life—a Benz outside your door, delicious food on your table, and a beautiful family. But here’s the twist: you haven’t woken up for the past year. Your perfect world is a simulation, a creation of an AI-dominated reality reminiscent of the scenario in the iconic film The Matrix. This chilling vision highlights the generative AI risks that must be confronted as we navigate the complex landscape of artificial intelligence.

The rapid advancement of generative AI has transformed the business landscape, empowering enterprises to create innovative products, streamline operations, and enhance customer experiences. However, as this technology becomes more ubiquitous, it also introduces a range of risks and challenges that organizations must navigate with care.

A recent study by PwC found that 70% of business leaders believe generative AI will have a significant impact on their industry in the next three years. While the potential benefits are vast, the risks are equally concerning. For example, a report by the Brookings Institution estimates that up to 47% of jobs in the United States could be automated by AI, leading to widespread job displacement and the need for reskilling. Additionally, a study by the MIT Technology Review revealed that 60% of AI models are vulnerable to data poisoning attacks, where malicious actors intentionally corrupt the training data to manipulate the model’s output, posing a serious threat to data security and integrity.

Generative AI in Practice

Generative AI is rapidly reshaping enterprise operations, with a recent Accenture study revealing that 42% of companies are preparing for significant investments in technologies like ChatGPT this year. This trend is echoed by McKinsey & Co., which highlights that a substantial portion of generative AI’s value is concentrated in areas such as customer operations, marketing and sales, software engineering, and research and development.

To illustrate the transformative impact of generative AI, consider several practical examples. In customer service, banks are utilizing ChatGPT to analyze online customer reviews, enabling them to identify trends in customer satisfaction and pinpoint areas for improvement, such as website functionality and service quality. Similarly, in call centers, ChatGPT analyzes transcribed conversations to provide summaries and recommendations that enhance communication strategies and boost customer satisfaction.

In recruitment, AI tools like ChatGPT are revolutionizing the hiring process by analyzing candidate CVs for job compatibility, expediting recruitment, and ensuring a better match between job roles and applicants. Additionally, in the creative sector, tools like Midjourney are being employed to generate illustrations for advertising campaigns, showcasing AI’s expanding role in design and marketing.

These examples highlight the transformative potential of generative AI across various business functions. However, as we explore these advancements, it is essential to consider the challenges and risks associated with generative AI integration in enterprises. Let’s delve into some of the most significant risks that organizations may encounter.

Transform Your Business with AI-Powered Solutions!

Partner with Kanerika for Expert AI implementation Services

Top 7 Generative AI Risks and Challenges Faced by Enterprises

Risk 1 – IP and Data Leaks

A critical challenge for enterprises using generative AI is the risk of intellectual property (IP) and data leaks.

The convenience of web- or app-based AI tools can lead to shadow IT, where sensitive data is processed outside secure channels, potentially exposing confidential information. This risk was highlighted in a Cisco survey revealing that 60% of consumers are concerned about their private information being used by AI.

For instance, code-generating services like GitHub Copilot might inadvertently process sensitive company information, including IP or API keys.

To mitigate these risks, limiting access to IP is crucial. Forbes suggests using VPNs for secure data transmission and employing tools like Digital Rights Management (DRM) to control access. Additionally, OpenAI offers options for users to opt out of data sharing with ChatGPT, further protecting sensitive information.
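One way to reduce the chance of API keys reaching an external AI tool is to scrub prompts before they leave the company network. The following is a minimal sketch, not a complete secret scanner: the function name `redact_secrets` and the two regular expressions are illustrative assumptions, and a real deployment would more likely rely on a maintained scanning tool with a far larger rule set.

```python
import re

# Illustrative patterns only (assumptions, not an exhaustive rule set).
SECRET_PATTERNS = {
    # Shape of an AWS access key ID: "AKIA" followed by 16 uppercase letters/digits.
    "aws_access_key_id": (re.compile(r"\bAKIA[0-9A-Z]{16}\b"), "[REDACTED]"),
    # Assignments such as api_key = "..." or password: ...; the name is kept, the value is dropped.
    "assigned_secret": (
        re.compile(
            r"(?i)\b(api[_-]?key|secret|token|password)(\s*[:=]\s*)['\"]?[^\s'\"]{8,}['\"]?"
        ),
        r"\g<1>\g<2>[REDACTED]",
    ),
}


def redact_secrets(text: str) -> tuple[str, list[str]]:
    """Return the scrubbed text and the names of the patterns that matched."""
    findings = []
    for name, (pattern, replacement) in SECRET_PATTERNS.items():
        text, count = pattern.subn(replacement, text)
        if count:
            findings.append(name)
    return text, findings


if __name__ == "__main__":
    prompt = 'Fix this: API_KEY = "sk_live_abcdef123456" and id AKIAABCDEFGHIJKLMNOP'
    clean, found = redact_secrets(prompt)
    print(clean)
    print(found)
```

A filter like this would sit in a proxy or IDE plugin in front of the AI service, so that the check runs regardless of which tool an employee chooses.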

Risk 2 – Biased Responses

One of the significant challenges in the use of generative AI is the risk of producing biased responses. This risk arises primarily from the data used to train these systems. If the training data is biased, the AI’s outputs will likely reflect these biases, leading to discriminatory or unfair outcomes.

Historical biases and societal inequalities can be reflected in the data used to train AI systems. This can be especially concerning in industries like healthcare or banking where individuals may be discriminated against.

The risk of bias is not only confined to the data itself but also extends to the way AI systems learn and evolve. Feedback loops can reinforce existing biases in society, leading to worsening inequality.

Identifying biases in AI systems can be challenging due to their complex and often opaque nature. This is further complicated by data protection standards that may restrict access to decision sets or demographic data needed for bias testing.

Ensuring fairness in AI-driven decisions necessitates robust bias detection and testing standards, coupled with high-quality data collection and curation.
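As a rough illustration of what a bias test can look like, the sketch below compares how often each demographic group receives a favorable outcome and reports the ratio between the lowest and highest rates. The 0.8 threshold follows the "four-fifths" heuristic used in US employment guidance; it is an assumption chosen for this example, not a standard taken from this article, and the function names and sample data are hypothetical.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, outcome) pairs, outcome True when favorable."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += int(bool(outcome))
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so rates are equal
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Hypothetical model decisions: 6 of 10 approvals for group A, 3 of 10 for group B.
    decisions = [("A", True)] * 6 + [("A", False)] * 4 + [("B", True)] * 3 + [("B", False)] * 7
    rates = selection_rates(decisions)
    ratio = disparate_impact_ratio(rates)
    print(rates, ratio)
    if ratio < 0.8:  # illustrative threshold (assumption)
        print("Potential disparate impact: review the model and its training data.")
```

A single ratio is only a starting signal. It says nothing about why the rates differ, so a flagged result should trigger a closer review rather than an automatic conclusion.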

Risk 3 – Bypassing Regulations and Compliance

Compliance is a major concern for enterprises using generative AI, particularly when handling sensitive data sent to third-party providers like OpenAI.

If this data includes Personally Identifiable Information (PII), it risks non-compliance with regulations such as GDPR or CPRA. To mitigate this, enterprises should implement strong data governance policies, including anonymization techniques and robust encryption methods.

Additionally, staying updated with evolving data protection laws is crucial to ensure ongoing compliance.
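The anonymization step mentioned above can be sketched as a filter that swaps obvious identifiers for placeholder tokens before text is sent to a third-party provider, then restores them in the response. This is a simplified assumption-laden example: the helper names `pseudonymize` and `restore` are hypothetical, the regular expressions catch only email addresses and phone-like numbers, and replacing values with reversible tokens is pseudonymization rather than full anonymization.

```python
import re

# Simplified patterns (assumptions): real PII detection needs much broader coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def pseudonymize(text):
    """Replace emails and phone numbers with tokens; return text and token mapping."""
    mapping = {}

    def make_replacer(kind):
        def replacer(match):
            value = match.group(0)
            # Reuse the same token when a value appears more than once.
            for token, original in mapping.items():
                if original == value:
                    return token
            token = f"<{kind}_{len(mapping) + 1}>"
            mapping[token] = value
            return token
        return replacer

    text = EMAIL.sub(make_replacer("EMAIL"), text)
    text = PHONE.sub(make_replacer("PHONE"), text)
    return text, mapping


def restore(text, mapping):
    """Put the original values back into text returned by the provider."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text


if __name__ == "__main__":
    message = "Contact jane.doe@example.com or +1 415-555-0100. Again: jane.doe@example.com"
    safe, mapping = pseudonymize(message)
    print(safe)
    print(restore(safe, mapping) == message)
```

The mapping stays inside the enterprise, so the provider only ever sees the tokens. Names, addresses, and free-text identifiers would still pass through unchanged, which is why a filter like this complements rather than replaces a data governance policy.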

Risk 4 – Ethical AI Challenges

The implementation of AI technologies, particularly generative AI, introduces a range of ethical challenges that must be addressed for their responsible and equitable use. These challenges stem from the fact that AI outputs are only as reliable and neutral as the input data, which can lead to biased or unfair outcomes, especially if the data reflects societal biases or inaccuracies.

Additionally, the involvement of multiple agents in AI systems, including human operators and the AI itself, complicates the assignment of responsibility and liability for AI behaviors. When an output is incorrect, is the AI responsible, or are its human operators?

AI systems can also inadvertently perpetuate societal biases and discrimination, affecting outcomes across different demographic groups.

This is particularly concerning in areas like healthcare, where biased AI decisions could lead to inadequate treatment prescriptions and exacerbate existing inequalities.

Risk 5 – Vulnerability to Security Hacks

Generative AI’s dependency on large datasets for learning and output generation brings significant privacy and security risks. A recent incident with OpenAI’s ChatGPT, where some users could see the titles of other users’ chat histories, underscores this vulnerability.

This breach led major corporations like Apple and Amazon to limit their internal use, highlighting the critical need for stringent data protection.

The risk extends beyond data breaches. Malicious actors can misuse Generative AI to create deepfakes or spread misinformation within an industry. Moreover, many AI models lack robust native cybersecurity infrastructure, making them susceptible to cyberattacks.

Risk 6 – Accidental Usage of Copyrighted Data

Enterprises using generative AI face the risk of inadvertently using copyrighted data, potentially leading to legal issues. This risk is amplified when AI models are trained on data without proper attribution or compensation to creators.

To mitigate this, enterprises should prioritize first-party data and ensure third-party data is sourced from credible, authorized providers. Doing so requires efficient data management protocols within the enterprise.

Risk 7 – Dependency on 3rd Party Platforms

Enterprises using generative AI face challenges with dependency on third-party platforms. This dependency becomes critical if a chosen AI model is suddenly outlawed or superseded by a superior alternative, forcing enterprises to retrain new AI models.

To mitigate these risks, implementing non-disclosure agreements (NDAs) is crucial when collaborating with third-party vendors such as OpenAI, the provider of ChatGPT. These NDAs protect confidential business information and provide legal recourse in case of breaches.

Generative AI Risk Management

As the previous section shows, generative AI carries numerous risks and challenges. Fortunately, most of them can be alleviated by executing a proper generative AI risk management plan.

The hallmark of a good risk management process is to first identify the factors that lead to risk and then put a system in place to tackle them. Here is what an effective generative AI risk management process should look like for enterprises:

Step 1 – Enforce an AI Use Policy in Your Organization

For effective generative AI risk management, enterprises must enforce an AI use policy that is well-understood and adhered to by all employees. A Boston Consulting Group survey found that while over 85% of employees recognize the need for training on AI’s impact on their jobs, less than 15% have received such training. This highlights the necessity of not just having a policy but also ensuring comprehensive training.

Training should be based on the AI policy, tailored to specific roles and scenarios, to maintain security and compliance. Review the training data available to the generative AI model for biases and inaccuracies to ensure that the AI responses are free from biases and discriminatory beliefs.

It’s crucial to educate employees on identifying AI bias, misinformation, and hallucinations, enabling them to use AI tools more effectively and make informed decisions.

Step 2 – Responsibly Using First-Party Data and Sourcing Third-Party Data for Ethical AI Use

Effective generative AI use in enterprises hinges on responsibly using first-party data and carefully sourcing third-party data.

Prioritizing owned data ensures control and legality, while sourcing third-party data requires credible sources with proper permissions. This approach helps ensure that the generative AI model is not trained on poor-quality data or on data that infringes copyrights.

Enterprises must also scrutinize AI vendors’ data sourcing practices to avoid legal liabilities from unauthorized or improperly sourced data.

Step 3 – Invest in Cybersecurity Tools That Address AI Security Risks

A report by Sapio Research and Deep Instinct indicates that 75% of security professionals have noted an increase in cybersecurity attacks, with 85% of them attributing the rise to the misuse of generative AI. This situation underscores the urgent need for robust cybersecurity measures.

Generative AI models often lack sufficient native cybersecurity infrastructure, making them vulnerable. Enterprises should treat these models as part of their network’s attack surface, necessitating advanced cybersecurity tools for protection.

Key tools include identity and access management, data encryption, cloud security posture management (CSPM), penetration testing, extended detection and response (XDR), threat intelligence, and data loss prevention (DLP).

These tools are essential for defending enterprise networks against the sophisticated threats posed by generative AI.

Case Studies of Successful Generative AI Implementations

In the realm of generative AI, Kanerika has showcased remarkable success through its innovative implementations.

One notable example involves a leading conglomerate grappling with the challenges of manually analyzing unstructured and qualitative data, which was prone to bias and inefficiency.

Kanerika addressed these issues by deploying a generative AI-based solution that utilized natural language processing (NLP), machine learning (ML), and sentiment analysis models. This solution automated data collection and text analysis from various unstructured sources, such as market reports, and integrated them with structured data sources.

The result was a user-friendly reporting interface that led to a 30% decrease in decision-making time, a 37% increase in identifying customer needs, and a 55% reduction in manual effort and analysis time.

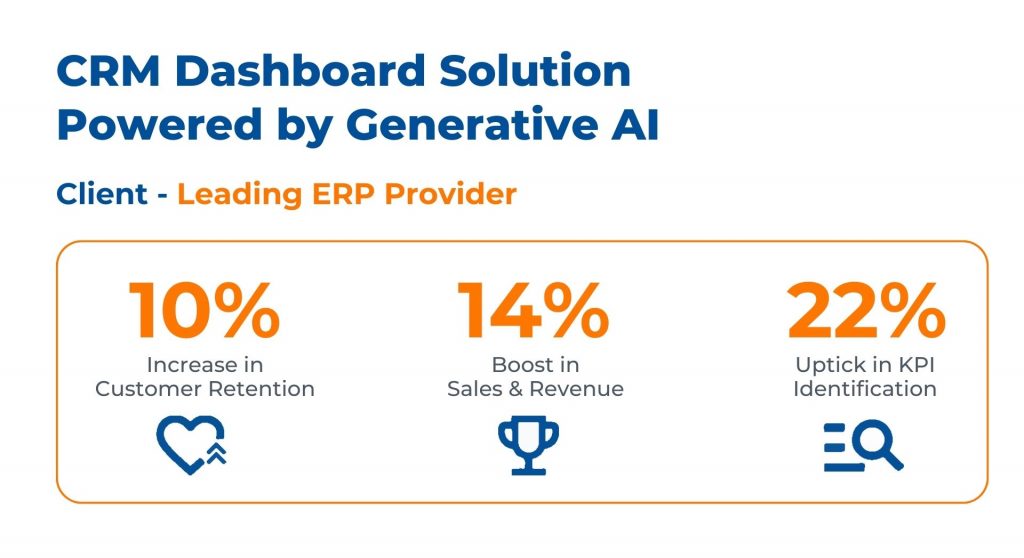

For another client, a leading ERP provider facing ineffective sales data management and a lackluster CRM interface, Kanerika delivered a dashboard solution powered by generative AI.

Kanerika’s intervention involved leveraging generative AI to create a visually appealing and functional dashboard, which provided a holistic view of sales data and improved KPI identification.

This enhancement not only made the CRM interface more intuitive but also resulted in a 10% increase in customer retention, a 14% boost in sales and revenue, and a 22% uptick in KPI identification accuracy.

Generative AI Implementation Challenges Faced by Enterprises

Implementing generative AI (GenAI) in enterprise settings presents unique challenges. These challenges are not just technical but also involve organizational and ethical considerations.

Understanding and addressing these challenges is crucial for the successful implementation and integration of GenAI into enterprise systems.

Let’s explore the top challenges faced by enterprises.

Generative AI Challenge 1: Integration and Change Management

Integrating generative AI into existing business processes can be a complex and daunting task for many enterprises. This challenge involves more than just technical implementation. It also requires adapting existing workflows and job roles to accommodate the new technology.

Furthermore, employees often resist this integration. Change management becomes a critical aspect, as it involves educating and reassuring staff about the new technology.

Employees might be apprehensive about AI potentially replacing their jobs or changing their work routines. Effective communication, training, and a gradual approach to integration can help alleviate these concerns, ensuring a smooth transition to GenAI-enhanced processes.

Generative AI Challenge 2: Explainability and Transparency

A significant challenge with generative AI, particularly those models based on complex algorithms like deep learning, is their lack of explainability. Additionally, these models often struggle with transparency.

These models are often seen as “black boxes” because it’s difficult to understand or interpret how they make decisions. This opacity can be a significant barrier to building trust and acceptance of AI systems, both within an organization and with external stakeholders, including customers.

In industries where decisions need to be justified or explained, such as finance or healthcare, the inability to explain AI decisions can be a major impediment. Ensuring transparency in AI processes and outcomes is essential to gaining trust.

Researchers in the field of AI are making efforts to develop more explainable models. However, creating these models remains a significant challenge for enterprises looking to implement generative AI in their operations.

Generative AI Challenge 3: Bias and Fairness

Another critical challenge in the implementation of generative AI is the risk of bias and unfair outcomes. AI systems learn from the data they are fed. If the data is biased, the AI can also generate biased outputs.

This can lead to discriminatory results, which could unfairly affect certain segments of the audience or customers.

For example, if developers train a recruitment AI on historical hiring data that reflects past biases, it might still propagate these biases. Such outcomes can not only harm certain groups but also damage the brand’s reputation and lead to legal complications.

Enterprises must ensure that the data used to train AI models is diverse and representative of all relevant aspects. Continuous monitoring and testing for biases in AI decisions are crucial to ensure fairness and ethical use of AI technology.

This involves not only technical solutions but also a commitment at the organizational level to uphold ethical standards in AI use.

Kanerika: Advancing Enterprise Growth with Generative AI

As this article has shown, enterprises stand to gain numerous benefits by implementing generative AI solutions in their business processes. But navigating the challenges of such an implementation is crucial.

Choosing appropriate security protocols and crafting advanced algorithms require the expertise of a seasoned AI consulting partner. Kanerika stands at the forefront of providing comprehensive solutions that are ethically aligned and adhere to evolving regulatory standards.

Embrace the future of generative AI in the enterprise sector by partnering with Kanerika.

FAQs

What are the risks of generative AI?

Generative AI’s biggest risks revolve around the potential for inaccurate or biased outputs, mirroring and amplifying existing societal prejudices. It also poses copyright and intellectual property challenges, as well as the possibility of misuse for malicious purposes like creating deepfakes or spreading misinformation. Finally, there are concerns about job displacement due to automation and the ethical implications of increasingly autonomous systems.

What is the problem with generative AI?

Generative AI’s biggest hurdle is its potential for misuse: creating convincing but false information (deepfakes, etc.) and perpetuating biases present in its training data. Furthermore, its outputs lack true understanding and originality, merely recombining existing patterns. Ultimately, responsible development and deployment are crucial to mitigate these risks.

What are the three limitations of generative AI?

Generative AI struggles with factual accuracy, sometimes hallucinating information or creating plausible-sounding but false content. It lacks true understanding; its outputs are based on patterns in data, not genuine comprehension. Finally, its creative potential is constrained by its training data, limiting originality and potentially perpetuating biases present in that data.

What are the disadvantages of Gen AI?

Generative AI, while powerful, has limitations. It can produce inaccurate or biased outputs due to flaws in its training data, leading to unreliable information. Furthermore, its creative process lacks genuine understanding and critical thinking, potentially generating nonsensical or inappropriate content. Finally, there are ethical concerns surrounding copyright and the potential for misuse.

What are the risks of generative AI bias?

Generative AI’s training data often reflects existing societal biases, leading the AI to perpetuate and even amplify those prejudices in its outputs. This can result in unfair or discriminatory outcomes, particularly affecting marginalized groups. Essentially, biased input leads to biased output, potentially harming individuals and reinforcing harmful stereotypes. Mitigating this requires careful data curation and ongoing model monitoring.

What are the risks of generative AI KPMG?

Generative AI, while powerful, presents several risks for KPMG and similar firms. These include the potential for inaccurate or biased outputs leading to flawed advice or audits, the risk of intellectual property infringement through unintentional replication, and the challenge of maintaining client confidentiality and data security in the AI’s training and application processes. Ultimately, ensuring responsible AI implementation requires careful oversight and robust risk mitigation strategies.

Which are three risks when citing generative AI?

Using AI-generated content without proper attribution is plagiarism, a serious academic and professional offense. There’s also the risk of inaccurate information, as AI models can hallucinate facts. Finally, relying solely on AI output can stifle critical thinking and original research skills.

What is the main challenge of GenAI?

The biggest hurdle for Generative AI isn’t just creating realistic outputs, but ensuring those outputs are consistently accurate, unbiased, and ethically sound. This means tackling issues like hallucination (fabricating information), perpetuating harmful stereotypes, and lack of transparency in its decision-making processes. Essentially, it’s about building trust and responsible usage.

What is the main danger of AI?

The biggest danger of AI isn’t malevolent robots, but unforeseen consequences from its rapid advancement. We risk creating systems whose complexity surpasses our understanding, leading to unpredictable and potentially harmful outcomes. Essentially, the danger lies in our inability to fully control or anticipate the impact of increasingly powerful AI.

What is the generative AI risk matrix?

A generative AI risk matrix helps us understand and prioritize the potential dangers of AI systems. It visually maps various risks (like bias, misinformation, or misuse) against their likelihood and impact. This allows for focused mitigation efforts, directing resources to the most pressing threats. Essentially, it’s a tool for proactively managing the downsides of generative AI.
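A risk matrix of this kind can be reduced to a small scoring routine. The sketch below multiplies likelihood by impact on 1-to-5 scales and sorts the results; the scales, the band thresholds, and the example scores are all assumptions made for illustration, and an organization would calibrate them to its own risk appetite.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain), assumed scale
    impact: int      # 1 (negligible) to 5 (severe), assumed scale

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def level(score: int) -> str:
    """Map a score to a band; the cut-offs are illustrative assumptions."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


def prioritize(risks):
    """Return risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda risk: risk.score, reverse=True)


if __name__ == "__main__":
    # Hypothetical ratings, not findings from this article.
    register = [
        Risk("Dependency on third-party platforms", likelihood=2, impact=3),
        Risk("IP and data leaks", likelihood=4, impact=5),
        Risk("Biased responses", likelihood=3, impact=4),
    ]
    for risk in prioritize(register):
        print(f"{risk.name}: score {risk.score} ({level(risk.score)})")
```

Sorting by score gives a simple order for mitigation work, with the highest band reviewed first.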

Which of the following is a potential risk of generative AI?

Generative AI’s biggest risk is creating convincing but false information, leading to misinformation and manipulation. It also raises ethical concerns about authorship and intellectual property, blurring lines of originality. Finally, there’s the potential for bias amplification, as AI models learn from and reflect existing prejudices in data. These risks necessitate careful development and responsible usage.

How will generative AI affect jobs?

Generative AI won’t replace all jobs, but it will reshape many. Expect automation of repetitive tasks across various sectors, leading to job displacement in some areas. However, new roles focused on AI development, maintenance, and ethical oversight will emerge, creating opportunities for adaptation and reskilling. Ultimately, the impact will depend on how humans and AI collaborate.