In August 2025, Lenovo’s GPT-4-powered chatbot was compromised, exposing customer data and highlighting the rapid deployment of AI tools without adequate safeguards. Google also reported a mass data theft incident linked to the Salesloft AI agent, prompting emergency shutdowns. These incidents demonstrate that AI systems are not only vulnerable but are also being actively targeted.

According to Cisco’s 2025 AI Security Report, 84% of enterprises using AI have experienced data leaks, and 75% cite governance as their top concern. AI is now embedded in fraud detection, diagnostics, and customer service, but most organizations still rely on outdated security models. With threats such as prompt injection, model theft, and shadow AI growing rapidly, a structured AI security framework is no longer optional.

In this blog, we’ll break down what an AI security framework actually is, why your enterprise needs one, and which specific frameworks can protect your AI investments. We’ll cover five proven approaches and help you choose the right one for your organization.

What Is an AI Security Framework?

An AI security framework is a structured set of rules, processes, and tools designed to protect AI systems from misuse, adversarial attacks, and compliance risks.

Unlike traditional cybersecurity frameworks, AI security frameworks account for the unique nature of machine learning models, which can drift over time, learn from biased data, and be manipulated in ways that standard software cannot.

Think of them as blueprints for AI protection. They help enterprises:

- Identify AI-specific risks

- Define controls to prevent misuse

- Ensure compliance with laws like the EU AI Act and HIPAA

- Build trust with customers and regulators


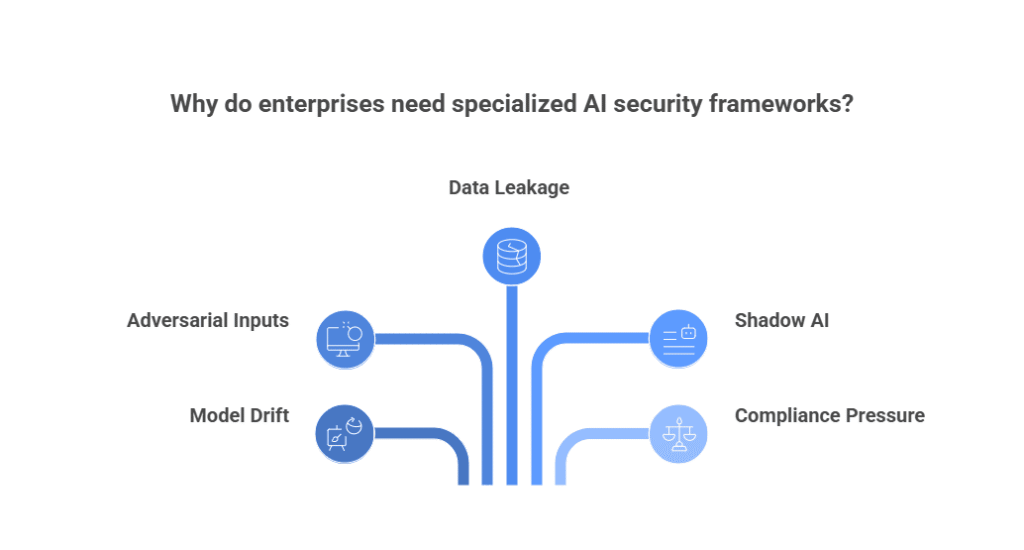

Why Enterprises Need Specialized AI Security Frameworks

AI systems don’t follow fixed rules. They learn, adapt, and sometimes fail in unpredictable ways. That brings new risks:

- Model drift: model accuracy degrades over time as real-world data moves away from the training data

- Adversarial inputs: attackers craft data that tricks the model into producing wrong outputs

- Data leakage: sensitive information is exposed through model outputs

- Shadow AI: teams use unapproved AI tools without oversight

- Compliance pressure: laws now demand transparency and accountability

Traditional cybersecurity tools don’t cover these. That’s why enterprises need frameworks built for AI.
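To make one of these risks concrete, model drift can be monitored by comparing the distribution of a model score in production against a training-time baseline. The sketch below uses the Population Stability Index (PSI), a common drift heuristic. The function name, the bin count, the sample data, and the 0.2 alert threshold are illustrative assumptions and are not prescribed by any framework discussed here.

```python
import math
from typing import Sequence

def population_stability_index(baseline: Sequence[float],
                               current: Sequence[float],
                               bins: int = 10) -> float:
    """PSI between two samples, using equal-width bins over the baseline range."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def bucket_shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range values into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps log() defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    expected = bucket_shares(baseline)
    actual = bucket_shares(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff, tune per use case

baseline_scores = [i / 100 for i in range(100)]        # spread evenly over [0, 1)
shifted_scores = [0.5 + i / 200 for i in range(100)]   # concentrated in the upper half

print(population_stability_index(baseline_scores, baseline_scores))                   # 0.0
print(population_stability_index(baseline_scores, shifted_scores) > DRIFT_THRESHOLD)  # True
```

A check like this only signals that inputs or scores have moved; deciding whether the model is actually making worse decisions still needs labeled outcomes or human review.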

Types of AI Security Frameworks

Different frameworks have been created to help companies protect their AI systems. Each one tackles different problems. Some cover the entire AI lifecycle, while others focus on specific threats, and some address advanced autonomous AI agents.

Here’s a breakdown of the most important AI security frameworks in 2025:

1. NIST AI Risk Management Framework (AI RMF)

The NIST AI RMF is one of the most widely referenced AI governance and security frameworks.

Key features:

- Lifecycle-based: Govern, Map, Measure, Manage

- Provides structured risk assessment for AI projects

- Emphasizes trustworthy AI principles (fairness, transparency, accountability)

Best for:

- Highly regulated industries like healthcare, finance, and government

- Enterprises that need a compliance-focused approach

- Teams seeking a systematic way to measure and reduce AI risk
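One hedged way to picture the four functions is a simple risk register in which every entry records what is being done under Govern, Map, Measure, and Manage. The sketch below is illustrative only: the `RiskEntry` class, its fields, and the sample risk are assumptions made for this example and are not defined by NIST.

```python
from dataclasses import dataclass, field

RMF_FUNCTIONS = ("Govern", "Map", "Measure", "Manage")  # the four functions named above

@dataclass
class RiskEntry:
    """One AI risk and the activity recorded under each function (hypothetical schema)."""
    name: str
    activities: dict[str, str] = field(default_factory=dict)

    def missing_functions(self) -> list[str]:
        # A function counts as covered only if a non-empty activity is recorded.
        return [f for f in RMF_FUNCTIONS if not self.activities.get(f, "").strip()]

chatbot_leak = RiskEntry(
    name="Customer-service chatbot leaks personal data",
    activities={
        "Govern": "Data-handling policy owner assigned",
        "Map": "Chatbot data flows documented",
        "Measure": "Monthly output sampling for personal data",
    },
)

print(chatbot_leak.missing_functions())  # ['Manage']
```

Here the register shows that the risk has been governed, mapped, and measured, but no response plan has been recorded yet under Manage.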

2. Microsoft AI Security Framework

Microsoft has introduced its AI Security Framework to ensure the responsible and secure use of AI.

Key features:

- Covers Security, Privacy, Fairness, Transparency, Accountability

- Works seamlessly with Azure AI and cloud tools, but principles apply to any platform

- Includes best practices for safeguarding data and preventing misuse

Best for:

- Enterprises already using Microsoft Azure

- Organizations prioritizing responsible AI and governance alongside security

- Teams looking for a practical, implementation-ready guide

3. MITRE ATLAS Framework

The MITRE ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems) framework focuses squarely on AI threats and attacker tactics.

Key features:

- Catalogs real-world AI attack methods, including model stealing, data poisoning, and adversarial evasion

- Supports red teaming and threat modeling for AI systems

- Helps defenders anticipate how adversaries target AI models

Best for:

- Security operations (SOC) teams defending AI systems

- Organizations deploying machine learning models in production

- Enterprises wanting to understand and simulate attacker behavior
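As a rough illustration of how an attack catalog supports red teaming, the sketch below tracks which of the attack categories named above have been exercised by at least one red-team test. The category names come from this article; the test names and the `coverage_gaps` helper are hypothetical, and official ATLAS technique identifiers are deliberately left out.

```python
# Attack categories taken from the list above (not official ATLAS identifiers).
ATTACK_CATEGORIES = {"model stealing", "data poisoning", "adversarial evasion"}

# Hypothetical red-team exercises, each tagged with the category it simulates.
red_team_tests = [
    {"name": "query-budget extraction drill", "category": "model stealing"},
    {"name": "perturbed-input evasion test", "category": "adversarial evasion"},
]

def coverage_gaps(categories: set[str], tests: list[dict[str, str]]) -> list[str]:
    """Return the attack categories that no red-team test exercises, sorted by name."""
    covered = {t["category"] for t in tests}
    return sorted(categories - covered)

print(coverage_gaps(ATTACK_CATEGORIES, red_team_tests))  # ['data poisoning']
```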


4. Databricks AI Security Framework (DASF)

The Databricks AI Security Framework (DASF) bridges the gap between business, data, and security teams.

Key features:

- Lists 62 AI risks and 64 controls

- Platform-agnostic, and draws on NIST AI RMF and MITRE ATLAS

- Provides practical controls that map to enterprise needs

Best for:

- Data-heavy enterprises running large-scale AI pipelines

- Businesses seeking a comprehensive, control-based framework

- Teams wanting actionable steps instead of just principles
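A control-based framework lends itself to a simple gap analysis: map each risk to the controls that address it, then rank the controls that are still missing. The sketch below shows the idea under stated assumptions. The risk and control names are placeholders invented for this example, not entries from the DASF catalog, and `prioritized_missing_controls` is a hypothetical helper.

```python
from collections import Counter

# Hypothetical risk-to-control mapping; names are placeholders, not DASF entries.
risk_controls = {
    "training data poisoning": ["data access control", "data lineage tracking"],
    "model theft": ["model access control", "audit logging"],
    "prompt injection": ["input filtering", "audit logging"],
}
implemented = {"data access control"}

def prioritized_missing_controls(mapping: dict[str, list[str]],
                                 in_place: set[str]) -> list[tuple[str, int]]:
    """Rank controls not yet in place by how many listed risks each one addresses."""
    counts = Counter(
        control
        for controls in mapping.values()
        for control in controls
        if control not in in_place
    )
    # Sort by descending risk count, then alphabetically for a stable order.
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))

print(prioritized_missing_controls(risk_controls, implemented))
# [('audit logging', 2), ('data lineage tracking', 1), ('input filtering', 1), ('model access control', 1)]
```

Ranking by the number of risks covered is only one possible ordering; a real program would likely also weigh severity and implementation cost.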

5. MAESTRO Framework for Agentic AI

As enterprises adopt autonomous AI systems, like agentic AI, traditional frameworks fall short. That’s where the MAESTRO Framework comes in.

Key features:

- Built specifically for agentic and autonomous AI

- Detects evolving risks such as goal manipulation and synthetic feedback loops

- Includes guidance for AI-driven SOCs, RPA systems, and OpenAI API-based agents

Best for:

- Enterprises experimenting with agentic AI workflows

- Security teams handling autonomous AI deployments

- Early adopters preparing for next-generation AI threats

How to Choose the Right Framework

- Use NIST if you need structured risk management

- Use Microsoft if you care about ethical and responsible AI

- Use MITRE ATLAS if you need deep threat modeling

- Use Databricks DASF for cross-team collaboration

- Use MAESTRO if you’re working with autonomous agents

Many enterprises mix and match based on their AI maturity, risk profile, and compliance needs.

| Framework | Focus Area | Best For | Unique Strength |
| --- | --- | --- | --- |
| NIST AI RMF | Governance & Lifecycle | Regulated industries | Structured, compliance-ready |
| Microsoft AI Security | Responsible AI principles | Azure ecosystems | Balance of ethics + security |
| MITRE ATLAS | Threat modeling | Security teams | Adversarial attack catalog |
| Databricks DASF | Risk + control mapping | Data-driven enterprises | 62 risks, 64 actionable controls |
| MAESTRO | Agentic AI risks | Autonomous systems | Future-proof against evolving threats |
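The mix-and-match guidance in this section can be sketched as a small lookup from needs to frameworks. This is only an illustration of the idea: the need labels used as keys and the `recommend_frameworks` function are assumptions made for this example.

```python
# Need-to-framework guidance restated from this section; the need labels are hypothetical keys.
FRAMEWORK_BY_NEED = {
    "structured risk management": "NIST AI RMF",
    "responsible AI": "Microsoft AI Security Framework",
    "threat modeling": "MITRE ATLAS",
    "cross-team collaboration": "Databricks DASF",
    "autonomous agents": "MAESTRO",
}

def recommend_frameworks(needs: list[str]) -> list[str]:
    """Return the frameworks matching the given needs, in order, without duplicates."""
    unknown = [n for n in needs if n not in FRAMEWORK_BY_NEED]
    if unknown:
        raise ValueError(f"Unrecognized needs: {unknown}")
    # dict.fromkeys preserves first-seen order while dropping repeats.
    return list(dict.fromkeys(FRAMEWORK_BY_NEED[n] for n in needs))

print(recommend_frameworks(["structured risk management", "threat modeling"]))
# ['NIST AI RMF', 'MITRE ATLAS']
```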

Real-World Use Cases

Healthcare: Workday and IBM Using NIST for Patient Data Protection

Workday, a global HR and finance software provider, uses the NIST AI Risk Management Framework to align its internal AI governance processes. Their Privacy and Data Engineering team mapped existing controls to NIST’s Govern, Map, Measure, and Manage functions. They created templates and SOPs to operationalize responsible AI across teams.

IBM also adopted NIST AI RMF. Their Chief Privacy Office led a three-phase audit comparing IBM’s internal Ethics by Design methodology to NIST’s framework. IBM now recommends that government agencies adopt the NIST AI RMF for AI governance.

These efforts help ensure AI systems used in healthcare, HR, patient planning, and data analytics meet ethical and legal standards.

Finance: MITRE ATLAS for Threat Modeling in Fraud Detection

Security researchers used adversarial inputs to bypass the machine learning malware scanner built by Cylance, a cybersecurity firm acquired by BlackBerry. This case is documented in MITRE ATLAS and shows how attackers used public information and reverse engineering to evade detection.

MITRE ATLAS helped security teams understand tactics like model evasion, data poisoning, and prompt injection. It also guided mitigation strategies like retraining models with adversarial samples and tightening API access.
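One way to picture "tightening API access" is a per-client query budget, since model-extraction and evasion attacks often rely on sending many queries. The sketch below is a minimal fixed-window limiter. The class name, limits, and client identifier are illustrative assumptions and are not taken from MITRE ATLAS.

```python
import time
from collections import defaultdict
from typing import Callable

class QueryBudget:
    """Fixed-window limiter: at most `limit` model queries per client per window."""

    def __init__(self, limit: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so behavior can be tested without sleeping
        self._window_start: dict[str, float] = {}
        self._used: dict[str, int] = defaultdict(int)

    def allow(self, client_id: str) -> bool:
        now = self.clock()
        start = self._window_start.get(client_id)
        if start is None or now - start >= self.window_seconds:
            # Start a fresh window for this client.
            self._window_start[client_id] = now
            self._used[client_id] = 0
        if self._used[client_id] >= self.limit:
            return False
        self._used[client_id] += 1
        return True

budget = QueryBudget(limit=3, window_seconds=60)
print([budget.allow("client-a") for _ in range(5)])  # [True, True, True, False, False]
```

A fixed window is the simplest option; a production deployment would more likely enforce limits at an API gateway and pair them with authentication and query logging.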

Financial institutions now use MITRE ATLAS to model threats against fraud detection systems, ensuring their AI tools can withstand real-world attacks.

Retail: UiPath Maestro for Agentic AI in Customer Service

Abercrombie & Fitch, Johnson Controls, and Wärtsilä use UiPath Maestro, an agentic orchestration product that shares a name with the MAESTRO framework but is a separate offering, to orchestrate agentic AI in customer service and operations.

- Abercrombie & Fitch uses agentic automation to streamline complex workflows like accounts payable.

- Johnson Controls automates end-to-end processes using AI agents and robots, improving speed and accuracy.

- Wärtsilä, a global marine and energy company, integrates agentic orchestration to manage business processes across systems.

- EY and CGI also partner with UiPath to deploy agentic AI for clients, combining automation, AI agents, and human oversight to deliver scalable customer service solutions.

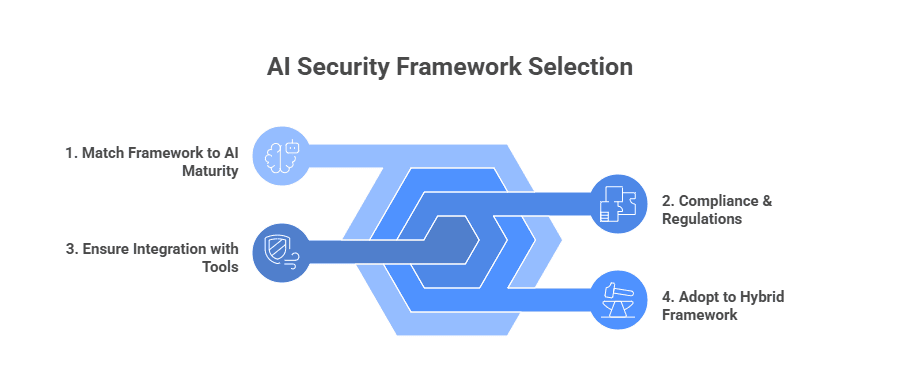

How to Choose the Right AI Security Framework

Selecting the right AI security framework is not a one-size-fits-all decision. The best choice depends on your organization’s AI maturity, regulatory environment, and existing security infrastructure. Here are key considerations:

1. Match the Framework to Your AI Maturity

Early-stage AI adoption: Organizations experimenting with AI pilots should start with flexible frameworks like NIST AI RMF, which provide high-level guidance on managing risks without overwhelming technical requirements.

Advanced AI deployment: Companies already running large-scale AI systems may benefit from more specialized frameworks like MITRE ATLAS for adversarial threats or MAESTRO for multi-agent security.

2. Consider Compliance and Regulatory Needs

If your industry is heavily regulated (healthcare, finance, government), frameworks that align closely with global standards such as ISO/IEC 23894 or NIST are often the safest choices.

These frameworks can be mapped to compliance requirements such as GDPR, HIPAA, or PCI DSS, which helps reduce legal risk.

3. Look at Integration with Existing Tools and Processes

Evaluate whether the framework can integrate with your current DevSecOps pipelines, monitoring systems, and governance tools.

For example, MITRE ATLAS aligns well with existing threat modeling tools, making it easier to add AI-specific security without reinventing the wheel.

4. Use Hybrid Approaches if Needed

Many enterprises adopt a hybrid strategy, combining elements from different frameworks.

For instance, a financial institution may use NIST for governance while applying MITRE ATLAS for red-teaming AI models.

This layered approach ensures broader coverage across governance, compliance, and active threat defense.

Case Study: Real-Time Compliance and Risk Detection

Client: A global expert network platform connecting decision-makers with over one million subject-matter experts.

Challenge: The client’s compliance team manually screened experts for negative news across public sources. This caused delays, backlogs, and inconsistent vetting.

Solution: Kanerika built an AI-powered compliance agent that automated expert profiling, scraped news and social media, and applied rule-based logic to flag risks. The agent generated structured reports with citations and mapped findings to compliance rules.

Impact:

- 60% faster screening

- 70% fewer backlog cases

- 40% reduction in event delays

- Standardized and auditable risk assessments
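To illustrate the general idea of rule-based flagging with citations, here is a minimal sketch. It is not Kanerika’s implementation: the `Article` type, the rule identifiers, the keywords, and the example URLs are all hypothetical, and a real system would need far more careful matching than substring checks.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Article:
    url: str
    text: str

# Hypothetical compliance rules: rule id -> keywords that trigger it.
RULES = {
    "fraud-allegation": ("fraud", "embezzlement"),
    "sanctions": ("sanctioned", "sanctions list"),
}

def flag_risks(articles: list[Article]) -> list[dict[str, str]]:
    """Return one finding per (rule, article) match, each citing the source URL."""
    findings = []
    for article in articles:
        lowered = article.text.lower()
        for rule_id, keywords in RULES.items():
            # Record the first keyword that matches, if any.
            hit = next((k for k in keywords if k in lowered), None)
            if hit is not None:
                findings.append({"rule": rule_id, "keyword": hit, "citation": article.url})
    return findings

sample = [
    Article("https://example.com/news/1", "Executive charged with fraud in 2021."),
    Article("https://example.com/news/2", "Expert wins industry award."),
]
print(flag_risks(sample))
# [{'rule': 'fraud-allegation', 'keyword': 'fraud', 'citation': 'https://example.com/news/1'}]
```

Keeping the rule identifier and source URL on every finding is what makes the resulting report auditable.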

Securing AI Systems with Kanerika’s Proven AI Security Framework

At Kanerika, we design AI security frameworks that help enterprises protect their models, data, and workflows from evolving threats. Our layered approach combines data governance, risk detection, and compliance automation to secure AI systems across industries.

We use tools like Microsoft Purview to classify sensitive data, detect insider risks, and enforce policies automatically. Our framework supports AI TRiSM principles, making sure every AI model we deploy is transparent, accountable, and aligned with ethical standards. This helps our clients stay compliant with regulations like GDPR, HIPAA, and the EU AI Act.

Our partnerships with Microsoft, Databricks, and AWS allow us to deliver scalable, enterprise-grade AI security solutions. With certifications like ISO 27701, SOC 2, and CMMI Level 3, we back our work with proven security and quality standards. Whether you’re working with LLMs, RPA bots, or autonomous agents, our AI security framework is built to adapt and protect.

Partner with us to build a trusted AI security framework that protects your data, ensures compliance, and scales with your enterprise.


FAQs

1. What is an AI security framework?

An AI security framework is a structured set of policies, processes, and tools designed to protect AI systems, including models, data, and infrastructure, from specific risks like adversarial attacks, drift, and non-compliance. Unlike generic cybersecurity standards, it addresses vulnerabilities unique to AI.

2. Why do organizations need AI-specific security frameworks?

AI systems can be manipulated through methods like adversarial inputs, prompt injection, or data poisoning. These are threats that traditional cybersecurity tools don’t fully cover. Frameworks built specifically for AI help organizations manage these risks, remain compliant, and uphold user trust.

3. Which industries benefit most from AI security frameworks?

Sectors like finance, healthcare, and retail, where AI handles sensitive information, customer decisions, or critical automation, particularly need AI security frameworks. These industries face heightened regulatory and ethical scrutiny.

4. What are some core components covered by AI security frameworks?

Common elements include data integrity, threat modeling, adversarial testing, secure model deployment, ongoing monitoring, fairness and bias mitigation, explainability, and compliance controls.

5. What standards and frameworks are available for securing AI?

Some widely referenced frameworks include:

- NIST AI RMF

- OWASP’s AI Security & Privacy Guide

- Google’s Secure AI Framework (SAIF)

- Databricks AI Security Framework (DASF)

- ENISA’s Framework for AI Cybersecurity Practices (FAICP)

- AI TRiSM (Trust, Risk, and Security Management)

These cover lifecycle governance, adversarial risk, and ethical/security best practices.

6. How should enterprises choose the right AI security framework?

Evaluate based on your organization’s maturity stage, regulatory demands, and AI infrastructure. Regulated industries may lean toward frameworks like NIST AI RMF, while AI-driven operations using autonomous agents might benefit from layered or specialized models like AI TRiSM or DASF. Often, a hybrid approach combining elements from multiple frameworks is most effective.