Data engineering teams today face a pressing challenge: nearly 70% of their time is spent just preparing data, not analyzing it. Fragmented tools, duplicated storage, and disconnected environments continue to slow development cycles. Meanwhile, demand for scalable, real-time pipelines and unified analytics keeps rising.

To address these inefficiencies, platforms that consolidate the analytics workflow have surged in adoption—none faster than Microsoft Fabric.

As of April 2025, Microsoft Fabric is used by over 21,000 paying organizations, including more than 70% of the Fortune 500. It’s become the platform of choice for managing everything—from lakehouses and data warehouses to real-time analytics and AI—in one environment. A key part of this shift is Fabric’s integration with Apache Spark, which now allows PySpark notebooks to connect directly to the Fabric Warehouse without external workarounds.

In this blog, we’ll look at how PySpark fits into the Microsoft Fabric Warehouse environment and how it can simplify data engineering tasks—from exploration to data movement—within a unified workflow.

What Is a PySpark Notebook in Microsoft Fabric?

A PySpark Notebook in Microsoft Fabric is a browser-based development environment where users can write and execute Apache Spark code using Python. It is part of Fabric’s Data Engineering workload and is designed to support scalable, distributed data processing without managing infrastructure.

Key Capabilities

When connected to a Fabric Warehouse, PySpark notebooks enable users to:

- Read data directly from warehouse tables using Spark DataFrames

- Run transformations and filters on large datasets using PySpark syntax

- Write results back into warehouse tables using a native connector

- Operate within a unified environment, without exporting data or switching tools

This integration eliminates the need to stage data in a Lakehouse or to run post-processing SQL queries outside Spark. With full read-write support, PySpark becomes a natural choice for building end-to-end data preparation and ETL workflows within Fabric.

By combining structured data management and big data compute in one workflow, PySpark notebooks in Microsoft Fabric provide a seamless bridge between engineering, analytics, and reporting.

Setting Up the Environment for PySpark in Microsoft Fabric

Before using PySpark to interact with Microsoft Fabric Warehouse, it’s important to configure your environment correctly. The key requirement here is switching to the appropriate Spark runtime version that supports warehouse write-back capabilities.

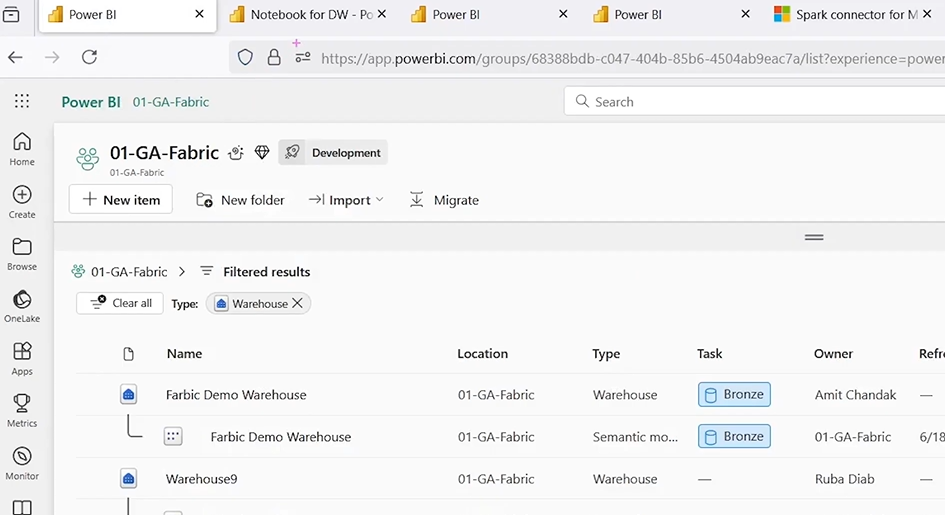

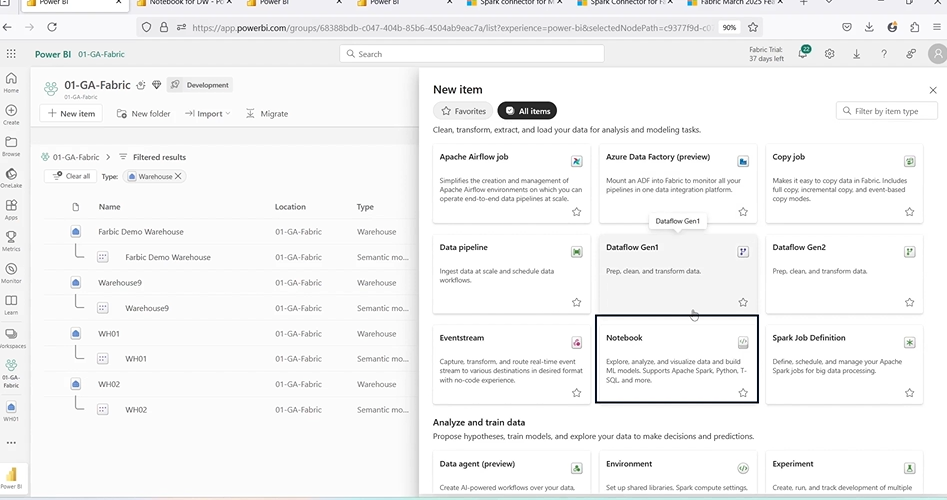

1. Access Your Fabric Workspace

Start by navigating to app.powerbi.com and opening the workspace where you want to run your PySpark notebook.

In this example, the workspace used is:

Workspace Name: 01 – GA fabric

You can find and open it from your list of available workspaces.

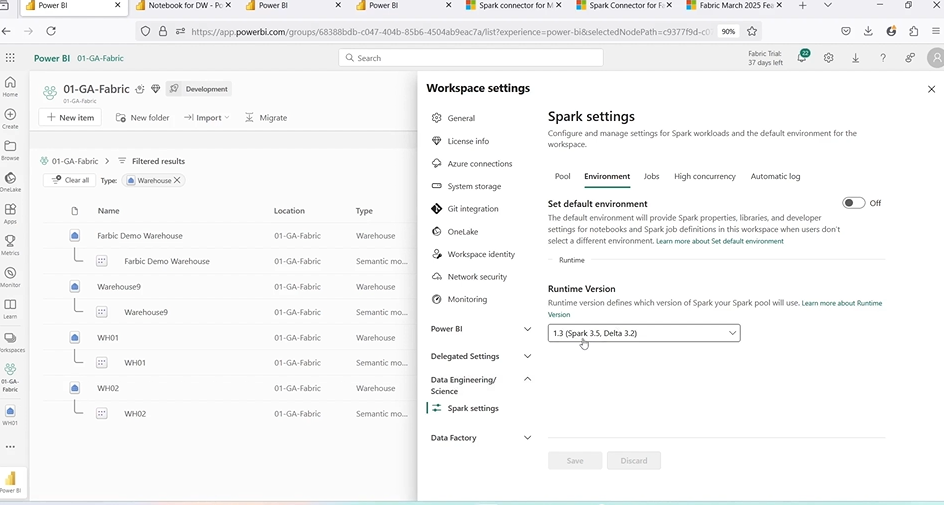

2. Configure the Runtime Environment

To enable full support for the Spark-Warehouse connector (especially for write operations), you need to switch the workspace to Fabric Runtime 1.3.

- In your workspace, go to the Settings panel (top-right corner).

- Scroll down to Data Engineering or Data Science Spark settings.

- Locate the Environment configuration section.

- Set the Runtime version to 1.3 (Spark 3.5, Delta Lake 3.2).

This runtime version is essential, as the older Runtime 1.2 does not support the df.write.synapsesql() command used to write data back to the Fabric Warehouse.

3. Create a New PySpark Notebook

Once your workspace is set to Runtime 1.3:

- Click New > Notebook under the Prepare Data section.

- Name your notebook and assign it to the same workspace.

- Connect it to a Lakehouse—this is required for initializing the notebook session, even if your end goal is to work with a Fabric Warehouse.

With the notebook created and the environment correctly configured, you’re ready to start importing the necessary libraries and connecting to your Fabric Warehouse using PySpark.

Importing Required Libraries for Spark–Fabric Integration

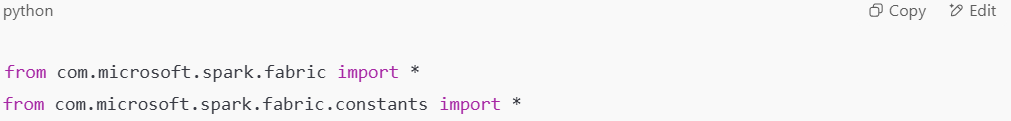

With your PySpark notebook created and the environment set to Runtime 1.3, the next step is to import the required libraries that enable the Spark connector to communicate with Microsoft Fabric Warehouse.

These imports are necessary to access key functions and constants used for both reading and writing to warehouse tables.

Required Imports

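At the top of your notebook, add two import statements: import com.microsoft.spark.fabric for the connector module, and from com.microsoft.spark.fabric.Constants import Constants for its constants.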
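Written out, the two imports are the first two statements inside the try block below. The try/except guard is an illustrative addition, not something Fabric requires: it simply lets the same cell load outside a Fabric notebook, where the connector module does not exist, and reports whether the connector was found.

```python
try:
    # Connector module; importing it enables the synapsesql() read/write methods in PySpark.
    import com.microsoft.spark.fabric
    # Option keys such as Constants.WorkspaceId, used for cross-workspace reads.
    from com.microsoft.spark.fabric.Constants import Constants
    CONNECTOR_AVAILABLE = True
except ImportError:
    # Outside Fabric (for example, local Python) the module is absent.
    Constants = None
    CONNECTOR_AVAILABLE = False

print("Fabric Spark connector available:", CONNECTOR_AVAILABLE)
```

In a Fabric Runtime 1.3 notebook this should print True; anywhere else it prints False instead of failing.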

- The first import enables core Spark-Fabric integration classes.

- The second import brings in predefined constants used for operations like setting workspace ID or configuring options during read/write.

These imports are lightweight and don’t require manual installation. They are bundled by default in Fabric Runtime 1.3, so you can use them immediately without installing external libraries.

Spark Session Initialization

When you run any cell in the notebook for the first time, Fabric automatically starts a Spark session in the background. This session:

- Allocates compute resources

- Initializes the runtime environment

- Keeps the session active for subsequent cells (until it is stopped, restarted, or times out after a period of inactivity)

Once the Spark session is active and the libraries are imported successfully, you’re ready to start interacting with your Fabric Warehouse.

Preparing the Target Warehouse for Testing

Before testing read and write operations using PySpark, it’s helpful to clean up your Fabric Warehouse environment. This ensures you’re working with a known state and avoids naming conflicts when re-creating tables.

1. Open the Target Warehouse

- Go to your Fabric workspace and open the warehouse instance—e.g., WH01.

- This warehouse contains all your structured tables used for SQL-based operations.

2. Identify and Remove the Test Table

If you’re re-running a test or writing a table with an existing name, delete the old table to avoid conflicts.

- In the warehouse explorer, locate the test table (e.g., pyspark_customer).

- Use the built-in query editor to run a simple drop command, such as DROP TABLE IF EXISTS dbo.pyspark_customer;

Execute the command and refresh the schema to confirm that the table has been removed.

3. Confirm Clean State

- After refreshing, verify that the table no longer appears under the schema browser.

- This clears the way to re-create the table during your PySpark write test.

This preparation step is optional but highly recommended when testing writing capabilities—especially if the notebook code creates a table with the same name each time.

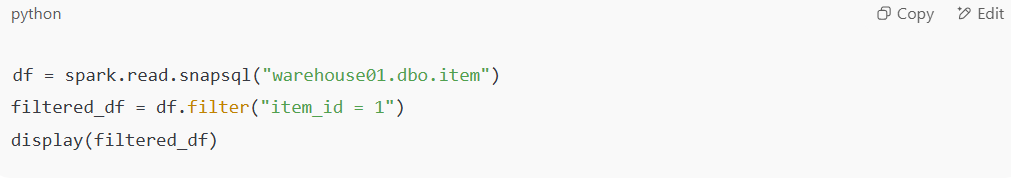

How to Read Data from Microsoft Fabric Warehouse Using PySpark

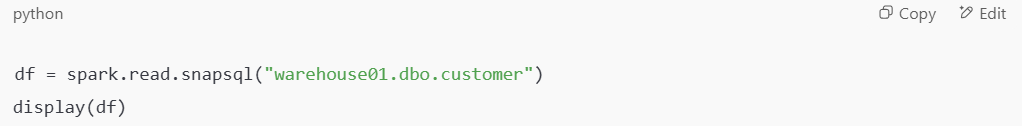

With the notebook set up and the Spark session initialized, the next step is to read data directly from tables in your Fabric Warehouse using PySpark.

This is done using the spark.read.synapsesql() method, which allows you to query a warehouse table and load the result into a Spark DataFrame.

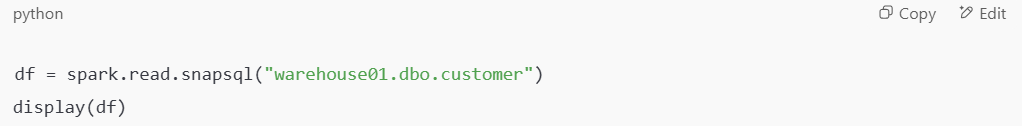

1. Reading a Table from the Current Workspace

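To read a table within the same workspace as your notebook, pass its warehouse.schema.table name to spark.read.synapsesql(), for example df = spark.read.synapsesql("warehouse01.dbo.customer"), and then call display(df). In this example: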

- warehouse01 is the name of the Fabric Warehouse.

- dbo.customer refers to the schema and table name.

- The display(df) function renders the DataFrame output inside the notebook.
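Wrapped as a small reusable function, the same-workspace read might look like the sketch below. The helper names three_part_name and read_warehouse_table are hypothetical, and the name check (non-empty parts, no embedded dots) is an assumption of this sketch rather than a documented connector rule. Only spark.read.synapsesql() comes from the connector.

```python
def three_part_name(warehouse: str, schema: str, table: str) -> str:
    """Join the parts into the warehouse.schema.table form that synapsesql() expects."""
    parts = (warehouse, schema, table)
    # Assumption for this sketch: every part is non-empty and contains no dots.
    if any(not p or "." in p for p in parts):
        raise ValueError(f"invalid name parts: {parts!r}")
    return ".".join(parts)


def read_warehouse_table(spark, warehouse: str, schema: str, table: str):
    """Load a Fabric Warehouse table from the notebook's own workspace (hypothetical helper)."""
    # Requires Runtime 1.3+ and `import com.microsoft.spark.fabric` earlier in the notebook.
    return spark.read.synapsesql(three_part_name(warehouse, schema, table))


# In a Fabric notebook (spark and display() are provided by the notebook):
# df = read_warehouse_table(spark, "warehouse01", "dbo", "customer")
# display(df)
```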

This method allows you to pull full table contents directly into Spark for analysis or transformation.

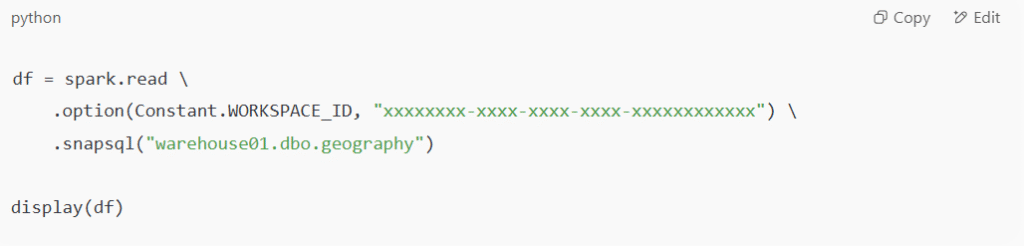

2. Reading a Table from a Different Workspace

If the table you want to query resides in another workspace, you need to provide the workspace ID explicitly.

Step-by-step:

- Copy the workspace ID from the URL of the source workspace.

- It appears after /groups/ in the Fabric URL.

- Use the .option(Constants.WorkspaceId, "<workspace_id>") method before calling synapsesql().

This lets your notebook read tables from other workspaces, as long as you have access to the source workspace.

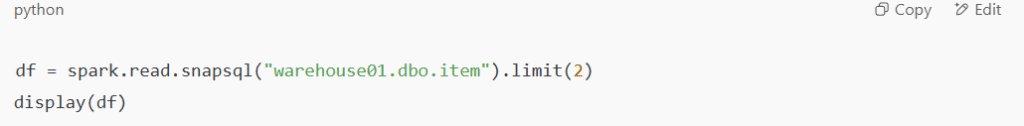

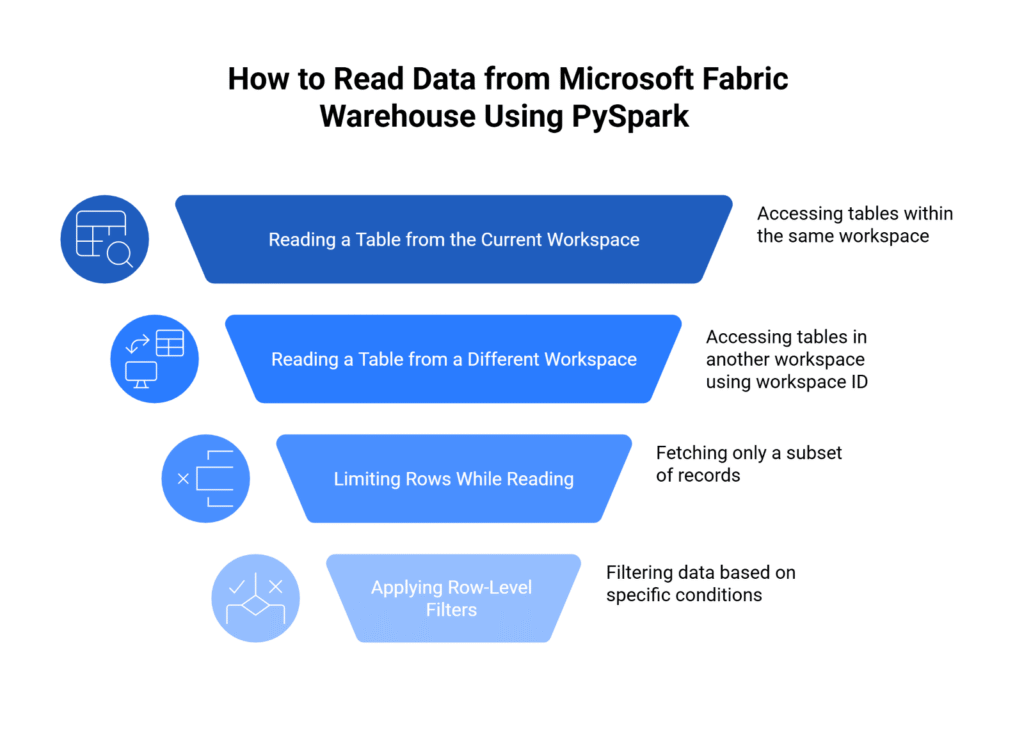

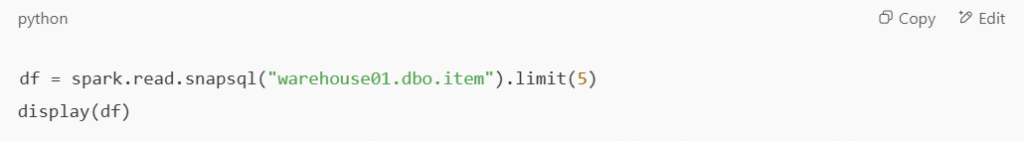

3. Limiting Rows While Reading

To fetch only a subset of records (useful for sampling or testing), apply .limit(2) to the DataFrame returned by spark.read.synapsesql().

This returns at most two rows from the item table. Without an explicit ordering, Spark does not guarantee which two rows you get.
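A sketch of the limited read, assuming the item table lives at warehouse01.dbo.item (the same warehouse as the earlier example); read_sample is a hypothetical helper name.

```python
def read_sample(spark, table: str, n: int = 2):
    """Return at most n rows of a warehouse table for a quick look (hypothetical helper)."""
    if n < 1:
        raise ValueError("n must be a positive row count")
    # limit() caps the row count; without orderBy(), which rows come back is not guaranteed.
    return spark.read.synapsesql(table).limit(n)


# In a Fabric notebook:
# display(read_sample(spark, "warehouse01.dbo.item", 2))
```

To make the sample repeatable, add an orderBy() on a key column before limit().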

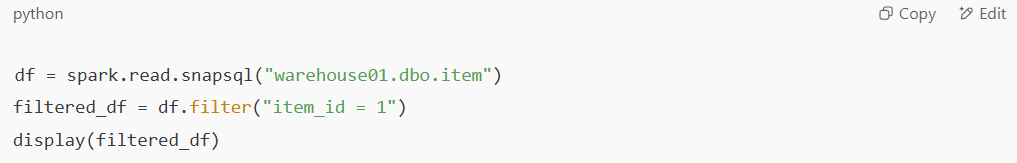

4. Applying Row-Level Filters

You can also filter the results with standard PySpark syntax by calling .filter() on the DataFrame returned by synapsesql().

This reads the full table and returns only the rows where item_id equals 1.
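A sketch of the filtered read. PySpark's DataFrame.filter() accepts either a Column expression or a SQL expression string; this version uses the string form and casts the value with int() so only a number can end up in the expression. The helper name read_item and the table path warehouse01.dbo.item are assumptions.

```python
def read_item(spark, table: str, item_id: int):
    """Return only the rows of `table` whose item_id equals `item_id` (hypothetical helper)."""
    # filter() also accepts a Column, e.g. col("item_id") == 1 from pyspark.sql.functions;
    # the string form is used here, with int() ensuring only a number enters the expression.
    return spark.read.synapsesql(table).filter(f"item_id = {int(item_id)}")


# In a Fabric notebook:
# display(read_item(spark, "warehouse01.dbo.item", 1))
```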

These capabilities let you query Fabric Warehouse tables with ease—whether you’re doing exploratory data analysis, testing queries, or preparing data for transformation within your Spark notebook.

Reading Data Across Microsoft Fabric Workspaces

In Microsoft Fabric, PySpark notebooks can access data from warehouses located in other workspaces, not just the one the notebook is assigned to. This is especially useful when working in multi-team or cross-functional environments where data is distributed across projects.

To read from a warehouse table in another workspace, you need to include that workspace’s ID in your Spark read operation.

1. Locate the Workspace ID

To find the Workspace ID:

- Navigate to the target workspace in your browser.

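- Look at the URL in the address bar. It follows the pattern https://app.powerbi.com/groups/<workspace-id>/..., with the workspace ID as the path segment right after /groups/.

Copy the <workspace-id> value (a GUID: a long alphanumeric string separated by hyphens).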

2. Use the Workspace ID in Your PySpark Command

Now pass this ID to spark.read.option(Constants.WorkspaceId, "your-workspace-id") and call .synapsesql("warehouse01.dbo.geography") on the result.

- Replace “your-workspace-id” with the actual value from the URL.

- Replace warehouse01.dbo.geography with your desired table path.
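The two steps above can be sketched as a pair of helpers: one extracts the ID from a copied URL, the other performs the cross-workspace read. Both function names are hypothetical, and the GUID check encodes the assumption that workspace IDs are standard 8-4-4-4-12 hexadecimal GUIDs. The connector import is deferred into the read function so the URL helper also runs outside Fabric.

```python
import re
from urllib.parse import urlparse

# Assumption: workspace IDs are standard 8-4-4-4-12 hexadecimal GUIDs.
_GUID = re.compile(r"[0-9a-fA-F]{8}-(?:[0-9a-fA-F]{4}-){3}[0-9a-fA-F]{12}")


def workspace_id_from_url(url: str) -> str:
    """Return the path segment that follows /groups/ in a Fabric or Power BI URL."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    try:
        candidate = segments[segments.index("groups") + 1]
    except (ValueError, IndexError):
        raise ValueError(f"no /groups/<workspace-id> segment in {url!r}") from None
    if not _GUID.fullmatch(candidate):
        raise ValueError(f"{candidate!r} does not look like a workspace ID")
    return candidate


def read_from_workspace(spark, table: str, workspace_id: str):
    """Read warehouse.schema.table from another workspace (Fabric notebooks only)."""
    # Deferred import so this file still loads where the connector is absent.
    # Assumes `import com.microsoft.spark.fabric` already ran in the notebook.
    from com.microsoft.spark.fabric.Constants import Constants
    return spark.read.option(Constants.WorkspaceId, workspace_id).synapsesql(table)


# In a Fabric notebook, with the real URL copied from the address bar:
# ws_id = workspace_id_from_url("https://app.powerbi.com/groups/<workspace-id>/list")
# display(read_from_workspace(spark, "warehouse01.dbo.geography", ws_id))
```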

3. Validate the Output

Once the command is run:

- The Spark engine queries the specified warehouse table across workspaces.

- The result is loaded into a DataFrame and rendered with display().

This functionality makes it easy to build centralized notebooks that consume data across organizational units, without duplicating storage or creating unnecessary copies.

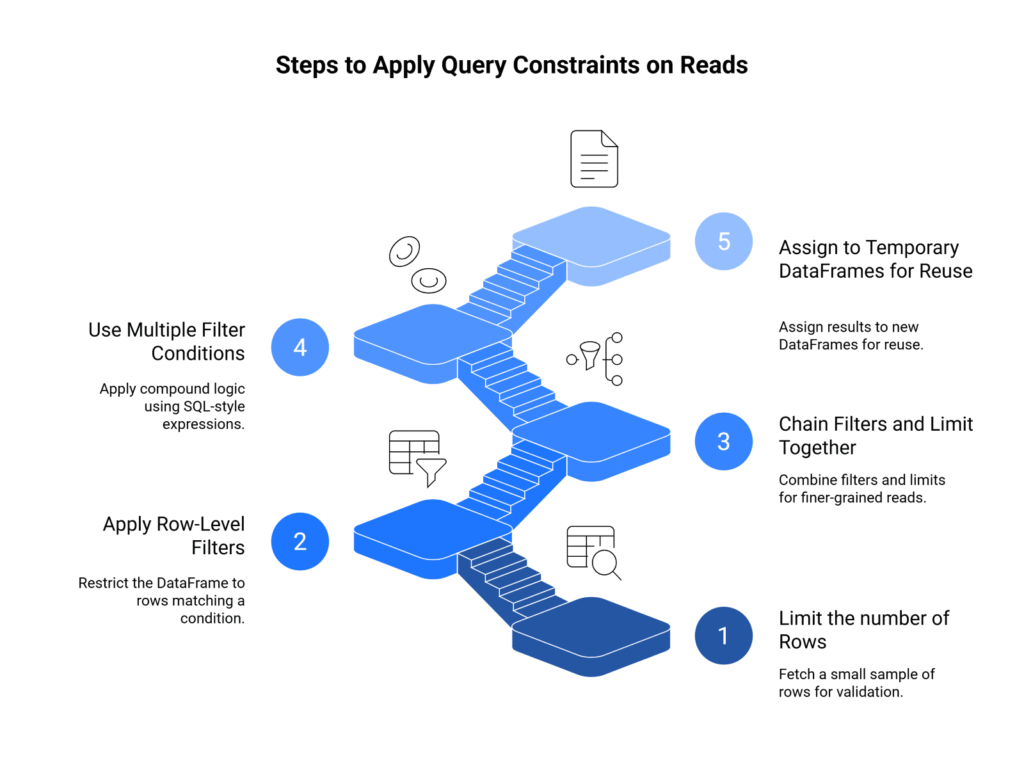

Steps to Apply Query Constraints on Reads

Working with large datasets in Microsoft Fabric Warehouse often requires reading only what’s needed—whether for performance, testing, or data quality checks. PySpark notebooks provide multiple ways to control how much data is pulled from the warehouse and how it’s shaped before processing.

Here are five ways to constrain your reads using PySpark:

Step 1: Limit the Number of Rows

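Use .limit(n) to fetch a small sample of rows, which is ideal for validation, schema inspection, or notebook prototyping.

For example, calling .limit(5) on the item table’s DataFrame retrieves at most 5 rows; without an explicit ordering, Spark does not guarantee which ones.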

Step 2: Apply Row-Level Filters

Use .filter() to restrict the DataFrame to rows matching a condition, for example .filter("item_id = 1").

This is useful when isolating data for a specific item, customer, or condition.

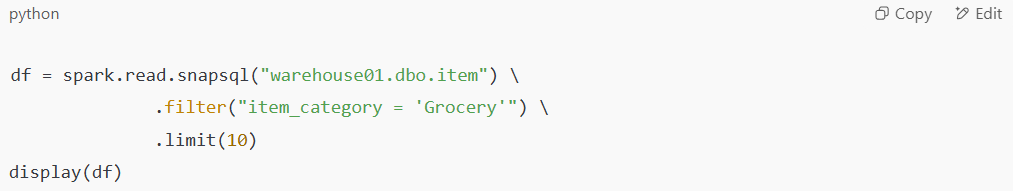

Step 3: Chain Filters and Limits Together

Filters and limits can be combined in sequence for even finer-grained reads.

Filtering on the “Grocery” category and then applying .limit(10) returns up to 10 records where the item belongs to that category.
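A sketch of the chained read, assuming the category is stored in a column named category (a hypothetical name, since the schema is not shown) and that grocery_sample is a helper of your own.

```python
def grocery_sample(spark, table: str, n: int = 10):
    """Return up to n rows whose category is 'Grocery' (hypothetical helper and column)."""
    return (
        spark.read.synapsesql(table)
        .filter("category = 'Grocery'")  # SQL-style condition on a hypothetical column
        .limit(n)                        # applied after the filter, so every row matches it
    )


# In a Fabric notebook:
# display(grocery_sample(spark, "warehouse01.dbo.item"))
```

Order matters here: filtering first guarantees that every returned row is a Grocery item, whereas limiting first would take 10 arbitrary rows and could leave fewer than 10 after the filter.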

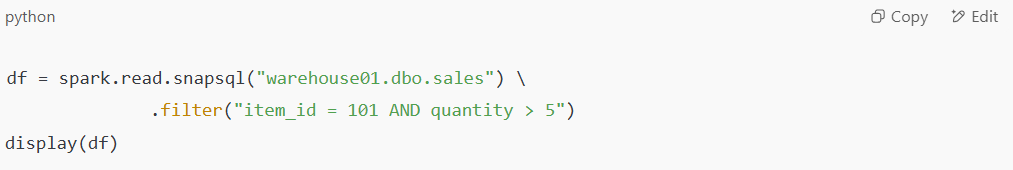

Step 4: Use Multiple Filter Conditions

You can apply compound logic using standard SQL-style expressions inside the .filter() method, joining conditions with AND or OR.

With AND, only rows that meet both conditions are returned, which is very useful for slicing operational or transactional data.
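One way to keep compound conditions readable is a tiny helper that joins SQL-style predicates with AND. This is a sketch: where_all is a hypothetical helper, and unit_price is an illustrative column name that may not exist in your table.

```python
def where_all(*conditions: str) -> str:
    """Combine SQL-style predicates so that a row must satisfy every one of them."""
    if not conditions:
        raise ValueError("at least one condition is required")
    # Parenthesize each predicate so an OR inside one cannot bleed into the others.
    return " AND ".join(f"({c})" for c in conditions)


# In a Fabric notebook (unit_price is an illustrative column name):
# items = spark.read.synapsesql("warehouse01.dbo.item")
# display(items.filter(where_all("category = 'Grocery'", "unit_price > 5")))
```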

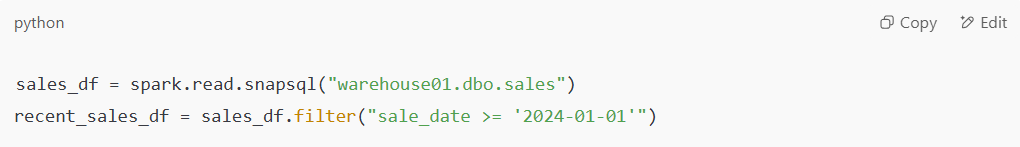

Step 5: Assign to Temporary DataFrames for Reuse

When constraints are complex or used multiple times, assign the result to a new DataFrame and reuse it.

This approach keeps your code modular and cleaner, especially when doing layered transformations.
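A sketch of the reuse pattern: read and constrain once, keep the constrained DataFrame in a variable, and derive the other views from it. The function name, column names, and dictionary keys are hypothetical; cache() is optional and only pays off when the same DataFrame feeds several actions.

```python
def build_item_frames(spark, table: str) -> dict:
    """Constrain once, then derive several reusable DataFrames (hypothetical helper)."""
    items = spark.read.synapsesql(table)
    # The shared constraint lives in one variable (column names are hypothetical).
    # cache() keeps the computed rows after the first action, so the derived
    # DataFrames below can reuse them instead of re-reading the warehouse table.
    grocery = items.filter("category = 'Grocery'").cache()
    return {
        "grocery": grocery,
        "grocery_preview": grocery.limit(10),
        "grocery_cheap": grocery.filter("unit_price < 5"),
    }


# In a Fabric notebook:
# frames = build_item_frames(spark, "warehouse01.dbo.item")
# display(frames["grocery_preview"])
```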

These query constraints not only help optimize resource usage but also speed up debugging and testing—allowing Spark to process only what’s necessary inside your Fabric Warehouse connection.

Writing Data Back to Microsoft Fabric Warehouse

Once your transformations are complete in PySpark, the next step is to persist the results back to a Microsoft Fabric Warehouse table. This allows processed data to be queried via SQL or used in downstream reports and dashboards.

Fabric Runtime 1.3 enables native write support through the .write.synapsesql() method, making it possible to push Spark DataFrames directly into the structured warehouse layer.

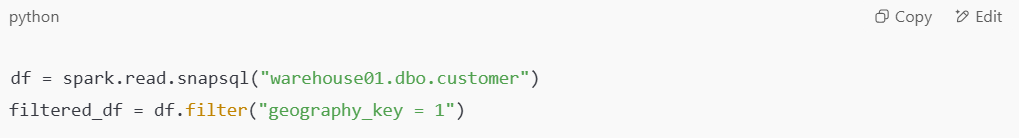

1. Prepare a Transformed or Filtered DataFrame

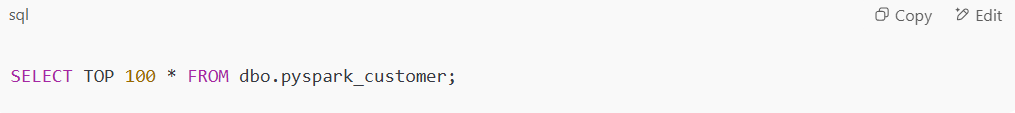

Before writing, you’ll typically start with a filtered or transformed DataFrame.

For example, filtering the customer table on geography_key = 1 prepares the subset of customer data for that geography.
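A sketch of that preparation step, assuming the customer table sits at warehouse01.dbo.customer as in the earlier read example; customers_in_geography is a hypothetical helper.

```python
def customers_in_geography(spark, geography_key: int,
                           table: str = "warehouse01.dbo.customer"):
    """Return customer rows for one geography_key, ready to write back (hypothetical helper)."""
    # int() ensures only a number is placed into the SQL-style filter expression.
    return spark.read.synapsesql(table).filter(f"geography_key = {int(geography_key)}")


# In a Fabric notebook:
# customer_df = customers_in_geography(spark, 1)
# display(customer_df)
```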

2. Write the DataFrame to the Warehouse

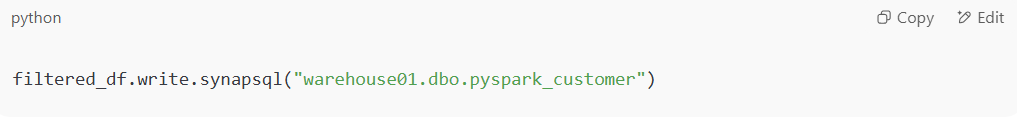

Use the synapsesql() write method to write the DataFrame back into a table within the same warehouse. The write behaves as follows:

- If the table does not exist, it will be created automatically.

- If the table already exists and no other save mode is specified, the write fails, because Spark’s default save mode (errorifexists) rejects an existing target.

- The target path must follow the format: warehouse.schema.table.

This command is only supported on Spark Runtime 1.3 or later.
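Putting these rules together, a guarded write might look like the sketch below. It assumes the df.write.mode("errorifexists").synapsesql(...) form of the writer; errorifexists is also Spark's default save mode and is written out only for clarity. The name write_new_table is hypothetical, customer_df stands for the filtered DataFrame from step 1, and WH01.dbo.pyspark_customer mirrors the warehouse and table used in the validation step.

```python
def write_new_table(df, target: str):
    """Create the warehouse table `target` (warehouse.schema.table) from df (hypothetical helper)."""
    parts = target.split(".")
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected warehouse.schema.table, got {target!r}")
    # "errorifexists" makes the write fail if the table already exists,
    # instead of silently changing data in it.
    return df.write.mode("errorifexists").synapsesql(target)


# In a Fabric notebook on Runtime 1.3+:
# write_new_table(customer_df, "WH01.dbo.pyspark_customer")
```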

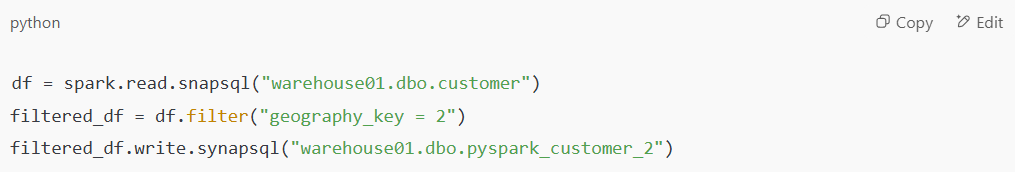

3. Validate the Write Operation in the Fabric UI

After the write completes:

- Open the Warehouse in Fabric (e.g., WH01).

- Click Refresh in the table browser pane.

- You should now see the newly created table (e.g., pyspark_customer) listed under your schema.

This confirms that Fabric has accepted the write and registered the new table.

4. Run SQL Queries to Inspect the Results

To confirm that the correct data has been written, run a simple SQL query in the warehouse’s built-in query editor, such as SELECT TOP 100 * FROM dbo.pyspark_customer;

This lets you view up to 100 rows and confirm that the schema and data are as expected.

5. Write Again Using a Different Filter

You can repeat the process with a new filter or transformation and write the result to a separate table.

This lets you persist multiple versions of the dataset with different segmentation logic.
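Writing several segments follows the same pattern in a loop. This sketch assumes a hypothetical naming scheme (pyspark_customer_geo followed by the key) so each segment lands in its own table. Because it uses errorifexists, re-running it fails until those tables are dropped, as described in the preparation section.

```python
def write_segments(base_df, warehouse: str, keys) -> list:
    """Write one warehouse table per geography_key and return the table names."""
    written = []
    for key in keys:
        key = int(key)  # keeps the filter expression strictly numeric
        target = f"{warehouse}.dbo.pyspark_customer_geo{key}"  # hypothetical naming scheme
        segment = base_df.filter(f"geography_key = {key}")
        # Fails if the target table already exists.
        segment.write.mode("errorifexists").synapsesql(target)
        written.append(target)
    return written


# In a Fabric notebook:
# customers = spark.read.synapsesql("warehouse01.dbo.customer")
# write_segments(customers, "WH01", [2, 3])
```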

6. Considerations When Writing to Fabric Warehouse

- Supported only on Runtime 1.3+

- Tables are created using schema inferred from the Spark DataFrame

- Writing to an existing table fails by default; drop it first, or explicitly set a save mode that your connector version supports for existing tables

- Data is staged internally and written efficiently via COPY INTO under the hood

By using write.synapsesql(), data engineers can complete the loop, moving from Spark-based processing back to SQL-ready tables, all within Microsoft Fabric’s managed environment.

Developer’s Use Case in Microsoft Fabric

For data engineers and developers building on Spark, PySpark notebooks in Microsoft Fabric now serve as a complete development and execution layer within the platform. Instead of exporting data to external tools or switching between environments, teams can handle everything—from reading raw tables to publishing transformed outputs—directly within the notebook.

Key use cases include:

- Building ETL pipelines that read from Lakehouse or Warehouse, apply Spark transformations, and write back curated datasets.

- Testing data logic and filters interactively before operationalizing pipelines.

- Segmenting or enriching data using Spark’s distributed compute and persisting it in Fabric Warehouse for downstream Power BI dashboards.

- Collaborating in shared notebooks across teams, with versioned code and direct access to enterprise datasets.

This functionality bridges the gap between big data processing and structured analytics—letting Spark-native workflows plug directly into the governed, SQL-ready warehouse layer in Microsoft Fabric.

Kanerika’s Data Modernization Services: Minimize Downtime, Maximize Insights

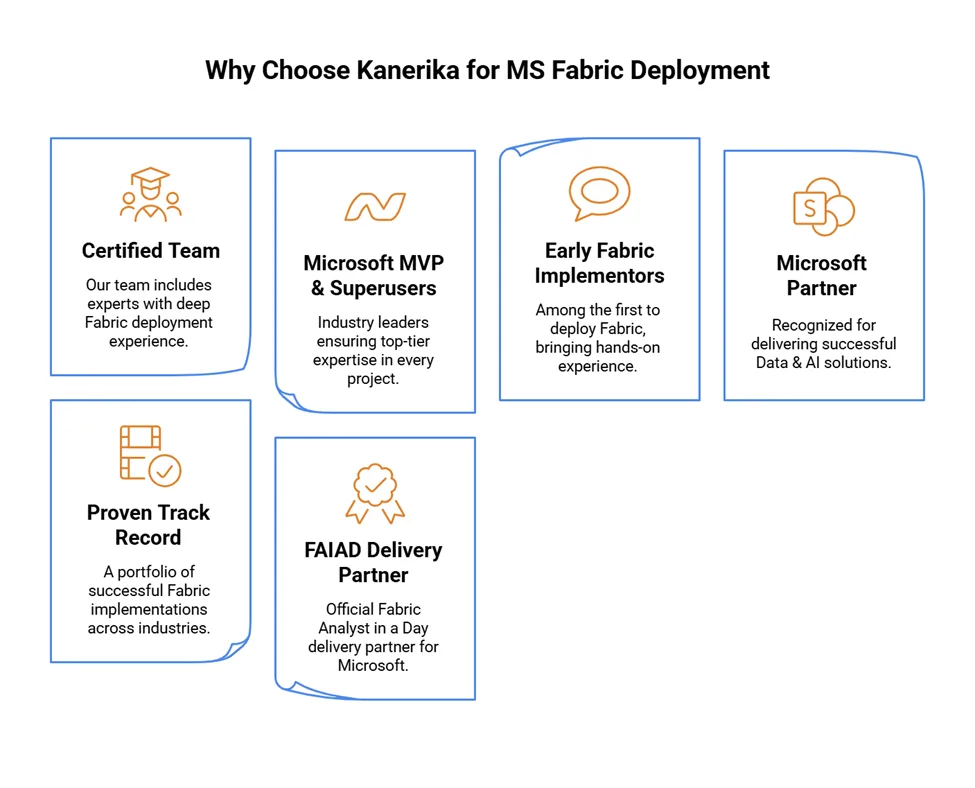

As a premier data and AI solutions company, Kanerika understands the importance of moving from legacy systems to modern data platforms. Upgrading to modern platforms enhances data accessibility, improves reporting accuracy, enables real-time insights, and reduces maintenance costs. Businesses can leverage advanced analytics, cloud scalability, and AI-driven decision-making when they migrate from outdated systems.

However, manual migration processes are time-consuming, error-prone, and can disrupt critical business operations. A single misstep in data mapping or transformation can lead to inconsistencies, loss of historical insights, or extended downtime.

To solve this, we’ve developed custom automation solutions that streamline migrations across various platforms, ensuring accuracy and efficiency. Our automated tools facilitate seamless migrations from SSRS to Power BI, SSIS and SSAS to Fabric, Informatica to Talend/DBT, and Tableau to Power BI, reducing effort while maintaining data integrity.

Partner with Kanerika for smooth, automated, and risk-free data modernization services.

FAQs

Can I write data from PySpark directly into Microsoft Fabric Warehouse?

Yes, starting with Fabric Runtime 1.3, you can write PySpark DataFrames directly to a Fabric Warehouse using the .write.synapsesql() method. This makes it easy to move data from Spark into your warehouse tables without needing extra steps or conversions.

Is it necessary to connect a notebook to a Lakehouse even if I only work with a Warehouse?

Yes, a connection to a Lakehouse is still required when you create a new notebook. This is because Spark needs a Lakehouse to start the session. But after the notebook is up and running, you’re free to work only with Warehouse tables. You don’t have to read from or write to the Lakehouse if you don’t need it.

How do I read from a warehouse in another workspace?

To access tables from a different workspace, add .option(Constants.WorkspaceId, "<workspace_id>") before your .synapsesql() call. You can find the workspace ID in the URL of the workspace you want to connect to, right after /groups/. This lets you reach across workspaces and use shared data.

Can I overwrite an existing warehouse table using PySpark?

Overwriting an existing warehouse table isn’t supported by default. If you try to write to a table that already exists, the operation will fail unless you handle it manually. To avoid issues, you should either drop the existing table first or write your data to a new table altogether.

What Spark version is required for Fabric Warehouse read/write?

Fabric Runtime 1.3 is the minimum version that supports warehouse read and write. It comes with Spark 3.5 and Delta Lake 3.2. Older runtimes, like 1.2, don’t support writing to warehouse tables, so make sure you’re using the correct version if you plan to use this feature.

Do I need to install any external packages to use the Fabric Spark connector?

No extra packages are needed. The necessary libraries, such as com.microsoft.spark.fabric and its constants, are already included in the Fabric runtime. Just make sure to import them at the top of your notebook to use them properly.

Can I filter or limit data when reading from a warehouse in PySpark?

Yes. After calling .synapsesql() to read from the warehouse, you can use typical DataFrame methods like .filter() and .limit() to narrow down the data. This helps manage memory usage and improves performance when you don’t need the full table.