Generative AI is a game-changer, allowing businesses to create and simulate data that never existed before. It is a subset of artificial intelligence (AI) that focuses on generating new data by learning patterns from existing data. This comprehensive guide will take you through everything you need to know about the generative AI tech stack.

What is Generative AI?

Generative AI is a form of artificial intelligence that utilizes algorithms and machine learning to produce original content like images, music, and text. It leverages statistical algorithms and generative modeling to identify patterns in data and generate new content. This technology finds applications in various industries, but concerns about its potential misuse exist.

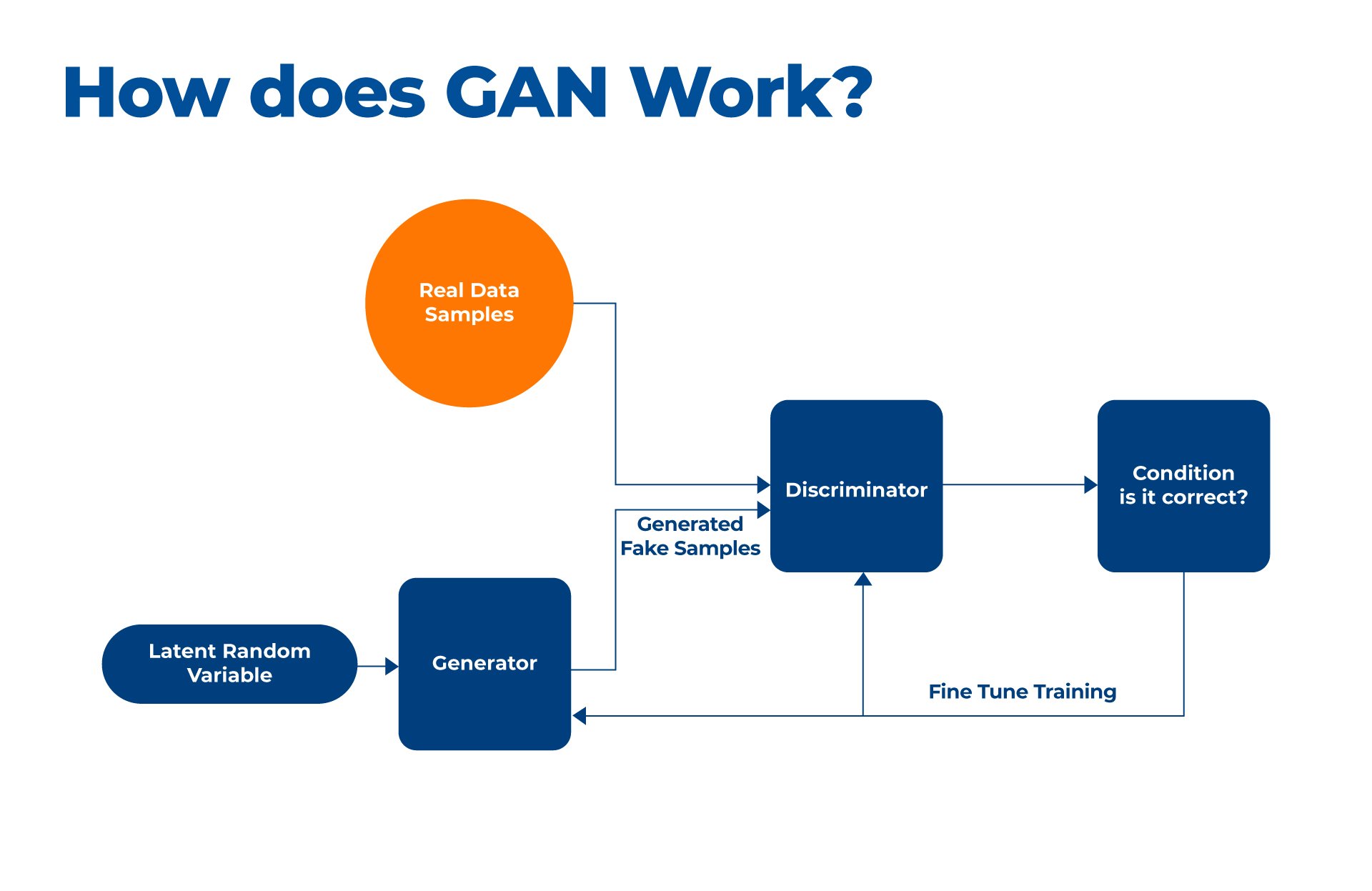

1. GAN – Generative Adversarial Network

A GAN, or Generative Adversarial Network, is a generative model that trains two neural networks against each other. The generator produces fake data, while the discriminator tries to tell real data from fake. Through this adversarial setup, each network continuously challenges the other, which improves the quality and realism of the generated data over time. GANs have proved effective at creating realistic images, audio, and even video, making them a powerful tool in art, design, and entertainment. Because they learn to generate output from large amounts of data, GANs are applied across many domains, from content generation and data analysis to social media.
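The adversarial setup described above can be written down as two competing loss functions. The following is a minimal sketch, assuming the common binary cross-entropy formulation with the non-saturating generator loss. The function names and the sample probabilities are illustrative and not taken from any particular library.

```python
import math

def bce(prob, target):
    """Binary cross-entropy for one predicted probability and a 0/1 target."""
    eps = 1e-12  # clamp to avoid log(0)
    prob = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(prob) + (1 - target) * math.log(1.0 - prob))

def discriminator_loss(d_real, d_fake):
    """The discriminator wants real samples scored 1 and generated samples scored 0."""
    real_term = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """The generator wants the discriminator to score its samples as real (1)."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs: probability that a sample is real.
d_real = [0.9, 0.8]
d_fake_early = [0.1, 0.2]  # early in training, fakes are easily caught
d_fake_later = [0.5, 0.6]  # later, the generator fools the discriminator more often

print(discriminator_loss(d_real, d_fake_early))
print(generator_loss(d_fake_early), generator_loss(d_fake_later))
```

As the generator improves, its own loss falls while the discriminator's loss rises, which is the tug-of-war that drives both networks to improve.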

2. Transformers

Transformers are a neural network architecture that uses self-attention mechanisms to process input data. They have revolutionized natural language processing, with models like GPT-4 generating coherent, human-like text in conversational AI settings. Their application has expanded beyond text generation to language translation, summarization, and even generating programming code, making them invaluable in various sectors. This breakthrough has also opened up creative applications, including art and music generation, and transformers now power generative AI solutions for content creation and customer service in various industries.
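To make the self-attention idea concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It assumes the token vectors are used directly as queries, keys, and values; real transformers first apply learned projection matrices, which are omitted here. The token vectors are made-up values for illustration.

```python
import math

def softmax(xs):
    """Turn raw scores into weights that sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three hypothetical token embeddings of dimension 2.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights shows how much one token attends to every token in the sequence, including itself.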

3. Variational Autoencoders (VAEs)

Variational Autoencoders (VAEs) are generative models that learn from existing data and generate new data through encoding and decoding: an encoder compresses each input into a distribution over a latent space, and a decoder reconstructs data from samples drawn from it. VAEs are commonly applied to image and text generation tasks. One of their key advantages is this probabilistic approach, which results in unique and diverse outputs. However, VAEs require a significant amount of training data and extensive tuning to produce high-quality output. By employing VAEs, researchers and practitioners can explore applications such as content generation, video generation, and other creative endeavors.
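The probabilistic approach mentioned above usually comes down to two pieces: sampling a latent vector from the encoder's predicted distribution, and penalizing that distribution for drifting away from a standard normal prior. The sketch below assumes a diagonal Gaussian encoder output (a mean and a log-variance per dimension). The values of `mu` and `log_var` are hypothetical stand-ins for what a trained encoder would produce.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps, with eps drawn from N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL divergence from N(mu, sigma^2) to N(0, 1), summed over dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))

rng = random.Random(0)                  # seeded for repeatability
mu, log_var = [0.5, -1.0], [0.0, -2.0]  # hypothetical encoder outputs
z1 = reparameterize(mu, log_var, rng)
z2 = reparameterize(mu, log_var, rng)

print(z1, z2)                           # two different latent samples for the same input
print(kl_to_standard_normal(mu, log_var))
```

Because each call draws fresh noise, the same input yields different latent samples, which is where the diversity of VAE outputs comes from.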

4. Multimodal Models

Multimodal models, which combine different types of data, such as text and images, are incredibly versatile in generating diverse outputs. These models have many applications, from creating art to generating realistic language. By leveraging pre-trained models and transfer learning, multimodal models improve efficiency in tasks like content generation and video generation. Some popular examples of multimodal models include DALL-E, CLIP, and GPT-4. The field is constantly evolving as researchers develop new techniques and architectures. Trained on large amounts of data, these models can generate outputs across various domains, such as realistic interior design concepts. Multimodal models have become integral to generative AI solutions, driving advancements and pushing the boundaries of what’s possible.

An Overview of the Generative AI Stack

The generative AI stack encompasses various components that work together to enable the development and deployment of generative AI applications. These components include models, data, application frameworks, evaluation platforms, and deployment tools. Generative models like GANs and transformers are the foundation of the stack: they learn from large amounts of input data to generate new, realistic data. Cloud platforms such as Google Cloud and Microsoft Azure provide infrastructure support, offering scalable resources for training and inference. Together, the stack handles crucial tasks like data processing, model training, performance evaluation, and deployment. This empowers organizations to apply generative models to a wide range of industries and use cases, from data analysis and social media to interior design.

1. Application Frameworks: The Cornerstone of the Generative AI Stack

Application frameworks are pivotal in the generative AI stack, acting as the backbone of the development process. Leading frameworks such as TensorFlow and PyTorch, along with emerging tools, offer developers a comprehensive suite of libraries and utilities tailored for generative AI applications. These frameworks are continuously updated with advanced features that make it easier to build sophisticated AI models.

A vital advantage of these frameworks lies in their versatility. They support a broad spectrum of generative AI use cases, not limited to image and text generation but also extending to audio synthesis, 3D model creation, and bespoke solutions for sectors like healthcare, finance, and digital arts. This versatility allows developers to harness the power of generative AI technology efficiently, even without extensive expertise in low-level programming languages.

By providing a high-level abstraction layer, these application frameworks enable developers to focus more on the creative and innovative aspects of generative AI solutions. This approach democratizes access to AI technology, allowing a wider range of developers to contribute to the field.

Furthermore, these frameworks have evolved to handle large volumes of data more efficiently, which makes data analysis and model training more practical. This development is crucial for implementing generative AI applications in a stable and scalable way.

Whether for generating photorealistic images, creating complex music compositions, or assisting in content generation, application frameworks have become more robust and user-friendly. They empower developers to explore the full potential of generative AI technology, paving the way for groundbreaking applications that were once considered futuristic.

2. Models: Generative AI’s Brain

Generative models, prominently including GANs (Generative Adversarial Networks), transformers, and other evolving architectures, form the crux of generative AI technology. These models utilize advanced neural network designs to identify patterns in extensive datasets, enabling new, realistic content generation. With continuous improvements, these models have become more adept at producing high-quality outputs that are increasingly difficult to distinguish from human-generated content.

A significant advantage of generative models is their fine-tuning ability, allowing customization to specific tasks and objectives. This adaptability has broadened the scope of generative AI, making it instrumental in diverse sectors such as healthcare, where it assists in drug discovery and patient care, finance for predictive analysis, and entertainment for content creation.

Moreover, advances in deep learning, including diffusion-based approaches, enhance the models’ ability to learn from massive volumes of data. This capability has opened doors to creating intricate and expansive knowledge bases, further extending the utility of generative AI.

3. Data: Feeding Information to the AI

Data is a vital component in generative AI, serving as the fuel that enables models to learn and create. Training generative models effectively requires large amounts of relevant data. Before it is fed to a model, the data goes through crucial steps such as processing, cleaning, and augmentation to ensure its quality and suitability for training. When working with generative AI technology, it is also essential to consider data privacy and handle sensitive information responsibly. Depending on specific needs, generative models can be trained on various data sources, including proprietary data, data held in vector databases, or open-source datasets. By harnessing these diverse sources of information, generative AI can generate realistic content across different domains.
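As an illustration of the cleaning and augmentation steps mentioned above, here is a minimal sketch for a small text dataset. The sample records, the case-insensitive duplicate rule, and the word-dropping augmentation are all assumptions chosen for brevity; a real pipeline would pick steps suited to its data.

```python
import random

def clean(records):
    """Normalize whitespace, drop empty rows, and remove case-insensitive duplicates."""
    seen, cleaned = set(), []
    for text in records:
        normalized = " ".join(text.split())
        key = normalized.lower()
        if normalized and key not in seen:
            seen.add(key)
            cleaned.append(normalized)
    return cleaned

def augment(text, rng, drop_prob=0.1):
    """Toy augmentation: randomly drop words to create a noisy variant."""
    words = text.split()
    kept = [w for w in words if rng.random() >= drop_prob]
    return " ".join(kept) if kept else text

# Hypothetical raw records with stray whitespace, a duplicate, and an empty row.
raw = ["  A red   sofa in a bright room ", "a red sofa in a bright room", "", "Blue chair"]
rng = random.Random(42)  # seeded so the augmentation is repeatable

dataset = clean(raw)
augmented = [augment(t, rng) for t in dataset]
print(dataset)
print(augmented)
```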

4. The Evaluation Platform

In the realm of generative AI, the effectiveness and reliability of models are paramount. The evaluation platform is integral to this, offering advanced tools and metrics designed to assess the performance of generative AI models comprehensively. This includes evaluating the quality, diversity, realism, and originality of the content generated. Modern evaluation platforms go beyond traditional measures, incorporating user experience feedback and ethical considerations into the evaluation process.

Given generative AI’s increasing complexity and range of applications, it’s crucial to identify model limitations and biases. Developers can use these insights for targeted improvements, ensuring that the models are not only efficient but also equitable and unbiased.

Furthermore, the evaluation platform aids in refining prompt engineering, a key practice when working with models like ChatGPT, and supports in-depth analysis of outputs across varied domains. This is essential for debugging models and optimizing their performance to align with specific use cases and industry standards.
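One simple way to put a number on the diversity of generated text is the share of unique n-grams across a batch of outputs, often called distinct-n. The sketch below is one possible implementation under simple assumptions (whitespace tokenization, lowercase matching), and the sample outputs are invented for illustration.

```python
def distinct_n(texts, n=2):
    """Share of unique n-grams among all n-grams in the generated texts."""
    ngrams = []
    for text in texts:
        tokens = text.lower().split()
        ngrams.extend(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return len(set(ngrams)) / len(ngrams) if ngrams else 0.0

# Hypothetical model outputs: one repetitive batch, one varied batch.
repetitive = ["the cat sat", "the cat sat", "the cat sat"]
varied = ["the cat sat", "a dog ran home", "birds fly south"]

print(distinct_n(repetitive), distinct_n(varied))
```

A score close to 1 means almost every n-gram is unique, while a low score signals that the model keeps repeating itself. A metric like this covers only diversity; quality and realism need separate measures.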

Another critical function of the evaluation platform is ensuring compliance with evolving ethical standards and regulations, especially in sectors like healthcare and finance, where the impact of generative AI is profound. It serves as a checkpoint to guarantee that the AI models adhere to ethical guidelines and legal requirements.

By providing a detailed and multifaceted assessment, the evaluation platform is a cornerstone in the generative AI tech stack. It validates the model’s capabilities and shapes the path for future enhancements, ensuring that generative AI solutions meet the highest performance standards, user satisfaction, and ethical responsibility.

5. Deployment: Moving Applications into Production

Deployment is the crucial step of moving generative AI applications from development to production environments. Cloud providers, like Google Cloud Platform, offer the necessary tools and infrastructure support for this process. During deployment, models are optimized to ensure scalability and compatibility with the cloud infrastructure. The process also involves user experience, data privacy, and community support considerations. By integrating deployed generative AI applications into existing systems, industries such as healthcare, e-commerce, and media can derive significant value. Additionally, deployment involves ongoing data management and monitoring so that models keep performing well in production.

A Detailed Analysis of the Generative AI Tech Stack

The generative AI tech stack encompasses various components for developing generative AI solutions. It includes models, data, application frameworks, evaluation platforms, and deployment tools. Models such as GANs and transformers form the foundation of generative AI technology. They enable the creation of realistic and diverse outputs. Data processing, privacy, and selection are crucial considerations in generative AI development. Application frameworks like TensorFlow and PyTorch provide the necessary tools, libraries, and utilities for training generative AI models. Evaluation platforms are significant in measuring model performance, assessing content quality, and supporting prompt engineering. Finally, deployment tools and cloud infrastructure facilitate the smooth transition of generative AI applications into production environments. By utilizing this comprehensive tech stack, developers can unlock the full potential of generative AI and drive innovation in various fields.

Layer 1: Hardware

Choosing the proper hardware infrastructure is crucial for optimizing the performance of generative AI solutions. To support the training and deployment of generative models, it’s essential to consider factors such as processing power, memory, and storage when selecting hardware. The hardware capabilities play a critical role in the efficiency and effectiveness of generative AI applications.
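A quick back-of-the-envelope calculation helps when sizing memory. The sketch below estimates only the memory needed to hold a model's weights, using the standard byte widths of common numeric formats. The 7-billion-parameter model is a hypothetical example, and real workloads need additional memory for activations, gradients, and optimizer state.

```python
# Bytes used per parameter for common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

def weight_memory_gb(num_params, dtype="fp16"):
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

# Hypothetical 7-billion-parameter model.
for dtype in BYTES_PER_PARAM:
    print(dtype, weight_memory_gb(7_000_000_000, dtype), "GB")
```

Treat the result as a lower bound when comparing against the memory of a GPU or other accelerator.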

You need enough compute capacity to handle large amounts of data efficiently. Whether you’re working with interior design models or analyzing data from social media, your hardware should be capable of handling the computational demands of generative modeling.

Additionally, ensuring that your hardware setup complies with intellectual property requirements and provides a secure environment for your generative AI solution is essential.

Layer 2: Cloud

Cloud computing is crucial in the generative AI tech stack, providing the necessary infrastructure for the technology to thrive. It offers scalable and cost-effective data storage and processing capabilities, making it an ideal solution for handling large amounts of data required by generative AI models.

Cloud platforms like AWS and Azure speed up development by offering pre-built machine learning models and tools that developers can build on. Azure, for example, provides access to OpenAI’s models.

Additionally, cloud-based machine learning enables real-time decision-making and continuous learning, allowing generative AI solutions to adapt and improve over time. Security and reliability measures are also built into cloud platforms, protecting valuable data and applications. By utilizing the cloud, businesses can effectively harness the power of generative AI for various applications, from social media content generation to interior design models, while safeguarding their intellectual property.

Layer 3: Model

The heart of any generative AI system lies in its model, which generates fresh content based on input data. Various models are available, including autoencoders, variational autoencoders, and generative adversarial networks (GANs). The quality of a model is determined by factors such as accuracy, diversity, and scalability. Training the model requires substantial data, computing power, and meticulous tuning of hyperparameters. Once trained, the model can generate new content in real time or as part of a larger system. Deep knowledge and expertise at the model layer are crucial for developing robust and effective generative AI solutions. With large amounts of data and a well-chosen model, generative AI opens up possibilities across various domains, from social media content generation to interior design, while remaining mindful of intellectual property and ethical considerations.

To achieve these capabilities, researchers and organizations often build on advanced pre-trained generative models, such as the GPT models behind OpenAI’s ChatGPT.

Layer 4: MLOps

MLOps, short for Machine Learning Operations, is the practice of managing and automating the machine learning lifecycle within a generative AI system. It is crucial for ensuring that machine learning models are deployed reliably and at scale. MLOps encompasses various tasks, including version control, testing, monitoring, and deployment. MLOps tools such as Kubeflow, MLflow, and TensorFlow Serving can automate these tasks, saving time and reducing errors. This allows for a seamless integration of the generative AI solution into the existing tech stack.
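To illustrate the version control and deployment tasks named above, here is a toy, in-memory model registry with a quality gate. It is a hypothetical sketch: the `ModelRegistry` class, its methods, and the `eval_score` metric are invented for this example and do not reflect the API of Kubeflow, MLflow, or TensorFlow Serving.

```python
import hashlib
import json

class ModelRegistry:
    """In-memory stand-in for a model registry; not the API of any real tool."""

    def __init__(self):
        self.versions = []
        self.production = None

    def register(self, params, metrics):
        # Hash the parameters so identical configurations get the same fingerprint.
        blob = json.dumps(params, sort_keys=True).encode()
        entry = {
            "version": len(self.versions) + 1,
            "fingerprint": hashlib.sha256(blob).hexdigest()[:12],
            "params": params,
            "metrics": metrics,
        }
        self.versions.append(entry)
        return entry

    def promote(self, version, min_score):
        """Promote a version to production only if it passes the quality gate."""
        entry = self.versions[version - 1]
        if entry["metrics"]["eval_score"] >= min_score:
            self.production = entry
            return True
        return False

registry = ModelRegistry()
registry.register({"lr": 0.001, "layers": 4}, {"eval_score": 0.71})   # made-up run
registry.register({"lr": 0.0005, "layers": 6}, {"eval_score": 0.83})  # made-up run

first_ok = registry.promote(1, min_score=0.8)
second_ok = registry.promote(2, min_score=0.8)
print(first_ok, second_ok, registry.production["version"])
```

Real MLOps tools add persistent storage, artifact tracking, and monitoring on top of this basic idea of recording every version and gating what reaches production.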

MLOps also enables efficient management of large amounts of data, ensuring that the model layer has access to the necessary knowledge base for generating accurate and diverse outputs.

As generative modeling becomes more widely adopted, MLOps is poised to enhance the capabilities of AI in various domains, from data analysis to social media and even interior design, while protecting intellectual property and maintaining ethical standards.

Deep Learning Frameworks for Generative AI

Deep learning frameworks play a crucial role in developing generative AI solutions. Let’s discuss them one by one.

1. TensorFlow

TensorFlow, an open-source software library, is widely renowned for its role in dataflow and differentiable programming. It empowers the creation of machine learning models, including neural networks and deep learning architectures. One of the key factors contributing to TensorFlow’s popularity is its vibrant and extensive community, which facilitates knowledge sharing and provides access to pre-built models and tools. Designed to be versatile, TensorFlow supports multiple programming languages such as Python, C++, Java, and Go. Developed by the Google Brain team, TensorFlow continues to hold its position as one of the most sought-after AI frameworks in the industry. Its capabilities extend beyond traditional AI applications, making it a stable foundation for researchers and practitioners. With TensorFlow, organizations can leverage large amounts of data while deploying generative AI solutions for various domains, ranging from social media analysis to interior design.

2. PyTorch

PyTorch is an open-source machine learning library known for its dynamic computational graph. It has gained popularity among researchers and developers due to its flexibility and ease of use. With support for both GPU and CPU computing, PyTorch allows faster training and inference, making it a preferred choice for building generative AI solutions. The platform offers a wide range of pre-built models for various domains, such as natural language processing and computer vision. In addition, PyTorch benefits from a large, supportive community that provides extensive resources for users at all levels. This thriving ecosystem, together with PyTorch’s capabilities, makes it a valuable tool for generative AI work.

3. Keras

Keras is a Python-based high-level neural network API with a user-friendly interface for building deep learning models. It runs on top of lower-level deep learning frameworks: earlier versions supported TensorFlow, CNTK, and Theano, while Keras 3 supports TensorFlow, JAX, and PyTorch. With Keras, developers can easily experiment and build complex models such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs). Its intuitive design and extensive community support make it a popular choice among AI practitioners. Keras simplifies the process of defining model layers and combining them into complete architectures. Its wide adoption in the industry is evident from its usage in diverse fields like data analysis, social media, and even interior design. As part of the generative AI tech stack, Keras offers a versatile solution for developers and researchers seeking powerful tools to create generative AI models.

4. Caffe

Caffe is a widely used open-source deep-learning framework for image classification, segmentation, and object detection tasks. Developed by the Berkeley Vision and Learning Center (BVLC), Caffe is written in C++ with a Python interface. With its support for popular neural network architectures like CNNs and RNNs, Caffe offers robust AI capabilities. It also includes pre-trained models for image classification using the ImageNet dataset. One of the key advantages of Caffe is its user-friendly interface, which allows users to design, train, and deploy custom models with little effort, making it a powerful resource for researchers and developers alike.

How to Build Generative AI Models?

Building generative AI models requires a strong understanding of machine learning and programming. Select a framework like TensorFlow or PyTorch to construct your model, then gather and preprocess relevant data for the desired task. Continuously experiment, refine, and tweak your model to achieve optimal results.

Step 1: Objective and System Mapping

Defining the objective clearly and understanding the problem you aim to solve are crucial to building your generative AI solution. This step involves mapping out the system to which you will apply generative AI, including identifying data sources and potential outputs. It’s important to consider constraints or limitations, such as computational resources and available data. Consulting with domain experts and stakeholders helps ensure alignment with business needs. As you progress, document your findings and revisit the objective and system mapping as needed throughout the model-building process. By taking these steps, you lay a strong foundation for developing a tailored generative AI solution that addresses your specific requirements and goals.

Step 2: Building the Infrastructure

Building the infrastructure is a crucial step in the generative AI journey. It involves setting up the necessary hardware and software components to support your AI models. The hardware requirements may include powerful GPUs and CPUs to handle the computational demands of training and inference. Additionally, storage devices are necessary to store and access large amounts of data efficiently.

Software frameworks like TensorFlow and PyTorch are essential for building and training your generative AI models. These frameworks provide the necessary tools and libraries to implement complex neural networks and optimize them for performance. Cloud platforms like AWS and Google Cloud offer pre-configured infrastructure designed explicitly for AI models, making scaling and deploying your solutions easier.

Efficient infrastructure is paramount for training and deploying generative AI models successfully. It ensures you have the necessary resources and capabilities to handle the computational complexity.

Step 3: Model Selection

When building generative AI models, model selection plays a crucial role. Several factors should be considered, such as the type of data, model complexity, and training time. Popular options for generative AI models include GANs, VAEs, and autoregressive models. Each model has strengths and weaknesses, so choosing the one that best fits your specific use case is important. To make an informed decision, experimentation and iteration are key. By exploring different models and evaluating their performance, you can identify the most suitable option for your needs. This process ensures that your generative AI solution leverages the right model layer to achieve the desired outcomes. Ultimately, the success of your generative AI project relies on selecting the most effective model to generate content.
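The selection process described above can be reduced to a simple comparison once each candidate has been evaluated. This sketch assumes you have already measured a validation score and training time per candidate. The numbers below are placeholders for illustration, not benchmark results.

```python
# Placeholder evaluation results; real values come from your own experiments.
candidates = [
    {"name": "GAN", "val_score": 0.78, "train_hours": 30},
    {"name": "VAE", "val_score": 0.74, "train_hours": 8},
    {"name": "autoregressive", "val_score": 0.81, "train_hours": 60},
]

def select_model(candidates, max_train_hours):
    """Pick the best-scoring candidate that fits the training-time budget."""
    affordable = [c for c in candidates if c["train_hours"] <= max_train_hours]
    if not affordable:
        return None  # nothing fits the budget
    return max(affordable, key=lambda c: c["val_score"])

print(select_model(candidates, max_train_hours=40))
print(select_model(candidates, max_train_hours=100))
```

Changing the budget changes the winner, which is why the constraints identified in Step 1 matter as much as raw model quality.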

Step 4: Training and Refinement

To ensure the accuracy and robustness of generative AI models, step 4 involves training and refinement. A large and diverse training dataset provides a solid foundation for the model. Through iterative refinement and rigorous testing, it is possible to enhance the model’s accuracy and performance. Additional fine-tuning with more data or hyperparameter adjustments can further optimize the model’s capabilities. Monitoring performance metrics such as loss and accuracy throughout the training process helps guide the refinement process. It’s essential to balance model complexity and generalizability to achieve optimal performance.
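One common way to act on monitored metrics is early stopping: halt training when validation loss stops improving, which also guards against overfitting an overly complex model. The sketch below is a minimal version of that idea. The `patience` and `min_delta` parameters and the loss values are illustrative assumptions.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (epoch at which training stops, epoch with the best validation loss)."""
    best_loss, best_epoch, waited = float("inf"), -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # Improvement: remember it and reset the patience counter.
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

# Hypothetical validation losses per epoch: improving, then drifting upward.
val_losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.64]
stop_epoch, best_epoch = early_stopping_epoch(val_losses)
print(stop_epoch, best_epoch)
```

In practice you would keep the model checkpoint from the best epoch rather than the one at which training stopped.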

Step 5: Training, Governance, and Iteration

Training teaches the model to recognize patterns and make predictions. It involves feeding large amounts of data into the model and adjusting its parameters to minimize errors. Governance is crucial in ensuring the generative AI solution operates within ethical and legal boundaries. It involves monitoring and managing the model’s behavior and ensuring the accuracy of the generated content, including in customer-facing uses. Iteration, on the other hand, focuses on refining the model by incorporating feedback and new data. This iterative approach helps improve the model’s performance over time.
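The idea of adjusting parameters to minimize errors is easiest to see on a tiny model. The following sketch fits a straight line with gradient descent on mean squared error. It is a deliberately simplified stand-in for training a generative model, and the data, learning rate, and epoch count are chosen only for illustration.

```python
def train(xs, ys, lr=0.05, epochs=500):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    losses = []
    for _ in range(epochs):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        losses.append(sum(e * e for e in errors) / n)
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Step against the gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, losses

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated from y = 2x + 1
w, b, losses = train(xs, ys)
print(round(w, 3), round(b, 3), losses[0], losses[-1])
```

Large generative models follow the same loop at a much greater scale, with millions or billions of parameters and a loss suited to the content being generated.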

Future Trends and Advances in Generative AI

The field of generative AI is constantly evolving, with new models, techniques, and applications emerging. Revolutionary advances in large language models, such as OpenAI’s GPT, have transformed text generation tasks. Generative models are now being applied across various industries, including drug discovery, interior design, and content creation. The development of generative AI must also address concerns surrounding privacy and data protection. Moreover, community-driven development, open-source tools, and knowledge sharing are pivotal in advancing the generative AI technology stack. By leveraging these capabilities, organizations can analyze large amounts of data, build knowledge bases, and develop robust generative AI solutions while safeguarding intellectual property. With the integration of generative modeling, the possibilities for innovation and creativity in AI continue to expand.

Conclusion

The generative AI tech stack holds immense importance across various industries, enabling a wide range of tasks to be accomplished. As generative AI evolves, new applications and use cases are emerging, showcasing the vast potential of this technology, and the ever-increasing demand for generative AI applications in diverse industries fuels this growth.

If you’re ready to dive deeper into generative AI and explore its limitless possibilities, we invite you to book a free consultation with our experts. Get hands-on experience and discover how generative AI can transform your business. Don’t miss out on this opportunity to unlock the true potential of generative AI.

FAQs

What is the tech stack of generative AI?

Generative AI’s tech stack isn’t a single thing, but a flexible combination. It heavily relies on deep learning models, particularly large language models (LLMs) and transformers, trained on massive datasets. Crucially, it also needs powerful hardware (GPUs) and specialized software frameworks for training and deployment. Finally, data processing and management systems are essential for handling the immense volume of data involved.

What is full stack generative AI?

Full-stack generative AI isn’t just about creating cool images or text; it’s the whole shebang. It encompasses all stages, from data ingestion and processing to model training, deployment, and user interface design – a complete, end-to-end system for creating AI-generated content. Think of it as building the entire factory, not just the assembly line. It demands expertise across many AI-related disciplines.

What are the three layers of the generative AI stack?

Generative AI isn’t a single thing, but a stack of technologies. At the base are foundational models, the massive pre-trained neural networks. Above that is the application layer, where these models are fine-tuned and used for specific tasks. Finally, the user interface layer provides the human-friendly interaction, like chatbots or image generators. These layers work together to create the experience.

What tech stack does AI use?

AI doesn’t use a single “tech stack” like a website. It leverages a diverse range of tools depending on the specific task – from programming languages like Python and R for data manipulation, to cloud platforms like AWS or Google Cloud for processing power, and specialized libraries for machine learning algorithms. Essentially, it’s a highly adaptable and evolving combination of technologies.

What are generative AI tools?

Generative AI tools are like creative digital assistants. They use vast datasets to learn patterns and then generate entirely new content, such as text, images, or code, based on your prompts. Unlike traditional AI focused on analysis, these tools *create* things. Think of them as sophisticated prediction engines turned artists or writers.

Will generative AI replace developers?

No, generative AI won’t replace developers; it’s more of a powerful assistant. Think of it like a supercharged code editor, boosting productivity by automating tedious tasks. Developers will still be crucial for designing, problem-solving, and ensuring ethical and robust applications. The future is collaboration, not replacement.

Is chatbot a generative AI?

Not all chatbots are generative AI, but many modern ones are. A simple rule-based chatbot just follows pre-programmed responses, while a generative AI chatbot creates novel text or other content in response to prompts. Generative AI gives chatbots a far more dynamic and human-like conversational ability. Essentially, generative AI is a *type* of technology that *can be* used to power a chatbot.

What are generative AI models?

Generative AI models are like creative digital artists. Instead of copying existing data, they learn patterns from it and then use that knowledge to create entirely new content – images, text, music, even code. Think of it as teaching a computer to imagine and build rather than simply memorize. They’re powered by complex algorithms that enable this novel content generation.

What is the infrastructure layer of generative AI?

Generative AI’s infrastructure is the unseen powerhouse behind its magic. It’s a complex web of massive computing resources (think thousands of GPUs), high-speed networking to connect them, and vast storage systems to hold the enormous datasets needed for training and operation. Essentially, it’s the hardware and software foundation enabling the AI models to generate content. Without this robust infrastructure, generative AI simply wouldn’t exist.

What is the most famous generative AI?

There’s no single “most famous” generative AI, as fame depends on the audience. However, DALL-E 2 and Stable Diffusion are frequently cited for their image generation capabilities, while ChatGPT enjoys widespread recognition for its text-based conversational skills. Ultimately, the “most famous” is subjective and shifts with time and emerging technologies.

What is the future of generative AI?

Generative AI’s future is bright but uncertain. We’ll see increasingly sophisticated models creating more realistic and nuanced content across various media, blurring lines between human and AI creation. Ethical concerns around misuse and job displacement will require careful navigation. Ultimately, its success hinges on responsible development and integration into society.

Does generative AI use deep learning?

Generative AI heavily relies on deep learning, specifically neural networks with many layers. These complex networks learn intricate patterns from vast datasets to generate new, similar content. Think of it as learning the “grammar” of images, text, or music to create novel outputs. Essentially, deep learning provides the sophisticated engine for generative AI’s creative abilities.

What tech stack is used for AI?

The “AI tech stack” isn’t one fixed thing; it’s highly variable depending on the specific AI application. You’ll usually find programming languages like Python (with libraries like TensorFlow or PyTorch), cloud computing platforms (AWS, Google Cloud, Azure), and specialized hardware (GPUs or TPUs) all playing crucial roles. The exact mix depends on factors like model size, data volume, and performance needs.