In November 2025, Azure Databricks users across West Europe faced nearly 10 hours of service disruptions. In October, customers in Australia East couldn’t launch clusters or create virtual machines. These outages aren’t rare. According to monitoring data, Databricks has tracked over 130 incidents since 2023, affecting thousands of enterprise customers.

At the same time, Databricks hit $3.7 billion in revenue with 50% year-over-year growth. Over 10,000 companies now depend on the platform for AI, analytics, and data engineering. As adoption grows, so does the cost of downtime. A single cluster failure can halt business operations and waste thousands in compute resources.

This guide covers the most common Databricks problems you’ll encounter in production. From cluster failures to slow performance and data access errors, you’ll learn how to diagnose issues faster, apply proven fixes, and prevent problems before they impact your workflows.

Key Takeaways

- Most Databricks issues come from cluster setup, permissions, or configuration errors.

- Always check logs before changing settings — driver and Spark UI logs reveal the real cause.

- Use the Databricks AI Assistant to explain errors and suggest fixes faster.

- Keep libraries, runtimes, and permissions consistent across all clusters.

- Optimize performance using Auto-Optimization, Predictive Scaling, and AQE.

- Fix data access issues by verifying IAM roles and Unity Catalog privileges.

- Prevent future problems with version control, monitoring, and regular audits.

Understanding Databricks Components for Effective Troubleshooting

Before diving into specific problems, you need to understand how Databricks components connect. Many issues stem from gaps in setup or misunderstanding how different parts interact. Once you know the architecture, troubleshooting becomes much faster.

1. Workspace and Access Control

Your Databricks workspace is where everything happens. You write notebooks, manage jobs, connect to data sources, and control user permissions here. Access control at the workspace level is critical for troubleshooting. A small permission error can stop notebooks from running or block scheduled jobs entirely.

- Check workspace permissions first when notebooks won’t execute.

- Review group roles in the admin console for blocked workflows.

- Verify service principal access for automated jobs.

- Confirm Unity Catalog grants for data access issues.

2. Clusters and Compute Resources

Clusters are the compute engines that run your code. They’re groups of virtual machines working together to process data using Apache Spark. Understanding cluster types helps you troubleshoot faster.

- All-purpose clusters support interactive development and testing.

- Job clusters spin up automatically for scheduled tasks and shut down when complete.

- Shared clusters can cause resource conflicts between users.

Most Databricks performance issues and errors start at the cluster level. Always check cluster logs first when something fails.

3. Storage and Unity Catalog

Databricks connects to external cloud storage like S3, Azure Data Lake Storage, or Google Cloud Storage instead of storing data internally. Unity Catalog manages data governance and controls who can access datasets across teams.

- Verify storage credentials and mount points for access issues.

- Check Unity Catalog permissions for permission denied errors.

- Confirm correct path formats for your storage type.

Fixing Databricks Cluster Issues

Cluster problems account for most Databricks troubleshooting scenarios. When clusters fail to start, crash during execution, or perform slowly, everything downstream stops working. Learning to diagnose cluster issues quickly saves hours of frustration.

1. Cluster Won’t Start or Fails Immediately

This is the most common Databricks cluster problem. Your cluster might hang in “Pending” state, start and terminate instantly, or fail with vague error messages. Configuration errors like wrong instance types or invalid Spark settings trigger immediate failures. Permission problems occur when service accounts lack rights to create instances.

- Open cluster event logs from the Databricks UI.

- Start with the driver log for initialization errors.

- Check IAM roles and service principal permissions.

- Verify security groups and subnet configurations.

- Review instance quota limits in your cloud console.

- Clone working clusters and modify settings incrementally.

2. Memory Errors and OutOfMemoryError

Clusters that start successfully but crash during execution typically hit memory limits. This manifests as java.lang.OutOfMemoryError in driver or executor logs. Processing datasets larger than available node memory causes these failures.

- Scale clusters vertically with larger instance types.

- Add more worker nodes for horizontal scaling.

- Enable autoscaling for variable workloads.

- Repartition data to distribute load evenly.

- Avoid collect() operations on large datasets.

- Use broadcast joins for small lookup tables.

- Keep library versions consistent using init scripts.

Memory issues often indicate data processing inefficiencies rather than actual memory shortages.

3. Slow Cluster Performance

Clusters that start fine but crawl through jobs frustrate users and waste resources. Performance problems often hide behind misconfiguration or inefficient code rather than hardware limitations. Data skew causes uneven distribution where some nodes overwork while others sit idle.

- Monitor tasks through Spark UI to identify slow stages.

- Check task duration distribution for data skew patterns.

- Repartition data on better partition keys.

- Adjust spark.sql.shuffle.partitions for your data size.

- Cache only data reused multiple times.

- Unpersist cached data when no longer needed.

The Spark UI provides the most valuable information for diagnosing performance bottlenecks.

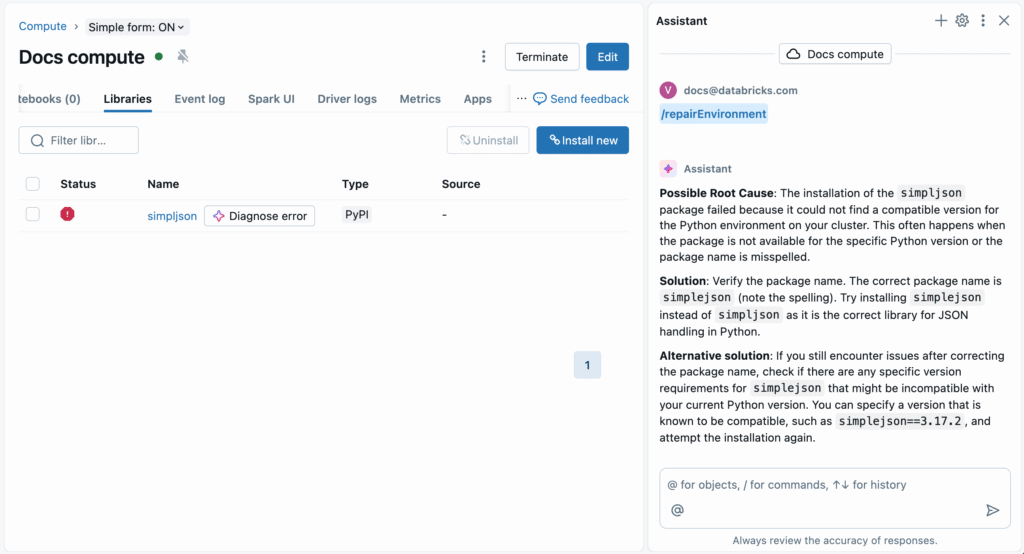

4. Library and Dependency Conflicts

Library mismatches break clusters during startup or cause runtime errors. This is especially common after upgrading Databricks runtime or when different team members install packages manually.

- Maintain a versioned list of required packages.

- Use init scripts to install libraries automatically at startup.

- Avoid manual pip install commands in shared clusters.

- Test new packages in temporary clusters first.

- Check driver logs for JAR conflicts and missing dependencies.

Consistency across clusters eliminates a huge category of avoidable problems.
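
As a rough illustration of keeping a versioned package list, the sketch below compares a pinned list against what is actually installed. The package names and versions in `PINNED` and the helper names are hypothetical; only `importlib.metadata` from the standard library is used.

```python
from importlib import metadata

def installed_versions(names):
    """Look up installed versions; packages that are not installed map to None."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions

def find_mismatches(pinned, installed):
    """Return {package: (expected, found)} for every package that differs."""
    mismatches = {}
    for name, expected in pinned.items():
        found = installed.get(name)  # None means the package is missing
        if found != expected:
            mismatches[name] = (expected, found)
    return mismatches

# Hypothetical pinned list; in practice this would live in version control.
PINNED = {"pandas": "2.1.4", "pyarrow": "14.0.2"}
drift = find_mismatches(PINNED, installed_versions(PINNED))
```

Running a check like this at the start of a job turns a confusing mid-run import error into an early, explicit report of which package drifted.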

5. Auto-Scaling and Auto-Termination Problems

Auto-scaling adjusts the number of workers based on demand, and auto-termination shuts down idle clusters. Both help control cost but can cause confusion when misconfigured.

- Clusters scale up too slowly, causing long delays.

- Jobs fail because the cluster terminated mid-run.

- Auto-scaling doesn’t trigger even when tasks queue up.

To check:

- Review your scaling thresholds.

- Make sure your Spark workload supports dynamic allocation.

- Keep termination timeouts high enough for queued jobs.

- If jobs run overnight, disable auto-termination temporarily.
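
The checks above can be sketched as a small validation over a cluster specification. The field names `autoscale`, `min_workers`, `max_workers`, and `autotermination_minutes` follow the Databricks Clusters API; the cluster name, runtime version, node type, and the `check_cluster_spec` helper are illustrative assumptions.

```python
def check_cluster_spec(spec, longest_idle_gap_minutes):
    """Return warnings for common autoscaling and auto-termination mistakes."""
    warnings = []
    autoscale = spec.get("autoscale")
    if autoscale is None:
        warnings.append("autoscale is not configured; worker count is fixed")
    elif autoscale["min_workers"] >= autoscale["max_workers"]:
        warnings.append("min_workers >= max_workers leaves no room to scale")
    timeout = spec.get("autotermination_minutes", 0)
    if timeout == 0:
        # A value of 0 disables automatic termination.
        warnings.append("auto-termination is disabled; idle clusters keep running")
    elif timeout < longest_idle_gap_minutes:
        warnings.append("auto-termination timeout is shorter than the longest gap between jobs")
    return warnings

# Illustrative spec; every value here is an assumption, not a recommendation.
spec = {
    "cluster_name": "nightly-etl",
    "spark_version": "15.4.x-scala2.12",
    "node_type_id": "i3.xlarge",
    "autoscale": {"min_workers": 2, "max_workers": 8},
    "autotermination_minutes": 30,
}
```

Passing the longest expected pause between queued jobs makes the "timeout high enough" rule explicit instead of leaving it to guesswork.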

6. Practical Troubleshooting Flow

When dealing with cluster issues, don’t guess. Follow a clear sequence.

- Open the cluster event log and note the failure stage.

- Identify if it’s a startup, runtime, or shutdown problem.

- Check the driver log first; it usually holds the main error.

- Verify compute permissions, Spark configurations, and instance limits.

- Restart with minimal settings to isolate the problem.

This step-by-step method works far better than random fixes.

Job and Notebook Failures in Databricks

Even if your cluster is healthy, jobs and notebooks can still fail for several reasons. Sometimes it’s the code, other times it’s configuration or environment mismatch. Knowing how to read Databricks job logs, retry behavior, and error types can save a lot of time.

1. Job Stuck or Timing Out

You’ll often find a job sitting in a “Running” state forever or failing after a long wait. This happens when the job queue is blocked, the cluster is overloaded, or a task inside the job is waiting for resources.

- The job is sharing a cluster that’s already running other heavy workloads.

- Network bottlenecks while reading from external storage.

- A loop or action in the code that never completes.

- Auto-scaling not adding enough workers to handle load.

How to fix it:

- Check job run details in the Databricks UI and note the task duration.

- Run the same job manually in a notebook to see where it freezes.

- If using a shared cluster, switch to a dedicated job cluster.

- Review retry settings — sometimes a simple retry clears transient issues.

Running jobs interactively often reveals exactly where execution stalls.
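
Job tasks have their own retry settings in the Databricks UI. For code inside a task that calls a flaky external system, a retry with exponential backoff is one common pattern. The sketch below is a generic illustration, with `run_with_retries` and its parameters chosen for this example.

```python
import time

def run_with_retries(task, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call task(); on failure wait base_delay * 2**attempt, then try again."""
    for attempt in range(max_attempts):
        try:
            return task()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the original error
            sleep(base_delay * (2 ** attempt))
```

The `sleep` argument is injectable so the backoff can be tested without waiting. Retries only help with transient faults; a task that fails the same way every time needs a fix, not another attempt.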

2. Python, Scala, or SQL Code Errors

Code-level errors are extremely common and can appear as “Task failed” or “Executor lost” messages. They’re usually due to syntax mistakes, missing imports, or bad data paths.

- Make sure your file paths are correct — Databricks often needs full dbfs:/ paths or mounted storage paths.

- Review import statements and package versions.

- For SQL, check table names, schema case sensitivity, and temporary views.

- For Python, look for DataFrame column mismatches or missing variables.

If the job fails instantly, open the driver logs. They usually contain the exact line number and exception type.

3. Dependency and Library Problems

Sometimes the job code is fine, but the environment isn’t. This happens when clusters have different library versions or missing dependencies.

- Code that works in one notebook fails in another.

- ModuleNotFoundError or NoClassDefFoundError messages in logs.

- Version conflicts after upgrading Databricks Runtime.

Fix steps:

- Use init scripts to install libraries consistently.

- Avoid manual %pip install in shared clusters.

- Keep a versioned list of dependencies so future jobs run the same way.

- If needed, create custom cluster policies to lock library versions.

Environment consistency is key to preventing these frustrating failures.

4. Job Scheduling Failures

A job that doesn’t trigger or runs at the wrong time can be the result of scheduling issues. This is especially true if you have multiple jobs chained together.

- Make sure the job is attached to the correct cluster.

- Verify the schedule time zone — Databricks uses UTC by default.

- If using dependent jobs, confirm that the previous job finished successfully.

- Review webhook or API triggers if using external schedulers like Airflow or Azure Data Factory.

Timezone misunderstandings cause many scheduling problems that appear mysterious at first.

5. Data-Related Failures

Even perfectly written code will fail if the data changes unexpectedly. For instance, a missing column, null values in key fields, or schema drift can crash a job.

- Confirm that your source data schema matches what your code expects.

- Use spark.read.option("mergeSchema", "true") cautiously, because it can mask issues.

- Validate data types before processing to catch bad records early.

- Set up schema validation steps at the start of your job.

Proactive schema validation catches data quality issues before they cause failures.
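
A schema validation step can be as small as comparing the columns and types a job expects against what actually arrived. The sketch below works on plain dictionaries; in a notebook the `actual` mapping could be built with `dict(df.dtypes)`. The column names and the `schema_problems` helper are hypothetical.

```python
def schema_problems(expected, actual):
    """Compare {column: type} mappings and describe every difference."""
    problems = []
    for column, expected_type in expected.items():
        if column not in actual:
            problems.append(f"missing column: {column}")
        elif actual[column] != expected_type:
            problems.append(
                f"type mismatch for {column}: expected {expected_type}, got {actual[column]}"
            )
    for column in actual:
        if column not in expected:
            problems.append(f"unexpected column: {column}")
    return problems

# Hypothetical contract for a source table.
EXPECTED = {"order_id": "bigint", "amount": "double", "region": "string"}
```

Failing the job when this list is non-empty surfaces schema drift at the first step rather than deep inside a join or aggregation.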

6. Debugging Tips

When a job or notebook fails, don’t just rerun it blindly. Take a few minutes to gather context.

- Check job run history to see if the issue is recurring.

- Open driver and executor logs from the job detail page.

- Review cluster metrics — CPU, memory, and disk use often reveal hidden causes.

- Test the failing section of code in an interactive notebook with a smaller dataset.

- Add logging inside your code using print statements or structured logs for easier tracing.

These steps help you pinpoint the cause instead of guessing.
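
For the structured logging mentioned above, one option is a JSON formatter on top of Python's standard `logging` module, so each log line carries the stage and row counts alongside the message. The logger name, the `context` key, and the helper names are assumptions made for this sketch.

```python
import json
import logging

class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object per line."""
    def format(self, record):
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Extra fields passed via extra={"context": {...}} are merged in.
        payload.update(getattr(record, "context", {}))
        return json.dumps(payload, sort_keys=True)

def get_job_logger(name):
    """Return a logger that writes JSON lines to stderr, configured only once."""
    logger = logging.getLogger(name)
    if not logger.handlers:
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger

log = get_job_logger("orders_job")
log.info("loaded source table", extra={"context": {"rows": 1200, "stage": "read"}})
```

JSON lines are easier to filter in driver logs than free-form print output, especially when several tasks write to the same log.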

7. Preventing Future Failures

Once you fix a job, lock in the lessons.

- Store version-controlled notebooks and scripts in Git or Repos.

- Use clear naming and folder structures for job files.

- Document cluster configurations used for production jobs.

- Schedule smaller, more focused jobs instead of huge single ones.

Over time, a well-documented environment cuts down failure rates dramatically.

Troubleshooting Data Access and Storage Problems

Databricks depends on external cloud storage rather than storing your data internally. That flexibility is powerful, but it also means small mistakes in credentials, mounts, or schemas can break workflows. Use the sections below to identify, fix, and prevent the most common data access problems.

1. Permission Denied and Access Errors

Permission denied errors frustrate users because the cause isn’t always obvious. These errors occur when clusters, users, or service principals lack necessary access rights. Expired access tokens or keys cause sudden failures in previously working jobs. Missing IAM policies or incorrect service principal setup block data access.

- Verify credentials are current and correctly scoped.

- Check IAM roles include required storage permissions.

- Confirm Unity Catalog grants for affected tables.

- Test paths with correct prefix (s3://, abfss://, gs://, dbfs:/).

- Use workspace-level mounts instead of user-specific ones.

- Store credentials in Databricks Secrets or key vaults.

2. Mount Point Failures

Databricks lets you mount cloud storage as local paths, simplifying file access. However, mounts can silently fail or disconnect after token expiry or cluster restarts. Mounts created under user sessions aren’t visible to other users or automated jobs.

- Rerun dbutils.fs.mount() and check for error messages.

- Use long-lived credentials from key vault or service principal.

- Create mounts at workspace level for all users.

- Test mount access with dbutils.fs.ls() before running jobs.

Workspace-wide mounts prevent many user-specific access problems.

3. File Not Found Errors

FileNotFoundException errors typically mean incorrect paths, deleted data, or case sensitivity issues. Cloud storage paths are case sensitive, which trips up many users.

- Double-check bucket, container, and file names.

- Verify path format matches storage type.

- Use dbutils.fs.ls() to confirm files exist at specified location.

- Check for typos in folder or file names.

A simple typo in a path can waste hours if not caught early.
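
Some path mistakes can be caught before any storage call is made. The following sketch checks a path against the prefixes this guide lists (s3://, abfss://, gs://, dbfs:/). The `check_path` helper and its messages are hypothetical, and it inspects only the shape of the path, not whether the file exists.

```python
from urllib.parse import urlparse

# Cloud schemes need a bucket or container after the "//".
CLOUD_SCHEMES = {"s3", "abfss", "gs"}
KNOWN_SCHEMES = CLOUD_SCHEMES | {"dbfs"}

def check_path(path):
    """Return a list of likely problems with a storage path (empty if none found)."""
    problems = []
    if path != path.strip():
        problems.append("leading or trailing whitespace")
    parsed = urlparse(path.strip())
    if not parsed.scheme:
        problems.append("no scheme; expected one of " + ", ".join(sorted(KNOWN_SCHEMES)))
    elif parsed.scheme not in KNOWN_SCHEMES:
        problems.append(f"unrecognized scheme: {parsed.scheme}")
    elif parsed.scheme in CLOUD_SCHEMES and not parsed.netloc:
        problems.append("missing bucket or container name")
    elif parsed.scheme == "abfss" and "@" not in parsed.netloc:
        problems.append("abfss paths need container@account in the authority")
    return problems
```

A check like this complements dbutils.fs.ls(): it flags malformed paths immediately, while the listing call confirms that a well-formed path points at real data.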

4. Delta Table Issues

Reading Delta tables with incorrect readers or encountering corrupted Delta logs causes confusing errors. Schema drift in source data breaks downstream processing.

- Verify file format before reading data.

- Check _delta_log folder exists for Delta tables.

- Run VACUUM to clean obsolete Delta files.

- Inspect Delta transaction logs for consistency.

Delta Lake provides reliability but requires understanding its transaction log structure.

5. Unity Catalog Access Control

Unity Catalog controls access to all data objects including catalogs, schemas, and tables. Users reporting missing tables or denied access usually hit Unity Catalog restrictions.

- Confirm user has SELECT (read) or MODIFY (write) privileges on tables.

- Verify cluster is attached to Unity Catalog-enabled workspace.

- Check table ownership and permission grants.

- Ensure metastore region matches workspace region.

Understanding Unity Catalog’s permission model is essential for modern Databricks troubleshooting.
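
Reading a table in Unity Catalog generally requires privileges on the parent catalog and schema as well as on the table itself, which is a frequent cause of "table not found" reports. As a sketch, the helper below builds the three GRANT statements for read access. The table and group names are hypothetical, and in a notebook each statement could be passed to `spark.sql`.

```python
def read_grants(table, principal):
    """Build GRANT statements for read access to a catalog.schema.table name."""
    catalog, schema, _ = table.split(".")
    return [
        f"GRANT USE CATALOG ON CATALOG {catalog} TO `{principal}`",
        f"GRANT USE SCHEMA ON SCHEMA {catalog}.{schema} TO `{principal}`",
        f"GRANT SELECT ON TABLE {table} TO `{principal}`",
    ]

# Hypothetical table and group.
statements = read_grants("main.sales.orders", "analysts")
```

Generating the statements from the full table name keeps the catalog and schema grants from being forgotten.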

AI-Powered Databricks Troubleshooting

In 2025, AI isn’t just a helpful assistant anymore. It’s built into Databricks at every level, from predicting failures before they happen to automatically fixing performance issues. Understanding these AI capabilities can cut your troubleshooting time in half.

1. Built-In Databricks AI Features

Databricks Assistant

The most visible AI tool sits right inside your notebooks. Click the assistant icon or type /ask to get help with errors, code optimization, and query explanations.

What makes it powerful: It sees your workspace context, cluster configuration, and data schema. That means more accurate suggestions than generic AI tools.

Use it for:

- Explaining cryptic error messages in plain language

- Converting natural language requests into working code

- Optimizing slow queries automatically

- Suggesting better Spark configurations

Genie Data Room

Genie lets you ask questions about your data in plain English. Instead of writing SQL, you type “show me sales trends by region last quarter” and it generates the query, runs it, and visualizes results.

Best for:

- Quick data exploration without writing code

- Building dashboards faster

- Training non-technical team members

AI/BI Dashboards

These dashboards use AI to automatically detect anomalies, suggest insights, and update visualizations as your data changes. They learn what matters to your team and surface relevant patterns without manual setup.

2. Predictive AI Capabilities

Auto-Optimization

Databricks now includes AI that watches your workloads and automatically adjusts settings. It tweaks shuffle partitions, memory allocation, and caching strategies without you touching config files.

Enable it through cluster policies or workspace settings. Once active, it learns from job patterns and applies fixes proactively.

Predictive Cluster Scaling

Standard auto-scaling reacts to current load. Predictive scaling uses AI to forecast upcoming demand based on historical patterns and scales clusters before jobs start.

This prevents the lag you normally see when clusters scramble to add workers mid-job.

Intelligent Alerting

Set up alerts that learn what’s normal for your workloads. Instead of fixed thresholds that trigger false alarms, AI-based alerts only notify you when patterns genuinely deviate from learned behavior.

3. AI for ML and Model Debugging

Mosaic AI

If you’re training models, Mosaic AI helps troubleshoot common ML problems:

- Model drift detection catches when predictions degrade over time

- Feature importance analysis shows which inputs cause issues

- Automated retraining suggestions when performance drops

MLflow Integration

MLflow tracks experiments, but its AI features go further. It compares runs automatically, flags unusual metric patterns, and suggests hyperparameter changes based on past results.

AutoML Debugging

When AutoML runs fail, built-in diagnostics explain why. It identifies data quality issues, feature engineering problems, or resource constraints and suggests specific fixes.

4. AI Code Generation and Refactoring

Beyond Simple Fixes

Modern AI tools don’t just fix syntax errors. They:

- Rewrite inefficient code from scratch

- Convert between languages (Python to Scala, SQL to DataFrame API)

- Modernize legacy notebooks to use current best practices

- Suggest better algorithm choices for your data size

Continuous Code Review

Enable AI code review in your workspace settings. As you write, it flags anti-patterns, security issues, and performance problems in real time.

5. AI-Powered Log Analysis

Root Cause Detection

Instead of manually reading through driver and executor logs, AI tools can:

- Identify the actual error buried in thousands of log lines

- Correlate errors across multiple failed jobs

- Suggest fixes based on similar past failures

- Highlight which log entries matter and which are noise

Pattern Recognition

AI learns common failure patterns in your workspace. When a new error appears, it matches against known issues and provides solutions that worked before.

Optimizing Databricks Performance

Slow performance drains time and money even when jobs complete successfully. Databricks performance depends on cluster configuration, data layout, and Spark settings. A single misconfiguration can cut throughput in half.

1. Finding Performance Bottlenecks

Identifying where slowness occurs is the first step toward improvement. The Spark UI reveals which stages take the most time. Task distribution shows whether some tasks finish fast while others lag, indicating data skew. Shuffle sizes signal wide transformations like joins or groupBy operations.

- Open Spark UI from job details page.

- Identify stages with longest duration.

- Check task distribution for imbalance.

- Review shuffle read and write sizes.

- Examine executor metrics.

Once you identify the bottleneck location, fixes become much clearer.
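
One way to put a number on task imbalance is to compare the slowest task in a stage with the median task. The sketch below assumes task durations have been copied from the Spark UI into a list of seconds. The threshold of 5 is an arbitrary starting point, not a Databricks recommendation.

```python
from statistics import median

def skew_ratio(task_durations_s):
    """Ratio of the slowest task to the median task in one stage."""
    if not task_durations_s:
        raise ValueError("need at least one task duration")
    mid = median(task_durations_s)
    return max(task_durations_s) / mid if mid > 0 else float("inf")

def looks_skewed(task_durations_s, threshold=5.0):
    """Flag a stage whose slowest task is far above the typical task."""
    return skew_ratio(task_durations_s) >= threshold
```

A ratio close to 1 suggests evenly sized tasks. A large ratio points to one or a few oversized partitions.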

2. Cluster Sizing Best Practices

Choosing the right cluster size dramatically impacts performance.

- Start with smaller clusters and scale based on metrics.

- Enable autoscaling for unpredictable workloads.

- Choose memory-optimized nodes for shuffle-heavy jobs.

- Keep driver and workers in same region as data.

Right sizing balances performance needs with cost efficiency.

3. Spark Configuration Tuning

Databricks automatically tunes many Spark settings, but key parameters benefit from manual adjustment.

- Lower spark.sql.shuffle.partitions for smaller datasets.

- Raise shuffle partitions for datasets over 1TB.

- Adjust spark.executor.memory for memory-intensive operations.

- Enable Adaptive Query Execution (AQE) for dynamic optimization.

Small parameter changes can produce noticeable performance improvements.
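
As a worked example of sizing shuffle partitions, the sketch below derives a partition count from the shuffle size seen in the Spark UI. The target of about 128 MiB per partition is a common rule of thumb rather than a value from this guide, and the helper names are hypothetical. The two configuration keys are standard Spark settings.

```python
import math

def suggest_shuffle_partitions(shuffle_bytes, target_partition_bytes=128 * 1024**2,
                               min_partitions=8):
    """Aim for roughly target_partition_bytes of shuffle data per partition."""
    return max(min_partitions, math.ceil(shuffle_bytes / target_partition_bytes))

def spark_conf_for(shuffle_bytes):
    """Settings that could be applied with spark.conf.set(key, value) in a notebook."""
    return {
        "spark.sql.adaptive.enabled": "true",
        "spark.sql.shuffle.partitions": str(suggest_shuffle_partitions(shuffle_bytes)),
    }
```

For 10 GiB of shuffle data this suggests 80 partitions, well below Spark's default of 200, while 1 TiB works out to 8192. With AQE enabled, Spark can still coalesce small partitions at runtime, so the computed value is a starting point.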

4. Handling Data Skew

Data skew occurs when one partition contains far more data than others. That overloaded node slows the entire job while other nodes sit idle.

- Repartition data on columns with better distribution.

- Sample data to check key distribution before joins.

- Use the salting technique (append a random suffix to skewed keys so their rows spread across several partitions).

- Switch to broadcast joins for smaller dimension tables.

Proper partitioning keeps workload balanced across the cluster.
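
The effect of salting can be shown without a cluster. The sketch below splits one hot key into several salted keys and compares the largest key group before and after. It uses a round-robin suffix instead of a random one so the result is reproducible, and the keys and helper names are made up for illustration.

```python
from collections import Counter
from itertools import count

def salt_keys(keys, hot_keys, buckets):
    """Append a rotating suffix to hot keys so their rows split into `buckets` groups."""
    counter = count()
    salted = []
    for key in keys:
        if key in hot_keys:
            salted.append(f"{key}#{next(counter) % buckets}")
        else:
            salted.append(key)
    return salted

def largest_group(keys):
    """Size of the biggest key group, which bounds the biggest task after grouping."""
    return max(Counter(keys).values())

# Hypothetical skewed data: one key holds 90% of the rows.
keys = ["US"] * 9000 + ["DE"] * 500 + ["JP"] * 500
salted = salt_keys(keys, hot_keys={"US"}, buckets=10)
```

Here the largest group shrinks from 9000 rows to 900. In a real join, the other table must be expanded with every salt value for the hot keys so that the salted keys still match.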

5. Delta Lake Optimization

Delta Lake provides reliability and performance benefits, but tables need maintenance.

- Run OPTIMIZE to compact small files into larger ones.

- Use ZORDER BY on columns used in filters and joins.

- Schedule VACUUM commands to remove old file versions.

- Monitor table file counts and sizes.

Delta table maintenance should be part of regular operations.
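
The maintenance commands above can be generated per table and run on a schedule. In this sketch the table and column names are hypothetical, and the default of 168 hours matches Delta Lake's default seven-day retention. In a notebook each statement could be executed with `spark.sql`.

```python
def maintenance_statements(table, zorder_columns=(), retain_hours=168):
    """Build OPTIMIZE and VACUUM statements for one Delta table."""
    optimize = f"OPTIMIZE {table}"
    if zorder_columns:
        optimize += " ZORDER BY (" + ", ".join(zorder_columns) + ")"
    vacuum = f"VACUUM {table} RETAIN {retain_hours} HOURS"
    return [optimize, vacuum]

# Hypothetical table and filter columns.
statements = maintenance_statements("sales.orders", ["order_date", "region"])
```

Running OPTIMIZE before VACUUM means the small files replaced by compaction become eligible for cleanup once the retention window passes.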

6. Query Optimization Techniques

SQL query structure directly impacts performance. Small changes in how you write queries can produce massive speedups at scale.

- Apply WHERE filters before JOIN operations.

- Select only columns you actually need.

- Use temporary views instead of repeatedly reading files.

- Avoid UDFs for simple expressions; use built-in functions instead.

- Cache intermediate results only when reused multiple times.

Query optimization often provides the biggest performance wins with minimal effort.


Common Databricks Error Codes Reference

| Error | Cause | Quick Fix |
| --- | --- | --- |
| OutOfMemoryError | Driver or executor nodes ran out of memory. | Increase cluster size or node memory. Repartition data and avoid collect() on large datasets. |
| FileNotFoundException | Wrong path, deleted data, or inactive mount points. | Verify path prefix matches storage type. Use dbutils.fs.ls() to confirm files. |
| PermissionDenied | Missing IAM policies, Unity Catalog restrictions, or expired credentials. | Verify IAM or service principal permissions. Check Unity Catalog grants and renew tokens. |
| AnalysisException | Missing columns or schema mismatch. | Verify exact column and table names. Use printSchema() before joins. |
| Task not serializable (NotSerializableException) | Non-serializable object in a distributed operation. | Move the object outside the transformation or broadcast it. Keep functions simple. |
| SparkException | Broad runtime issue (memory, shuffle, or execution failures). | Open Spark UI for failed stage details and read driver logs for the root cause. |
| SocketTimeoutException | Network timeouts due to slow connections or blocked traffic. | Verify VPC and firewall rules. Keep data and compute in the same region. Increase timeouts if needed. |
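
A reference table like this can also drive a first-pass log scan. The sketch below copies a few of the rows into a dictionary and reports which known errors appear in a block of log text. The `triage` helper is hypothetical and matches on the error name only, so it is a starting point rather than a root-cause analysis.

```python
import re

# A few rows from the reference table, keyed by the name that appears in logs.
QUICK_FIXES = {
    "OutOfMemoryError": "Increase cluster size or node memory; repartition; avoid collect() on large datasets.",
    "FileNotFoundException": "Verify the path prefix and confirm files with dbutils.fs.ls().",
    "AnalysisException": "Verify column and table names; call printSchema() before joins.",
    "SocketTimeoutException": "Verify VPC and firewall rules; keep data and compute in the same region.",
    "SparkException": "Open the Spark UI for the failed stage and read the driver logs.",
}

_PATTERN = re.compile("|".join(re.escape(name) for name in QUICK_FIXES))

def triage(log_text):
    """Return (error name, quick fix) pairs in order of first appearance, without repeats."""
    seen = []
    for match in _PATTERN.finditer(log_text):
        name = match.group(0)
        if name not in seen:
            seen.append(name)
    return [(name, QUICK_FIXES[name]) for name in seen]
```

Because results keep their order of first appearance, the earliest error in the log comes first, and that is often closer to the root cause than the final exception.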

Best Practices to Prevent Databricks Issues

Prevention beats troubleshooting. These practices reduce failures and make your Databricks environment more stable and predictable.

1. Cluster Planning and Management

- Use job clusters for scheduled work.

- Enable auto-termination for cost control.

- Match cluster sizes to workload requirements.

- Keep runtime versions updated.

- Document cluster configurations for production jobs.

2. Library and Dependency Management

- Use init scripts for automatic library installation.

- Maintain versioned dependency lists.

- Avoid manual package installs in production.

- Test new libraries in non-production clusters first.

3. Access Control and Security

- Use service principals instead of user tokens.

- Store credentials in Databricks Secrets or key vaults.

- Schedule monthly access audits.

- Apply least privilege principles to user roles.

4. Monitoring and Alerting

- Review cluster metrics weekly.

- Set alerts for long-running jobs.

- Monitor cloud costs for unexpected spikes.

- Track job success rates and durations.

5. Version Control Integration

- Link notebooks to Git repositories.

- Commit code before major changes.

- Keep cluster configurations in version control.

- Use clear commit messages describing changes.

When to Contact Databricks Support

| Step | What to Do | Details / Why It Matters |
| --- | --- | --- |
| 1. When to Escalate | – Clusters fail repeatedly even after fixes. – Jobs crash with no clear error. – Workspace behaves inconsistently or won’t load. | These indicate deeper platform or infrastructure issues beyond user control. |
| 2. What to Include | – Cluster ID and Job Run ID. – Workspace URL and timestamps. – Exact error text or screenshot. – Steps to reproduce and recent configuration changes. | Complete and clear details help Databricks Support replicate and resolve the issue faster. |
| 3. Where to Reach Out | – Databricks Support Portal: For technical or platform issues. – Cloud Provider Support (Azure/AWS): For billing or resource-level problems. – Community Forums: For smaller code-related or usage questions. | Using the correct channel avoids delays and ensures your issue reaches the right team. |
| 4. How to Share Logs | – Export logs to secure cloud storage. – Share read-only links only. – Mask credentials and sensitive data before sharing. | Keeps confidential information safe while providing engineers the context they need. |
| 5. After Resolution | – Note the root cause and fix. – Update internal documentation. – Add new checks or alerts to prevent recurrence. | Turns one-time incidents into permanent improvements. |
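
Masking credentials before sharing logs can be partly automated. The sketch below redacts three shapes of secret: strings that look like Databricks personal access tokens (assumed here to be `dapi` followed by 32 hex characters), strings that look like AWS access key IDs (assumed to be `AKIA` followed by 16 characters), and generic `password=...` style pairs. These patterns are assumptions and will not catch every secret, so a manual review is still needed.

```python
import re

# (pattern, replacement) pairs applied in order; all patterns are assumptions.
SECRET_PATTERNS = [
    (re.compile(r"dapi[0-9a-f]{32}"), "dapi****"),
    (re.compile(r"AKIA[0-9A-Z]{16}"), "AKIA****"),
    (re.compile(r"(?i)\b(password|secret|token|key)\s*[=:]\s*\S+"), r"\1=****"),
]

def mask_secrets(text):
    """Replace anything matching a known secret pattern with a masked placeholder."""
    for pattern, replacement in SECRET_PATTERNS:
        text = pattern.sub(replacement, text)
    return text
```

Applying the function to each exported log line before upload keeps the surrounding context intact while hiding the secret values.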

Kanerika’s Partnership with Databricks: Enabling Smarter Data Solutions

We at Kanerika are proud to partner with Databricks, combining our expertise in AI, analytics, data engineering, and cloud setup with the Databricks Data Intelligence Platform. Together, we design custom solutions that reduce complexity, improve data quality, and deliver faster insights. From real-time ETL pipelines using Delta Lake to secure multi-cloud deployments, we make sure every part of the data and AI stack is optimized for performance and governance.

Our implementation services cover the full lifecycle, from strategy and setup to deployment and monitoring. We build custom Lakehouse blueprints aligned with business goals, develop trusted data pipelines, and manage machine learning operations using MLflow and Mosaic AI. We also implement Unity Catalog for enterprise-grade governance, ensuring role-based access, lineage tracking, and compliance. Our goal is to help clients move from experimentation to production quickly, with reliable and secure AI systems.

We solve real business challenges, such as breaking down data silos, enhancing data security, and scaling AI with confidence. Whether it’s simplifying large-scale data management or speeding up time-to-insight, our partnership with Databricks delivers measurable outcomes. We’ve helped clients across industries, from retail and healthcare to manufacturing and logistics, build smarter applications, automate workflows, and improve decision-making using AI-powered analytics.


FAQs

Why is my Databricks cluster not starting?

Clusters fail to start when there’s a configuration or permission issue.

Fix:

- Check instance types, IAM roles, and VPC subnet access.

- Make sure your cloud account has enough instance quota.

- If the cluster still fails, rebuild it from a working template.

How can I find out why a Databricks job failed?

Open the Job Run Details in Databricks and check the driver and executor logs.

Common causes include syntax errors, missing imports, or path mistakes.

If the logs aren’t clear, type /ask in a notebook to use the Databricks Assistant for log explanation.

What causes Databricks “Permission Denied” errors?

These usually come from missing IAM roles, expired access tokens, or Unity Catalog restrictions.

Fix:

- Verify storage permissions and token validity.

- Check Unity Catalog privileges for affected tables.

- Use Databricks Secrets or a key vault instead of hard-coded credentials.

What should I do if I get “File Not Found” errors?

That usually means the path is wrong, the file was deleted, or the letter case in the path doesn’t match.

Fix:

- Use dbutils.fs.ls() to check the file path.

- Verify prefix (s3://, abfss://, dbfs:/, etc.).

- Recreate mounts if they’ve expired.

How does AI help in Databricks troubleshooting?

AI features like Databricks Assistant and Predictive Scaling save time by automatically diagnosing and fixing common issues.

They analyze logs, detect bottlenecks, and adjust Spark settings on their own.