AI prompt engineering is no longer just a technical trick; it’s the skill that defines how effectively we harness models like ChatGPT, Claude, and Gemini. By one market estimate, the global prompt engineering market is expected to hit $505 billion in 2025, growing at a 33% CAGR through 2034. Yet 78% of AI project failures reportedly stem from poor prompt design, not from model limitations: clear evidence that prompt quality matters.

At its core, prompt engineering means crafting instructions—using natural language—that guide AI models to produce useful, accurate responses. A well-designed prompt can mean the difference between a vague, generic output and one tailored to your needs. This matters across industries: developers rely on prompts to generate or debug code; writers use them to draft SEO content; and customer support teams use them to automate answers or summarize tickets.

This blog covers essential best practices—from setting context and role personas, to including examples, constraints, and iterative tweaking—so you can get reliable, on‑target AI outputs.

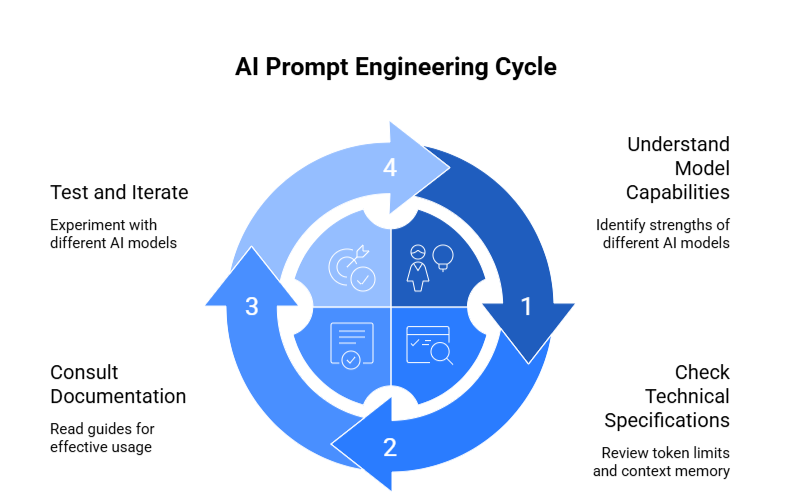

Why Knowing Your Model Matters in Prompt Engineering

1. Know Your Model’s Capabilities

Each AI assistant has different strengths. For example, GPT-4 is often favored for creative writing, Claude for analysis, and Gemini for images and other multimodal content. Knowing these differences helps you pick the right tool and avoid frustration.

2. Technical Specifications Matter

Check your model’s token limits, which determine how much text it can process in a single request or conversation. The context window also affects how well the model remembers earlier parts of a long discussion. Some models handle specific formats like JSON, XML, or code better than others. These constraints directly impact your workflow and output quality.
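As a rough illustration of budgeting against a token limit, here is a minimal Python sketch. The four-characters-per-token ratio is only a common rule of thumb for English text, and the function names, the 8,000-token limit, and the 500-token output reserve are assumptions made for this example; a provider's own tokenizer gives the real count.

```python
# Rough token budgeting. The ratio below is a heuristic for English text,
# not an exact count; real tokenizers differ by model.
CHARS_PER_TOKEN = 4  # assumption: rule of thumb only


def estimate_tokens(text: str) -> int:
    """Return an approximate token count for `text`."""
    if not text:
        return 0
    return max(1, len(text) // CHARS_PER_TOKEN)


def fits_in_context(prompt: str, context_limit: int, reserved_for_output: int = 500) -> bool:
    """Check whether the prompt still leaves room for the reply.

    `context_limit` and `reserved_for_output` are hypothetical values;
    look up the actual limit in your model's documentation.
    """
    return estimate_tokens(prompt) + reserved_for_output <= context_limit


prompt = "Summarize the following support ticket in three bullet points."
print(estimate_tokens(prompt))
print(fits_in_context(prompt, context_limit=8000))
```

A check like this is most useful before sending long documents, where a prompt can quietly exceed the limit.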

3. Documentation Can Be Helpful

Companies publish guides about what their AI can do and how to use it effectively. These get updated regularly with new features and fixes. Reading these guides saves you from guessing.

4. Test and Iterate

What works with one AI might fail with another. Test your requests with different assistants to see which gives the best results. This helps you find the most effective approach for your needs.

10 AI Prompt Engineering Best Practices

1. Be As Specific As Possible

Specificity is the cornerstone of effective AI prompt engineering. A well-crafted, specific prompt eliminates ambiguity and provides clear direction, enabling AI to deliver precisely what you need rather than generic or tangential responses. The key is striking the right balance—providing sufficient detail to guide the AI without overwhelming it with unnecessary information.

Essential Elements for Specific Prompts

• Detailed Context: Establish comprehensive background information including the subject matter, scope, constraints, and situational factors. This contextual foundation helps the AI understand not just what you’re asking, but why you’re asking it and what parameters should guide the response.

• Desired Format: Explicitly define how you want information structured—whether as numbered lists, bullet points, tables, executive summaries, step-by-step guides, or narrative reports. Include preferences for headings, subheadings, visual elements, and organizational hierarchy.

• Output Length: Specify exact word counts, paragraph numbers, or page limits rather than vague terms like “brief” or “detailed.” For example, request “exactly 300 words” or “5 bullet points with 2-3 sentences each” to ensure appropriate scope.

• Level of Detail: Clearly indicate whether you need high-level overviews, technical deep-dives, beginner-friendly explanations, or expert-level analysis. This prevents the AI from misjudging your expertise level or informational requirements.

• Tone and Style: Define the voice, formality level, and stylistic approach—whether academic, conversational, persuasive, technical, or creative. Consider your target audience and specify accordingly to ensure appropriate communication style.

• Examples and Comparisons: Request specific types of illustrations, analogies, case studies, or comparative analyses to enhance understanding and provide concrete reference points for abstract concepts.

Enhanced Prompt Example:

Create a detailed 500-word executive summary analyzing emerging social media marketing trends for B2B technology companies from 2022-2024. Structure the response with: (1) an opening paragraph identifying the three most significant trends, (2) three sections of 100-125 words each examining LinkedIn video content strategy, AI-powered personalization, and thought leadership positioning, and (3) a concluding paragraph with actionable recommendations. Use a professional, data-driven tone suitable for C-suite executives. Include specific metrics where possible and suggest two chart types that would best visualize engagement data and ROI comparisons across platforms.
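If you write prompts like this often, the elements listed above can be kept as separate fields and assembled on demand. The following Python sketch shows one possible way to do that; the `PromptSpec` class and its field names are hypothetical and simply mirror the elements described in this section.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the elements of a specific prompt."""
    task: str
    context: str = ""
    output_format: str = ""
    length: str = ""
    detail_level: str = ""
    tone: str = ""
    extras: list = field(default_factory=list)

    def render(self) -> str:
        """Join the task and every non-empty element into one prompt."""
        lines = [self.task]
        labeled = [
            ("Context", self.context),
            ("Format", self.output_format),
            ("Length", self.length),
            ("Level of detail", self.detail_level),
            ("Tone", self.tone),
        ]
        for label, value in labeled:
            if value:  # skip elements that were left blank
                lines.append(f"{label}: {value}")
        for extra in self.extras:
            lines.append(f"Also: {extra}")
        return "\n".join(lines)


spec = PromptSpec(
    task="Write an executive summary of social media marketing trends for B2B technology companies.",
    length="500 words",
    tone="Professional and data-driven, suitable for C-suite executives",
    extras=["Suggest two chart types for visualizing engagement data."],
)
print(spec.render())
```

Keeping the elements separate makes it easy to see which ones a prompt is missing before you send it.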

2. Use Step-by-Step Instructions

- Break Big Requests Into Smaller Pieces – Instead of asking for everything at once, guide the AI through what you want one step at a time

- Why This Helps – Complex tasks can confuse AI, leading to messy or incomplete answers. Clear steps create a logical flow that keeps everything organized

- Use Numbered Lists or Bullet Points – Structure your request with clear sequences like “First do this, then do that, finally wrap up with this”

- Perfect for Complex Tasks – This works great for tutorials, data reviews, research projects, and any multi-part content creation

Real Examples That Work:

- Coding help: “First explain what this does, then show me the code, finally give me an example”

- Data analysis: “Start with what the data shows, then find the main patterns, end with what we should do about it”

- Research: “Begin with background info, present key findings, finish with what it all means”

Sample Request – “Help me understand smartphones: First, explain what makes them ‘smart’ in simple terms. Second, give three ways people use them daily. Third, name one major benefit and one problem. Finally, sum it all up in two sentences.”

This approach turns overwhelming requests into clear, well-organized responses.
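The same idea can be expressed in a few lines of Python. This is only a sketch: `build_step_prompt` is a hypothetical helper that numbers the steps and places them under the goal.

```python
def build_step_prompt(goal: str, steps: list) -> str:
    """Turn a goal and an ordered list of steps into a numbered prompt."""
    numbered = [f"{i}. {step}" for i, step in enumerate(steps, start=1)]
    return "\n".join([goal, "", "Work through these steps in order:", *numbered])


prompt = build_step_prompt(
    "Help me understand smartphones.",
    [
        "Explain what makes them 'smart' in simple terms.",
        "Give three ways people use them daily.",
        "Name one major benefit and one problem.",
        "Sum it all up in two sentences.",
    ],
)
print(prompt)
```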

3. Give Role or Persona to the AI

Tell the AI Who to Be – Instead of generic responses, ask the AI to take on a specific role like teacher, marketer, doctor, or friend

Why Roles Matter – Different roles use different language, focus on different details, and approach problems uniquely. A teacher explains step-by-step, while a consultant gives strategic advice

Shapes the Entire Response – The role affects:

- How complex the language is

- What details get emphasized

- The overall tone and approach

- What examples or comparisons are used

Easy Role Examples:

- “Act as a math tutor helping a 10-year-old with fractions”

- “You’re a senior designer reviewing a junior’s work”

- “Respond as a fitness coach creating a beginner workout plan”

- “Be a friendly neighbor explaining how to fix a leaky faucet”

Works For Any Expertise Level – You can specify experience levels too: “Act as a beginner chef” gives different advice than “You’re a professional chef”

Mix Roles When Needed – “Be an encouraging teacher who also happens to be a coding expert” combines supportive tone with technical knowledge

This simple trick makes AI responses feel more natural and targeted to your specific needs.

Achieve Optimal Efficiency and Resource Use with Agentic AI!

Partner with Kanerika for Expert AI implementation Services

4. Use Context and Constraints

AI works best when it has context. Without it, you’ll often get generic, surface-level responses. Adding details like brand voice, target audience, location, tone, and output format helps the model generate results that are relevant and on-brand.

For example, instead of asking:

“Write a product description for a smartwatch.”

You could say:

“Write a 100-word product description for a smartwatch aimed at tech-savvy Gen Z users. Use a casual, witty tone and keep the language at a high school reading level.”

This small change gives the AI a clear framework. It avoids bland marketing copy and produces something tailored to your needs.

Useful constraints to include in your prompts:

- Word Count: “Keep it under 150 words.”

- Language Level: “Write for a 5th-grade reading level.”

- Tone/style: “Use a professional but friendly tone.”

- Format: “Respond in bullet points with bold headings.”

The more relevant info you give, the less guessing the AI has to do—and the better the results.
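Constraints are also easy to verify after the fact. The Python sketch below checks a response against a word limit and a bullet-point format; the `check_constraints` function and its parameters are assumptions made for illustration, not part of any AI tool.

```python
from typing import Optional


def check_constraints(text: str, max_words: Optional[int] = None,
                      require_bullets: bool = False) -> list:
    """Return a list of constraint violations (empty if the text passes)."""
    problems = []
    word_count = len(text.split())
    if max_words is not None and word_count > max_words:
        problems.append(f"too long: {word_count} words (limit {max_words})")
    if require_bullets:
        # Ignore blank lines, then require a bullet marker on every line.
        lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
        if not lines or not all(ln.startswith(("-", "*", "•")) for ln in lines):
            problems.append("expected every non-empty line to be a bullet point")
    return problems


reply = "- Tracks your sleep\n- Lasts two days on a charge"
print(check_constraints(reply, max_words=150, require_bullets=True))  # []
```

If the list comes back non-empty, the violations can be fed straight into a follow-up prompt.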

5. Iterate and Refine Prompts

a. First Attempts Rarely Work Perfectly – Don’t expect to nail the perfect prompt on your first try. AI responses improve when you fine-tune your requests

b. Change One Thing at a Time – If the response isn’t quite right, adjust just one element:

- Make the tone more casual or formal

- Add more specific details

- Change the structure or format

- Adjust the length or complexity

c. Use Follow-up Prompts – Instead of starting over, build on what you got:

- “Make this more conversational”

- “Add three specific examples”

- “Shorten this to two paragraphs”

- “Explain this part more clearly”

d. Keep What Works – When you find a prompt structure that gives great results, save it as a template for similar future requests

e. Track Your Improvements – Notice which changes made the biggest difference so you can apply those lessons to new prompts

f. Example Progression:

- “Write about coffee” (too vague)

- “Write about coffee benefits” (better but still broad)

- “Write 3 health benefits of coffee for busy professionals in simple language” (much better!)

Think of prompting as a conversation, not a single command.
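To keep yourself honest about changing one thing at a time, it can help to store each prompt variant as a set of named settings and compare them. The Python sketch below assumes a hypothetical dictionary layout and a hypothetical `changed_fields` helper.

```python
def changed_fields(old: dict, new: dict) -> list:
    """List the settings that differ between two prompt variants."""
    keys = sorted(set(old) | set(new))
    return [key for key in keys if old.get(key) != new.get(key)]


v1 = {
    "topic": "health benefits of coffee",
    "audience": "busy professionals",
    "tone": "formal",
    "length": "3 bullet points",
}
v2 = {**v1, "tone": "casual"}  # adjust only the tone

print(changed_fields(v1, v2))  # ['tone']
```

If the comparison shows more than one changed field, you will not know which change caused the difference in the response.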

6. System Prompts vs. User Prompts

a. Two Types of Instructions – When you call a model through an API, you can send two kinds of messages that work together

b. System Prompts Set the Rules – These tell the AI how to behave overall:

- What personality to have

- How to format responses

- What rules to always follow

- What never to do

c. User Prompts Ask for Specific Things – These are your actual requests or questions that trigger the AI to take action

d. Why Separate Them – System prompts stay consistent across conversations, while user prompts change based on what you need each time

e. System Prompt Examples:

- “Always respond in bullet points”

- “Be helpful but never explain your reasoning”

- “Write like a friendly teacher”

- “Format all code examples with proper syntax highlighting”

f. How They Work Together:

- System: “You’re a professional email writer who keeps things brief”

- User: “Write a follow-up email to a client about our meeting delay”

g. Best for Developers – This approach works best when building apps or chatbots where you want consistent behavior across all interactions

Think of system prompts as permanent personality settings and user prompts as specific requests.
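In code, this split often shows up as a list of role-tagged messages. The Python sketch below builds such a list without calling any service. The exact request shape varies by provider (some take the system prompt as a separate parameter rather than a message), so treat the `build_messages` helper and the dictionary layout as assumptions and check your provider's documentation.

```python
# The system prompt stays fixed; only the user request changes per call.
SYSTEM_PROMPT = "You're a professional email writer who keeps things brief"


def build_messages(user_request: str, system_prompt: str = SYSTEM_PROMPT) -> list:
    """Pair a fixed system prompt with one specific user request."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_request},
    ]


messages = build_messages("Write a follow-up email to a client about our meeting delay")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```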

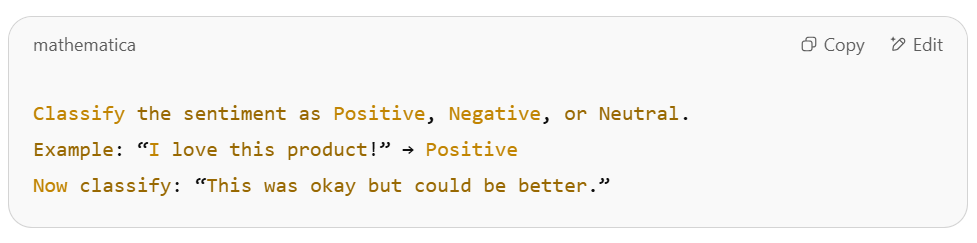

7. Use Examples in the Prompt

One of the best ways to guide AI is by showing it exactly what you expect. This is known as one-shot or few-shot prompting—where you include one or more examples in your prompt. It helps the model learn the pattern, reducing random or inconsistent replies.

This method is especially useful for structured tasks like classification, tone matching, translation, or formatting. By showing the AI what the input looks like and what the expected output should be, you reduce confusion and improve accuracy.

Here’s a simple example:
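A minimal sketch of few-shot prompting for sentiment classification, written in Python. The sample reviews, the labels, and the `build_few_shot_prompt` helper are illustrative assumptions rather than part of any specific tool:

```python
# Two labeled examples show the model the input/output pattern to follow.
EXAMPLES = [
    ("The delivery was fast and the packaging was great.", "Positive"),
    ("The app crashes every time I open it.", "Negative"),
]


def build_few_shot_prompt(examples, new_input: str) -> str:
    """Place labeled examples before the new input, leaving its label blank."""
    parts = ["Classify the sentiment of each review as Positive or Negative.", ""]
    for text, label in examples:
        parts += [f"Review: {text}", f"Sentiment: {label}", ""]
    # The final entry ends at "Sentiment:" so the model fills in the label.
    parts += [f"Review: {new_input}", "Sentiment:"]
    return "\n".join(parts)


print(build_few_shot_prompt(EXAMPLES, "Support answered my question in minutes."))
```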

This gives the model a clear template to follow. The more relevant and accurate your example is, the more likely you’ll get results that match your expectations. Use this method when you want consistency across multiple responses.

8. Avoid Overcomplicated Language

a. Simple Works Better – Clear, straightforward prompts usually get better results than fancy, complex ones

b. Don’t Cram Everything into One Sentence – Long, confusing sentences with multiple conditions make it hard for AI to understand what you actually want

c. Use Everyday Language – Write like you’re talking to a friend, not writing a research paper. Skip the big words and complicated phrases

d. Break Things up Visually:

- Use line breaks between different requests

- Put conditions in bullet points

- Separate main task from formatting instructions

- Make important details stand out

e. Bad Example: “Please create a comprehensive analysis of social media trends while ensuring the tone remains professional yet engaging and the format includes bullet points with no more than 200 words total and examples from three different platforms.”

f. Good Example: “Analyze current social media trends.

Requirements:

- Professional but engaging tone

- 200 words maximum

- Use bullet points

- Include examples from Facebook, Instagram, and Twitter”

g. Why This Matters – AI processes clear instructions better than complex ones. Simple prompts reduce confusion and get you closer to what you actually need.

Think of prompts like giving directions – shorter and clearer beats long and confusing.

9. Test Across Use Cases and Models

a. One Size Doesn’t Fit All – A prompt that works perfectly for writing might fail completely for data analysis or summarizing. Each task type needs different approaches

b. Different AI Assistants Behave Differently – What works great with ChatGPT might not work as well with Claude or other AI tools. Each has its own strengths and quirks

c. Test the Same Prompt for Different Jobs:

- Writing creative content

- Analyzing data or information

- Summarizing long texts

- Answering questions

- Solving problems step-by-step

d. Compare Across AI Assistants – Try your best prompts with different AI tools to see which gives better results for your specific needs

e. Use Testing Tools – Many websites offer prompt playgrounds where you can:

- Test the same prompt across multiple AI models

- Compare responses side by side

- Save successful prompts for future use

- Track which approaches work best

f. Keep Notes on What Works – Document which prompts work best for which tasks and which AI assistants. This saves time and improves your results over time

g. Start Small, then Expand – Test with simple examples first, then try more complex requests once you know what works

Think of this like recipe testing – what works for cookies might not work for bread.
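A side-by-side comparison can be sketched in Python as follows. The two "models" here are stand-in functions invented for the example; in practice each would wrap a call to a real assistant, and the `compare_models` helper itself is hypothetical.

```python
from typing import Callable, Dict


def compare_models(prompt: str, models: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Send the same prompt to every model and collect the replies by name."""
    return {name: ask(prompt) for name, ask in models.items()}


# Stand-ins for real assistants (assumption: replace with actual API calls).
def fake_model_a(prompt: str) -> str:
    return prompt.upper()


def fake_model_b(prompt: str) -> str:
    return f"{len(prompt.split())} words received"


results = compare_models(
    "Summarize this ticket",
    {"model_a": fake_model_a, "model_b": fake_model_b},
)
for name, reply in results.items():
    print(f"{name}: {reply}")
```

Because every model receives the identical prompt, any difference in the replies comes from the model rather than the wording.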

10. Log and Version Your Prompts

a. Keep a Prompt Collection – Save your best-working prompts in a document or app so you can reuse them instead of starting from scratch every time

b. Track What Changes You Made – When you improve a prompt, write down:

- What you changed

- Why you changed it

- Whether it worked better or worse

- What specific results improved

c. Organize by Purpose – Group your saved prompts by what they do:

- Writing tasks (emails, blogs, social media)

- Analysis work (data review, research)

- Creative projects (brainstorming, storytelling)

- Problem-solving (troubleshooting, planning)

d. Version Your Improvements – Like software updates, give your prompts version numbers:

- “Email writer v1” → “Email writer v2 (added tone specification)”

- Note which version works best for different situations

e. Essential for Bigger Projects – If you’re building chatbots, automated content tools, or regular AI workflows, good prompt records save hours of repeated work

f. Simple Tracking Method – Use a basic spreadsheet or note-taking app with columns for: Prompt text, Purpose, Version, Results, Notes

Think of it like keeping a recipe book – the good ones are worth saving and improving over time.
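The spreadsheet approach can also be kept in code. The Python sketch below uses the column names suggested above and the standard `csv` module; the sample entries and the `log_to_csv` and `latest_version` helpers are hypothetical.

```python
import csv
import io

COLUMNS = ["Prompt text", "Purpose", "Version", "Results", "Notes"]


def log_to_csv(entries: list) -> str:
    """Serialize prompt records as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(entries)
    return buffer.getvalue()


def latest_version(entries: list, purpose: str):
    """Return the highest-versioned record for a purpose, or None."""
    matching = [entry for entry in entries if entry["Purpose"] == purpose]
    return max(matching, key=lambda entry: entry["Version"]) if matching else None


entries = [
    {"Prompt text": "Write a follow-up email to a client.", "Purpose": "Email writer",
     "Version": 1, "Results": "Too formal", "Notes": "Baseline"},
    {"Prompt text": "Write a friendly follow-up email to a client.", "Purpose": "Email writer",
     "Version": 2, "Results": "Better tone", "Notes": "Added tone specification"},
]

print(log_to_csv(entries))
print(latest_version(entries, "Email writer")["Notes"])  # Added tone specification
```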

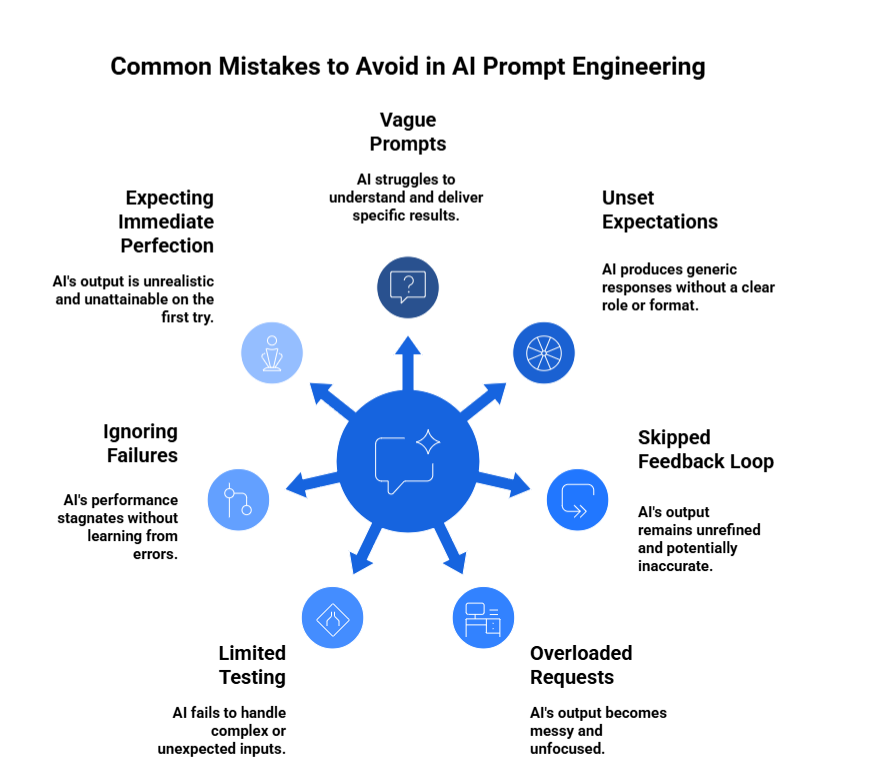

Common Mistakes to Avoid in AI Prompt Engineering

1. Being Too Vague

Asking “help me write something” instead of “write a 200-word email to customers about a shipping delay” leaves the AI guessing what you need

2. Forgetting to Set Expectations

Not telling the AI what role to play (teacher, expert, friend) or what format you want (bullets, paragraphs, steps) leads to generic responses

3. Skipping the Feedback Loop

Never asking the AI to double-check its work or revise based on your feedback means missing easy improvements. Simple additions like “review this for accuracy” or “make this more conversational” can dramatically improve results

4. Cramming Too Much Into One Request

Trying to get the AI to write content, format it perfectly, fact-check it, and optimize it for different audiences all in one prompt usually creates messy results

5. Only Testing the Happy Path

Not trying your prompts with unusual inputs, edge cases, or different scenarios. What works for simple requests might break with complex or unexpected inputs

6. Not Learning From Failures

Treating bad responses as dead ends instead of opportunities to refine your approach

7. Expecting Perfection Immediately

Good prompts develop over time through testing and adjustment, not on the first try

Choose Kanerika to Redefine Your Business with High-Impact Agentic AI Solutions

Kanerika brings deep expertise in Agentic AI and AI/ML to help businesses move faster, work smarter, and stay ahead. We support clients across industries like manufacturing, retail, finance, and healthcare by building tailored AI solutions that improve productivity, reduce costs, and unlock real value.

Our purpose-built AI agents and custom generative AI models are already helping businesses solve bottlenecks and run smoother operations. From faster information retrieval and real-time data analysis to video intelligence and smart surveillance, our tools are built to perform.

Whether it’s inventory optimization, financial forecasting, vendor evaluation, or intelligent product pricing, our solutions are designed to drive results. Our AI can also handle arithmetic data validation, generate sales insights, and much more, all grounded in real business needs.

Kanerika’s approach is simple: build what works, scale what matters.

Partner with us to turn your enterprise data and workflows into powerful, AI-driven systems that deliver.

FAQs

1. What is prompt engineering?

Prompt engineering is the practice of writing clear, structured instructions for AI models (like ChatGPT, Claude, or Gemini) to produce useful responses. It involves choosing the right wording, format, and context to get consistent and accurate results.

2. Why does prompt quality matter so much?

Even the most advanced AI models rely heavily on the way you ask questions. A vague or messy prompt often leads to irrelevant or incomplete answers. A well-structured prompt reduces errors, increases precision, and saves time by minimizing back-and-forth edits.

3. How do I make a good prompt?

Start by being specific about what you want. Include details like tone, output format, word count, or audience. Use step-by-step instructions and, when helpful, show examples of the kind of output you’re expecting.

4. What are some common mistakes to avoid?

- Being too vague or broad

- Cramming too many instructions into one prompt

- Ignoring follow-up refinement

- Forgetting to define the AI’s role (e.g., teacher, developer)

- Not testing your prompt across different use cases

5. Can I reuse good prompts?

Yes, and you should. Once you find a prompt that works well, save it. You can tweak it slightly for different tasks. Keeping a prompt library is a great way to stay consistent and efficient.

6. How do I know if my prompt needs improvement?

If the output is off-topic, too generic, or doesn’t match your tone or format, your prompt likely needs more context or structure. Try refining it by changing one variable at a time (e.g., adding a tone or formatting cue).

7. Do different AI tools require different prompt styles?

Yes. While the general rules apply across models, some tools (like Claude or Gemini) may handle context or formatting slightly differently. It’s always smart to test and adjust your prompts depending on the model you’re using.