Your competitor just built an AI model with 90% accuracy using only 10% labeled data. Meanwhile, you’re spending millions on data labeling. The difference? They’re using semi-supervised learning.

This isn’t about cutting corners. It’s about working smarter with the data you already have. While most enterprises sit on mountains of unlabeled data, the leaders are finding ways to extract value from it without breaking the bank on annotation costs.

According to McKinsey’s 2024 global AI survey, 78% of organizations now use AI in at least one business function, up from just 55% a year earlier. Yet most struggle with the same bottleneck: the astronomical cost of labeling data. This practical guide shows business leaders how to turn their mountains of unlabeled data into a competitive advantage, with real implementation strategies and ROI metrics.

Transform Your Business with AI-Powered Solutions!

Partner with Kanerika for Expert AI implementation Services

What is Semi-Supervised Learning?

The Basic Concept Explained Simply

Think of semi-supervised learning like teaching someone to recognize cars. Instead of showing them every single car model with a label, you show them a few labeled examples. Then you let them study thousands of unlabeled car images to understand patterns themselves. They learn what makes a car a car without you having to label everything.

That’s exactly how semi-supervised learning works in AI. You start with a small set of labeled data that tells the model what to look for. Then you feed it massive amounts of unlabeled data. The model finds patterns and relationships on its own, using the labeled examples as guideposts.
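One common way to do this is self-training, also called pseudo-labeling. You train on the labeled examples, label the unlabeled points the model is most confident about, and retrain. The sketch below is a minimal illustration, not a production recipe. It assumes 2-D numeric features and a simple nearest-centroid classifier. The function names, the `min_margin` confidence threshold, and the toy data are all hypothetical.

```python
import math

def centroids(points, labels):
    """Average the points that share each label."""
    sums, counts = {}, {}
    for (x, y), label in zip(points, labels):
        sx, sy = sums.get(label, (0.0, 0.0))
        sums[label] = (sx + x, sy + y)
        counts[label] = counts.get(label, 0) + 1
    return {label: (sx / counts[label], sy / counts[label]) for label, (sx, sy) in sums.items()}

def predict(cents, point):
    """Return (label, margin); margin is the gap between nearest and second-nearest centroid."""
    ranked = sorted((math.dist(point, c), label) for label, c in cents.items())
    margin = ranked[1][0] - ranked[0][0] if len(ranked) > 1 else float("inf")
    return ranked[0][1], margin

def self_train(labeled, labels, unlabeled, min_margin=1.0, rounds=5):
    """Hypothetical self-training loop: adopt confident pseudo-labels, then retrain."""
    points, point_labels, pool = list(labeled), list(labels), list(unlabeled)
    for _ in range(rounds):
        cents = centroids(points, point_labels)
        still_unsure, added = [], 0
        for p in pool:
            label, margin = predict(cents, p)
            if margin >= min_margin:
                points.append(p)            # confident: treat the prediction as a label
                point_labels.append(label)
                added += 1
            else:
                still_unsure.append(p)      # ambiguous: leave it unlabeled for now
        pool = still_unsure
        if added == 0:
            break  # nothing new was confident enough; stop early
    return centroids(points, point_labels)

# Toy data: two labeled emails (as 2-D feature vectors) and five unlabeled ones.
labeled = [(0.0, 0.0), (10.0, 10.0)]
labels = ["spam", "ham"]
unlabeled = [(1.0, 0.0), (0.0, 1.0), (9.0, 10.0), (10.0, 9.0), (5.0, 5.0)]
model = self_train(labeled, labels, unlabeled)
print(predict(model, (2.0, 2.0))[0])  # spam
```

The ambiguous point at (5, 5) is never pseudo-labeled. Leaving uncertain examples out helps limit how often self-training reinforces its own mistakes.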

How It Differs from Supervised and Unsupervised Learning

Supervised learning needs labels for everything. It’s like having a teacher check every single answer. Expensive and slow.

Unsupervised learning uses no labels at all. The model finds patterns, but you can’t control what it learns. It might cluster your customers perfectly, or it might group them by completely irrelevant features.

Semi-supervised learning sits in the middle. You guide the model with some labeled examples, then let it learn from the rest. It’s structured discovery. You can approach the accuracy of supervised learning without the massive labeling costs.

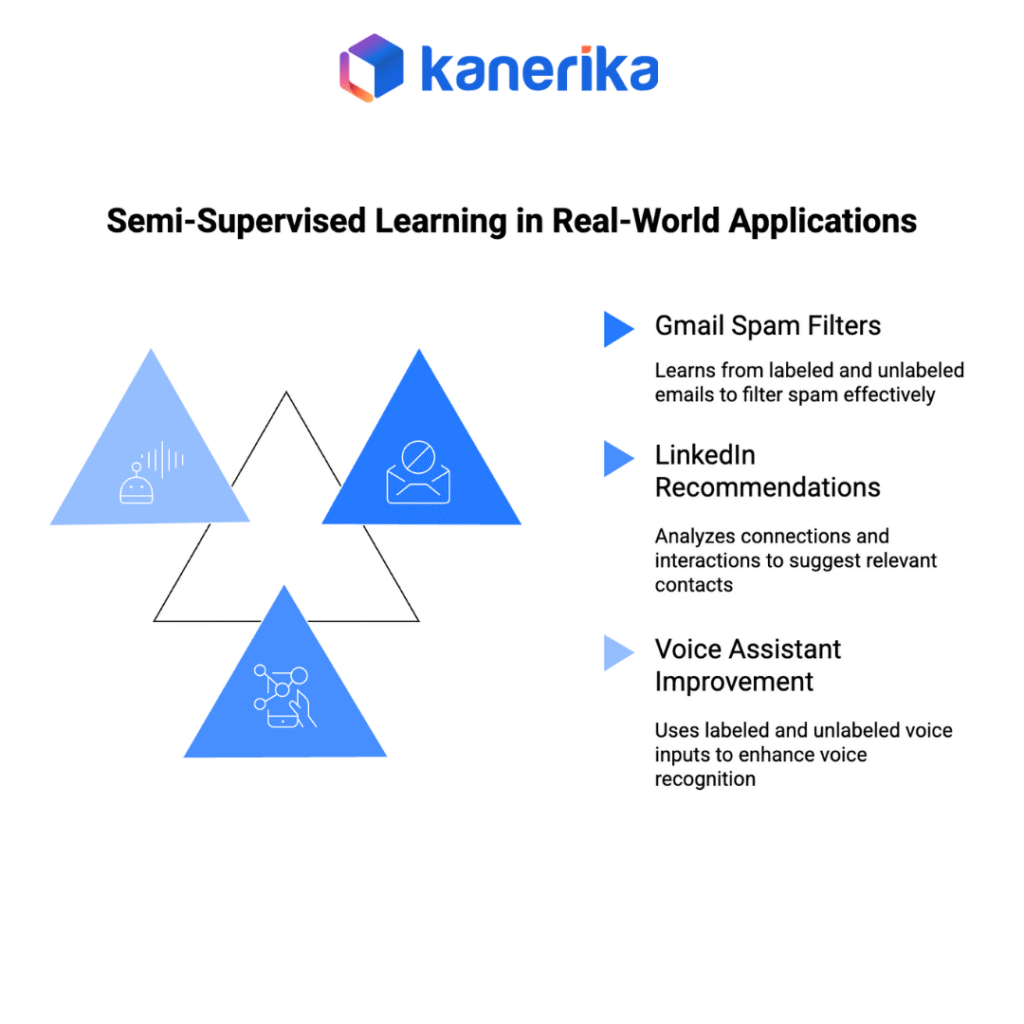

Real Business Examples You Already Use

When Gmail filters spam, it doesn’t have humans labeling every email. It learns from a small set of marked spam emails plus patterns in millions of unlabeled messages.

LinkedIn’s recommendation engine works the same way. A few confirmed connections teach the system about relationships. Then it analyzes countless profile views and interactions to suggest relevant contacts.

Your smartphone’s voice assistant improved through semi-supervised learning too. Engineers didn’t label every possible voice command. They labeled core commands, then let the system learn variations from millions of unlabeled voice inputs.

The Business Case for Semi-Supervised Learning

Cost Reduction in Data Preparation

Data preparation, including labeling, is widely cited as consuming up to 80% of an AI project’s time, and a large share of its budget. A single labeled image for computer vision can cost $5-50 depending on complexity. Medical images? Even more. Financial transaction labeling? You need domain experts.

Semi-supervised learning changes this equation. Instead of labeling 100,000 images, you label 10,000. The model learns from the other 90,000 unlabeled images. Often comparable accuracy. A fraction of the labeling cost.
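A back-of-envelope calculation using the figures above (100,000 vs 10,000 images at $5-50 per label) shows the scale of the difference. This minimal sketch counts annotation spend only. It assumes a flat per-image price and ignores the extra compute and engineering time semi-supervised training may need.

```python
def labeling_cost(num_items, cost_per_item):
    """Annotation spend only, assuming a flat price per labeled item."""
    return num_items * cost_per_item

for price in (5, 50):  # low and high end of the per-image range quoted above
    full = labeling_cost(100_000, price)
    partial = labeling_cost(10_000, price)
    print(f"${price}/image: label all ${full:,} vs label 10% ${partial:,} (saves ${full - partial:,})")
# $5/image: label all $500,000 vs label 10% $50,000 (saves $450,000)
# $50/image: label all $5,000,000 vs label 10% $500,000 (saves $4,500,000)
```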

According to data from Statista and industry reports, the global machine learning market is projected to reach $503.40 billion by 2030, growing at a CAGR of 34.80%. However, 72% of IT leaders cite AI skills as one of the crucial gaps that need to be addressed urgently, and access to relevant training data is the second most common challenge machine learning practitioners face when putting their models into production.

Faster Time to Market for AI Projects

Traditional AI projects stall waiting for labeled data. Six months for annotation. Another three for model training. By then, your market opportunity might be gone.

Semi-supervised learning accelerates everything. Start with whatever labeled data you have. Deploy a working model in weeks, not quarters. Improve it continuously as more data comes in.

McKinsey’s research indicates that 69% of C-suite leaders say their companies began investing in generative AI more than a year ago, yet 47% say their organizations are developing and releasing gen AI tools too slowly. A common bottleneck? Data preparation and labeling requirements.

Competitive Advantage Through Data Efficiency

Your unlabeled data becomes a competitive moat. While competitors struggle to label enough data, you’re already in production.

Every customer interaction, every transaction, every sensor reading can feed your model. You don’t need to label it all. With regular retraining, the model keeps learning from new patterns. Your accuracy can keep growing while your labeling costs stay flat.

This compounds over time. The company that implements semi-supervised learning today has better models tomorrow. And much better models next year. The gap widens every month.

Where Semi-Supervised Learning Creates Value

Customer Service Automation

Customer service generates thousands of interactions daily. Labeling every conversation is impossible. But you need to understand intent, sentiment, and resolution quality.

Semi-supervised learning handles this well. Label a sample of conversations for key intents. The system learns to categorize the rest. Conversations that fit none of your labeled intents can surface new problem types you didn’t even know existed, and shifts in volume can reveal trending issues before they explode.

McKinsey reports that marketing and sales departments are seeing some of the greatest returns on AI investment, leveraging these tools for personalized content, improved customer targeting, and automation.

Fraud Detection and Risk Management

Fraudsters evolve constantly. By the time you label new fraud patterns, they’ve moved on. You need systems that adapt without constant manual intervention.

Semi-supervised learning detects anomalies in unlabeled transactions by understanding normal behavior from limited labeled examples. It spots new fraud patterns before you even know to look for them.
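A very simplified way to picture “learning normal behavior” is to estimate what typical transactions look like from a small labeled set of legitimate ones, then flag unlabeled transactions that fall far outside that range. The sketch below uses a z-score on transaction amount as a stand-in for a real fraud model. The single feature, the sample amounts, and the threshold of 3 standard deviations are all illustrative assumptions.

```python
import statistics

def fit_normal(legit_amounts):
    """Estimate 'normal' from a small set of transactions labeled legitimate."""
    return statistics.mean(legit_amounts), statistics.stdev(legit_amounts)

def flag_anomalies(unlabeled_amounts, mean, std, threshold=3.0):
    """Flag unlabeled amounts more than `threshold` standard deviations from normal."""
    return [a for a in unlabeled_amounts if abs(a - mean) / std > threshold]

legit = [42.0, 38.5, 45.0, 40.0, 39.5, 41.0, 44.0, 37.0]  # labeled legitimate (illustrative)
mean, std = fit_normal(legit)
incoming = [40.0, 43.5, 950.0, 36.0, 5.0]                 # unlabeled new transactions
print(flag_anomalies(incoming, mean, std))  # [950.0, 5.0]
```

Real systems typically combine many features (merchant, location, time of day) and refine the normal profile with the unlabeled data itself. This shows only the core idea.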

Privacy and data governance risks like data leaks are the leading AI concerns across the globe, selected by 42% of North American organizations and 56% of European ones. Semi-supervised learning helps address these concerns by reducing the need to expose sensitive data for labeling.

Quality Control in Manufacturing

Manufacturing generates massive sensor data. Temperature readings. Pressure measurements. Visual inspections. Labeling every data point for quality issues is impossible.

Semi-supervised learning identifies defects using limited labeled examples of failures plus patterns in normal operations. It learns what “about to fail” looks like before actual failure occurs.

The manufacturing industry holds the largest share of the machine learning market at 18.88%, followed by finance at 15.42%. Both generate large volumes of data that are expensive to label, which is exactly where semi-supervised techniques help.

Document Processing and Compliance

Enterprises process millions of documents. Contracts. Invoices. Regulatory filings. Manual review doesn’t scale.

Semi-supervised learning extracts information accurately with minimal labeled examples. Label key document types and fields. The system learns to handle variations, formats, and edge cases automatically.

Implementation Strategies for Enterprises

Assessing Your Data Readiness

Start with data inventory. What labeled data exists already? Customer feedback with ratings. Transaction histories with outcomes. Support tickets with resolutions. You probably have more than you think.

Next, evaluate your unlabeled data. Volume matters, but relevance matters more. A million random images won’t help train a document processing model. The unlabeled data must relate to your problem.

Quality beats quantity. Clean, consistent unlabeled data works better than massive noisy datasets. Fix data quality issues before implementation or they’ll multiply in your model.

Building vs Buying Solutions

Building in-house gives control but requires expertise. You need ML engineers who understand semi-supervised techniques. Data scientists who can validate results. Infrastructure for training and deployment.

Organizations that use AI or AI agents are about as likely to hire for AI-related roles as they were in early 2024: 13% of respondents say their organizations have hired AI compliance specialists, and 6% report hiring AI ethics specialists.

Buying accelerates deployment but may limit customization. Vendor solutions work well for common use cases. Custom business logic might not fit their frameworks.

The hybrid approach often works best. Use vendor platforms for infrastructure and standard models. Build custom layers for your specific business logic. This balances speed with flexibility.

Common Pitfalls and How to Avoid Them

First pitfall: using too little labeled data. While semi-supervised learning needs fewer labels than supervised learning, you still need enough to guide it. Start with at least 1% labeled data and increase if accuracy suffers.

Second: ignoring data drift. Models trained on old patterns miss new trends. Implement monitoring to detect when model performance degrades. Plan for regular retraining with fresh data.
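Monitoring can start simple. Keep scoring a small, regularly labeled sample of live predictions and compare recent accuracy with the accuracy measured at launch. The class below is a hypothetical sketch. The window size and the 5-point tolerance are assumptions to tune for your use case.

```python
from collections import deque

class DriftMonitor:
    """Hypothetical monitor: flags retraining when recent accuracy drops below baseline."""

    def __init__(self, baseline_accuracy, window=100, tolerance=0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.recent = deque(maxlen=window)  # 1 = correct, 0 = wrong; oldest results drop off

    def record(self, prediction, actual):
        """Log one spot-checked prediction against its human-verified label."""
        self.recent.append(1 if prediction == actual else 0)

    def recent_accuracy(self):
        return sum(self.recent) / len(self.recent) if self.recent else None

    def needs_retraining(self):
        acc = self.recent_accuracy()
        return acc is not None and acc < self.baseline - self.tolerance

monitor = DriftMonitor(baseline_accuracy=0.90, window=10)
outcomes = [("fraud", "fraud")] * 7 + [("legit", "fraud")] * 3
for pred, actual in outcomes:
    monitor.record(pred, actual)
print(monitor.recent_accuracy(), monitor.needs_retraining())  # 0.7 True
```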

Third: assuming unlabeled data is unbiased. Only 37% of companies surveyed report taking measures to track data provenance, a key step toward trustworthy AI. If your historical data has problems, semi-supervised learning can amplify them, because the model learns from its own predictions on that data. Audit results regularly for bias and fairness.

ROI and Performance Metrics

Measuring Success Beyond Accuracy

Accuracy matters, but it’s not everything. Consider precision versus recall tradeoffs. Tuning for high precision (few false alarms) can mean missing real opportunities. Tuning for high recall (catching nearly everything) can mean too many false alarms.
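The tradeoff is easiest to see by moving a model’s decision threshold. In this minimal sketch the scores and fraud labels are made-up examples. A strict threshold yields perfect precision but misses half the fraud, while a loose one catches all of it at the cost of false alarms.

```python
def precision_recall(scores, actual, threshold):
    """Precision and recall when every score >= threshold is flagged as positive."""
    predicted = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for p, a in zip(predicted, actual) if p == 1 and a == 1)
    fp = sum(1 for p, a in zip(predicted, actual) if p == 1 and a == 0)
    fn = sum(1 for p, a in zip(predicted, actual) if p == 0 and a == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

scores = [0.95, 0.85, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10]  # model fraud scores (illustrative)
actual = [1, 1, 0, 1, 0, 1, 0, 0]                          # 1 = confirmed fraud

for threshold in (0.8, 0.25):
    p, r = precision_recall(scores, actual, threshold)
    print(f"threshold={threshold}: precision={p:.2f} recall={r:.2f}")
# threshold=0.8: precision=1.00 recall=0.50
# threshold=0.25: precision=0.67 recall=1.00
```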

Business metrics matter more than model metrics. Customer satisfaction. Revenue impact. Cost savings. Operational efficiency. Connect model performance to business outcomes.

Track improvement over time. Semi-supervised models can keep improving as they process more data, so compare month-over-month trends, not just accuracy at launch.

Cost-Benefit Analysis Framework

Calculate total cost of ownership. Include labeling costs, infrastructure, personnel, and maintenance. Semi-supervised learning reduces labeling costs but might require more sophisticated infrastructure.
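A simple way to structure this is to total each cost category for both approaches side by side. Every figure in the sketch below is a hypothetical placeholder chosen to illustrate the shape of the tradeoff: lower labeling spend, somewhat higher infrastructure and personnel spend. Substitute your own estimates.

```python
COST_CATEGORIES = ("labeling", "infrastructure", "personnel", "maintenance")

def total_cost_of_ownership(costs):
    """Sum annual costs across the categories; missing categories count as zero."""
    return sum(costs.get(category, 0) for category in COST_CATEGORIES)

# Hypothetical annual figures in dollars, for illustration only.
supervised = {"labeling": 500_000, "infrastructure": 100_000, "personnel": 300_000, "maintenance": 50_000}
semi_supervised = {"labeling": 50_000, "infrastructure": 180_000, "personnel": 350_000, "maintenance": 70_000}

sup_tco = total_cost_of_ownership(supervised)
semi_tco = total_cost_of_ownership(semi_supervised)
print(f"supervised ${sup_tco:,} vs semi-supervised ${semi_tco:,}: difference ${sup_tco - semi_tco:,}")
# supervised $950,000 vs semi-supervised $650,000: difference $300,000
```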

Quantify benefits beyond cost savings. Faster deployment means earlier revenue. Better accuracy means fewer errors and their associated costs. Improved coverage means catching problems others miss.

McKinsey’s surveys have found that adopting machine learning does not always cut costs, but it more often increases revenue. Consider opportunity costs too. What could your team do instead of labeling data? Strategic work that drives growth rather than repetitive annotation tasks.

Case Study: Fortune 500 Implementation Results

According to McKinsey’s analysis, organizations extracting the most value from gen AI show a strong preference for highly customized or bespoke solutions, with these “shaper” or “maker” archetypes significantly outperforming those using off-the-shelf solutions.

A major retailer implemented semi-supervised learning for demand forecasting. Previous approach: manually categorizing products and labeling seasonal patterns. Cost: $3 million annually. Accuracy: 72%.

New approach: semi-supervised learning with 10% labeled data. The model learned patterns from sales data, weather patterns, and social media trends. Cost: $800,000 annually. Accuracy: 81%.

But the real win? Forecast updates went from monthly to daily. Inventory costs dropped 23%. Stock-outs reduced by 34%. Total impact: $45 million in recovered revenue and reduced waste.

Kanerika: Your Partner in Semi-Supervised Learning Implementation

Proven Expertise in AI-Driven Transformations

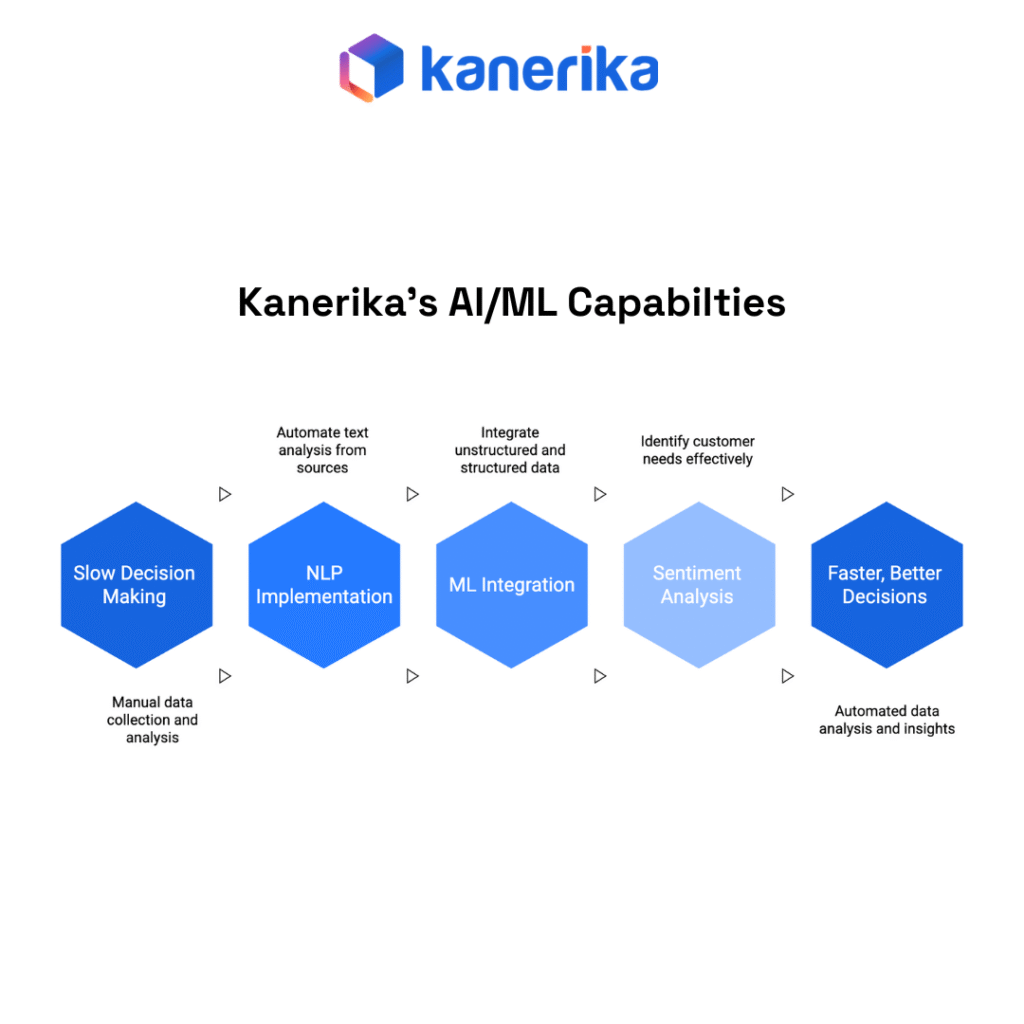

Kanerika has demonstrated remarkable success in implementing generative AI solutions for enterprise clients. One notable example involves a leading conglomerate that was struggling with manually analyzing unstructured and qualitative data, which was prone to bias and inefficiency.

Kanerika addressed these challenges by deploying a generative AI-based solution utilizing natural language processing (NLP), machine learning (ML), and sentiment analysis models. This solution automated the data collection and text analysis from various unstructured sources like market reports, integrating them with structured data sources. The result was a 30% decrease in decision-making time, a 37% increase in identifying customer needs, and a 55% reduction in manual effort and analysis time.

This case demonstrates Kanerika’s ability to implement sophisticated AI solutions that work with limited labeled data, which is exactly what semi-supervised learning requires. By combining NLP and ML techniques with smart data integration, Kanerika helps organizations extract value from their existing data assets without massive labeling investments.

Industry Recognition and Trust Markers

Kanerika is a premier provider of data-driven software solutions and services that facilitate digital transformation. The company maintains rigorous quality standards, backed by ISO 27701 certification, SOC 2, and GDPR compliance. As a distinguished partner of Microsoft, AWS, and Informatica, Kanerika’s commitment to innovation and strong partnerships positions it at the forefront of helping businesses grow.

These certifications matter when implementing semi-supervised learning systems that handle sensitive data. ISO 27701 certification shows that privacy information management meets an international standard. SOC 2 compliance demonstrates operational controls for security, availability, and confidentiality. GDPR compliance means your AI implementations meet one of the strictest data protection regulations in the world.

Real-World Impact Across Industries

For another leading ERP provider facing ineffective sales data management and a lackluster CRM interface, Kanerika leveraged generative AI to create a visually appealing and functional dashboard, providing a holistic view of sales data and improved KPI identification. This enhancement resulted in a 10% increase in customer retention, a 14% boost in sales and revenue, and a 22% uptick in KPI identification accuracy.

What makes these results particularly relevant for semi-supervised learning? Kanerika achieved these improvements without requiring the client to label every piece of historical sales data. Instead, they used intelligent pattern recognition on existing structured and unstructured data sources—exactly the approach that makes semi-supervised learning powerful.

Chris Benson, Chief Information Officer at SeaLink Travel Group, shares his experience: “Kanerika’s assistance in SeaLink’s Incorta implementation has transformed our data interaction. They effectively manage our environment, provide technical solutions, and streamline our relationship with Incorta’s support team, ensuring valuable progress in our projects.”

The IMPACT Methodology

Kanerika uses its IMPACT methodology to drive successful AI projects, focusing on delivering tangible outcomes. This structured approach is designed to ensure that semi-supervised learning implementations deliver real business value, not just technical achievements.

The methodology encompasses:

- Identification of optimal use cases for semi-supervised learning within your organization

- Measurement of baseline performance and setting realistic improvement targets

- Planning the integration with existing systems and workflows

- Adaptation of models to your specific industry context and data characteristics

- Continuous optimization as new data becomes available

- Transformation of business processes to fully leverage AI capabilities

This systematic approach has proven particularly effective when organizations need to balance the desire for AI innovation with practical constraints around data labeling budgets and timelines.

Getting Started with Semi-Supervised Learning

Pilot Project Selection Criteria

Choose problems with clear success metrics. Revenue impact. Cost reduction. Time savings. Avoid vague goals like “better insights” for your pilot.

Pick use cases with abundant unlabeled data. The more unlabeled data available, the better semi-supervised learning performs. Thousands of data points minimum. Millions are better.

Start where you have some labeled data already. Even imperfect labels work as a starting point. You can improve quality iteratively.

Team and Infrastructure Requirements

You need three core capabilities. ML engineering for model development. Domain expertise for validation. Data engineering for pipeline management.

Infrastructure requirements depend on scale. Start with cloud platforms for flexibility. McKinsey’s technology trends analysis shows that cloud and edge computing investments increased despite broader market challenges, with AI and robotics investments recovering to higher levels in 2024 than two years prior.

Don’t forget ongoing operations. Models need monitoring, retraining, and updates. Plan for this from the start or successful pilots won’t scale.

Roadmap for Scaling Success

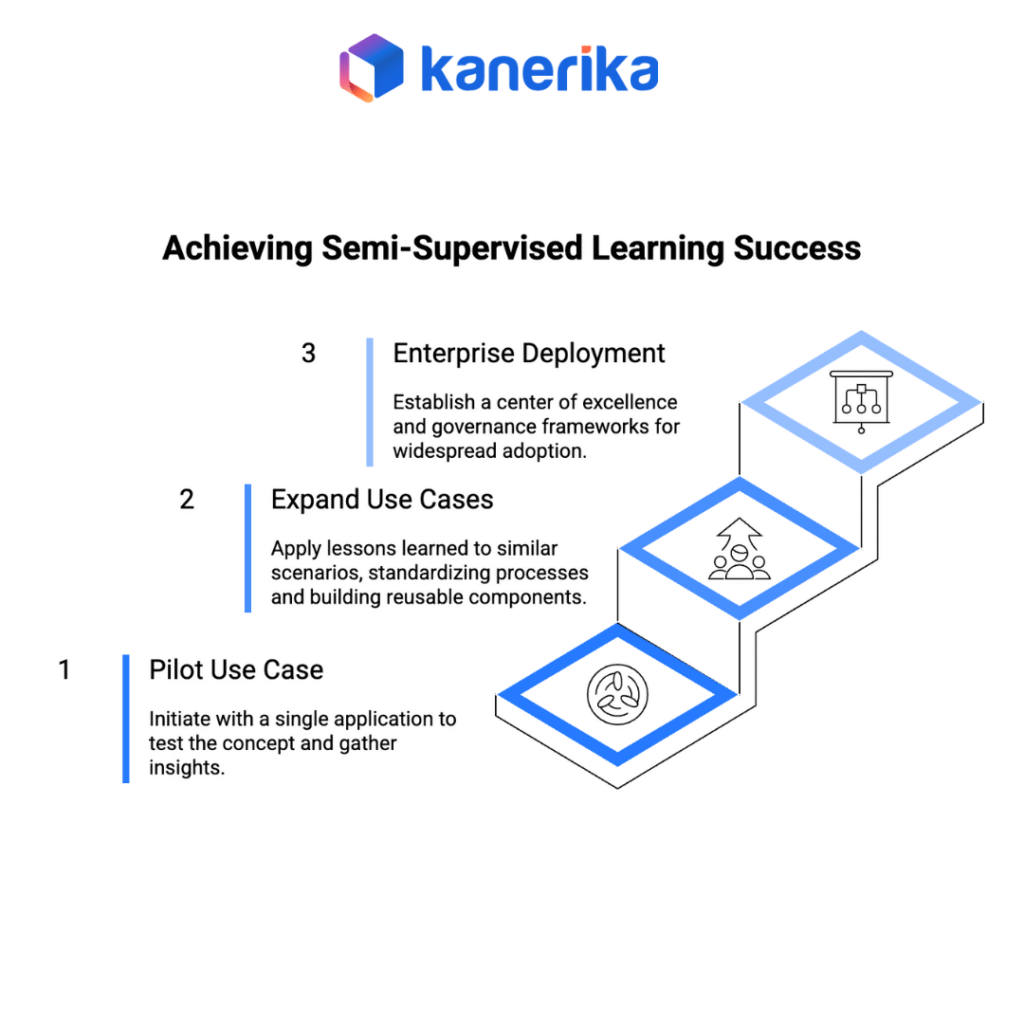

- Phase 1: Pilot with single use case. Prove the concept. Document lessons learned. Build internal confidence.

- Phase 2: Expand to similar use cases. Apply learnings from pilot. Standardize processes. Build reusable components.

- Phase 3: Enterprise deployment. Create center of excellence. Establish governance frameworks. Enable self-service for business units.

McKinsey research suggests that almost all companies are investing in AI, but only 1% of leaders describe their companies as mature. While 92% plan to increase their investment over the next three years, the research finds the biggest barrier is not employees but leaders who are not moving fast enough.

Success comes from iteration, not perfection. Start small. Learn fast. Scale what works.

FAQs

What is semi-supervised learning?

Semi-supervised learning cleverly bridges the gap between supervised and unsupervised learning. It uses a small amount of labeled data (like supervised learning) alongside a much larger pool of unlabeled data to train a model. This boosts performance beyond what’s possible with just the labeled data alone, making it highly efficient when labeled data is scarce or expensive to obtain. Essentially, it learns patterns from both labeled and unlabeled examples.

What is an example of a semi-supervised learning model?

Semi-supervised learning blends labeled and unlabeled data. A good example is an image classifier trained on a small set of labeled images (cat/dog) alongside a much larger set of unlabeled images. The model uses patterns in the unlabeled images to generalize better to new images it hasn’t seen, needing fewer labeled examples than a purely supervised model. This boosts efficiency and reduces the need for expensive labeling efforts.

What are some examples of semi-supervised learning?

Semi-supervised learning uses a small labeled dataset to guide learning from a much larger unlabeled one. Common examples include spam filtering, where a small set of emails marked as spam guides learning from millions of unlabeled messages; speech recognition, where labeled core commands anchor learning from unlabeled voice recordings; and fraud detection, document processing, and manufacturing quality control, where labeled examples are scarce but unlabeled data is plentiful.

What is the difference between semi-supervised and unsupervised learning?

Unsupervised learning explores unlabeled data to find hidden patterns and structures, like clustering similar customers together. Semi-supervised learning uses a mix: a small amount of labeled data guides the learning process on a much larger set of unlabeled data, improving accuracy compared to purely unsupervised methods. Think of it as a helpful nudge towards better pattern recognition.

What are the two types of supervised learning?

Supervised learning teaches computers using labeled data – like showing a child pictures and naming them. The two main types are classification, where we categorize things (e.g., spam/not spam), and regression, where we predict continuous values (e.g., house prices). Essentially, classification sorts things into boxes, while regression predicts a number on a scale. Both use labeled examples to learn the patterns.

What is the difference between semi-supervised learning and active learning?

Semi-supervised learning uses a mix of labeled and unlabeled data to improve model accuracy, essentially leveraging the unlabeled data to boost learning from the limited labeled examples. Active learning, however, *strategically* selects which unlabeled data points to label next, focusing on the most informative samples to maximize learning efficiency. Think of semi-supervised as passively using extra data, while active learning actively seeks the best data to label. The key difference lies in the *control* over the labeling process.
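A common active-learning strategy is uncertainty sampling: ask annotators to label the examples the current model is least sure about. The minimal sketch below assumes a binary classifier that outputs a probability for the positive class. The ticket IDs and probabilities are illustrative.

```python
def select_for_labeling(pool_probs, budget):
    """Pick the `budget` items whose predicted probability is closest to 0.5 (most uncertain)."""
    ranked = sorted(pool_probs.items(), key=lambda item: abs(item[1] - 0.5))
    return [item_id for item_id, _ in ranked[:budget]]

# Current model's probability that each unlabeled ticket is urgent (hypothetical values).
pool_probs = {"ticket-1": 0.97, "ticket-2": 0.52, "ticket-3": 0.08, "ticket-4": 0.45, "ticket-5": 0.71}
print(select_for_labeling(pool_probs, budget=2))  # ['ticket-2', 'ticket-4']
```

A semi-supervised approach would use the same pool differently: it would keep the confident predictions (ticket-1 and ticket-3 here) as pseudo-labels rather than sending anything to a human. The two techniques can be combined.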

What is the difference between semi-supervised learning and weak supervision?

Semi-supervised learning uses a small amount of labeled data to guide learning from a much larger pool of unlabeled data. Weak supervision, on the other hand, leverages noisy or imprecise labels (like heuristics or rules) to create a larger, but less reliable, training dataset. Essentially, semi-supervised learning refines learning with *some* good labels, while weak supervision starts with *many* imperfect labels. The key difference lies in the quality, not just the quantity, of the available supervision.
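In practice, weak supervision often means writing simple heuristic rules, sometimes called labeling functions, that each vote on an example or abstain, then combining their votes. The minimal sketch below uses a plain majority vote. The rules, keywords, and messages are hypothetical, and some frameworks replace the vote with a model that estimates how reliable each rule is.

```python
from collections import Counter

SPAM, HAM, ABSTAIN = "spam", "ham", None

def lf_free_offer(text):
    return SPAM if "free" in text.lower() else ABSTAIN

def lf_many_exclamations(text):
    return SPAM if text.count("!") >= 3 else ABSTAIN

def lf_mentions_meeting(text):
    return HAM if "meeting" in text.lower() else ABSTAIN

LABELING_FUNCTIONS = [lf_free_offer, lf_many_exclamations, lf_mentions_meeting]

def weak_label(text):
    """Majority vote over the rules that fire; abstain on no votes or a tie."""
    votes = [lf(text) for lf in LABELING_FUNCTIONS]
    votes = [v for v in votes if v is not ABSTAIN]
    if not votes:
        return ABSTAIN  # no rule fired: leave the example unlabeled
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN  # rules disagree evenly: too ambiguous to use
    return ranked[0][0]

messages = [
    "FREE prize!!! Claim now",
    "Agenda for Monday's meeting",
    "Free pizza at the team meeting",
    "Quarterly report attached",
]
print([weak_label(m) for m in messages])  # ['spam', 'ham', None, None]
```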

What is an example of unsupervised learning?

Unsupervised learning lets computers find patterns in data *without* pre-labeled examples. Imagine giving a computer a bunch of photos – it’ll group similar images together (e.g., all cats, all dogs) on its own, discovering structure in the data without you telling it what’s in each picture beforehand. This contrasts with supervised learning which requires labeled examples. It’s like exploration versus instruction.

What is semi-supervised clustering?

Semi-supervised clustering blends the strengths of both supervised and unsupervised learning. It uses a small amount of labeled data (knowing some points’ true cluster assignments) to guide the clustering of a much larger unlabeled dataset. This improves accuracy and efficiency compared to purely unsupervised methods by leveraging partial prior knowledge. Think of it as giving a helpful hint to an otherwise unsupervised algorithm.
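One well-known variant is seeded (or constrained) k-means. The labeled points set the starting centroids and stay locked to their known clusters, while unlabeled points are reassigned each iteration. The sketch below is a minimal 2-D version under those assumptions. It expects at least one labeled seed per cluster, and the cluster names and data are illustrative.

```python
import math

def mean(points):
    xs, ys = zip(*points)
    return (sum(xs) / len(xs), sum(ys) / len(ys))

def seeded_kmeans(seeds, unlabeled, iterations=10):
    """seeds maps cluster name -> labeled points that always stay in that cluster."""
    centroids = {name: mean(pts) for name, pts in seeds.items()}
    assigned = []
    for _ in range(iterations):
        # Assign each unlabeled point to its nearest current centroid.
        assigned = [min(centroids, key=lambda name: math.dist(p, centroids[name])) for p in unlabeled]
        # Recompute centroids from the fixed seeds plus the newly assigned points.
        updated = {
            name: mean(list(pts) + [p for p, a in zip(unlabeled, assigned) if a == name])
            for name, pts in seeds.items()
        }
        if updated == centroids:
            break  # converged: assignments no longer move the centroids
        centroids = updated
    return centroids, assigned

seeds = {"budget": [(1.0, 1.0)], "premium": [(9.0, 9.0)]}
customers = [(2.0, 1.0), (1.0, 2.0), (8.0, 9.0), (9.0, 8.0), (4.0, 4.0)]
centroids, assigned = seeded_kmeans(seeds, customers)
print(assigned)  # ['budget', 'budget', 'premium', 'premium', 'budget']
```

Because the seeds fix which cluster is “budget” and which is “premium,” the resulting clusters carry business-meaningful names, something plain k-means cannot provide on its own.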