As astonishing as it may sound, 33% of IT leaders have revealed that they employ machine learning models for business analytics.

Machine learning is becoming a transformative force well beyond the IT sector, in industries including healthcare, retail, finance, and manufacturing. This trend reflects machine learning’s potential to drive efficiency, improve decision-making, and propel businesses forward in an increasingly data-driven landscape, and it is why effective machine learning management is crucial.

Efficient machine learning models are paramount in driving optimal performance, resource utilization, and overall success in data-driven applications. They enhance accuracy, reduce computational costs, and facilitate faster decision-making. Additionally, they contribute significantly to the scalability and sustainability of machine learning applications, making them essential for maximizing the benefits of artificial intelligence in various domains.

When it comes to machine learning model management, there are a few best practices that can help ensure efficiency and accuracy. These can streamline the deployment process, minimize errors, and ensure that your models are running smoothly. In this article, we’ll discuss the ideal strategies for effective deployment and monitoring of ML models, and how Kanerika can be your trusted consulting partner in achieving your requirements.

Table of Contents

- What is Machine Learning Model Management?

- Fundamentals of ML Model Deployment

- Why is Machine Learning Model Management Important?

- Advantages of Successful ML Model Deployment

- Proven Strategies for Machine Learning Model Management

- Machine Learning Model Deployment Pipelines

- Scalability and Performance Optimization

- Monitoring and Logging

- Security and Compliance

- Maintenance and Updates

- Use Cases: How Kanerika’s AI/ML Solutions Transformed the Clients’ Businesses

- Choose Kanerika for State-of-the-art ML Model Management Solutions

- FAQs

What is Machine Learning Model Management?

Machine learning model management is the process of deploying and monitoring machine learning models in a production environment. It involves managing the entire lifecycle of a machine learning model, from development to deployment and monitoring.

Effective machine learning model management is essential for ensuring that machine learning models are performing optimally, producing accurate results, and meeting the business requirements.

The various steps involved in machine learning model management are as follows:

- Model Selection: Choosing the most appropriate machine learning model for the task at hand based on the available data, business requirements, and performance metrics.

- Model Training: Training the selected machine learning model using the relevant data and optimizing the model’s hyperparameters to achieve the best performance.

- Model Deployment: Deploying the trained machine learning model in a production environment, where it can receive new data and generate predictions.

- Model Monitoring: Monitoring the performance of the deployed machine learning model and making necessary adjustments to ensure optimal performance.

Fundamentals of ML Model Deployment

Deploying machine learning models is a crucial step in the machine learning pipeline. It involves taking a trained model and making it available for use in a production environment. In this section, we will discuss the fundamentals of model deployment.

According to McKinsey, sales and marketing are the most profitable domains that can leverage machine learning models.

Choosing the Right Model Deployment Strategy

- Batch Inference: This strategy involves processing data in batches, which can be useful for scenarios where the data is not time-sensitive.

- Real-time Inference: This strategy involves processing data in real-time, which is useful for scenarios where the data is time-sensitive.

- Hybrid Inference: This strategy combines batch and real-time inference, which can be useful when some predictions are time-sensitive and others can be computed ahead of time.
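To make the difference between these strategies concrete, here is a minimal sketch in Python. The `predict_one` function and its weights are hypothetical stand-ins for a trained model, not part of any particular library; the point is only the contrast between scoring one record on arrival and scoring accumulated chunks.

```python
from typing import Iterable, Iterator, List, Sequence

Features = Sequence[float]


def predict_one(features: Features) -> float:
    """Hypothetical stand-in for a trained model: a fixed linear score."""
    weights = (0.5, -0.25, 1.0)  # illustrative values only
    return sum(w * x for w, x in zip(weights, features))


def real_time_inference(features: Features) -> float:
    """Real-time path: score a single record as soon as it arrives."""
    return predict_one(features)


def batch_inference(records: Iterable[Features], batch_size: int = 2) -> Iterator[List[float]]:
    """Batch path: accumulate records and score them one chunk at a time."""
    batch: List[Features] = []
    for record in records:
        batch.append(record)
        if len(batch) == batch_size:
            yield [predict_one(r) for r in batch]
            batch = []
    if batch:  # flush the final, possibly smaller, chunk
        yield [predict_one(r) for r in batch]
```

A hybrid setup would typically call something like `batch_inference` on a schedule for precomputed scores and fall back to `real_time_inference` for requests that cannot wait.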

Understanding Model Serving Patterns

- Request-Response: This pattern involves sending a request to the model and receiving a response back.

- Asynchronous: This pattern involves sending a request to the model without waiting for the result; the response is delivered at a later time.

- Streaming: This pattern involves sending a continuous stream of data to the model and receiving a continuous stream of responses back.
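The three serving patterns can be sketched side by side using only the Python standard library. This is an illustration under simplifying assumptions: `predict` is a hypothetical model that doubles its input, and a thread pool stands in for whatever queue or job system a real asynchronous service would use.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Iterable, Iterator


def predict(x: float) -> float:
    """Hypothetical model: doubles its input."""
    return 2.0 * x


def request_response(x: float) -> float:
    """Request-response: the caller blocks until the prediction is ready."""
    return predict(x)


def asynchronous(executor: ThreadPoolExecutor, x: float) -> Future:
    """Asynchronous: return a handle now; the caller collects the result later."""
    return executor.submit(predict, x)


def streaming(inputs: Iterable[float]) -> Iterator[float]:
    """Streaming: lazily emit one prediction per incoming item."""
    for x in inputs:
        yield predict(x)
```

With the asynchronous variant, the caller holds on to the returned `Future` and calls `.result()` when it actually needs the prediction.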

Containerization and Model Wrapping

These are essential to ensure that your model is deployed in a consistent and reliable manner. Containerization involves packaging your model and its dependencies into a container, which can be deployed on any platform that supports containers. Model wrapping involves creating a standardized interface for your model, which can be used to interact with the model in a consistent and reliable manner.
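As a rough illustration of model wrapping, the sketch below puts a uniform `predict` interface around any callable model. The class name `ModelWrapper`, the payload shape, and the field names are assumptions made for this example, not a standard.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence


@dataclass
class ModelWrapper:
    """Uniform interface around any callable model (illustrative only)."""
    name: str
    version: str
    n_features: int
    predict_fn: Callable[[Sequence[float]], float]

    def predict(self, payload: Dict[str, List[float]]) -> Dict[str, object]:
        # Validate the request before it reaches the underlying model.
        features = payload.get("features")
        if features is None or len(features) != self.n_features:
            raise ValueError(f"expected {self.n_features} features")
        # Always answer in the same shape, whatever model sits underneath.
        return {
            "model": self.name,
            "version": self.version,
            "prediction": self.predict_fn(features),
        }
```

Because callers only see the wrapper, the underlying model can be swapped or upgraded without changing the code that consumes its predictions.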

Why is Machine Learning Model Management Important?

According to Market Research Future, the global machine learning market is expected to experience significant growth, with a projected growth rate of 38.76% between 2020 and 2030.

Managing your machine learning models is an essential part of the machine learning development process. Without proper management, your models can become outdated, inaccurate, and unreliable, leading to poor performance and wasted resources.

There are several reasons why you need to manage your machine learning models:

1. Model Drift

Machine learning models are trained on historical data, which means they are only as accurate as the data they were trained on. As real-world data shifts away from what the model saw during training, the model may start to drift, meaning its predictions become less accurate over time. Without proper management, you may not be aware of this drift, leading to incorrect decisions and wasted resources.
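One common way to put a number on drift is the Population Stability Index (PSI), which compares how a feature is distributed in the training data versus recent production data. The article does not prescribe a specific metric, so treat the following as one possible sketch; the bin edges and the handling of out-of-range values are assumptions.

```python
import bisect
import math
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values falling into each bin defined by sorted edges."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        if v < edges[0] or v > edges[-1]:
            continue  # this sketch simply ignores out-of-range values
        # Clamp so that v == edges[-1] lands in the last bin.
        idx = min(bisect.bisect_right(edges, v) - 1, len(counts) - 1)
        counts[idx] += 1
    total = sum(counts)
    if total == 0:
        raise ValueError("no values fall inside the bin edges")
    return [c / total for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline and a recent sample."""
    e = [max(p, eps) for p in bin_fractions(expected, edges)]
    a = [max(p, eps) for p in bin_fractions(actual, edges)]
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))
```

A frequently quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, but the right threshold depends on the feature and the use case.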

2. Changing Business Needs

Business needs can change rapidly, and your machine learning models need to adapt to these changes. Without proper management, your models may become outdated and no longer relevant to your business needs.

3. Model Performance

Managing your machine learning models allows you to monitor their performance and identify areas for improvement. By continually monitoring and optimizing your models, you can ensure they are performing at their best, leading to better predictions and more accurate decision making.

4. Compliance and Regulations

Many industries are subject to strict compliance and regulatory requirements, and machine learning models are no exception. Managing your models allows you to ensure they comply with these requirements, reducing the risk of non-compliance and potential legal issues.

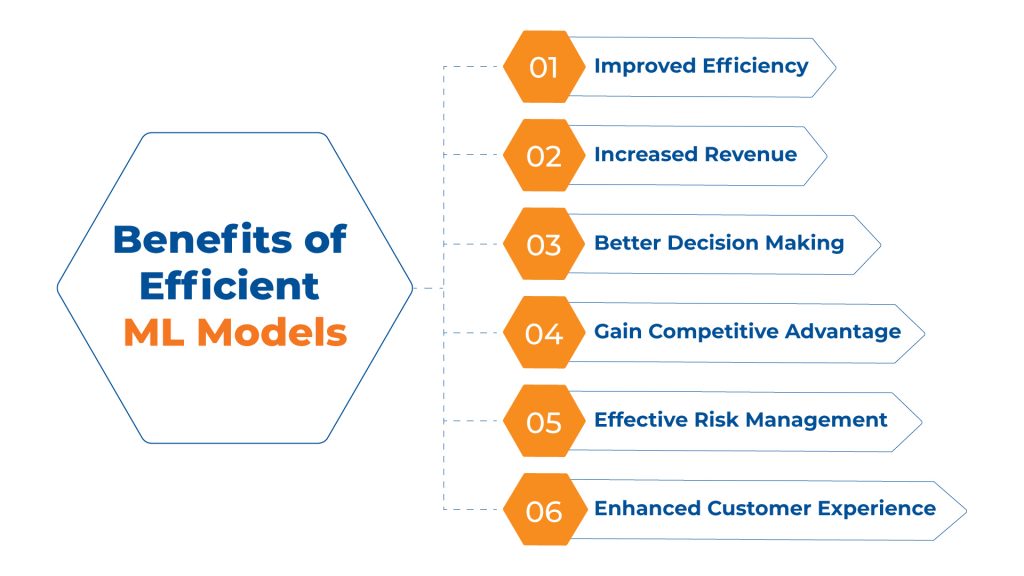

Advantages of Successful ML Model Deployment

1. Improved Efficiency

Efficient ML model deployment can significantly improve your business’s efficiency. With a successful deployment, you can automate repetitive tasks, reduce manual intervention, and speed up decision-making processes. This, in turn, can lead to faster and more accurate predictions, saving time and resources.

2. Increased Revenue

Deploying a successful ML model can also increase your revenue by improving customer satisfaction and reducing costs. By automating tasks and reducing errors, you can deliver better products and services to your customers. Additionally, by optimizing your business processes, you can reduce costs and increase profits.

3. Better Decision Making

A successful ML model deployment can help you make better decisions by providing accurate predictions and insights. With a well-trained model, you can identify patterns and trends that may not be visible to the human eye, enabling you to make data-driven decisions that can improve your business outcomes.

4. Gain Competitive Advantage

Deploying a successful ML model can give you a competitive advantage by enabling you to deliver better products and services faster than your competitors. By leveraging the power of machine learning, you can stay ahead of the curve and provide your customers with innovative solutions that meet their needs.

5. Enhanced Customer Experience

ML can be employed to analyze customer data, predict customer needs, and provide personalized recommendations. This enhances the overall customer experience, leading to increased customer satisfaction and loyalty.

6. Effective Risk Management

Machine learning models can analyze historical data to identify patterns and trends. This can be particularly valuable in risk management, where predictive analytics can help anticipate and mitigate potential risks.

Proven Strategies for Machine Learning Model Management

Managing machine learning models can be a challenging task, especially when dealing with large and complex models. Here are some best practices for managing your ML models effectively:

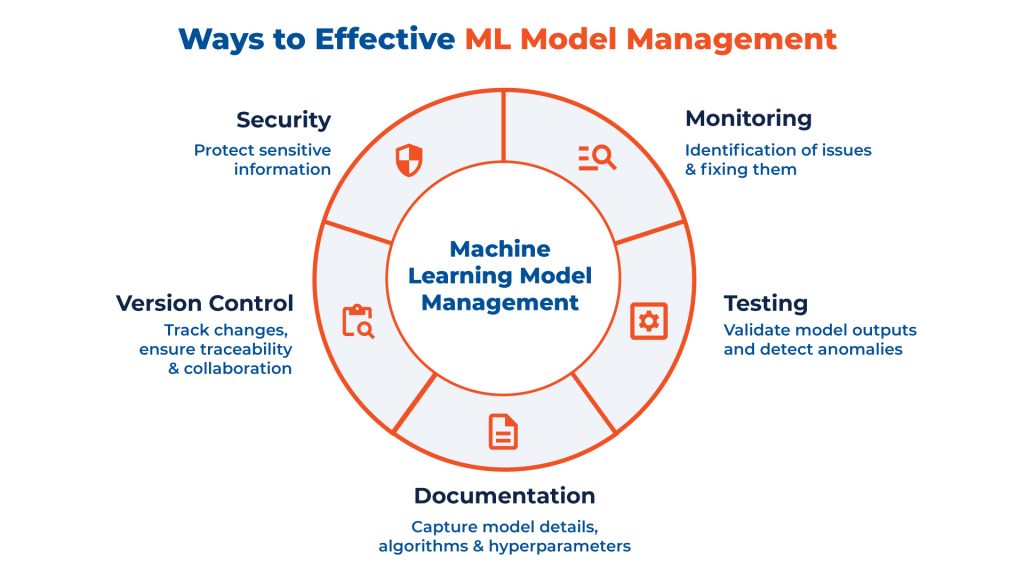

1. Version Control

Version control is crucial for managing machine learning models. It allows you to keep track of changes made to your models and helps you revert to previous versions if needed. You can use tools like Git or SVN to manage your model versions.

2. Documentation

Documenting your models is essential for efficient model management. Documentation should include details such as the model architecture, hyperparameters, data preprocessing, and evaluation metrics. It helps you and your team understand the model’s behavior and performance and makes it easier to reproduce the results.

3. Testing

Testing your models before deployment is critical to ensure that they perform well in production. You can use various testing techniques such as unit testing, integration testing, and performance testing to verify the model’s accuracy and performance.

4. Monitoring

Monitoring your models in production is crucial to detect any issues or performance degradation. You can use monitoring tools like TensorBoard or Prometheus to track the model’s performance and detect anomalies.

5. Security

Ensuring the security of your models is critical, especially when dealing with sensitive data. You should implement security measures such as encryption, access control, and auditing to protect your models from unauthorized access and ensure data privacy.

Machine Learning Model Deployment Pipelines

Deploying machine learning models is a complex process that requires careful planning and execution. Machine learning model deployment pipelines help streamline this process by providing a framework for building, testing, and deploying models.

Continuous Integration and Deployment

Continuous integration and continuous deployment (CI/CD) is a crucial component of any machine learning deployment pipeline. CI/CD helps automate the process of building, testing, and deploying models, allowing you to quickly iterate and improve your models.

To implement CI/CD, you should use a version control system such as Git to manage your code and a continuous integration tool such as Jenkins or Travis CI to automate the build and testing process. You should also use a containerization tool such as Docker to package your model and its dependencies into a portable image that can be deployed to any environment.

Automated Testing and Quality Assurance

Automated testing and quality assurance are essential for ensuring that your machine learning models are reliable and accurate. You should use a combination of unit tests, integration tests, and end-to-end tests to verify that your model is functioning correctly. You should also use techniques such as cross-validation and A/B testing to validate the performance of your model.

To automate testing and quality assurance, you should use a testing framework such as pytest or unittest to write and run tests. You should also use a continuous integration tool such as Jenkins or Travis CI to automatically run tests whenever you make changes to your code.
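As a small illustration of what such tests might look like, here is a sketch using Python’s built-in `unittest`. The `predict_proba` function and its `WEIGHTS` are hypothetical; in a real project the tests would import your actual model code instead.

```python
import math
import unittest
from typing import Sequence

WEIGHTS = (0.8, -0.4)  # hypothetical coefficients for illustration


def predict_proba(features: Sequence[float]) -> float:
    """Toy logistic model standing in for the real model under test."""
    if len(features) != len(WEIGHTS):
        raise ValueError("wrong number of features")
    score = sum(w * x for w, x in zip(WEIGHTS, features))
    return 1.0 / (1.0 + math.exp(-score))


class PredictProbaTest(unittest.TestCase):
    def test_output_is_a_probability(self):
        for features in [(0.0, 0.0), (10.0, -10.0), (-10.0, 10.0)]:
            self.assertTrue(0.0 <= predict_proba(features) <= 1.0)

    def test_zero_input_gives_one_half(self):
        self.assertAlmostEqual(predict_proba((0.0, 0.0)), 0.5)

    def test_rejects_wrong_shape(self):
        with self.assertRaises(ValueError):
            predict_proba((1.0,))
```

Saved in a file, these tests can be run with `python -m unittest`, which is the kind of command a CI tool would execute on every change.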

Versioning and Rollback Mechanisms

Versioning and rollback mechanisms are critical for ensuring that you can quickly and easily revert to a previous version of your model if something goes wrong. You should use a version control system such as Git to manage your code and track changes to your model. You should also use a deployment tool such as Kubernetes or Terraform to manage the deployment of your model and its dependencies.

To implement versioning and rollback mechanisms, you should use a branching strategy such as Gitflow to manage changes to your code. You should also use a monitoring tool such as Prometheus or Grafana to track the performance of your model and detect any issues that may require a rollback.
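The core idea behind rollback can be shown without any deployment tooling. The sketch below is a toy in-memory registry, with the hypothetical class name `ModelRegistry`; it is not how Git or Kubernetes work internally, only an illustration of keeping a promotion history so that the previous version is always one step away.

```python
from typing import Dict, List, Optional


class ModelRegistry:
    """In-memory record of registered versions and production promotions."""

    def __init__(self) -> None:
        self._artifacts: Dict[str, str] = {}  # version -> artifact location
        self._promoted: List[str] = []        # production history, oldest first

    def register(self, version: str, artifact_uri: str) -> None:
        if version in self._artifacts:
            raise ValueError(f"version {version} is already registered")
        self._artifacts[version] = artifact_uri

    def promote(self, version: str) -> None:
        if version not in self._artifacts:
            raise KeyError(f"unknown version {version}")
        self._promoted.append(version)

    @property
    def current(self) -> Optional[str]:
        return self._promoted[-1] if self._promoted else None

    def rollback(self) -> str:
        """Drop the latest promotion and return the version now in production."""
        if len(self._promoted) < 2:
            raise RuntimeError("no earlier production version to roll back to")
        self._promoted.pop()
        return self._promoted[-1]
```

Refusing to register the same version twice is a deliberate choice here: a version label should always point to exactly one artifact.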

Scalability and Performance Optimization

When deploying machine learning models, scalability and performance optimization are crucial for ensuring that the model can handle high volumes of traffic and provide accurate predictions in real-time. In this section, we will discuss some best practices for optimizing the scalability and performance of your machine learning models.

Load Balancing and Resource Management

Load balancing is critical for ensuring that incoming prediction requests are distributed evenly across the servers hosting your machine learning models. This can help to prevent any single server from becoming overloaded and causing a bottleneck in your system. Additionally, resource management is essential for ensuring that your models have access to the necessary computing resources, such as CPU and memory, to run efficiently.

To optimize load balancing and resource management, you can use tools such as Kubernetes or Docker Swarm. These tools allow you to manage and orchestrate containers across multiple servers, ensuring that model replicas are spread across the cluster and have access to the resources they need.
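Orchestrators handle request routing for you, but the simplest policy they can apply, round-robin, is easy to sketch. The class below is a hypothetical illustration; the replica names are made up and no real networking is involved.

```python
import itertools
from typing import Iterator, Sequence


class RoundRobinBalancer:
    """Hand each incoming request to the next model replica in turn."""

    def __init__(self, replicas: Sequence[str]) -> None:
        if not replicas:
            raise ValueError("at least one replica is required")
        self._cycle: Iterator[str] = itertools.cycle(replicas)

    def route(self, request_id: int) -> str:
        # The request id is unused here; smarter policies could hash it
        # or take current replica load into account.
        return next(self._cycle)
```

Round-robin assumes every replica is equally capable and every request costs about the same, which is why production systems often combine it with health checks or load-aware routing.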

Optimizing Inference Latency

Inference latency refers to the time it takes for your machine learning model to make a prediction after receiving input data. Optimizing inference latency is critical for ensuring that your model can handle high volumes of traffic and provide accurate predictions in real-time.

To optimize inference latency, you can use model compression techniques such as quantization and pruning. These techniques reduce the size and complexity of your model, making it faster and more efficient to run.
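To give a feel for what quantization does, the sketch below maps floating-point weights to 8-bit integers with a single shared scale factor (symmetric quantization). Real frameworks offer far more sophisticated schemes; the function names here are illustrative assumptions.

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: Sequence[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]
```

Each restored weight differs from the original by at most about half the scale factor, which is the accuracy you trade for a smaller, faster model.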

Caching Strategies for Improved Performance

Caching is an effective way to improve the performance of your machine learning models by reducing the amount of time it takes to load and process data. By caching frequently accessed data, you can reduce the amount of time it takes for your model to make a prediction.

To implement caching, you can use tools such as Redis or Memcached. These tools allow you to store frequently accessed data in memory, making it faster and more efficient to access.
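Redis and Memcached are external services, so as a self-contained illustration the sketch below uses Python’s built-in `functools.lru_cache` as an in-process stand-in. The `expensive_model` function and the call counter are hypothetical and exist only to show that a repeated input skips the model.

```python
from functools import lru_cache
from typing import Dict, Tuple

CALLS: Dict[str, int] = {"model": 0}  # counts real model invocations


def expensive_model(features: Tuple[float, ...]) -> float:
    """Hypothetical slow model; here it just averages its inputs."""
    CALLS["model"] += 1
    return sum(features) / len(features)


@lru_cache(maxsize=1024)
def cached_predict(features: Tuple[float, ...]) -> float:
    """Return a stored prediction when the same features were seen before."""
    return expensive_model(features)
```

Features are passed as a tuple because cache keys must be hashable. Caching predictions only makes sense when identical inputs recur and the model’s answer for them does not need to change between deployments.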

Monitoring and Logging

When you deploy your machine learning model, it is essential to monitor and log its performance to ensure that it is functioning as expected. This will help you detect any issues early and take corrective action to prevent any adverse impact on the end-users. In this section, we will discuss some best practices for monitoring and logging your machine learning model.

Real-Time Monitoring Techniques

Real-time monitoring is a crucial aspect of machine learning model deployment. It involves continuously monitoring the model’s input data, output predictions, and performance metrics to identify any anomalies or issues. You can use various techniques to monitor your model in real-time, such as:

- Dashboard Monitoring: A dashboard is an excellent way to monitor the performance of your machine learning model in real-time. You can create a dashboard with various metrics, such as accuracy, precision, recall, and F1 score, to track the model’s performance over time.

- API Monitoring: You can monitor your machine learning model’s API to track the number of requests, response times, and error rates. This can help you identify any issues with the API and take corrective action promptly.
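The dashboard metrics mentioned above can be computed from logged predictions once the true outcomes are known. Here is a minimal sketch for a binary classifier; the function name and the convention of returning 0.0 when a ratio is undefined are assumptions for this example.

```python
from typing import Dict, Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 for labels encoded as 0 and 1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

Computing these over a rolling window of recent predictions, rather than over all history, makes a sudden drop much easier to spot on a dashboard.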

Logging Best Practices

Logging is the process of recording the events and activities of your machine learning model. It involves capturing the input data, output predictions, and performance metrics to track the model’s performance over time. Here are some best practices for logging your machine learning model:

- Log Everything: It is essential to log everything, including the input data, output predictions, and performance metrics, to track the model’s performance over time.

- Use Structured Logging: Structured logging is a technique that involves logging data in a structured format, such as JSON or CSV. This can help you analyze the logs more easily and identify any issues quickly.

- Use a Logging Framework: You can use a logging framework, such as Log4j or Python’s logging module, to log your machine learning model’s events and activities. This can help you manage your logs more efficiently and make it easier to analyze them.
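Structured logging with Python’s logging module might look like the sketch below. The `JsonFormatter` class, the logger name `model_serving`, and the convention of passing extra fields under a `fields` key are choices made for this example rather than anything the logging module requires.

```python
import json
import logging
from typing import IO


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {"level": record.levelname, "message": record.getMessage()}
        # Merge any structured fields supplied through `extra={"fields": {...}}`.
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload, sort_keys=True)


def build_logger(stream: IO[str]) -> logging.Logger:
    """Create a logger that writes JSON lines to the given stream."""
    logger = logging.getLogger("model_serving")
    logger.handlers.clear()  # avoid duplicate handlers on repeated calls
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger
```

A prediction could then be logged with `logger.info("prediction", extra={"fields": {"model_version": "1.0.0", "latency_ms": 12.5}})`, producing one JSON line that downstream tools can parse without regular expressions.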

Anomaly Detection and Alerting Systems

Anomaly detection and alerting systems are critical components of machine learning model monitoring. They involve detecting any anomalies or issues with the model’s input data, output predictions, or performance metrics and alerting the relevant stakeholders. Here are some best practices for anomaly detection and alerting:

- Define Thresholds: It is essential to define thresholds for the model’s input data, output predictions, and performance metrics. This can help you identify any anomalies or issues and take corrective action promptly.

- Use Automated Alerting Systems: You can use automated alerting systems, such as email notifications or Slack messages, to alert the relevant stakeholders when an anomaly or issue is detected. This can help you respond to issues quickly and prevent any adverse impact on the end-users.
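Threshold checks and alerting can be combined in a few lines. In this sketch the `alert` argument is any callable that delivers a message; in practice it might post to email or Slack, but here it is left abstract, and the metric names and bounds are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Thresholds = Dict[str, Tuple[float, float]]  # metric name -> (minimum, maximum)


def check_metrics(metrics: Dict[str, float], thresholds: Thresholds,
                  alert: Callable[[str], None]) -> List[str]:
    """Send an alert for every metric that is missing or out of bounds."""
    breaches: List[str] = []
    for name, (low, high) in thresholds.items():
        value = metrics.get(name)
        if value is None:
            message = f"{name}: metric missing"
        elif not low <= value <= high:
            message = f"{name}={value} outside [{low}, {high}]"
        else:
            continue  # within bounds, nothing to report
        breaches.append(message)
        alert(message)
    return breaches
```

Treating a missing metric as a breach is intentional: a monitoring pipeline that silently stops reporting is itself an anomaly worth alerting on.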

Security and Compliance

When deploying and monitoring machine learning models, it is crucial to consider security and compliance. Failure to do so can result in data breaches and regulatory violations, leading to legal and financial consequences. In this section, we will discuss some best practices for ensuring security and compliance.

Model Security Considerations

Machine learning models are vulnerable to attacks such as adversarial attacks, model poisoning, and data poisoning. It is essential to consider these threats and implement appropriate security measures to protect your models. Here are some best practices for model security:

- Use secure machine learning frameworks and libraries that have been audited for security vulnerabilities.

- Implement access control mechanisms to restrict model access to authorized personnel only.

- Monitor model behavior for anomalies and suspicious activities.

- Use encryption to protect sensitive data used in the model.

Data Privacy and Protection

Data privacy and protection are critical when deploying machine learning models. You must ensure that sensitive data is protected from unauthorized access and that data privacy regulations are adhered to. Here are some best practices for data privacy and protection:

- Use anonymization techniques to protect sensitive data.

- Implement access control measures to restrict access to sensitive data.

- Use encryption to protect data in transit and at rest.

- Regularly audit data access logs to detect and prevent unauthorized access.
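As one example of protecting sensitive fields, the sketch below replaces them with keyed hashes using Python’s `hmac` and `hashlib` modules. Strictly speaking this is pseudonymization rather than full anonymization, since anyone holding the key can recompute the mapping; the function names and record layout are assumptions for illustration.

```python
import hashlib
import hmac
from typing import Any, Dict, Set


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace a sensitive value with a keyed SHA-256 digest."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record: Dict[str, Any], sensitive_fields: Set[str],
                 secret_key: bytes) -> Dict[str, Any]:
    """Return a copy of the record with sensitive fields pseudonymized."""
    return {
        key: pseudonymize(str(value), secret_key) if key in sensitive_fields else value
        for key, value in record.items()
    }
```

Because the same input always yields the same digest, records can still be joined on the pseudonymized field. The secret key should be stored separately from the data it protects.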

Adhering to Regulatory Standards

Regulatory compliance is essential when deploying machine learning models. You must ensure that your models adhere to applicable regulations such as GDPR, HIPAA, and CCPA. Here are some best practices for adhering to regulatory standards:

- Understand the regulatory requirements that apply to your models.

- Implement measures to ensure compliance with these regulations.

- Document your compliance efforts and maintain records of compliance.

- Regularly review and update your compliance measures to ensure they remain effective.

By implementing these best practices, you can ensure that your machine learning models are secure and compliant, reducing the risk of data breaches and regulatory violations.

Maintenance and Updates

Maintaining and updating machine learning models is crucial for ensuring their accuracy and reliability over time. Here are some best practices to follow for model maintenance and updates.

Model Retraining and Updating

Machine learning models need to be retrained periodically to account for changes in the data they are trained on. This is especially important if the input data is subject to drift or if the model is used in a dynamic environment.

To keep your models up to date, you should establish a regular schedule for retraining and updating them. This schedule should take into account the rate of data drift, as well as any changes in the business or regulatory environment that may affect the model’s performance.
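A retraining policy that combines a fixed schedule with a drift trigger can be expressed very simply. The default values below (30 days, a drift score of 0.25) are placeholders chosen for this example, not recommendations; the right numbers depend on how quickly your data changes.

```python
from datetime import date, timedelta


def should_retrain(last_trained: date, today: date, drift_score: float,
                   max_age_days: int = 30, drift_threshold: float = 0.25) -> bool:
    """Retrain when the model is too old or the measured drift is too high."""
    too_old = (today - last_trained) >= timedelta(days=max_age_days)
    drifted = drift_score >= drift_threshold
    return too_old or drifted
```

Running a check like this in a scheduled job keeps retraining regular while still allowing an early retrain when drift spikes between scheduled runs.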

Deprecation Strategies

As machine learning models become outdated or are no longer needed, it’s important to have a strategy in place for their deprecation. This may involve archiving the model, retiring it completely, or replacing it with a newer model.

To ensure a smooth transition, you should plan for model deprecation well in advance. This may involve communicating with stakeholders, updating documentation, and providing training on the new model.

Documentation and Knowledge Sharing

Maintaining accurate and up-to-date documentation is essential for ensuring that your machine learning models can be easily maintained and updated. This documentation should include information on the model’s purpose, inputs, outputs, and performance metrics, as well as any relevant code or data.

You should also encourage knowledge sharing among your team members to ensure that everyone is familiar with the models and their use cases. This may involve regular training sessions, code reviews, or other forms of collaboration.

By following these best practices for model maintenance and updates, you can ensure that your machine learning models remain accurate, reliable, and effective over time.

Use Cases: How Kanerika’s AI/ML Solutions Transformed the Clients’ Businesses

Fueling Business Growth with AI and ML Implementation in Healthcare

The client, a technology platform specializing in healthcare workforce optimization, encountered several challenges impeding business growth and operational efficiency. Manual SOPs caused talent shortlisting delays, while document verification errors impacted service quality. Dependence on operations jeopardized scalability amid rising healthcare workforce demands. By implementing AI applications in healthcare and ML algorithms, Kanerika improved the overall operational efficiency through accurate document verification, optimized resources, and streamlined operations.

Revolutionizing Fraud Detection in Insurance with AI/ML-Powered RPA

Our client, a prominent insurance provider that specializes in healthcare, travel, and accident coverage, wanted to move away from conventional methods requiring too much manual intervention. They wanted to automate their insurance claim process with AI/ML to spot unusual patterns that are not noticeable to the human eye. Kanerika implemented AI-powered RPA for fraud detection in the insurance claim process, reducing fraud-related financial losses. We leveraged predictive analytics, AI, NLP, and image recognition to monitor customer behavior, enhancing customer satisfaction, and delivered AI/ML-driven RPA solutions for fraud assessment and operational excellence, resulting in cost savings.

Optimizing Costs with AI in Shipping

One of our clients, a global leader in spend management, leverages industry-leading cloud-based applications and deep domain expertise to transform the logistics freight and parcel audits business. They needed help identifying logistics and shipment costs across various regions, seasonal variations, and transportation modes. The existing approach, which relied heavily on experience-based decisions or a preferred vendor approach, proved inefficient. Kanerika developed an AI/ML-powered pricing discovery engine using 23+ parameters and ASNs for shipment pricing prediction, which provided real-time cost visibility at the time of shipment booking and enabled proactive cost management. The price prediction AI reduced delays in closing accounting periods, avoided price discrepancies, and increased cost savings.

Facilitating AI in Finance Modelling and Forecasting

The client, a mid-sized insurance company, faced challenges in financial modeling and forecasting. They wanted a solution to optimize their financial model and introduce AI in insurance to unveil trends and patterns and to detect fraud using machine learning. By leveraging AI in decision-making for in-depth financial analysis, Kanerika enabled informed decision-making for growth. We implemented ML algorithms to detect fraudulent activities, promptly minimizing losses, and utilized advanced financial risk assessment models to identify potential risk factors, ensuring financial stability.

Choose Kanerika for State-of-the-art Machine Learning Model Management Solutions

When it comes to deploying and monitoring machine learning models, ensure that you have the best tools and solutions at your disposal. That’s where Kanerika comes in. Kanerika offers state-of-the-art machine learning model management solutions that can help streamline your model deployment process so that your models are performing optimally.

Our team of experts has years of experience working with machine learning models and can provide valuable insights and guidance throughout the deployment and monitoring process.

As an ISO-certified IT consulting firm, Kanerika is committed to delivering technical excellence without compromising on security. Beyond AI/ML, we extend our services to data analytics and integration, cloud services, RPA, and quality engineering.